Lately I have been working with the Kinect One sensor, which comes with two sensors: an RGB camera with a resolution of 1920x1080 and an IR/depth sensor with a resolution of 512x424. So far I have managed to acquire the views from the Kinect sensors using the Kinect SDK v2.0 with OpenCV. However, I would now like to combine the RGB view with the depth view in order to create an RGB-D image. Since I had no prior experience with anything like this, I did some research and found out that I first need to calibrate my two sensors and then map the two views onto each other. According to what I found, the best approach is to:

- calibrate the two cameras individually and extract the intrinsics (`cameraMatrix`) and distortion coefficients (`distCoeffs`) by using the `calibrate()` function for each view,
- then pass the above information to the `stereoCalibrate()` function in order to extract the rotation (`R`) and translation (`T`) matrices, which are needed for the remapping,
- apply the remapping (I am not sure yet how to do that; I am still searching).
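For the third step, one common way to do the mapping (not necessarily what the Kinect SDK does internally) is to back-project each depth pixel to 3D with the IR intrinsics, move the point into the RGB camera frame with `R` and `T`, and project it with the RGB intrinsics. The sketch below shows this for a single pixel in plain Python. It assumes that the depth view was passed to `stereoCalibrate()` as the first camera (OpenCV's `R` and `T` then map points from the first camera's frame into the second's), that lens distortion is ignored, and that the depth value and `T` use the same length unit. The function name `map_depth_to_color` and all numeric values are hypothetical placeholders.

```python
from typing import Optional, Sequence, Tuple

# Pinhole intrinsics as (fx, fy, cx, cy); lens distortion is ignored here.
Intrinsics = Tuple[float, float, float, float]


def map_depth_to_color(
    ir: Intrinsics,
    rgb: Intrinsics,
    R: Sequence[Sequence[float]],
    T: Sequence[float],
    u: float,
    v: float,
    z: float,
) -> Optional[Tuple[float, float]]:
    """Map depth pixel (u, v) with depth z to RGB pixel coordinates.

    Assumes R, T map depth-camera points into the RGB-camera frame
    (q = R * p + T) and that z and T share the same length unit.
    Returns None if the point ends up behind the RGB camera.
    """
    fx_d, fy_d, cx_d, cy_d = ir
    fx_c, fy_c, cx_c, cy_c = rgb

    # 1. Back-project the depth pixel to a 3D point in the depth-camera frame.
    p = ((u - cx_d) * z / fx_d, (v - cy_d) * z / fy_d, z)

    # 2. Rigid transform into the RGB-camera frame: q = R * p + T.
    q = [sum(R[i][j] * p[j] for j in range(3)) + T[i] for i in range(3)]
    if q[2] <= 0:
        return None

    # 3. Perspective projection with the RGB intrinsics.
    return (fx_c * q[0] / q[2] + cx_c, fy_c * q[1] / q[2] + cy_c)


if __name__ == "__main__":
    # Hypothetical placeholder values; substitute your own calibration output.
    ir = (365.0, 365.0, 256.0, 212.0)
    rgb = (1060.0, 1060.0, 960.0, 540.0)
    R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    T = [-52.0, 0.0, 0.0]  # hypothetical 52 mm baseline, depth also in mm
    print(map_depth_to_color(ir, rgb, R, T, 256.0, 212.0, 1000.0))
```

Looping this over all 512x424 depth pixels and sampling the RGB image at the returned coordinates would give one RGB value per depth pixel; a complete version would also have to account for the `distCoeffs` of both cameras.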

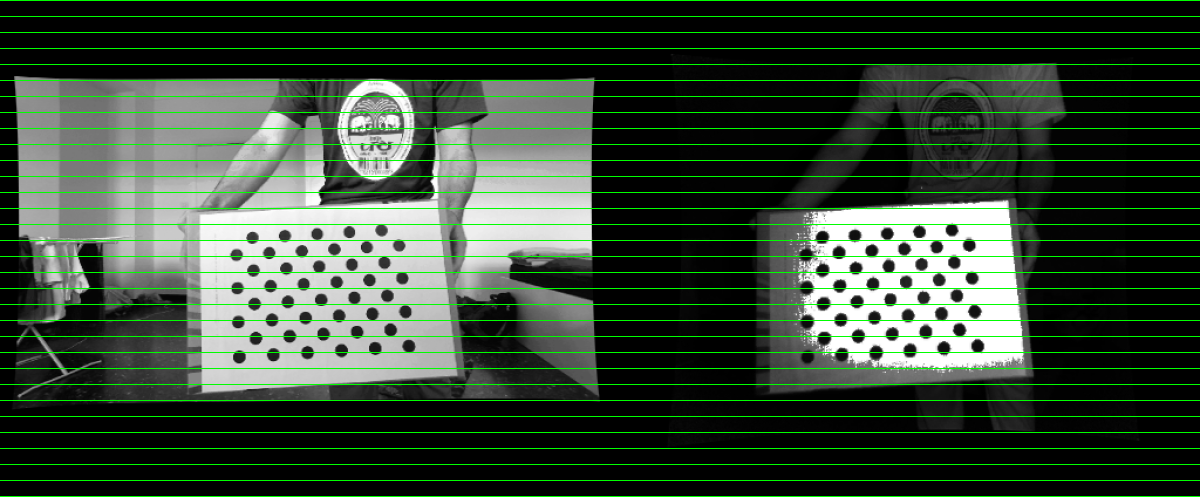

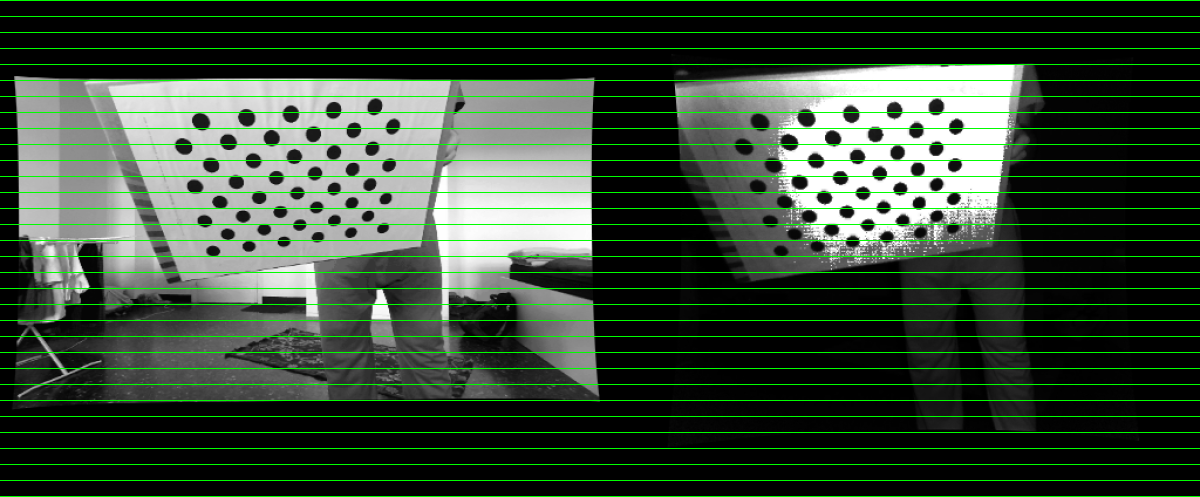

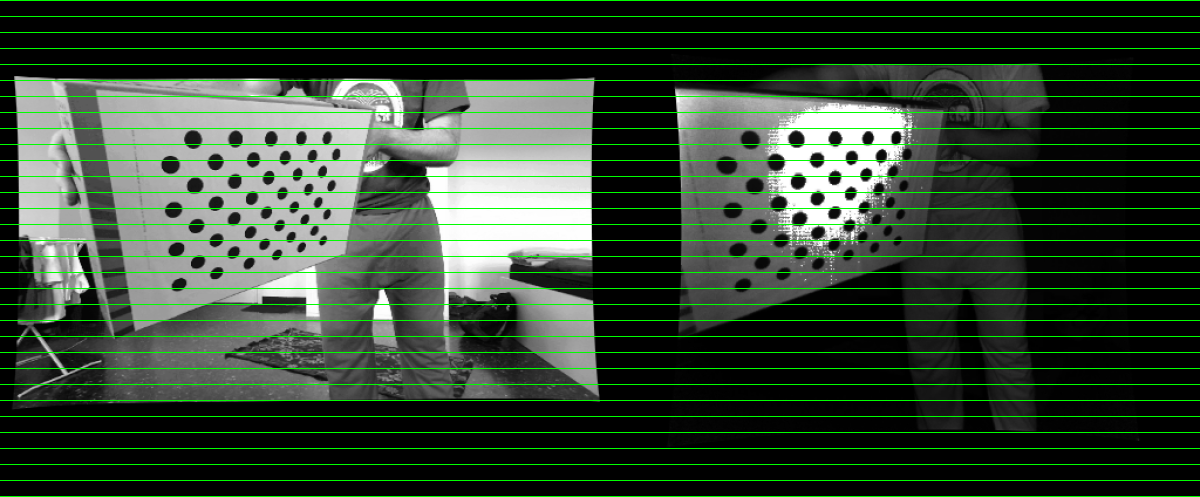

My progress so far is the following: I have implemented the first bullet without problems, using an asymmetric circles grid and the `calibrate()` function, with quite good results. The RMS reprojection error is ~0.3 for the RGB sensor and ~0.1 for the IR sensor (from what I read, it should be between 0.1 and 1, ideally below 0.5). However, if I pass the extracted intrinsics and `distCoeffs` of the two sensors to the `stereoCalibrate()` function, the reprojection error is never below 1. The best I managed was ~1.2 (I guess it should also be below 1, ideally below 0.5), with an epipolar error of ~6.0, no matter which flags I used. When the intrinsics and `distCoeffs` are already known, the suggestion is to enable `CV_CALIB_USE_INTRINSIC_GUESS` and `CV_CALIB_FIX_INTRINSIC`, but that did not help. So I am trying to figure out what is wrong.

Another question I have about `stereoCalibrate()`: one of its parameters is the image size, but since I have two image sizes (1920x1080 and 512x424), which one should I use? Right now I am using 512x424, since that is the size onto which I want to map the RGB pixel values, but I am not sure that is correct.
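For reference, the epipolar error mentioned above can be computed directly from the stereo result. With `K1`, `K2` as the two camera matrices and `R`, `T` from `stereoCalibrate()`, the fundamental matrix is F = K2^-T [T]x R K1^-1, and for each matched pattern point one measures the distance of each point to the epipolar line induced by its partner in the other image. The minimal pure-Python sketch below sums both distances per point and averages over points, which, as far as I know, is the same measure OpenCV's `stereo_calib.cpp` sample prints as its average epipolar error. It assumes the inputs are already undistorted pixel coordinates; all function names are hypothetical helpers, not OpenCV API.

```python
import math
from typing import List, Sequence, Tuple

Mat3 = List[List[float]]
Point = Tuple[float, float]


def matmul(A: Sequence[Sequence[float]], B: Sequence[Sequence[float]]) -> Mat3:
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(A: Sequence[Sequence[float]]) -> Mat3:
    return [[A[j][i] for j in range(3)] for i in range(3)]


def inv3(A: Sequence[Sequence[float]]) -> Mat3:
    """Inverse of a 3x3 matrix via the adjugate (cofactor) formula."""
    (a, b, c), (d, e, f), (g, h, i) = A
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[adj[r][s] / det for s in range(3)] for r in range(3)]


def skew(t: Sequence[float]) -> Mat3:
    """Cross-product matrix [t]x, so that [t]x * v == t x v."""
    return [[0.0, -t[2], t[1]], [t[2], 0.0, -t[0]], [-t[1], t[0], 0.0]]


def fundamental(K1, K2, R, T) -> Mat3:
    """F = K2^-T [T]x R K1^-1, so that x2^T F x1 = 0 when P2 = R * P1 + T."""
    E = matmul(skew(T), R)  # essential matrix
    return matmul(matmul(transpose(inv3(K2)), E), inv3(K1))


def line_point_distance(line: Sequence[float], pt: Point) -> float:
    a, b, c = line
    return abs(a * pt[0] + b * pt[1] + c) / math.hypot(a, b)


def mean_epipolar_error(F: Mat3, pts1: Sequence[Point], pts2: Sequence[Point]) -> float:
    """Average of d(x2, F x1) + d(x1, F^T x2) over matched undistorted pixels."""
    if not pts1 or len(pts1) != len(pts2):
        raise ValueError("need the same non-zero number of points in both views")
    Ft = transpose(F)
    total = 0.0
    for p1, p2 in zip(pts1, pts2):
        x1 = (p1[0], p1[1], 1.0)
        x2 = (p2[0], p2[1], 1.0)
        l2 = [sum(F[r][k] * x1[k] for k in range(3)) for r in range(3)]   # epiline in image 2
        l1 = [sum(Ft[r][k] * x2[k] for k in range(3)) for r in range(3)]  # epiline in image 1
        total += line_point_distance(l2, p2) + line_point_distance(l1, p1)
    return total / len(pts1)
```

Feeding in synthetic points that were projected exactly through `K1`, `K2`, `R`, `T` should give an error close to zero, which is a quick way to sanity-check the helpers before using them on real detections.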

If I nevertheless continue with the values extracted above, despite the poor RMS error, the rectification is not very good. Therefore, I would appreciate any feedback from someone with experience in this. Thanks.