I am working on registering an image to a point cloud of a scene. I have an image from a camera, a 3D point cloud of the scene, and camera calibration data (i.e. the focal length). The image and the point cloud of the scene share the same space.

From my previous question here I have learnt that the SolvePnP method from OpenCV would work in this case, but there is no obvious way to find robust correspondences between the query image and the scene point cloud.

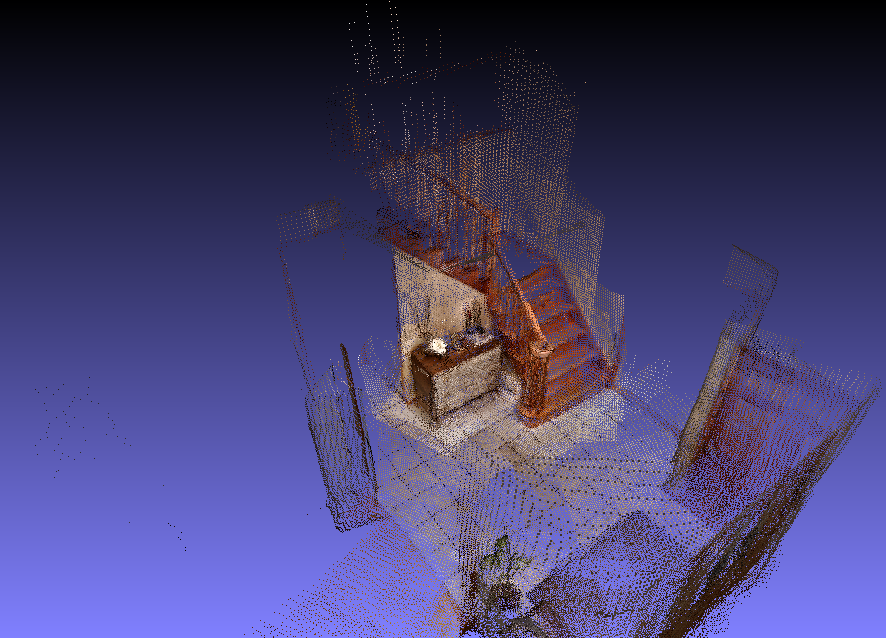

My intuition now is to project the point cloud onto an image plane, match keypoints computed on that projection against those computed on the query image, and pass the robust point correspondences to the SolvePnP method to get the camera pose. I have found here a way to project a point cloud onto an image plane.

My question is: could this work, and is it possible to preserve the transformation between the resulting image of the scene and the original point cloud?
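
To make the idea concrete, here is a minimal sketch of the projection step. It assumes a pinhole camera with intrinsics `K` and a virtual pose `R`, `t` chosen for rendering. It is not the method from the linked answer, and the names `project_with_index_map` and `lookup_3d` are hypothetical helpers. Alongside the depth image it fills an index map that records, for each pixel, which row of the point cloud landed there, so the link back to the 3D points is not lost.

```python
import numpy as np

def project_with_index_map(points, K, R, t, width, height):
    """Project Nx3 points with a pinhole camera (assumed model).

    Returns a depth image and an index map holding, per pixel, the row
    of the nearest projected 3D point (-1 where no point landed).
    """
    cam = points @ R.T + t                    # world frame -> camera frame
    idx = np.flatnonzero(cam[:, 2] > 0)       # keep points in front of the camera
    cam = cam[idx]
    uvw = cam @ K.T                           # apply the intrinsics
    u = np.round(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.round(uvw[:, 1] / uvw[:, 2]).astype(int)
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z, idx = u[inside], v[inside], cam[inside, 2], idx[inside]

    # Simple z-buffer: write far points first so nearer ones overwrite them.
    order = np.argsort(-z)
    depth = np.full((height, width), np.inf)
    index_map = np.full((height, width), -1, dtype=int)
    depth[v[order], u[order]] = z[order]
    index_map[v[order], u[order]] = idx[order]
    return depth, index_map

def lookup_3d(keypoints_uv, index_map, points):
    """Map 2D keypoints (u, v) in the rendered image back to 3D points."""
    uv = np.round(np.asarray(keypoints_uv)).astype(int)
    ids = index_map[uv[:, 1], uv[:, 0]]
    valid = ids >= 0                          # keypoints that hit a projected point
    return points[ids[valid]], valid
```

With such a map, keypoints matched between the rendered image and the query image could be converted into 2D-3D pairs and passed to `cv2.solvePnPRansac` together with the camera matrix. Whether keypoints detected on a sparse rendering match those of a real photo well enough is exactly the part I am unsure about.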