Hi everybody, I am opening a new question so that I can add images and bring together several related questions:

- https://answers.opencv.org/question/227094/cvprojectpoints-giving-bad-results-when-undistorted-2d-projections-are-far/

- https://answers.opencv.org/question/197614/are-lens-distortion-coefficients-inverted-for-projectpoints/

To recap, I'm trying to project 3D points onto an undistorted image, in particular onto the images from the KITTI raw dataset. For those who do not know it: the dataset contains both camera images and lidar 3D points, and each sequence comes with a calibration file giving the intrinsic and extrinsic parameters. A tutorial for projecting the 3D points is also provided, but everything there is done with the undistorted/rectified images that the dataset also provides. However, since the intrinsic/extrinsic matrices are provided too, in general I should be able to project the 3D points directly onto the image, without either using their rectification or undistorting the raw image (raw here means unprocessed).

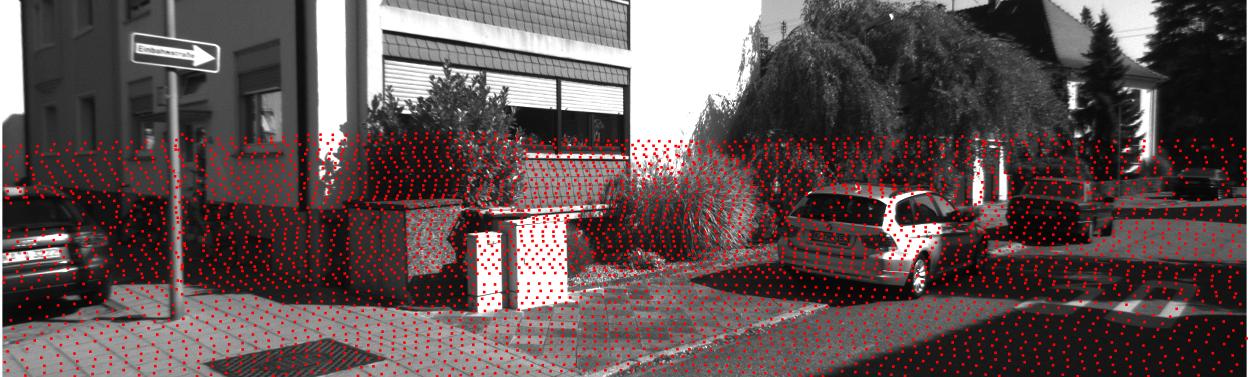

So let's start with their example, a projection of the points directly on the rectified image (please note, I'm saying rectified and not undistorted because the dataset has four cameras, so they provide the undistorted/rectified images).

As you can see, this image is pretty much undistorted and the lidar points should be correctly aligned (this uses their demo toolkit in MATLAB).

Next, using the info provided:

- S_xx: 1x2 size of image xx before rectification

- K_xx: 3x3 calibration matrix of camera xx before rectification

- D_xx: 1x5 distortion vector of camera xx before rectification

- R_xx: 3x3 rotation matrix of camera xx (extrinsic)

- T_xx: 3x1 translation vector of camera xx (extrinsic)

together with the info about where the lidar sensor is, I can easily prepare an example to:

- read the lidar data.

- rotate the points into the camera coordinate frame (this is the same as giving projectPoints this transformation through rvec/tvec, which I set to identity since the first camera is the origin of all the coordinate systems, but this is a detail)

- project the points using projectPoints
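The steps listed above can be sketched without OpenCV, to make explicit what the projection step computes. This is a plain NumPy re-implementation of the pinhole model with the five-coefficient distortion (k1, k2, p1, p2, k3) as described in the OpenCV documentation; it is not `cv2.projectPoints` itself. The function name `project_points`, the intrinsics in `K` and the sample points are placeholders made up for illustration (not KITTI values), and the points are assumed to be already expressed in the camera frame.

```python
import numpy as np

def project_points(pts_cam, K, dist):
    """Project Nx3 camera-frame points to pixels (hypothetical helper).

    dist holds (k1, k2, p1, p2[, k3]); missing trailing terms are treated as 0.
    """
    d = np.zeros(5)
    d[:len(dist)] = dist
    k1, k2, p1, p2, k3 = d
    # Normalized image coordinates (perspective division).
    x = pts_cam[:, 0] / pts_cam[:, 2]
    y = pts_cam[:, 1] / pts_cam[:, 2]
    r2 = x * x + y * y
    # Radial and tangential distortion.
    radial = 1.0 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    xd = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    yd = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    # Apply focal lengths and principal point.
    u = K[0, 0] * xd + K[0, 2]
    v = K[1, 1] * yd + K[1, 2]
    return np.stack([u, v], axis=1)

# Placeholder intrinsics and points, NOT taken from a KITTI calibration file.
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 360.0],
              [0.0, 0.0, 1.0]])
pts = np.array([[0.0, 0.0, 10.0],
                [1.0, 0.5, 10.0],
                [2.0, 1.0, -5.0]])   # last point is behind the camera
in_front = pts[pts[:, 2] > 0]        # keep only points with positive depth
uv = project_points(in_front, K, np.zeros(5))
print(uv)
```

The depth filter is there because the perspective division happily produces pixel coordinates for points behind the camera, which a 360-degree lidar scan always contains.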

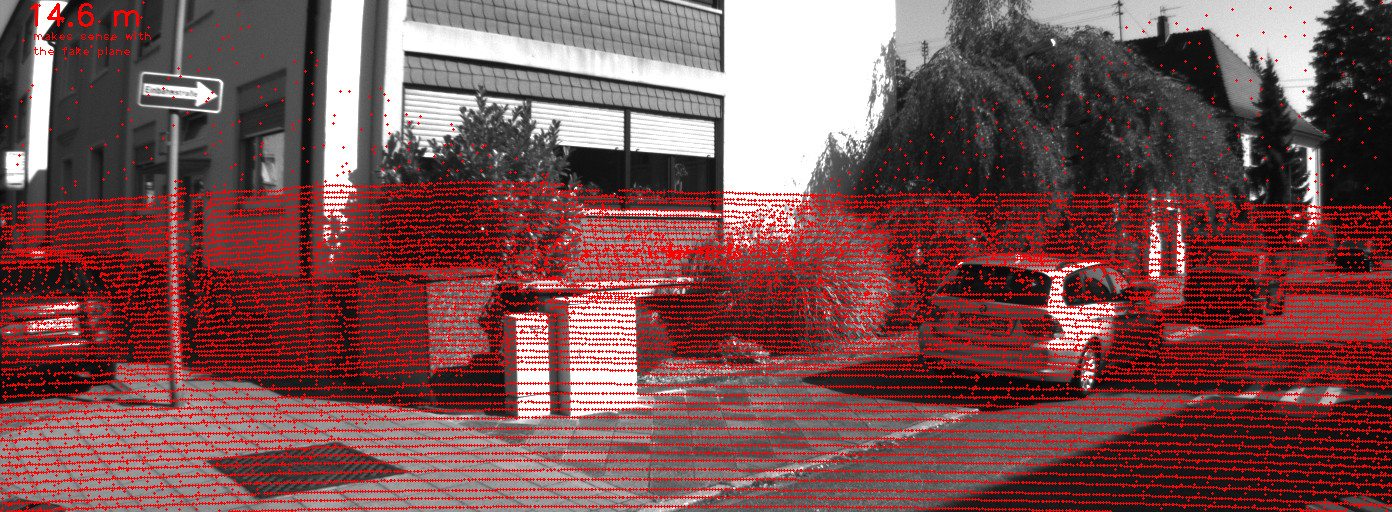

OK, let's try to do this, using

D_00 = np.array([ -3.745594e-01, 2.049385e-01, 1.110145e-03, 1.379375e-03, -7.084798e-02], dtype=np.float64)

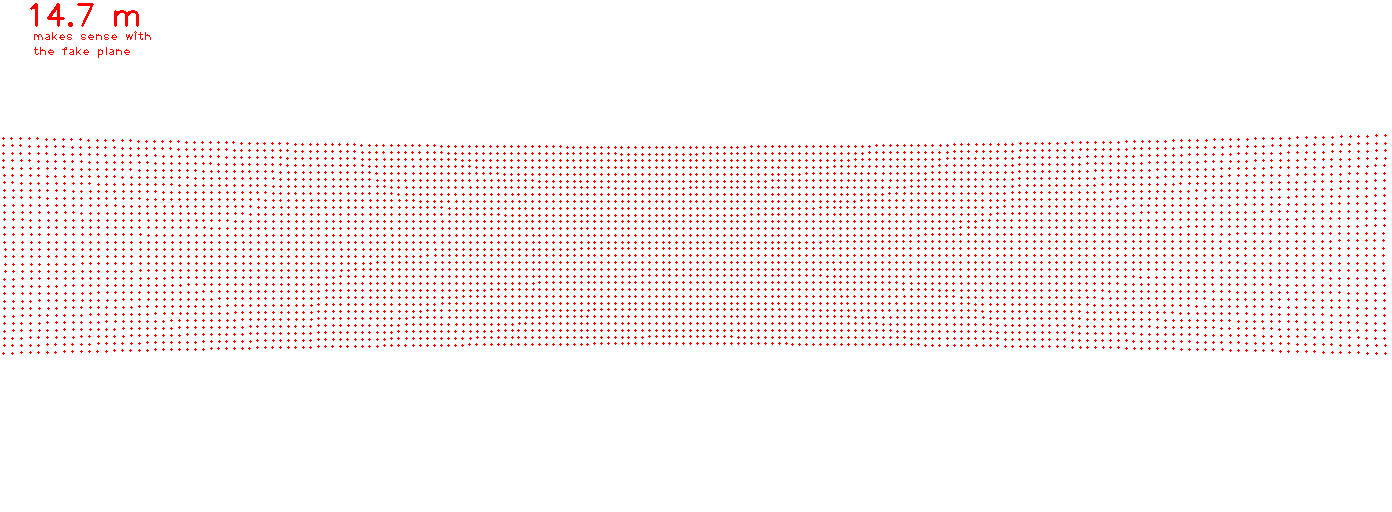

I hope you can see the image! There are points above the actual limit of the lidar points, as well as some "noise" in between the other points (OK, to be fair, in the first image I didn't draw all the points, but you should see a different "noise pattern" in this second image).

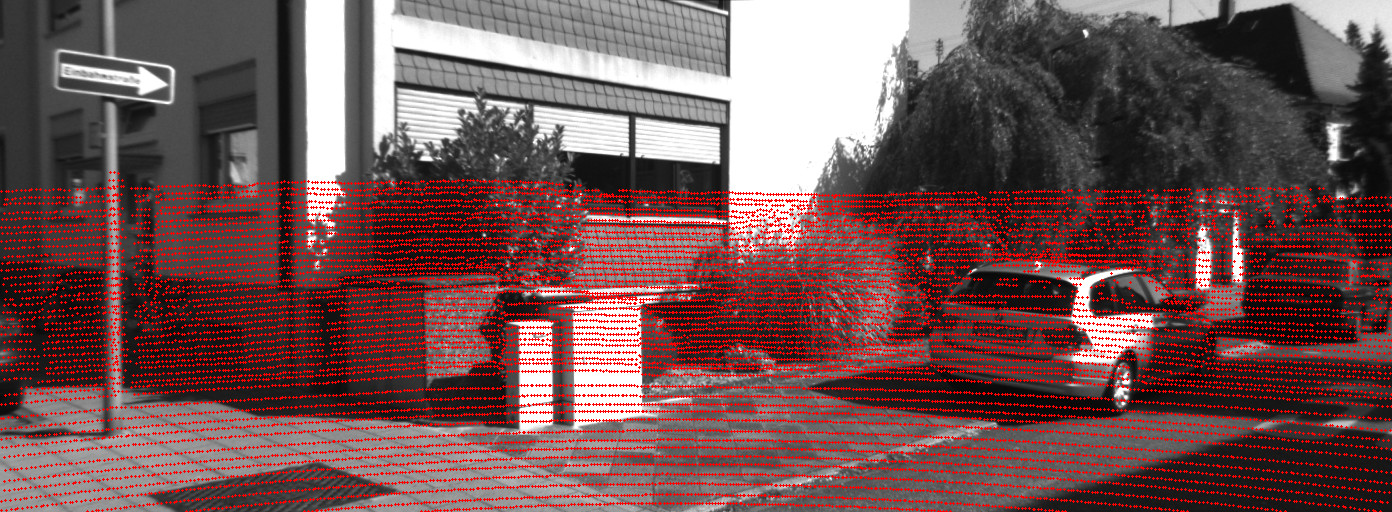

At the beginning I thought it was due to a stupid error in my code, in the parsing or whatever... but then I tried to do the same using the undistorted image, and thus setting the distortion coefficients to zero, and... magic

D_00_zeros = np.array([ 0.0, 0.0, 0.0, 0.0, 0.0], dtype=np.float64)

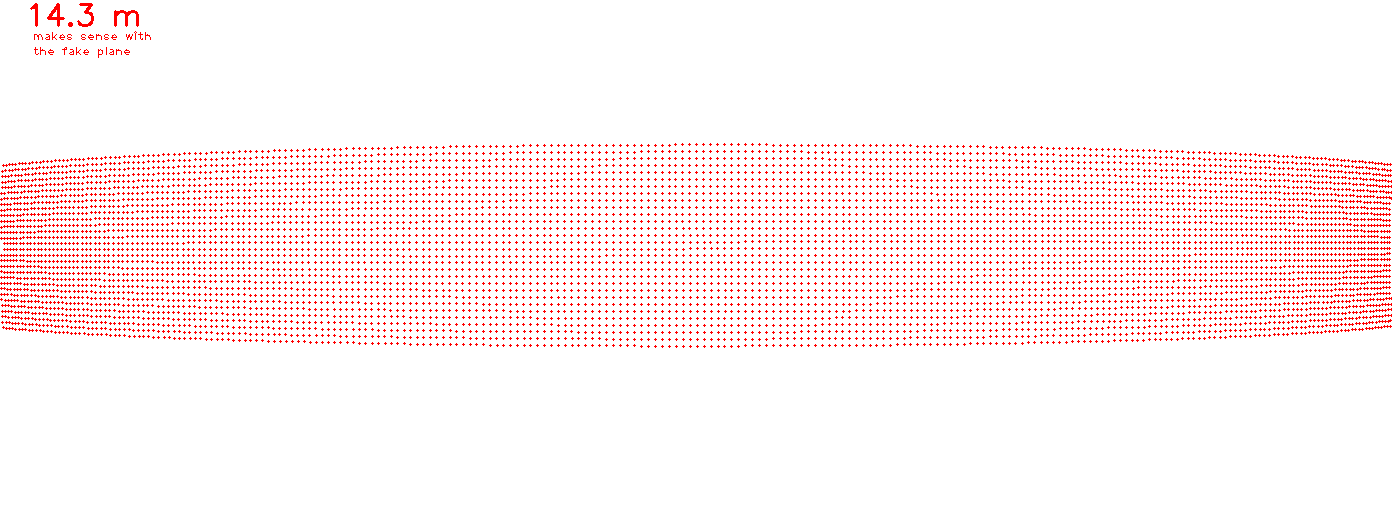

Could this still be my fault in parsing the data? Hmm... So I decided to create a "virtual plane" and move it through 3D space, bringing it closer to the camera.

And... whaaat?! What's going on here!? Then I started to look around the internet and experiment with solutions. One of the most "inspiring" was the one I linked before (the first link), where @HYPEREGO said "I read somewhere that using more than the usual 4/5 distortion coefficients in this function sometimes leads to bad results". So, just for the sake of argument, I tried to use

D_00 = np.array([ -3.745594e-01, 2.049385e-01, 1.110145e-03, 1.379375e-03], dtype=np.float64)

instead of

D_00 = np.array([ -3.745594e-01, 2.049385e-01, 1.110145e-03, 1.379375e-03, -7.084798e-02], dtype=np.float64)

and again... magic. Here is the plane at the same distance as before (9.1 m)

and even close to the camera

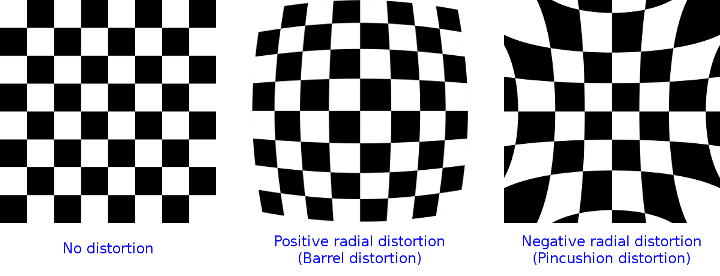
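One way to probe the difference between the four- and five-coefficient vectors is to look only at the radial part of the distortion model, r_d = r (1 + k1 r^2 + k2 r^4 + k3 r^6), where r is the distance from the optical axis in normalized coordinates. If the slope of r_d with respect to r becomes negative, points further from the axis are mapped back towards the image center, which would look like stray points inside the image. The sketch below is only a diagnostic built on that documented polynomial; the helper name `first_fold_radius` and the sampling range are my own assumptions, and it is not a claim about what `projectPoints` does internally.

```python
import numpy as np

def first_fold_radius(dist, r_max=3.0, n=3001):
    """Smallest sampled r where d(r_d)/dr <= 0, or None if there is none.

    dist holds (k1, k2, p1, p2[, k3]); only the radial terms are used.
    """
    d = np.zeros(5)
    d[:len(dist)] = dist
    k1, k2, _, _, k3 = d
    r = np.linspace(0.0, r_max, n)
    u = r * r
    # Derivative of r * (1 + k1 r^2 + k2 r^4 + k3 r^6) with respect to r.
    slope = 1.0 + 3 * k1 * u + 5 * k2 * u**2 + 7 * k3 * u**3
    bad = np.nonzero(slope <= 0.0)[0]
    return float(r[bad[0]]) if bad.size else None

# The two vectors quoted in the post.
D5 = np.array([-3.745594e-01, 2.049385e-01, 1.110145e-03, 1.379375e-03, -7.084798e-02])
D4 = D5[:4]

print("5 coefficients:", first_fold_radius(D5))
print("4 coefficients:", first_fold_radius(D4))
```

By this check the five-coefficient vector stops being monotonic at a normalized radius of roughly 1.2 (rays about 50 degrees off the optical axis), while the four-coefficient vector stays monotonic over the sampled range. That is consistent with the cleaner result after dropping the last coefficient, although it does not by itself prove that this is the cause.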

So what's going on here? Moreover, with respect to the second link, the k1 value is negative and I'm getting a barrel distortion instead of the pincushion the wiki suggests,

in particular

with k1 > 0

with k1<0

According to the wiki the effect should be the opposite, as @Stringweasel pointed out.

The vectors I used are, respectively,

D_00 = np.array([ 0.25, 0.0, 0.0, 0.0, 0.0], dtype=np.float64)

D_00 = np.array([ -0.25, 0.0, 0.0, 0.0, 0.0], dtype=np.float64)
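For these two test vectors the arithmetic is simple enough to check by hand. Assuming the forward model documented for OpenCV, where ideal normalized coordinates are multiplied by (1 + k1 r^2) to obtain the distorted ones, the sketch below prints the radial scale at a few radii. The helper name `radial_scale` and the sample radii are just for illustration.

```python
import numpy as np

def radial_scale(r, k1):
    """Scale applied to an ideal point at normalized radius r (k1 term only)."""
    return 1.0 + k1 * r**2

r = np.array([0.0, 0.5, 1.0])
print("k1 = +0.25:", radial_scale(r, 0.25))   # 1.0, 1.0625, 1.25
print("k1 = -0.25:", radial_scale(r, -0.25))  # 1.0, 0.9375, 0.75
```

With k1 > 0 the projected points are pushed outward more and more with radius, and with k1 < 0 they are pulled inward, which is what produces a barrel-shaped grid in the projected image. Whether that agrees with a given reference depends on which direction that reference's model maps (ideal to distorted, or distorted to ideal).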

So to conclude: I think I'm completely missing something here, OR there is a bug from the Middle Ages of OpenCV... but that would be weird.

Hope that some OpenCV guru can help me!

Bests, Augusto