I am simply trying to remove the perspective from an image. In other words, I want a bird's-eye view of a 2D image so that the distance between two points in the image is easy to calculate. The problem is that `cv2.warpPerspective` takes the size of the output image as a parameter, and that size is unknown in advance. If I pass a fixed size, the warped image is cropped.

So I transform the corner points of the source image with `cv2.perspectiveTransform` and compute a new homography from the transformed points. Warping with this new homography and the now-known output size gives the full image without any cropping. This works well in most of my experiments, but fails in a strange way on some samples.
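For context: `cv2.warpPerspective` only fills output pixels in `[0, width) × [0, height)`, so anything the homography sends to negative coordinates is cut off. A minimal NumPy sketch of an alternative to estimating a second homography from rounded corner coordinates is to prepend a pure translation to `H`. It assumes `H` is a 3×3 homography such as the one `cv2.getPerspectiveTransform` returns; `project` and `shift_to_origin` are hypothetical helper names.

```python
import numpy as np


def project(H, pts):
    """Apply a 3x3 homography to an (N, 2) array of points."""
    pts = np.asarray(pts, dtype=np.float64)
    homog = np.hstack([pts, np.ones((len(pts), 1))])  # (N, 3) homogeneous points
    mapped = homog @ H.T                                # row-wise H @ p
    return mapped[:, :2] / mapped[:, 2:3]               # perspective divide by w


def shift_to_origin(H, width, height):
    """Return (T @ H, (out_w, out_h)) so that the bounding box of the four
    warped image corners starts at (0, 0)."""
    corners = np.array([[0, 0], [width - 1, 0],
                        [width - 1, height - 1], [0, height - 1]], dtype=np.float64)
    warped = project(H, corners)
    min_xy = warped.min(axis=0)
    max_xy = warped.max(axis=0)
    # Translation by -min_xy, applied after H.
    T = np.array([[1.0, 0.0, -min_xy[0]],
                  [0.0, 1.0, -min_xy[1]],
                  [0.0, 0.0, 1.0]])
    # Integer output size that still contains the pixel at max_xy.
    out_size = tuple(int(np.ceil(v)) + 1 for v in (max_xy - min_xy))
    return T @ H, out_size
```

With OpenCV this would be used as `H2, size = shift_to_origin(M, width, height)` followed by `cv2.warpPerspective(image, H2, size)`. Because `T` leaves the last row of `H` unchanged, `T @ H` should match the second homography computed from the shifted corners, apart from the `int()` rounding of those corners.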

Here is what I am doing:

- Get the 4 image points of an object that is known to be a rectangle in the real world, from a camera image (the points are ordered TL (top left) -> TR (top right) -> BR (bottom right) -> BL (bottom left)).

- Calculate the bounding box of these points and use it as the destination for the source points from the step above.

- Calculate the homography matrix from these point pairs.

- Use this matrix to transform the corners of the source image, which tells me the size of the result (warped) image.

- Since I now know where the corners of the source image end up, I calculate a new homography matrix from them and produce the result image with a known size.
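Steps 2 and 3 can be reproduced without OpenCV, which makes it easy to check the matrix by hand. This is a minimal sketch, assuming the points are given in TL, TR, BR, BL order and using the min/max of the coordinates as the bounding box; `bounding_box_dst` and `homography_from_4pts` are hypothetical names. The solver sets up the standard 8×8 linear system with `H[2, 2]` fixed to 1, which is the quantity `cv2.getPerspectiveTransform` computes for four correspondences (up to floating-point error).

```python
import numpy as np


def bounding_box_dst(src_pts):
    """Destination rectangle (TL, TR, BR, BL) from the min/max of the source points."""
    pts = np.asarray(src_pts, dtype=np.float64)
    x0, y0 = pts.min(axis=0)
    x1, y1 = pts.max(axis=0)
    return np.array([[x0, y0], [x1, y0], [x1, y1], [x0, y1]])


def homography_from_4pts(src, dst):
    """Solve for H (with H[2, 2] = 1) such that dst ~ H @ src for four point pairs."""
    A, b = [], []
    for (x, y), (u, v) in zip(np.asarray(src, dtype=float), np.asarray(dst, dtype=float)):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), rearranged to be linear in h
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        # v = (h3*x + h4*y + h5) / (h6*x + h7*y + 1)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.extend([u, v])
    h = np.linalg.solve(np.array(A), np.array(b))
    return np.append(h, 1.0).reshape(3, 3)
```

Projecting the four image corners through the returned `H` then gives step 4. If OpenCV is available, comparing the result with `cv2.getPerspectiveTransform(np.float32(src), np.float32(dst))` is a quick sanity check.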

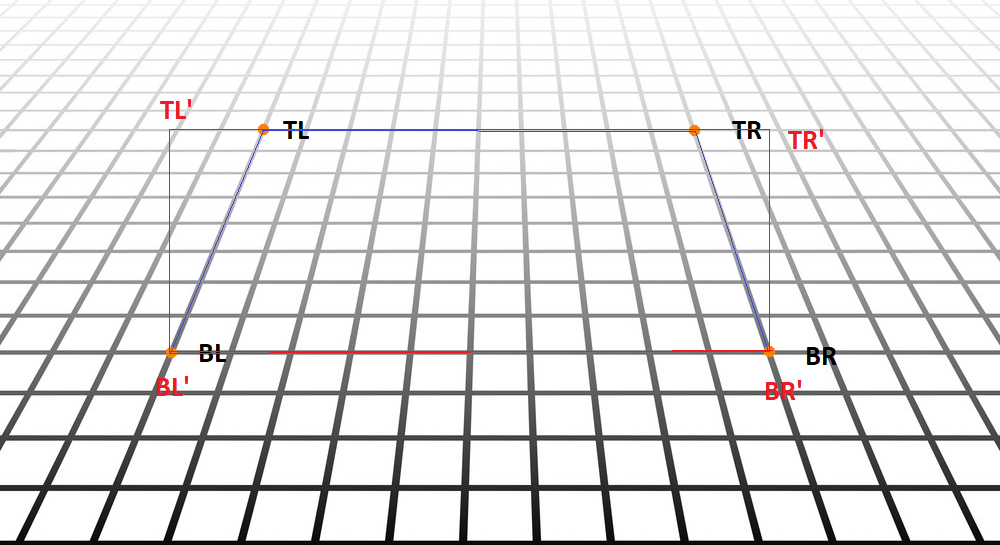

A successful attempt is shown below. The orange points are the corners of the real-world rectangle, and the red rectangle marks the destination points.

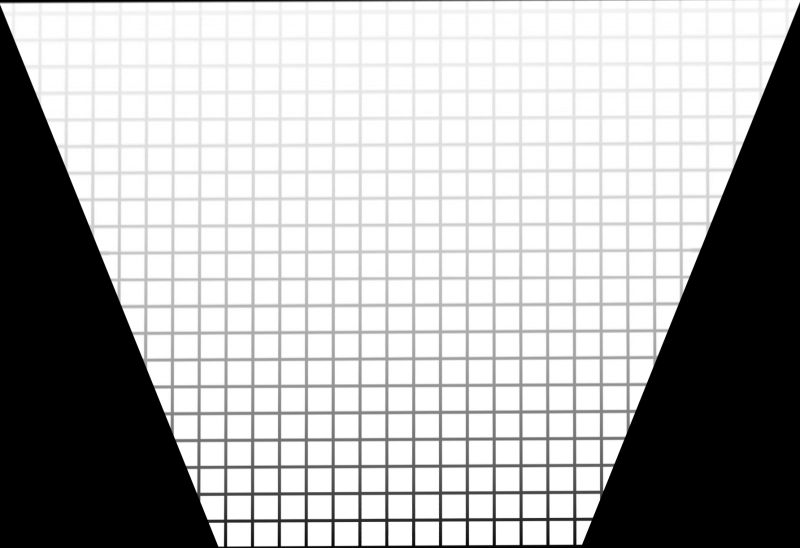

The following image is the result of the steps above, and it is quite good (it has been resized because it is a large image).

The resulting new corner positions, which look consistent:

TL:(-385, -308), TR:(1397, -326), BR:(906, 778), BL:(97, 776)

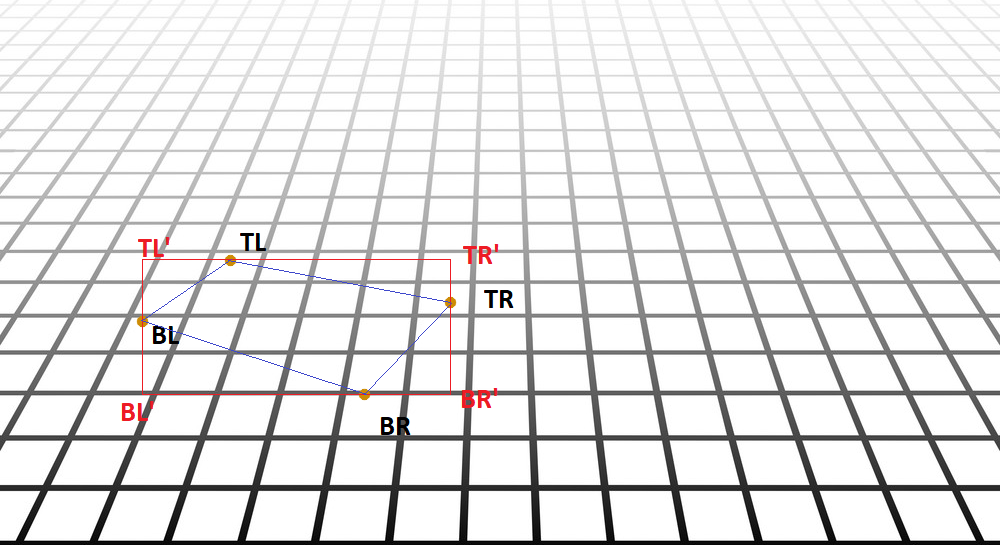

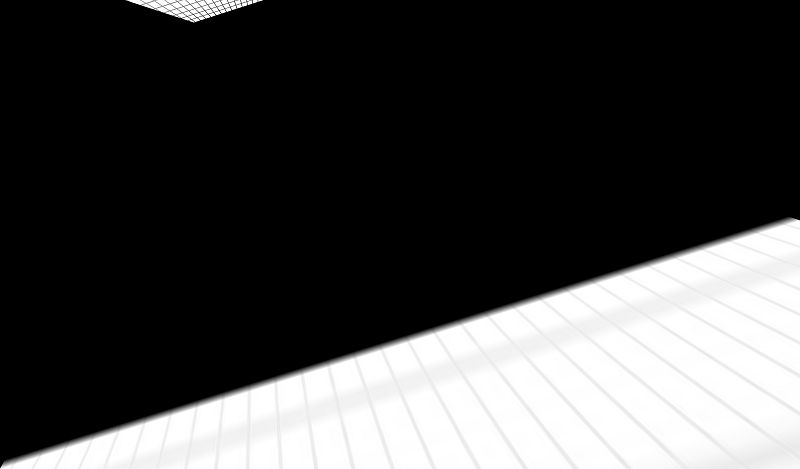

But when I try other examples, some of them fail, like the one below:

The resulting new corner points, which do not seem right, are: TL: (5415, 2218), TR: (-1314, 4309), BR: (909, 360), BL: (318, 551)

By the way, the homography matrix itself seems correct (I tested it); the problem is with the resulting image. Also, the lines through these points do not intersect inside the image (there is no vanishing point in the image), which I thought might otherwise cause this kind of result.

Below are the source image and a code snippet that reproduces the result. I must be overlooking something small, but I have not been able to find it for two days. Thanks in advance.
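One way to test the vanishing-point assumption numerically is to look at the homogeneous coordinate `w = h31*x + h32*y + h33` of each image corner before the perspective divide. Points with `w = 0` are sent to infinity, and they form a line (the vanishing line of the ground plane), not a single point. Because `w` is affine in `(x, y)`, that line crosses the rectangular image exactly when the four corner values do not all share one sign; in that case the divided corner coordinates no longer bound the warped region. A minimal sketch, assuming `H` is the 3×3 matrix from `cv2.getPerspectiveTransform`; `corner_w` and `horizon_crosses_image` are hypothetical names.

```python
import numpy as np


def corner_w(H, width, height):
    """Homogeneous w of each image corner (TL, TR, BR, BL) under homography H."""
    corners = np.array([[0, 0, 1], [width - 1, 0, 1],
                        [width - 1, height - 1, 1], [0, height - 1, 1]],
                       dtype=np.float64)
    return (corners @ np.asarray(H, dtype=np.float64).T)[:, 2]


def horizon_crosses_image(H, width, height):
    """True if the line w = 0 (points mapped to infinity) touches the image.

    w is affine in (x, y), so on a rectangle it keeps one strict sign
    everywhere if and only if it has that sign at all four corners.
    """
    w = corner_w(H, width, height)
    return bool(not (np.all(w > 0) or np.all(w < 0)))
```

Calling `horizon_crosses_image(M, width, height)` with the `M` computed inside `warpImage` for both samples would show whether the failing case differs from the working one in this respect.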

The source image: grid

The code:

```python
import numpy as np
import cv2
import imutils


def warpImage(image, src_pts):
    height, width = image.shape[:2]

    # Bounding box of the source points, used as the destination rectangle
    # (same TL -> TR -> BR -> BL order as src_pts).
    min_rect = cv2.boundingRect(np.array(src_pts))
    dst = [[min_rect[0], min_rect[1]],
           [min_rect[0] + min_rect[2], min_rect[1]],
           [min_rect[0] + min_rect[2], min_rect[1] + min_rect[3]],
           [min_rect[0], min_rect[1] + min_rect[3]]]

    # Corners of the whole source image.
    corners = [[0, 0], [width - 1, 0], [width - 1, height - 1], [0, height - 1]]

    src_np = np.float32(src_pts)
    dst_np = np.float32(dst)
    M = cv2.getPerspectiveTransform(src_np, dst_np)

    # Where the source image corners land under M.
    ptsss = np.float32([corners]).reshape(-1, 1, 2)
    scene_corners = cv2.perspectiveTransform(ptsss, M)

    # Bounding box of the warped corners.
    min_col, min_row = scene_corners.reshape(-1, 2).min(axis=0)
    max_col, max_row = scene_corners.reshape(-1, 2).max(axis=0)

    # Shift the warped corners so that the bounding box starts at (0, 0).
    new_dst = [[int(c[0][0] - min_col), int(c[0][1] - min_row)] for c in scene_corners]

    src2_np = np.float32(corners)
    dst2_np = np.float32(new_dst)
    M2 = cv2.getPerspectiveTransform(src2_np, dst2_np)

    # dsize is an integer cv::Size, so cast the float extents to int.
    out_size = (int(max_col - min_col), int(max_row - min_row))
    warped_image = cv2.warpPerspective(image, M2, out_size)
    return warped_image


img = cv2.imread("grid.png", 1)
if img is None:
    raise FileNotFoundError("grid.png could not be read")
img2 = img.copy()

# First sample: works well
src1 = [[262, 129], [695, 130], [770, 350], [171, 352]]
for x, y in src1:
    cv2.circle(img, (x, y), 3, (5, 140, 205), 3, cv2.LINE_AA)
cv2.imshow("img", img)

warped_img_1 = warpImage(img, src1)
warped_img_1_height, warped_img_1_width = warped_img_1.shape[:2]
# Downscale for display so that the longer side is 800 px.
if warped_img_1_width > warped_img_1_height:
    warped_img_1 = imutils.resize(warped_img_1, width=800)
else:
    warped_img_1 = imutils.resize(warped_img_1, height=800)
cv2.imshow("warped1", warped_img_1)

# Second sample: does not work
src2 = [[263, 260], [450, 302], [364, 394], [142, 321]]
for x, y in src2:
    cv2.circle(img2, (x, y), 3, (5, 140, 205), 3, cv2.LINE_AA)
cv2.imshow("img2", img2)

warped_img_2 = warpImage(img2, src2)
warped_img_2_height, warped_img_2_width = warped_img_2.shape[:2]
if warped_img_2_width > warped_img_2_height:
    warped_img_2 = imutils.resize(warped_img_2, width=800)
else:
    warped_img_2 = imutils.resize(warped_img_2, height=800)
cv2.imshow("warped2", warped_img_2)

cv2.waitKey()
```