Hello,

I am using the Bumblebee XB3 stereo camera, which has 3 lenses. I've spent about three weeks reading forums, tutorials, the Learning OpenCV book and the OpenCV documentation on the stereo calibration and stereo matching functionality. In summary, my issue is that I get a good disparity map but very poor point clouds, which seem skewed/squished and are not representative of the actual scene.

What I have done so far:

Used the OpenCV stereo_calibration and stereo_matching examples to:

1) Calibrate my stereo camera using chess board images

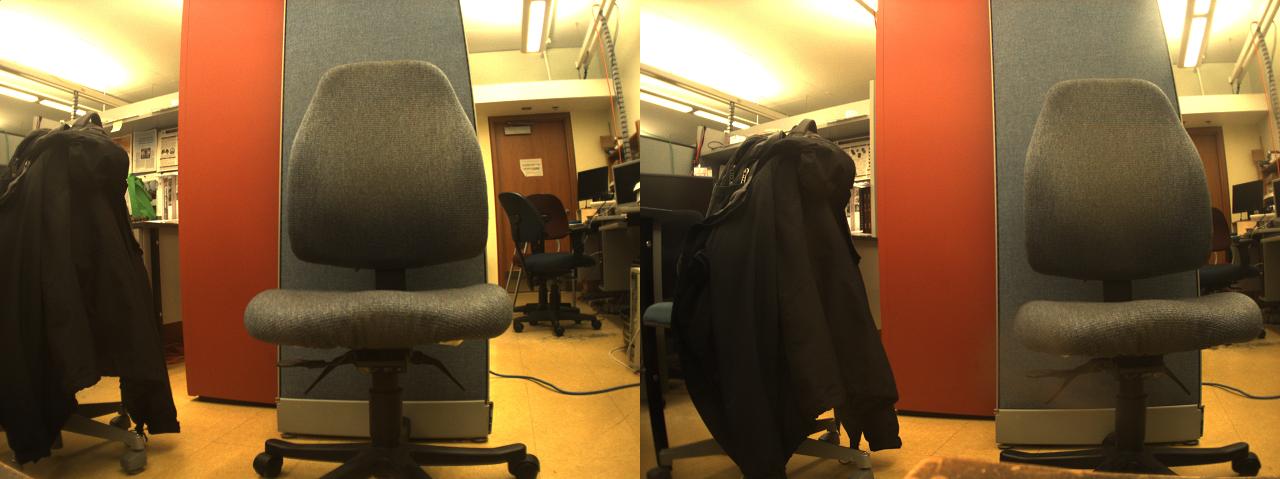

Raw Scene Images:

2) Rectified the raw images obtained from the camera

3) Generated a disparity image from the rectified images using Stereo Matching (SGBM)

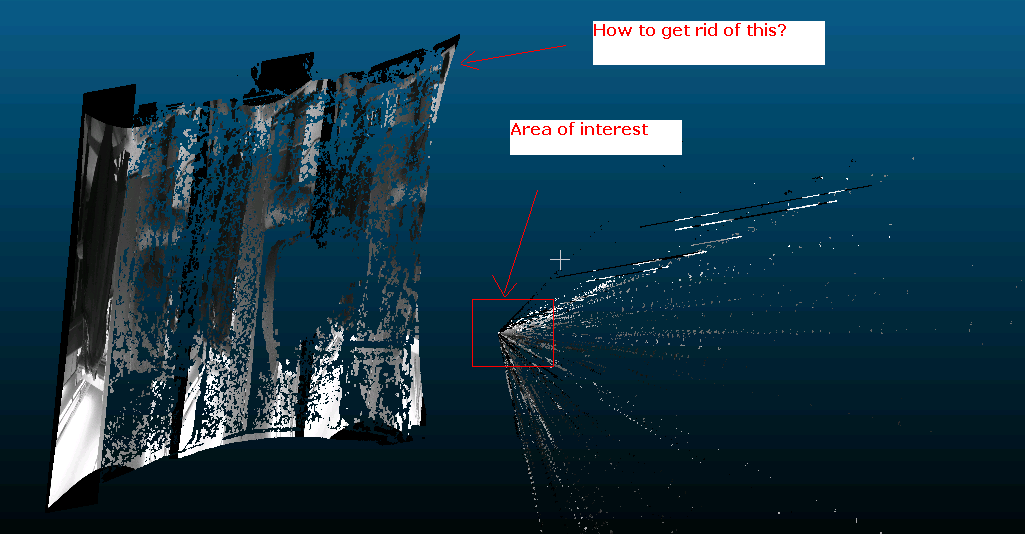

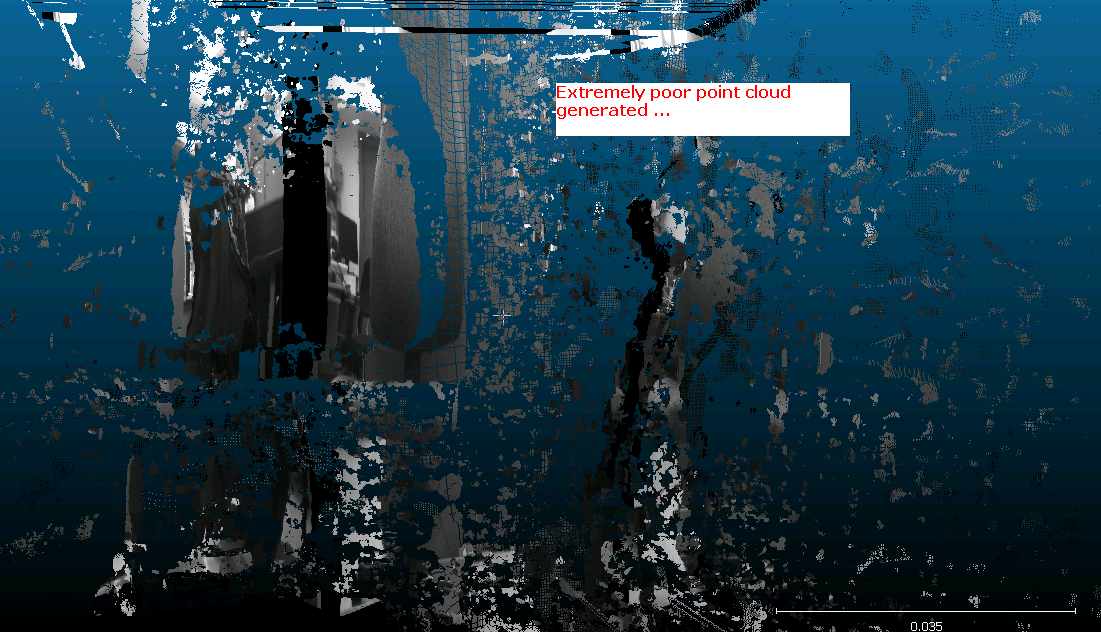

4) Projected these disparities to a 3D Point Cloud

What I have tried so far to rule out possible causes:

- I have tried different lens pairs: the 1st and 2nd lenses, then the 2nd and 3rd, and finally the 1st and 2nd again.

- I've re-run the calibration with chessboard captures taken at varying distances (closer/farther away)

- I have used over 20 stereo pairs for the calibration

- I have varied the chessboard size: I first used a 9x6 chessboard for calibration and have now switched to an 8x5 one

- I've tried using the Block Matching as well as SGBM variants and get relatively similar results. Getting better results with SGBM so far.

- I've varied the disparity ranges, changed the SAD Window size etc. with little improvement

What I suspect the problem is:

My disparity image looks relatively acceptable, but the next step is to go to a 3D point cloud using the Q matrix. I suspect I am not calibrating the cameras correctly to generate the right Q matrix. Unfortunately, I've hit a wall in terms of thinking what else I can do to get a better Q matrix. Can someone please suggest ways ahead?
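For reference, the reprojection step amounts to one matrix-vector product per pixel. The following is a minimal sketch, assuming the Q layout documented for OpenCV's `stereoRectify` and a disparity `d` expressed in pixels. The helper name `reproject_pixel` and all numbers in it are hypothetical and are not taken from the calibration above.

```python
def reproject_pixel(Q, x, y, d):
    """Map pixel (x, y) with disparity d (in pixels) to 3D.

    Computes [X, Y, Z, W]^T = Q * [x, y, d, 1]^T and divides by W.
    Returns None when W is zero (point at infinity).
    """
    v = (x, y, d, 1.0)
    X, Y, Z, W = (sum(Q[r][c] * v[c] for c in range(4)) for r in range(4))
    if W == 0:
        return None
    return (X / W, Y / W, Z / W)


# Hypothetical rectified-pair parameters (assumptions for illustration only).
f, cx, cy, Tx = 700.0, 320.0, 240.0, -0.12
Q = [
    [1.0, 0.0, 0.0, -cx],
    [0.0, 1.0, 0.0, -cy],
    [0.0, 0.0, 0.0, f],
    [0.0, 0.0, -1.0 / Tx, 0.0],
]

# Disparity given in pixels.
p = reproject_pixel(Q, 420.0, 240.0, 35.0)

# Same pixel, but with the disparity left in 16x fixed-point form.
p16 = reproject_pixel(Q, 420.0, 240.0, 35.0 * 16)
```

With this layout and `Q[3][3] == 0`, the depth reduces to `f * |Tx| / d`, so any scale factor left on the disparity scales the whole cloud. The raw output of OpenCV's `StereoBM` and `StereoSGBM` is fixed-point with 4 fractional bits, so it is worth checking that it is divided by 16 before reprojection.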

PS: The reason I chose to upload these images is that the scene has some texture, as I was anticipating replies saying the scene is too homogeneous. The cover on the partition and the chair are both quite rich in texture.

A few questions:

Can you help me remove the image/disparity plane that seems to be part of the point cloud? Why is this happening?

Is there something obvious I am doing incorrectly? I would post my code, but it is extremely similar to the OpenCV examples provided and I do not think I'm doing anything more creative. I can post it if there is a specific section of concern.

In my naive opinion, the disparity image seems OK, but the point cloud is definitely not what I would have expected from a relatively decent disparity image; it is WAY worse.
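On the first question, one possible explanation for the flat plane (not confirmed for this data) is that pixels without a valid match keep a constant placeholder disparity. OpenCV's matchers mark such pixels with a value just below the minimum disparity, and a constant disparity reprojects to a constant depth, which would look like a flat copy of the image inside the cloud. A minimal sketch of filtering those pixels out, assuming the points and disparities are available as nested lists; the helper name `valid_points` and the sample data are hypothetical:

```python
def valid_points(points, disparity, min_disparity=0):
    """Keep only the 3D points whose source pixel has disparity > min_disparity.

    points    : rows of (X, Y, Z) tuples, same layout as the disparity image
    disparity : rows of disparity values in pixels
    """
    kept = []
    for row_points, row_disp in zip(points, disparity):
        for point, d in zip(row_points, row_disp):
            if d > min_disparity:
                kept.append(point)
    return kept


# Hypothetical 2x2 example: -1 marks an unmatched pixel.
points = [
    [(0.0, 0.0, 9.9), (0.1, 0.0, 2.4)],
    [(0.0, 0.1, 4.8), (0.1, 0.1, 9.9)],
]
disparity = [
    [-1.0, 10.0],
    [5.0, 0.0],
]

cloud = valid_points(points, disparity)
```

Only the two points with a positive disparity remain in `cloud`. The same mask could be applied to the colour image so that each surviving point keeps its texture.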

If it helps, I've included the Q matrix I obtain after camera calibration below, in case something obvious jumps out. Comparing this to the Learning OpenCV book, I don't think there is anything blatantly incorrect ...

    Q:
      rows: 4
      cols: 4
      data: [ 1., 0., 0., -5.9767076110839844e+002,
              0., 1., 0., -5.0785438156127930e+002,
              0., 0., 0., 6.8683948509213735e+002,
              0., 0., -4.4965180874519222e+000, 0. ]
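As a sanity check, the physical parameters implied by this matrix can be read back out of it. This is a minimal sketch, assuming the Q layout documented for OpenCV's `stereoRectify` (principal point in the last column of rows 0 and 1, focal length at `Q[2][3]`, and `-1/Tx` at `Q[3][2]`). The variable names and the helper `depth_at` are hypothetical.

```python
# The Q matrix exactly as posted above.
Q = [
    [1.0, 0.0, 0.0, -5.9767076110839844e+002],
    [0.0, 1.0, 0.0, -5.0785438156127930e+002],
    [0.0, 0.0, 0.0, 6.8683948509213735e+002],
    [0.0, 0.0, -4.4965180874519222e+000, 0.0],
]

cx = -Q[0][3]                   # principal point x, in pixels
cy = -Q[1][3]                   # principal point y, in pixels
f = Q[2][3]                     # focal length, in pixels
baseline = 1.0 / abs(Q[3][2])   # |Tx|, in the units used for the chessboard squares


def depth_at(d):
    """Depth magnitude implied by a disparity of d pixels (valid when Q[3][3] is 0)."""
    return f * baseline / d


print(f"cx={cx:.1f} cy={cy:.1f} f={f:.1f} baseline={baseline:.4f}")
for d in (5, 20, 80):
    print(f"disparity {d:>3} px -> depth {depth_at(d):.2f}")
```

The implied baseline comes out at roughly 0.22 in calibration units, which can be compared with the known lens spacing of the camera pair actually used. The sketch takes the absolute value of `Q[3][2]`; its sign determines the sign of the reprojected Z, so a negative depth may simply reflect the order in which the two cameras were passed to calibration.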

Thanks for reading and I'll honestly appreciate any suggestions at this point ...