So I've been playing around with training of cascade classifiers, and I seem to be getting very similar results regardless of changes I make to the various inputs, and I wanted to ask for some advice before I continued trying to guess what some of the issues might be.

The object I'm trying to detect is a hand holding a cell-phone. My positive samples look like this:

and my negative samples are a wide range of images that do not include somebody holding a camera, generally of people.

I have about 500 positive and 2000 negative images.

I'm mostly following this guide which is closely based on Naotoshi Seo's notes and tools.

Using his perl script, I'm creating 5000 positive samples with the opencv_createsamples utility; my actual command looks like this.

perl bin/createsamples.pl positives.txt negatives.txt samples 5000 "opencv_createsamples \

-bgcolor 0 -bgthresh 0 -maxxangle 1.1 -maxyangle 1.1 -maxzangle 0.5 -maxidev 40 -w 20 -h 40"

my call to opencv_traincascade then looks like this:

opencv_traincascade -data classifier -vec samples.vec -bg negatives.txt -numStages 20 -minHitRate 0.999 \

-maxFalseAlarmRate 0.5 -numPos 4600 -numNeg 2212 -w 20 -h 40 -mode ALL \

-precalcValBufSize 2048 -precalcIdxBufSize 2048 -featureType LBP

I've been alternating between LBP and Haar, but have lately been preferring LBP because I've been changing inputs and iterating a lot to try and see what seems to change my results.

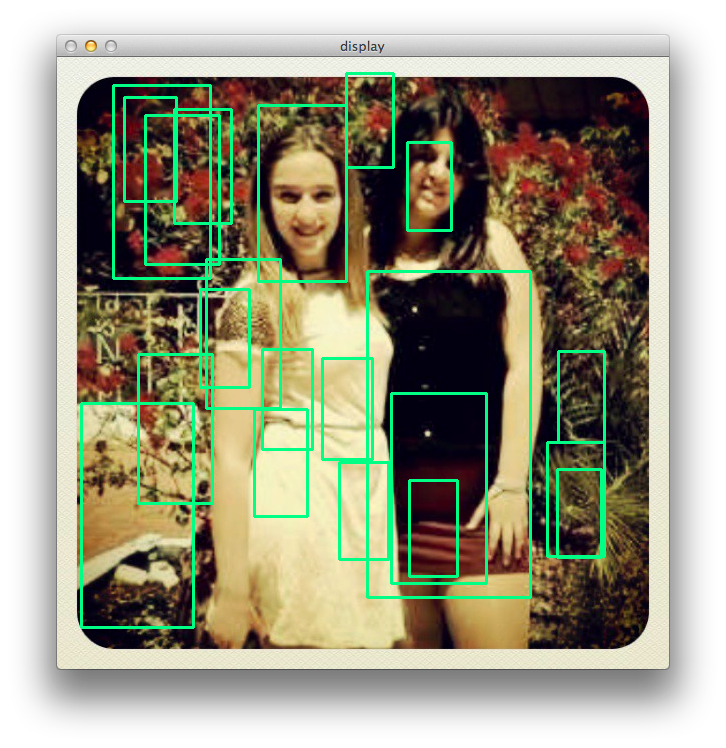

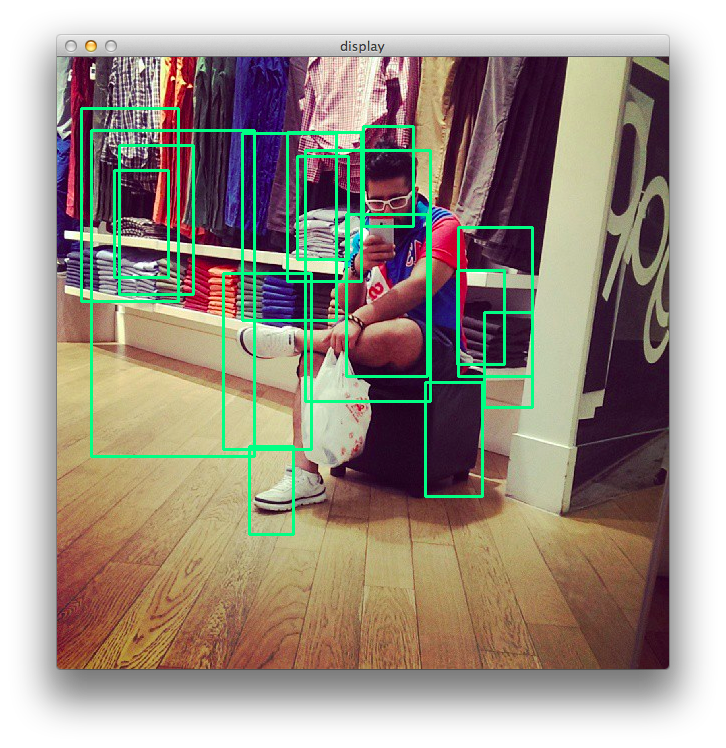

The conclusion so far being 'not much'. Here are two examples of the resulting classifier's running:

Which, well, isn't quite what I was looking for.

Any thoughts on what I should try differently? I can keep adding samples, if necessary, but that doesn't seem to have changed my results too much so far, and I'm wondering if there's something more significant that I'm doing wrong. I've tried samples of different sizes (20x40 and 40x80) I've tried increasing my number of stages, and I've tried both haar and LBP features. What should I try next?

Bonus questions:

1) How important is the subject matter of the negative images? If we assume that most of the positive matches will come from images in which we see a body from at least the waist up, should I try to really focus i selecting similar types of images for my negatives? More directly, does adding more negative images help if they aren't the type of image that a positive match would resemble (e.g. large face-only portraits, or landscapes).

2) Is it better to use the -info flag with create samples, if I have a large number of positive samples that contain the target object? I have a functionally unlimited set of positive and negative samples, so I could very conceivably have thousands of samples without needing to synthesize them from positive+negative images, but it seems like that is working pretty well for other people.