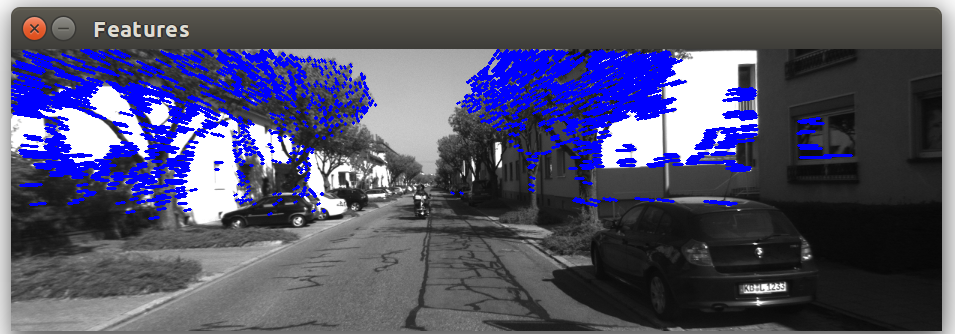

Hello I am having some trouble with triangulatePoints and the reslting 3D points are coming out incorrectly. I am using Opencv 3.4.2 on ubuntu 16.04. This is the function that I use to triangulate from two vectors of cv::Poin2f containing matches (I have verified that these matches are correct and make sense between the images).

std::vector<cv::Point3d> triangulate(const cv::Point2f &pp, double focal, const std::vector<cv::Point2f> &points0, const std::vector<cv::Point2f> &points1, const cv::Mat &P0, const cv::Mat &P1){

cv::Mat p_mat0(2, static_cast<int>(points0.size()), CV_64F);

cv::Mat p_mat1(2, static_cast<int>(points1.size()), CV_64F);

for (int i = 0; i < p_mat0.cols; i++) {

p_mat0.at<double>(0, i) = (points0[i].x - pp.x) / focal;

p_mat0.at<double>(1, i) = (points0[i].y - pp.y) / focal;

p_mat1.at<double>(0, i) = (points1[i].x - pp.x) / focal;

p_mat1.at<double>(1, i) = (points1[i].y - pp.y) / focal;

}

cv::Mat points_4d;

cv::triangulatePoints(P0, P1, p_mat0, p_mat1, points_4d);

std::vector<cv::Point3d> results;

for (int i = 0; i < points4d.cols; i++) {

results.emplace_back(cv::Point3d(points4d.at<double>(0, i) / points4d.at<double>(3, i),

points4d.at<double>(1, i) / points4d.at<double>(3, i),

points4d.at<double>(2, i) / points4d.at<double>(3, i)));

}

return results;

}

The input are the features tracked between two frames and the P0 and P1 are generated from findEssentialMatrix and recoverPose between the two sets of points. I know that points0 and points1 are reversed for the first two functions and switching them doesn't make a difference.

E = cv::findEssentialMat(points1, points0, focal_, pp_, cv::RANSAC, 0.999, 1.0, mask);

int res = recoverPose(E, points1, points0, R, t, focal_, pp_, mask);

cv::Mat P;

hconcat(R, t, P);

triangulate(pp, focal, points0, points1, cv::Mat::eye(3, 4, CV_64FC1), P);

The resultant P mat for this example is as follows:

[0.999994351093655, 0.00216084437549141, -0.002574593630994021, -0.08315501607210679;

-0.002166127328107902, 0.9999955507641086, -0.002050937440673792, 0.02514760517749096;

0.002570150419386047, 0.002056502752743189, 0.9999945825469503, 0.9962192736821971];

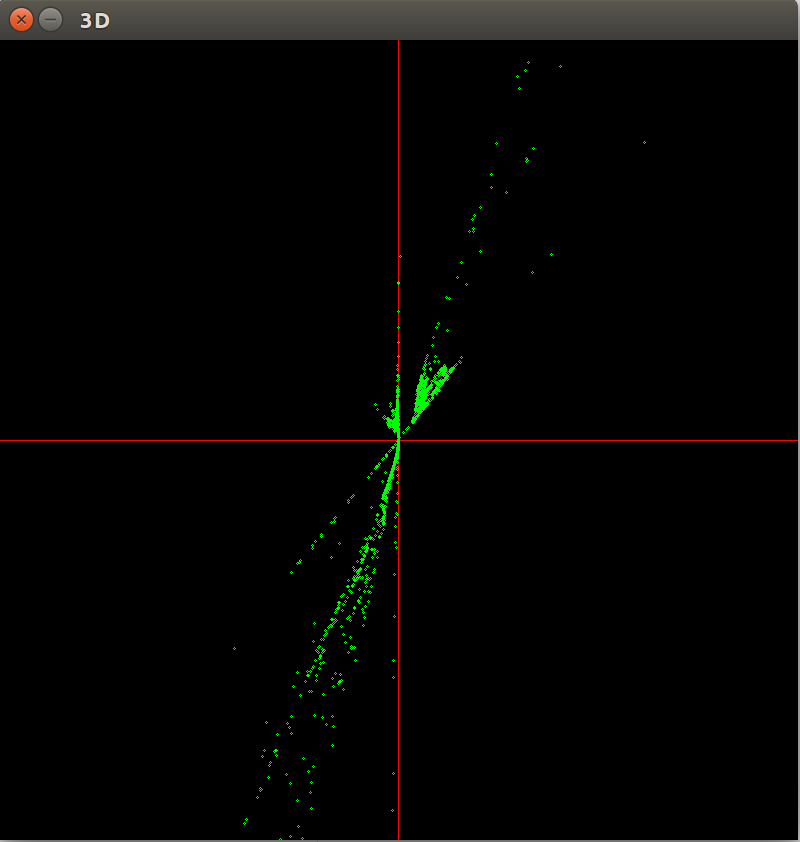

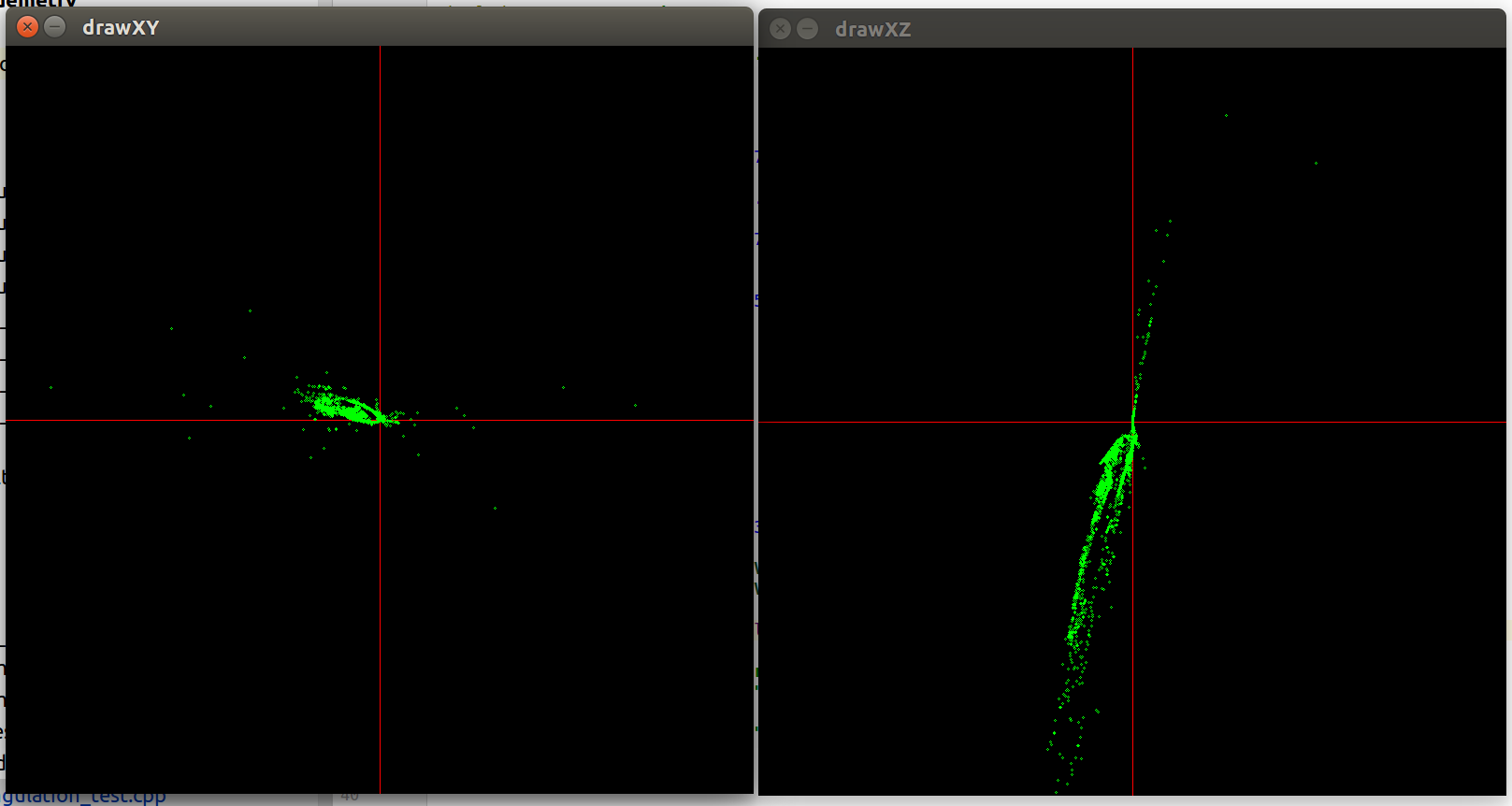

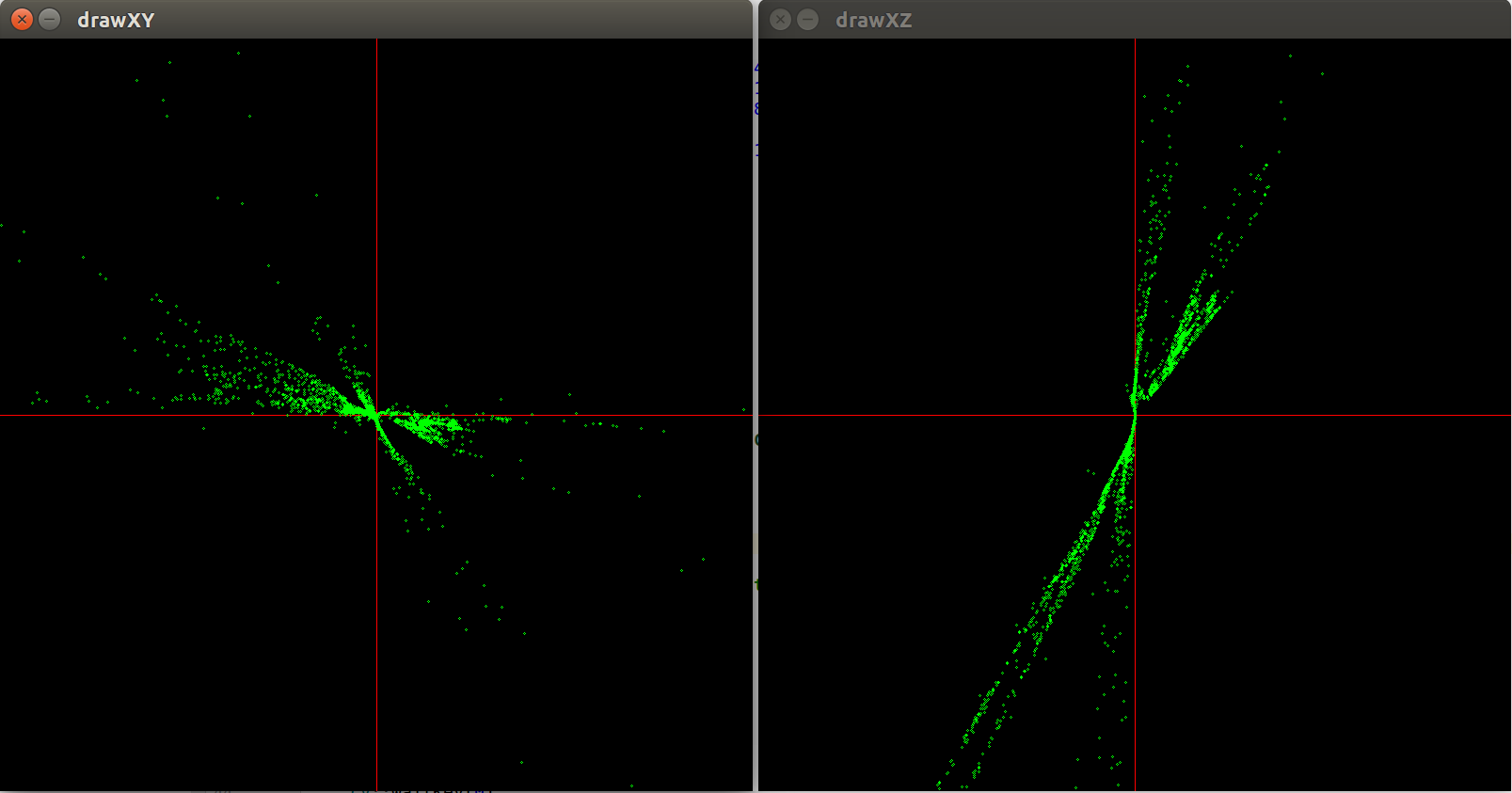

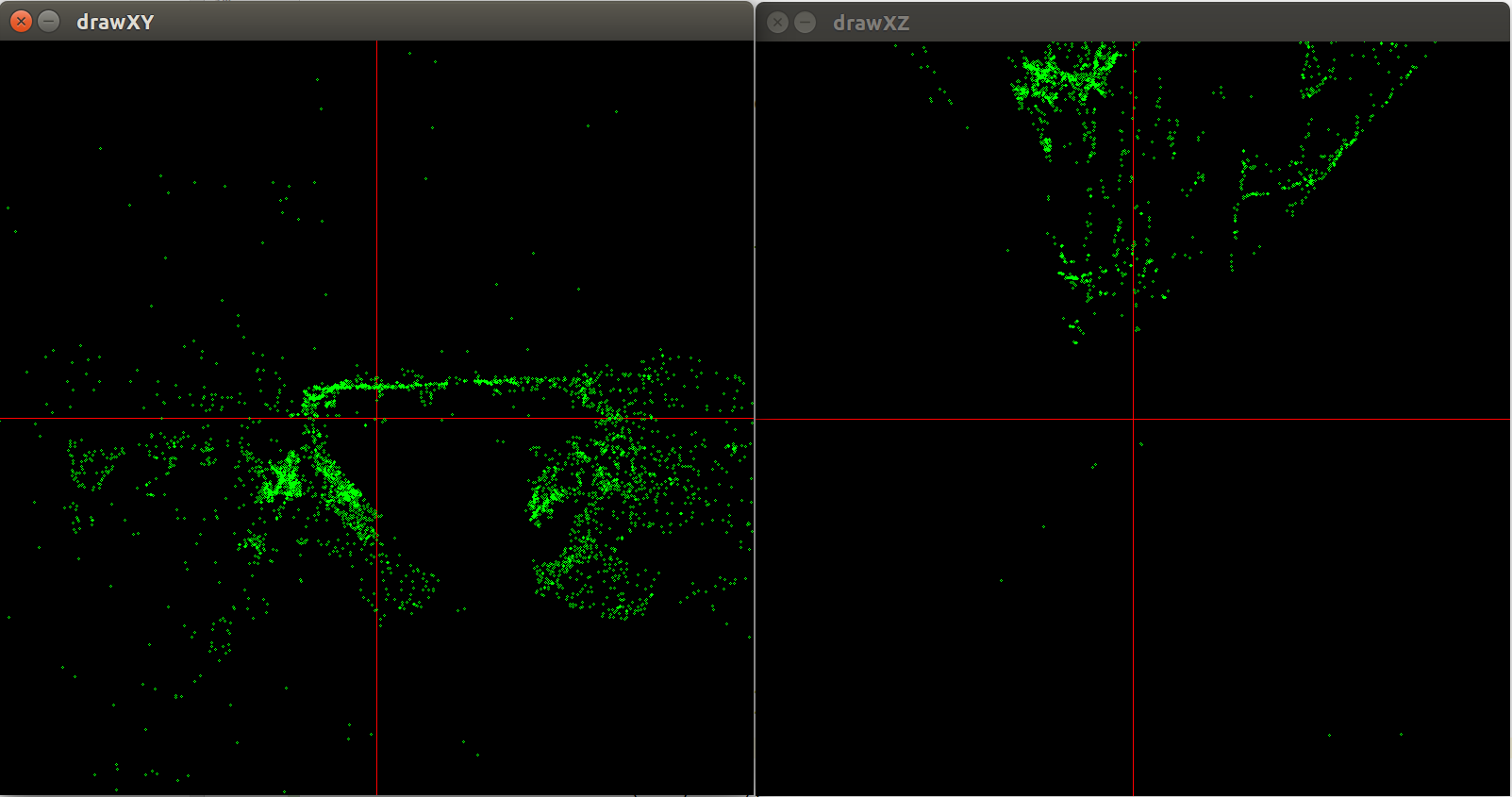

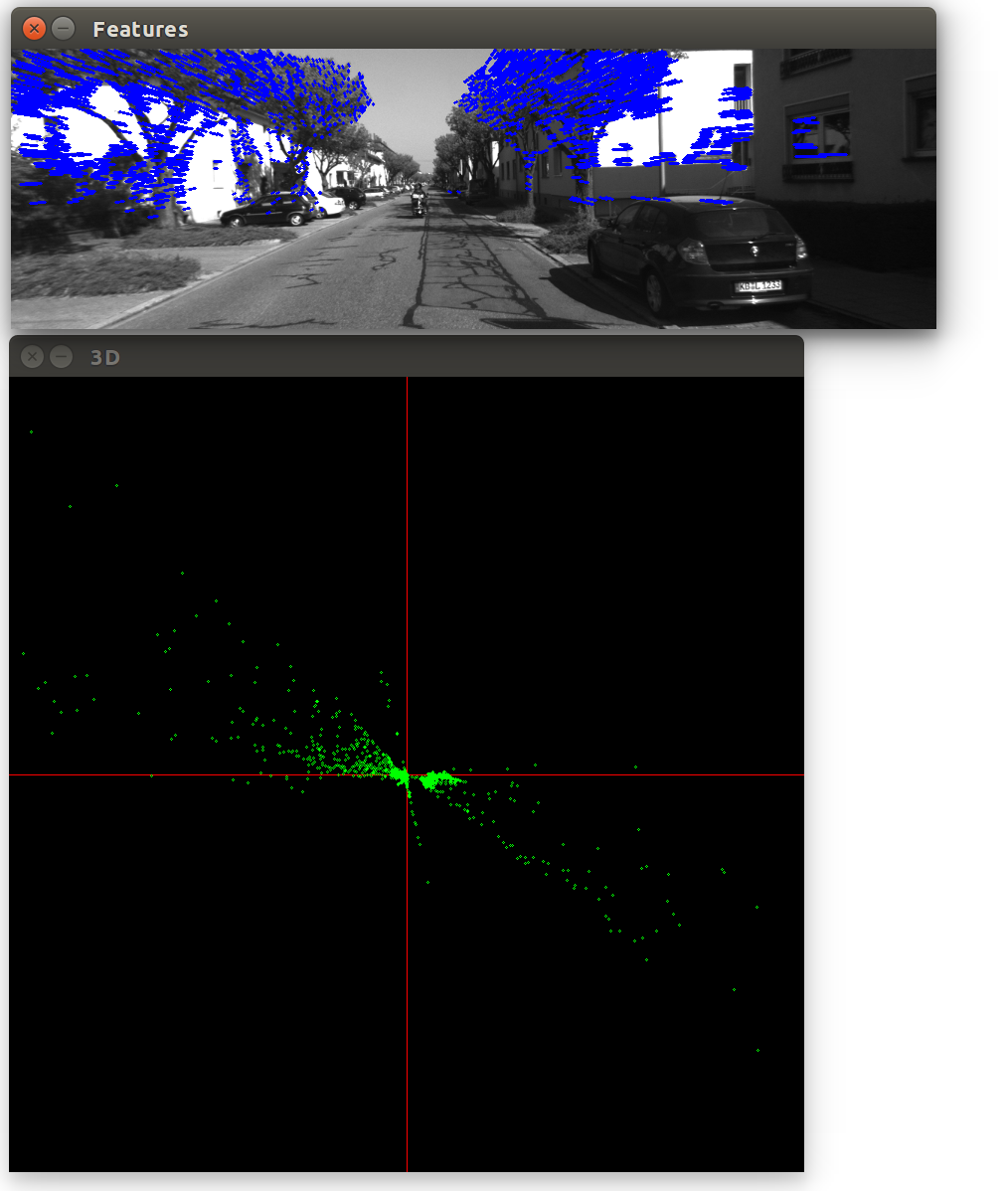

The result looks like this where the plot at the bottom is the x and y coordinates of the result, centered on the red cross:

Plotted with this loop:

for (int j = 0; j < points_3d.size(); j++) {

cv::Point2d draw_pos = cv::Point2d(points_3d[j].x * scale + drawXY.cols / 2,

points_3d[j].y * scale + drawXY.rows / 2);

cv::circle(drawXY, draw_pos, 1, cv::Scalar(0, 255, 0), 1);

}

Any idea what the issue is, I have been struggling with this for a few days.