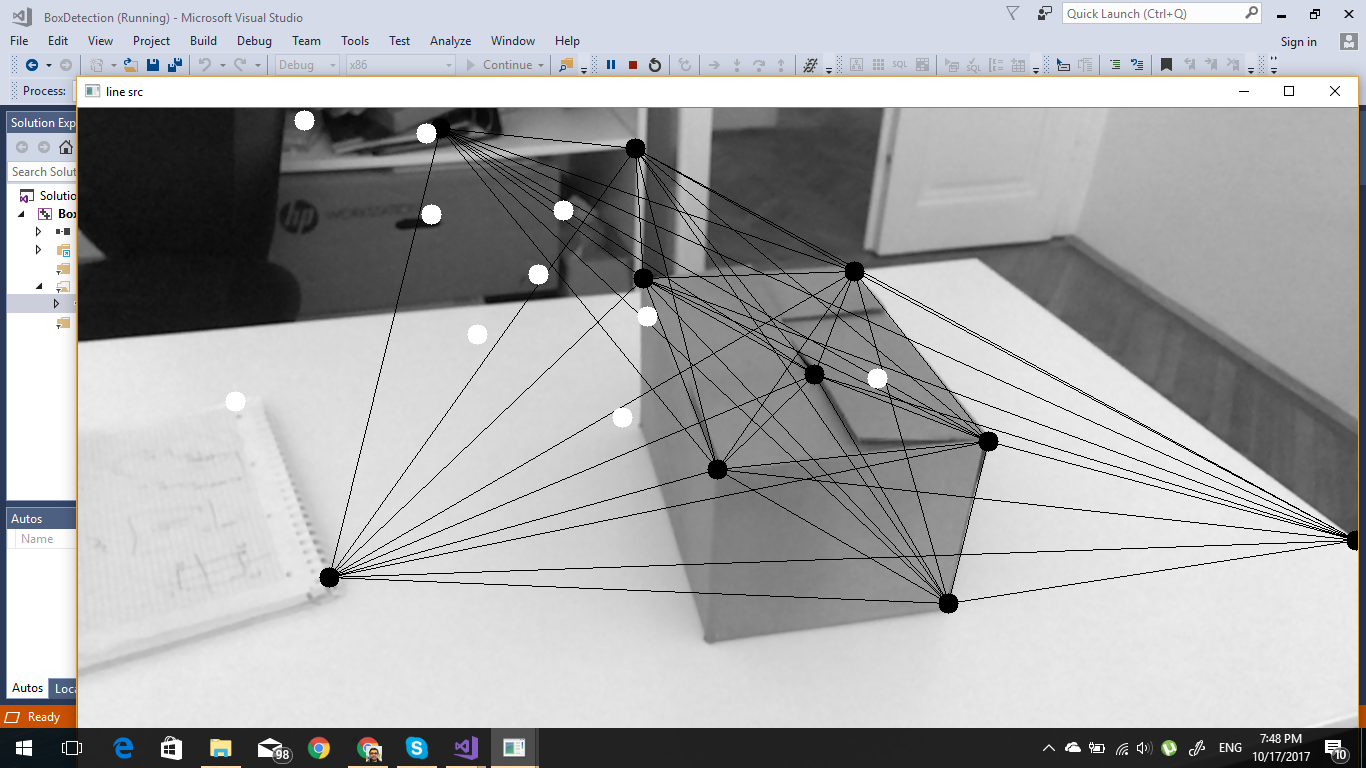

Step 1: I'm trying to measure the volume of a box, so I first detect its corners. The user provides a snap point and an axis, so I have a predefined point in world coordinates.

Step 2: I convert the corner points into 3D positions using the inverse camera intrinsics.

Step 3: I cast rays through those points. The ground plane passes through (0, 0, 0), and the rays are intersected with that plane, whose normal is the UP axis.
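
Step 2 can be sketched without OpenCV as follows. This is only a minimal illustration, assuming a pinhole model with focal lengths `fx`, `fy` and principal point `cx`, `cy`. The `Intrinsics` struct and the `backProject` function are hypothetical names, not part of the code in this question, and the sketch places the image plane at z = +1, whereas the code below uses z = -1.

```cpp
#include <array>
#include <cassert>

using Vec3 = std::array<float, 3>;

// Hypothetical container for the four pinhole parameters of K.
struct Intrinsics {
    float fx, fy;  // focal lengths
    float cx, cy;  // principal point
};

// Back-project image point (u, v) to a ray direction in camera space.
// For K = [[fx, 0, cx], [0, fy, cy], [0, 0, 1]] this equals K^-1 * (u, v, 1).
Vec3 backProject(const Intrinsics& k, float u, float v) {
    assert(k.fx != 0.0f && k.fy != 0.0f);  // K must be invertible
    return { (u - k.cx) / k.fx, (v - k.cy) / k.fy, 1.0f };
}
```

The image coordinates passed in have to be in the same units as the intrinsics (pixels with pixel-unit intrinsics, or normalized coordinates with normalized intrinsics).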

Question: How can I tell which points lie on the ground and which points are above it?

Here is the code:

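    #include <opencv2/opencv.hpp>
    #include <cmath>
    #include <vector>

    using namespace cv;

    // A ray in 3D space: a start point and a direction.
    struct Ray
    {
        cv::Vec3f origin;
        cv::Vec3f direction;
    };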

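    // Intersects a ray with the plane given by `normal` and a point `coord` on the plane.
    // Returns false if the ray is parallel to the plane; otherwise writes the hit point to `contact`.
    bool linePlaneIntersection( Vec3f& contact, Vec3f direction, Vec3f rayOrigin,
                                Vec3f normal, Vec3f coord ) {
        // Plane equation: normal . p = d
        float d = normal.dot(coord);
        float denom = normal.dot(direction);
        if ( std::abs(denom) < 1e-6f ) {
            return false; // No intersection, the line is parallel to the plane
        }
        // Ray parameter x at which rayOrigin + direction * x lies on the plane
        float x = (d - normal.dot(rayOrigin)) / denom;
        // Output contact point. x was computed for the unnormalized direction,
        // so the direction must not be normalized here.
        contact = rayOrigin + direction * x;
        return true;
    }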
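
The intersection used in Step 3 can be checked in isolation with a small OpenCV-free sketch. The names `dot3` and `rayPlaneHit` are hypothetical, and the usage note assumes a camera placed 2 units above the ground, which is not a value taken from this question. If the ray origin itself lies on the plane, the only intersection is the origin, so the ray needs a start point off the ground for the result to be useful.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<float, 3>;

// Hypothetical helper: dot product of two 3-vectors.
float dot3(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Intersect the ray origin + t * direction (t >= 0) with the plane that
// passes through pointOnPlane and has the given normal.
// Returns false if the ray is parallel to the plane or the plane lies
// behind the ray origin; otherwise writes the intersection to `hit`.
bool rayPlaneHit(const Vec3& origin, const Vec3& direction,
                 const Vec3& normal, const Vec3& pointOnPlane, Vec3& hit) {
    assert(dot3(normal, normal) > 0.0f);  // the normal must be non-zero
    const float denom = dot3(normal, direction);
    if (std::fabs(denom) < 1e-6f) {
        return false;  // parallel to the plane
    }
    // t is expressed in units of the (unnormalized) direction vector,
    // so the same vector is used to compute the hit point.
    const float t = (dot3(normal, pointOnPlane) - dot3(normal, origin)) / denom;
    if (t < 0.0f) {
        return false;  // intersection is behind the ray origin
    }
    hit = { origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2] };
    return true;
}
```

With an origin of (0, 2, 0), a direction of (0, -1, -1), and the ground plane through (0, 0, 0) with normal (0, 1, 0), the ray parameter is t = 2 and the hit point is (0, 0, -2).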

    int main( int argc, char** argv )
    {
        Mat src, src_copy, edges, dst;
        src = imread( "freezeFrame__1508152029892.png", 0 ); // flag 0 loads the image as grayscale
        src_copy = src.clone();

        std::vector< cv::Point2f > corners;

        // maxCorners – The maximum number of corners to return. If more corners
        // than that are found, the strongest of them are returned
        int maxCorners = 10;

        // qualityLevel – Characterizes the minimal accepted quality of image corners;
        // the value of the parameter is multiplied by the best corner quality
        // measure (which is the min eigenvalue, see cornerMinEigenVal(),
        // or the Harris function response, see cornerHarris()).
        // Corners whose quality measure is less than the product are rejected.
        // For example, if the best corner has the quality measure = 1500
        // and qualityLevel = 0.01, then all corners whose quality measure is
        // less than 15 are rejected.
        double qualityLevel = 0.01;

        // minDistance – The minimum possible Euclidean distance between the returned corners
        double minDistance = 20.;

        // mask – The optional region of interest. If the mask is not empty (then it
        // needs to have the type CV_8UC1 and the same size as the image), it specifies
        // the region in which the corners are detected
        cv::Mat mask;

        // blockSize – Size of the averaging block for computing the derivative covariation
        // matrix over each pixel neighborhood, see cornerEigenValsAndVecs()
        int blockSize = 3;

        // useHarrisDetector – Indicates whether to use the Harris operator or cornerMinEigenVal()
        bool useHarrisDetector = false;

        // k – Free parameter of the Harris detector
        double k = 0.04;

        cv::goodFeaturesToTrack( src, corners, maxCorners, qualityLevel, minDistance, mask, blockSize, useHarrisDetector, k );

        // Mark every detected corner
        for ( size_t i = 0; i < corners.size(); i++ )
        {
            cv::circle( src, corners[i], 10, cv::Scalar( 0, 255, 255 ), -1 );
        }

        // Connect every pair of corners with a line
        for ( size_t i = 0; i < corners.size(); i++ )
        {
            cv::Point2f pt1 = corners[i];
            for ( size_t j = 0; j < corners.size(); j++ )
            {
                cv::Point2f pt2 = corners[j];
                line( src, pt1, pt2, cv::Scalar( 0, 255, 0 ) );
            }
        }

        // 3x3 camera intrinsics matrix and its inverse
        Mat cameraIntrinsics( 3, 3, CV_32F );
        cameraIntrinsics.at<float>( 0, 0 ) = 1.6003814935684204;
        cameraIntrinsics.at<float>( 0, 1 ) = 0;
        cameraIntrinsics.at<float>( 0, 2 ) = -0.0021958351135253906;
        cameraIntrinsics.at<float>( 1, 0 ) = 0;
        cameraIntrinsics.at<float>( 1, 1 ) = 1.6003814935684204;
        cameraIntrinsics.at<float>( 1, 2 ) = -0.0044271680526435375;
        cameraIntrinsics.at<float>( 2, 0 ) = 0;
        cameraIntrinsics.at<float>( 2, 1 ) = 0;
        cameraIntrinsics.at<float>( 2, 2 ) = 1;

        Mat invCameraIntrinsics = cameraIntrinsics.inv();

        std::vector<cv::Point3f> points3D;
        std::vector<Ray> rays;

        // Lift each corner to z = -1 and build a ray from the origin through it
        for ( size_t i = 0; i < corners.size(); i++ )
        {
            cv::Point3f pt;
            pt.z = -1.0f;
            pt.x = corners[i].x;
            pt.y = corners[i].y;
            points3D.push_back( pt );

            Ray ray;
            ray.origin = Vec3f( 0, 0, 0 );
            ray.direction = Vec3f( pt.x, pt.y, pt.z );
            rays.push_back( ray );
        }

        // Apply the inverse intrinsics to the lifted corner points
        std::vector<cv::Point3f> pointsTransformed3D;
        cv::transform( points3D, pointsTransformed3D, invCameraIntrinsics );

        // Intersect each ray with a plane whose normal is UP (0, 1, 0)
        std::vector<cv::Vec3f> contacts;
        for ( size_t i = 0; i < pointsTransformed3D.size(); i++ )
        {
            Vec3f pt( pointsTransformed3D[i].x, pointsTransformed3D[i].y, pointsTransformed3D[i].z );
            cv::Vec3f contact;
            linePlaneIntersection( contact, rays[i].direction, rays[i].origin, Vec3f( 0, 1, 0 ), pt );
            contacts.push_back( contact );
        }

        // Draw the transformed points
        for ( size_t i = 0; i < points3D.size(); i++ )
        {
            cv::Point2f pt;
            pt.x = pointsTransformed3D[i].x;
            pt.y = pointsTransformed3D[i].y;
            cv::circle( src, pt, 10, cv::Scalar( 255. ), -1 );
        }

        imshow( "line src", src );
        cv::waitKey( 0 );
        return 0;
    }
