When I try simple tests with OpenCV's BRISK feature detector, I get poor consistency of feature point location and orientation. If I take an image with clear features and simply translate its pixels (no resampling), I would expect the feature locations and orientations to be translated but otherwise unchanged. That's not what I'm finding. Shift-invariant features are part of what the "R" (robust) in the name "BRISK" is supposed to deliver, right?

Below are pictures and source code for a simple test. Using Photoshop and a 15 point Zapf Dingbat font, I created some nice white features on a black background in a 200x200 pixel image (zapf0.png). The features are far from the edges, to eliminate edge effects. You'd expect high-contrast, high-frequency image details like this to be about the easiest case for a feature detector that you could imagine. I then translated that picture 23 pixels to the left to get zapf1.png, simulating a stereo pair, and the simplest stereo pair you could imagine: no noise, no scaling, no rotation, no occluded features. Both are RGB pictures with 8 bits per channel.
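To make "translate its pixels (no resampling)" concrete, here is a minimal sketch of a whole-pixel shift. It assumes a single-channel, row-major 8-bit buffer rather than a `cv::Mat`, and `shift_image` is a hypothetical helper name, not an OpenCV function and not part of the test program.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: shift a row-major, single-channel 8-bit image by a
// whole number of pixels. Pixels shifted in from outside the image are set
// to `fill`. No interpolation is involved, so every surviving pixel keeps
// its exact value.
std::vector<std::uint8_t> shift_image(const std::vector<std::uint8_t> &src,
                                      int width, int height,
                                      int dx, int dy, std::uint8_t fill = 0) {
    std::vector<std::uint8_t> dst(src.size(), fill);
    for (int y = 0; y < height; y++) {
        int sy = y - dy;  // source row for destination row y
        if (sy < 0 || sy >= height) continue;
        for (int x = 0; x < width; x++) {
            int sx = x - dx;  // source column for destination column x
            if (sx < 0 || sx >= width) continue;
            dst[std::size_t(y) * width + x] = src[std::size_t(sy) * width + sx];
        }
    }
    return dst;
}
```

With this sketch, `shift_image(img, 200, 200, -23, 0)` would move a 200x200 image 23 pixels to the left and fill the vacated columns with black.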

Then I wrote a little C++ test program that runs BRISK with the default parameter values and visualizes feature point locations and orientations, linked that with OpenCV 2.4.5, and ran it, like so:

    brisk_orient zapf0.png zapf0_orient.png; brisk_orient zapf1.png zapf1_orient.png

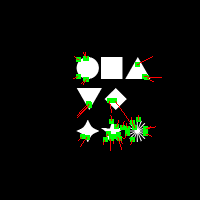

This program doesn't compute descriptors or do matching; it just does detection, and then visualizes the results. The two generated image files, zapf0_orient.png and zapf1_orient.png:

The results are disappointingly inconsistent. I'd expect these two images to have nearly identical features, except for translation. But if you look at the two squares and the two triangles, only about 2 out of 8 features match, in terms of position and orientation. I tried other parameter values for threshold and octaves and got similarly bad results.
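Rather than judging the match by eye, the comparison could be made numeric. The following is only a sketch under stated assumptions: `Kp` is a hypothetical stand-in for the position and angle fields of `cv::KeyPoint`, angles are in degrees as in the test program, and the position and angle tolerances are arbitrary values the caller picks, not anything defined by BRISK.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the fields of cv::KeyPoint used here.
struct Kp {
    float x, y;   // position in pixels
    float angle;  // orientation in degrees
};

// Smallest absolute difference between two angles in degrees, in [0, 180].
// Handles wraparound, e.g. 350 and 5 degrees are 15 degrees apart.
float angle_diff_deg(float a, float b) {
    float d = std::fmod(std::fabs(a - b), 360.0f);
    return d > 180.0f ? 360.0f - d : d;
}

// Count the keypoints in `a` that reappear in `b` after applying the known
// translation (dx, dy), within the given position and angle tolerances.
int count_repeated(const std::vector<Kp> &a, const std::vector<Kp> &b,
                   float dx, float dy, float pos_tol, float angle_tol) {
    int repeated = 0;
    for (std::size_t i = 0; i < a.size(); i++) {
        for (std::size_t j = 0; j < b.size(); j++) {
            float ex = a[i].x + dx - b[j].x;
            float ey = a[i].y + dy - b[j].y;
            if (std::sqrt(ex * ex + ey * ey) <= pos_tol &&
                angle_diff_deg(a[i].angle, b[j].angle) <= angle_tol) {
                repeated++;
                break;  // count each keypoint of `a` at most once
            }
        }
    }
    return repeated;
}
```

For the image pair above, the call would be something like `count_repeated(kp0, kp1, -23, 0, 1, 10)`, and dividing the result by `kp0.size()` gives a rough repeatability fraction.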

My question: is this an unavoidable weakness of BRISK, a bug in OpenCV's implementation of BRISK, or a result of not using the right parameter settings and inputs to BRISK?

Have others encountered this weakness of BRISK?

thanks -Paul

the two input images:

the C++ source code, brisk_orient.cpp (also available via pastebin)

    // Read an RGB picture file and run the BRISK feature detector on it.
    // Create a picture of the feature points with their orientations, and write it out.

    #include <algorithm>  // for std::min, std::max
    #include <cassert>
    #include <cmath>      // for std::floor, std::ceil, std::fabs, std::cos, std::sin
    #include <cstdlib>    // for std::atoi
    #include <iostream>
    #include <string>
    #include <vector>

    #include <opencv2/core/core.hpp>              // for cv::Mat
    #include <opencv2/features2d/features2d.hpp>  // for cv::BRISK
    #include <opencv2/highgui/highgui.hpp>        // for cv::imread

    // Fill a (2*radius+1)-pixel square centered on (x, y), clipped to the image.
    static void draw_dot(cv::Mat &pic, float x, float y, int radius, const cv::Vec3b &color) {
        int x0 = int(std::floor(x + .5f));
        int y0 = int(std::floor(y + .5f));
        assert(pic.type() == CV_8UC3);
        for (int py = y0 - radius; py <= y0 + radius; py++) {
            for (int px = x0 - radius; px <= x0 + radius; px++) {
                if (px >= 0 && py >= 0 && px < pic.cols && py < pic.rows)
                    pic.at<cv::Vec3b>(py, px) = color;
            }
        }
    }

    static void draw_line_segment(cv::Mat &pic, float x0, float y0, float x1, float y1,
                                  const cv::Vec3b &color) {
        assert(pic.type() == CV_8UC3);
        // std::fabs, so the integer abs() from <stdlib.h> can never be picked up
        float dx = std::fabs(x1 - x0);
        float dy = std::fabs(y1 - y0);
        // crude jaggy line drawing: step along the longer axis
        if (dx >= dy) {
            if (dx == 0) return;  // zero-length segment
            int ix0 = int(std::ceil(std::min(x0, x1)));
            int ix1 = int(std::ceil(std::max(x0, x1)));
            for (int x = ix0; x < ix1; x++) {
                int y = int(std::floor(y0 + (x + .5 - x0) * (y1 - y0) / (x1 - x0) + .5));
                if (x >= 0 && y >= 0 && x < pic.cols && y < pic.rows)
                    pic.at<cv::Vec3b>(y, x) = color;
            }
        } else {
            int iy0 = int(std::ceil(std::min(y0, y1)));
            int iy1 = int(std::ceil(std::max(y0, y1)));
            for (int y = iy0; y < iy1; y++) {
                int x = int(std::floor(x0 + (y + .5 - y0) * (x1 - x0) / (y1 - y0) + .5));
                if (x >= 0 && y >= 0 && x < pic.cols && y < pic.rows)
                    pic.at<cv::Vec3b>(y, x) = color;
            }
        }
    }

    int main(int argc, char **argv) {
        if (argc <= 2) {
            std::cerr << "Usage: " << argv[0] << " INPIC OUTPIC [THRESH OCTAVES]" << std::endl;
            return 1;
        }
        std::string in_filename = argv[1];
        std::string out_filename = argv[2];
        int thresh = argc > 3 ? std::atoi(argv[3]) : 30;
        int octaves = argc > 4 ? std::atoi(argv[4]) : 3;
        float pattern_scale = 1;

        // read image file
        cv::Mat in_pic = cv::imread(in_filename);
        if (in_pic.empty()) {
            std::cerr << "Could not read " << in_filename << std::endl;
            return 1;
        }
        std::cout << "thresh=" << thresh << " octaves=" << octaves
                  << " pattern_scale=" << pattern_scale << std::endl;
        std::cout << "in_pic is " << in_pic.cols << "x" << in_pic.rows
                  << " type=" << in_pic.type() << " elemSize1=" << in_pic.elemSize1()
                  << " channels=" << in_pic.channels() << std::endl;

        // initialize brisk detector
        cv::BRISK brisk(thresh, octaves, pattern_scale);
        brisk.create("Feature2D.BRISK");

        // run BRISK on input image to compute keypoints
        std::vector<cv::KeyPoint> keypoints;
        brisk.detect(in_pic, keypoints);
        std::cout << "detected " << keypoints.size() << " keypoints" << std::endl;

        // create annotated image with keypoint locations and orientations
        cv::Mat out_pic = in_pic.clone();
        for (int i = 0; i < int(keypoints.size()); i++) {
            float x = keypoints[i].pt.x;
            float y = keypoints[i].pt.y;
            float len = keypoints[i].size / 2.f;
            float angle = float(CV_PI / 180. * keypoints[i].angle);  // convert degrees to radians
            draw_dot(out_pic, x, y, 2, cv::Vec3b(0, 255, 0));
            draw_line_segment(out_pic, x, y, x + len * std::cos(angle), y + len * std::sin(angle),
                              cv::Vec3b(0, 0, 255));
        }

        // write annotated image to file
        cv::imwrite(out_filename, out_pic);
        return 0;
    }