Hello,

I apologize in advance for a question that is not directly related to OpenCV, but I have a question about a paper on visual odometry that I am reading. I am new to image processing, and since I am studying this out of personal interest rather than for a project, I don't have anyone to ask these kinds of questions. I have numbered my questions below so that the answers can be better organised.

On page 3, under 2. Algorithm → 2.1 Problem formulation → Output, the paper says:

"The vector, t can only be computed upto a scale factor in our monocular scheme."

The paper mentions this scale factor for the translation several times, but I don't understand what it actually means.
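A minimal numeric sketch of what "up to a scale factor" usually means for a single camera, assuming an ideal pinhole model with no rotation. The focal length `F`, principal point `CX`/`CY`, the portrait size and its distance are made-up illustrative values, not taken from the paper. Scaling every 3D point and the camera translation `t` by the same factor `s` gives exactly the same pixel coordinates in both frames, so the images alone cannot distinguish the two situations.

```python
import numpy as np

# Hypothetical pinhole intrinsics (focal length and principal point, in pixels);
# illustrative values only, not taken from the paper.
F, CX, CY = 500.0, 320.0, 240.0

def project(points_cam):
    """Project Nx3 points given in camera coordinates to Nx2 pixel coordinates."""
    x, y, z = points_cam[:, 0], points_cam[:, 1], points_cam[:, 2]
    return np.stack([F * x / z + CX, F * y / z + CY], axis=1)

def two_views(points_world, t):
    """Pixels in frame 1 (camera at origin) and frame 2 (camera moved by t, no rotation)."""
    frame1 = project(points_world)
    # Moving the camera by t shifts every point by -t in camera coordinates.
    frame2 = project(points_world - t)
    return frame1, frame2

# Made-up scene: four portrait corners, 1 m wide, 0.8 m tall, 4 m in front of the camera.
corners = np.array([[-0.5, -0.4, 4.0],
                    [ 0.5, -0.4, 4.0],
                    [ 0.5,  0.4, 4.0],
                    [-0.5,  0.4, 4.0]])
t = np.array([0.0, 0.0, 1.0])  # camera drives 1 m straight forward along the optical axis

s = 3.0  # any positive scale factor
a1, a2 = two_views(corners, t)          # original scene and motion
b1, b2 = two_views(s * corners, s * t)  # 3x larger scene, 3x longer motion

# Both pairs of frames contain identical pixel coordinates.
print(np.allclose(a1, b1), np.allclose(a2, b2))
```

Because both scenes produce the same pixels, the images fix only the direction of `t`; its length has to come from extra information, such as a known camera height above the ground, wheel odometry, or an object of known size.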

Let me give an example to show how I understand the translation vector, the rotation, and the general idea of visual odometry. Please correct me wherever I am wrong.

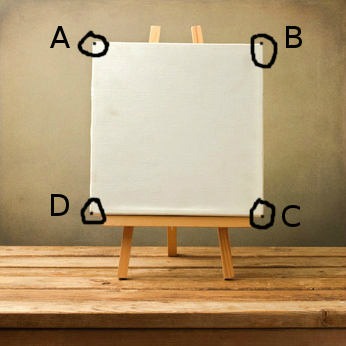

Let's say we use a single camera on a mobile robot for visual odometry, and we capture the following image as our first frame. We run a feature detector on it (FAST, for example) and, for simplicity, detect only the 4 corners of the portrait (A, B, C, D, marked in black), with image positions A(ax, ay), B(bx, by), C(cx, cy) and D(dx, dy):

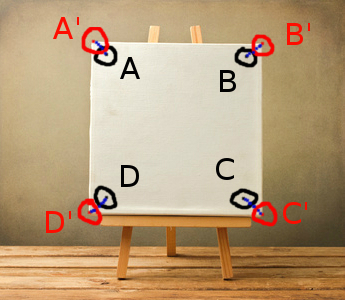

Now suppose the robot moves straight forward, without turning, and we capture the next frame. In it we match the same corners (for example with the `calcOpticalFlowPyrLK()` function) and draw them in red this time as A', B', C', D', with positions A'(ax', ay'), B'(bx', by'), C'(cx', cy') and D'(dx', dy'), while the points from the first frame are still drawn in black.

1. Isn't the vector t simply the difference between these matched positions (the blue lines in the image)?

2. How should I define it? For example t = [ax'-ax, ay'-ay], t = [bx'-bx, by'-by], t = [cx'-cx, cy'-cy], t = [dx'-dx, dy'-dy], or something else?

3. I imagine the rotation as the angles by which these matched points rotate as the camera moves. Is this correct?

4. Is the third dimension assumed to be constant?

5. What does the sentence I quoted above actually mean? What is a scale factor, and why should a translation need one?
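The pixel-difference idea in the first two questions can be checked with a small pinhole-camera sketch. Again the intrinsics `F`, `CX`, `CY` and the portrait 4 m ahead are made-up illustrative values, and the camera is assumed to translate straight forward with no rotation. In the usual formulation t is the camera's 3D translation (here `[0, 0, 1]`), and the sketch computes the per-corner 2D differences [x'-x, y'-y] to compare them against it.

```python
import numpy as np

# Hypothetical pinhole intrinsics (pixels); illustrative values only.
F, CX, CY = 500.0, 320.0, 240.0

def project(points_cam):
    """Project Nx3 camera-frame points to Nx2 pixel coordinates."""
    return np.stack([F * points_cam[:, 0] / points_cam[:, 2] + CX,
                     F * points_cam[:, 1] / points_cam[:, 2] + CY], axis=1)

# Made-up portrait corners A, B, C, D, 4 m in front of the camera.
corners = np.array([[-0.5, -0.4, 4.0],
                    [ 0.5, -0.4, 4.0],
                    [ 0.5,  0.4, 4.0],
                    [-0.5,  0.4, 4.0]])
# 3D camera translation: 1 m straight forward, no rotation.
t = np.array([0.0, 0.0, 1.0])

first = project(corners)       # A, B, C, D in the first frame
second = project(corners - t)  # A', B', C', D' after the camera moves by t
flow = second - first          # per-corner [x' - x, y' - y], the "blue" vectors

for name, d in zip("ABCD", flow):
    print(name, np.round(d, 2))
```

With these numbers every corner moves by about 20.8 px horizontally and 16.7 px vertically, but with a different sign for each corner: all four vectors point away from the image centre. None of the four 2D differences equals the single 3D translation, which is why visual odometry pipelines typically estimate t (and the rotation) from all matches together, for example through the essential matrix, rather than from one pixel displacement.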

Please bear with me if any follow-up questions come up.

Thank you in advance for your answers and your time,

Chris