This forum is disabled, please visit https://forum.opencv.org

| 1 | initial version |

Let's wrap some stuff up, any remarks more than welcome. I tried applying a per element weight factor, however this didn't seem to work, giving raise to an error in OpenCV execution:

OpenCv Error: Bad argument < params.class_weights must be 1d floating-pint vector containing as many elements as the number of classes >

This however means that it doesn't follow the default approach of LibSVM.

However, by specifying an equal weight or different weights for each class, it seems that my support vectors do not change in my XML file, which is quite weird as I see it. Or does anyone know why the support vectors should be identical and is this behaviour meant to be?

| 2 | No.2 Revision |

Let's wrap some stuff up, any remarks more than welcome. I tried applying a per element weight factor, however this didn't seem to work, giving raise to an error in OpenCV execution:

OpenCv Error: Bad argument < params.class_weights must be 1d floating-pint vector containing as many elements as the number of classes >

This however means that it doesn't follow the default approach of LibSVM.

However, by specifying an equal weight or different weights for each class, it seems that my support vectors do not change in my XML file, which is quite weird as I see it. Or does anyone know why the support vectors should be identical and is this behaviour meant to be?

UPDATE 1

I have downloaded the Daimler person detector dataset. It has about 15.000 positive images (precropped to 48x96 resolution) and 7000 negative images (full size background images). If have taken the following steps:

Took the 15000 positive windows and created the HOG descriptor from it. Parameters are windowSize 48x96 cellSize 8x8 blockSize 16x16 and windowStride 8x8, which are the official parameters used.

Took the 7000 negatives and cut 15000 random 48x96 windows from those. Also put them through the descriptor creation process.

Have added both sets to a training vector matrix and created the corresponding labels.

Performed the SVM training using the following parameters

CvSVMParams params;

params.svm_type = CvSVM::C_SVC;

params.kernel_type = CvSVM::LINEAR;

params.C = 1000; //high misclassification cost

params.term_crit = cvTermCriteria(CV_TERMCRIT_ITER, 1000, 1e-6); //stopping criteria loops = 1000 or EPS = 1e-6

Used a conversion function, inside a wrapper_class to generate a single vector for the HOG descriptor using the following code

void LinearSVM::get_primal_form_support_vector(std::vector<float>& support_vector) const {

int sv_count = get_support_vector_count();

const CvSVMDecisionFunc* df = decision_func;

const double* alphas = df[0].alpha;

double rho = df[0].rho;

int var_count = get_var_count();

support_vector.resize(var_count, 0);

for (unsigned int r = 0; r < (unsigned)sv_count; r++) {

float myalpha = alphas[r];

const float* v = get_support_vector(r);

for (int j = 0; j < var_count; j++,v++) {

support_vector[j] += (-myalpha) * (*v);

}

}

support_vector.push_back(rho);

}

Then loaded in a set of test images and performed the multiscale detector on it which is integrated in the HOGDescriptor function.

vector<float> support_vector;

SVM_model.get_primal_form_support_vector(support_vector);

hog_detect.setSVMDetector(support_vector);

hog_detect.detectMultiScale(test_image, locations, 0.0, Size(), Size(), 1.01);

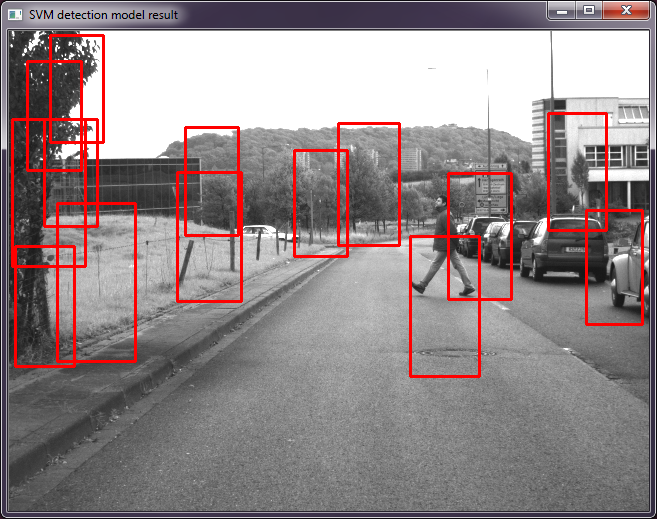

However, the result is far from satisfying ... as you can see below

So this raises some questions:

| 3 | No.3 Revision |

Let's wrap some stuff up, any remarks more than welcome. I tried applying a per element weight factor, however this didn't seem to work, giving raise to an error in OpenCV execution:

OpenCv Error: Bad argument < params.class_weights must be 1d floating-pint vector containing as many elements as the number of classes >

This however means that it doesn't follow the default approach of LibSVM.

However, by specifying an equal weight or different weights for each class, it seems that my support vectors do not change in my XML file, which is quite weird as I see it. Or does anyone know why the support vectors should be identical and is this behaviour meant to be?

UPDATE 1

I have downloaded the Daimler person detector dataset. It has about 15.000 positive images (precropped to 48x96 resolution) and 7000 negative images (full size background images). If have taken the following steps:

Took the 15000 positive windows and created the HOG descriptor from it. Parameters are windowSize 48x96 cellSize 8x8 blockSize 16x16 and windowStride 8x8, which are the official parameters used.

Took the 7000 negatives and cut 15000 random 48x96 windows from those. Also put them through the descriptor creation process.

Have added both sets to a training vector matrix and created the corresponding labels.

Performed the SVM training using the following parameters

CvSVMParams params;

params.svm_type = CvSVM::C_SVC;

params.kernel_type = CvSVM::LINEAR;

params.C = 1000; //high misclassification cost

params.term_crit = cvTermCriteria(CV_TERMCRIT_ITER, 1000, 1e-6); //stopping criteria loops = 1000 or EPS = 1e-6

Used a conversion function, inside a wrapper_class to generate a single vector for the HOG descriptor using the following code

void LinearSVM::get_primal_form_support_vector(std::vector<float>& support_vector) const {

int sv_count = get_support_vector_count();

const CvSVMDecisionFunc* df = decision_func;

const double* alphas = df[0].alpha;

double rho = df[0].rho;

int var_count = get_var_count();

support_vector.resize(var_count, 0);

for (unsigned int r = 0; r < (unsigned)sv_count; r++) {

float myalpha = alphas[r];

const float* v = get_support_vector(r);

for (int j = 0; j < var_count; j++,v++) {

support_vector[j] += (-myalpha) * (*v);

}

}

support_vector.push_back(rho);

}

Then loaded in a set of test images and performed the multiscale detector on it which is integrated in the HOGDescriptor function.

vector<float> support_vector;

SVM_model.get_primal_form_support_vector(support_vector);

hog_detect.setSVMDetector(support_vector);

hog_detect.detectMultiScale(test_image, locations, 0.0, Size(), Size(), 1.01);

However, the result is far from satisfying ... as you can see below

So this raises some questions: