
| 1 | initial version |

Are these models in any way optimized for OpenCV (I doubt it)?

You don't optimize a network for OpenCV, but you can design a network for speed. See for instance the MobileNets architecture (MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications).
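To make "designing a network for speed" concrete, here is a minimal sketch of the cost comparison behind MobileNets, which replace a standard convolution with a depthwise convolution followed by a 1x1 pointwise convolution. The function names and the layer shape are only illustrative assumptions, and the counts are multiply-adds for a single layer, not a benchmark.

```python
def standard_conv_cost(dk, m, n, df):
    """Multiply-adds of a standard dk x dk convolution with m input channels,
    n output channels and a df x df output feature map."""
    return dk * dk * m * n * df * df


def separable_conv_cost(dk, m, n, df):
    """Multiply-adds of a depthwise dk x dk convolution followed by a
    1x1 pointwise convolution (depthwise separable convolution)."""
    depthwise = dk * dk * m * df * df
    pointwise = m * n * df * df
    return depthwise + pointwise


# Hypothetical layer shape, chosen only for illustration.
dk, m, n, df = 3, 32, 64, 112
ratio = separable_conv_cost(dk, m, n, df) / standard_conv_cost(dk, m, n, df)
print(f"separable / standard = {ratio:.3f}")  # equals 1/n + 1/dk**2
```

For this 3x3 layer the separable version needs roughly 8 times fewer multiply-adds, which is the kind of saving obtained by changing the architecture rather than the library.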

The first one has been trained with (in my opinion) speed in mind, see how_to_train_face_detector.txt:

This is a brief description of training process which has been used to get res10_300x300_ssd_iter_140000.caffemodel. The model was created with SSD framework using ResNet-10 like architecture as a backbone. Channels count in ResNet-10 convolution layers was significantly dropped (2x- or 4x- fewer channels). The model was trained in Caffe framework on some huge and available online dataset.

fp16 should mean that the weights are stored as 16-bit floating-point numbers (half-precision floating-point format), which means less memory usage and better memory transfer.
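As a rough illustration of what storing weights in fp16 means, the sketch below packs a single value as FP32 and as FP16 using Python's standard `struct` module and compares the size and the rounding error. The value `0.1` and the parameter count are hypothetical, chosen only for the example, and this says nothing about how the Caffe model file itself is laid out.

```python
import struct

value = 0.1  # hypothetical weight value

as_fp32 = struct.pack("<f", value)  # IEEE 754 single precision
as_fp16 = struct.pack("<e", value)  # IEEE 754 half precision

fp32_roundtrip = struct.unpack("<f", as_fp32)[0]
fp16_roundtrip = struct.unpack("<e", as_fp16)[0]

print(len(as_fp32), "bytes, rounding error", abs(fp32_roundtrip - value))
print(len(as_fp16), "bytes, rounding error", abs(fp16_roundtrip - value))

# Storage needed for the weights of a hypothetical model with 1,000,000 parameters.
n_params = 1_000_000
print("FP32 weights:", n_params * struct.calcsize("<f"), "bytes")
print("FP16 weights:", n_params * struct.calcsize("<e"), "bytes")
```

The file is half the size, at the price of a larger rounding error per weight.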

The second model, openface.nn4.small2.v1.t7, is a reduced network (3733968 parameters vs 6959088 for nn4) that tries to keep good accuracy with fewer parameters, and therefore a shorter computation time.

Some more information from what I have read on the subject.

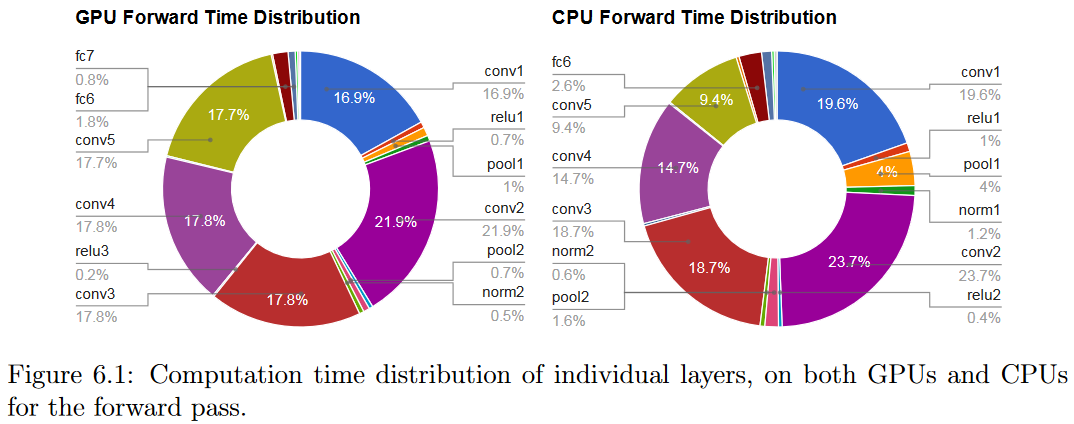

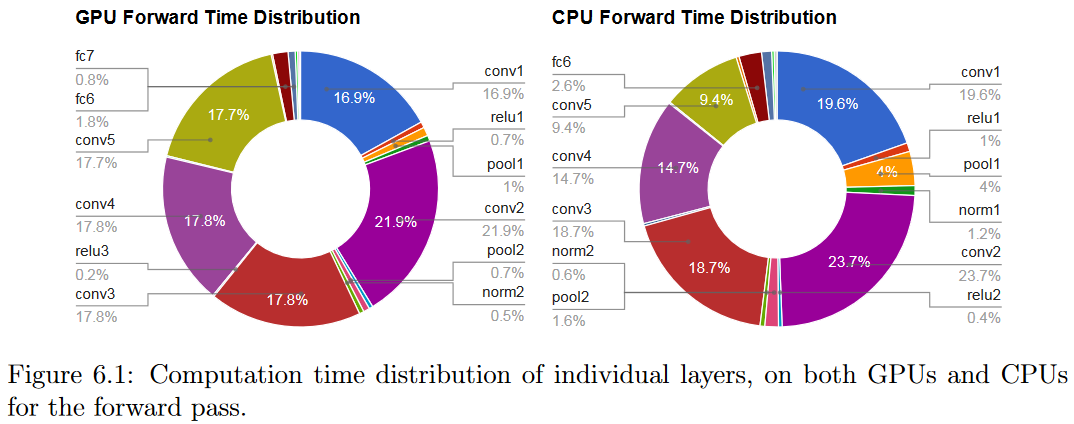

Convolution is a very expensive operation, as you can see in the following image (Learning Semantic Image Representations at a Large Scale, Yangqing Jia):

To perform convolution efficiently, it is computed as a matrix-matrix multiplication instead (GEMM, or General Matrix-Matrix multiplication; see Why GEMM is at the heart of deep learning). The input data must first be re-ordered so that the convolution can be expressed as a GEMM operation. This re-ordering is known as the im2col operation.
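A minimal pure-Python sketch of the idea, assuming a single-channel image, stride 1 and no padding (real libraries handle channels, strides and padding and call an optimized BLAS GEMM instead of the naive `matmul` used here). All function names are illustrative. As in most deep learning frameworks, the "convolution" is computed without flipping the kernel.

```python
def im2col(image, kh, kw):
    """Unroll every kh x kw patch of the image into one row of a matrix."""
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [image[i + di][j + dj] for di in range(kh) for dj in range(kw)]
        for i in range(out_h)
        for j in range(out_w)
    ]


def matmul(a, b):
    """Naive matrix product; this is the part a BLAS GEMM would replace."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def conv2d_gemm(image, kernels):
    """Apply several kernels at once: one row per output pixel, one column per kernel."""
    kh, kw = len(kernels[0]), len(kernels[0][0])
    patches = im2col(image, kh, kw)  # (out_h * out_w) x (kh * kw)
    # Flatten each kernel in the same order as the patches: (kh * kw) x n_kernels.
    weights = [[k[di][dj] for k in kernels] for di in range(kh) for dj in range(kw)]
    return matmul(patches, weights)


def conv2d_direct(image, kernel):
    """Reference sliding-window implementation, used only to check the result."""
    kh, kw = len(kernel), len(kernel[0])
    return [
        sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
        for i in range(len(image) - kh + 1)
        for j in range(len(image[0]) - kw + 1)
    ]


image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
kernels = [[[1, 0], [0, 1]], [[1, 1], [1, 1]]]
result = conv2d_gemm(image, kernels)
print(result[0])  # [7, 14]: both kernels applied to the top-left 2x2 patch
```

The im2col matrix duplicates overlapping pixels, so memory is traded for the ability to use a single, highly tuned matrix multiplication.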

BLAS (Basic Linear Algebra Subprograms) libraries are crucial for good performance on CPU. See for instance the prerequisite dependencies of the Caffe framework:

Caffe has several dependencies:

- CUDA is required for GPU mode.
  - library version 7+ and the latest driver version are recommended, but 6.* is fine too
  - 5.5, and 5.0 are compatible but considered legacy
- BLAS via ATLAS, MKL, or OpenBLAS.
- Boost >= 1.55
- protobuf, glog, gflags, hdf5

Optional dependencies:

- OpenCV >= 2.4 including 3.0
- IO libraries: lmdb, leveldb (note: leveldb requires snappy)
- cuDNN for GPU acceleration (v6)

To achieve better performance, expensive deep learning operations are accelerated using dedicated libraries:

In OpenCV, the convolution layer has been carefully optimized using multithreading, vectorized instructions and cache-friendly optimizations. Still, better performance is achieved when the Intel Inference Engine is installed.

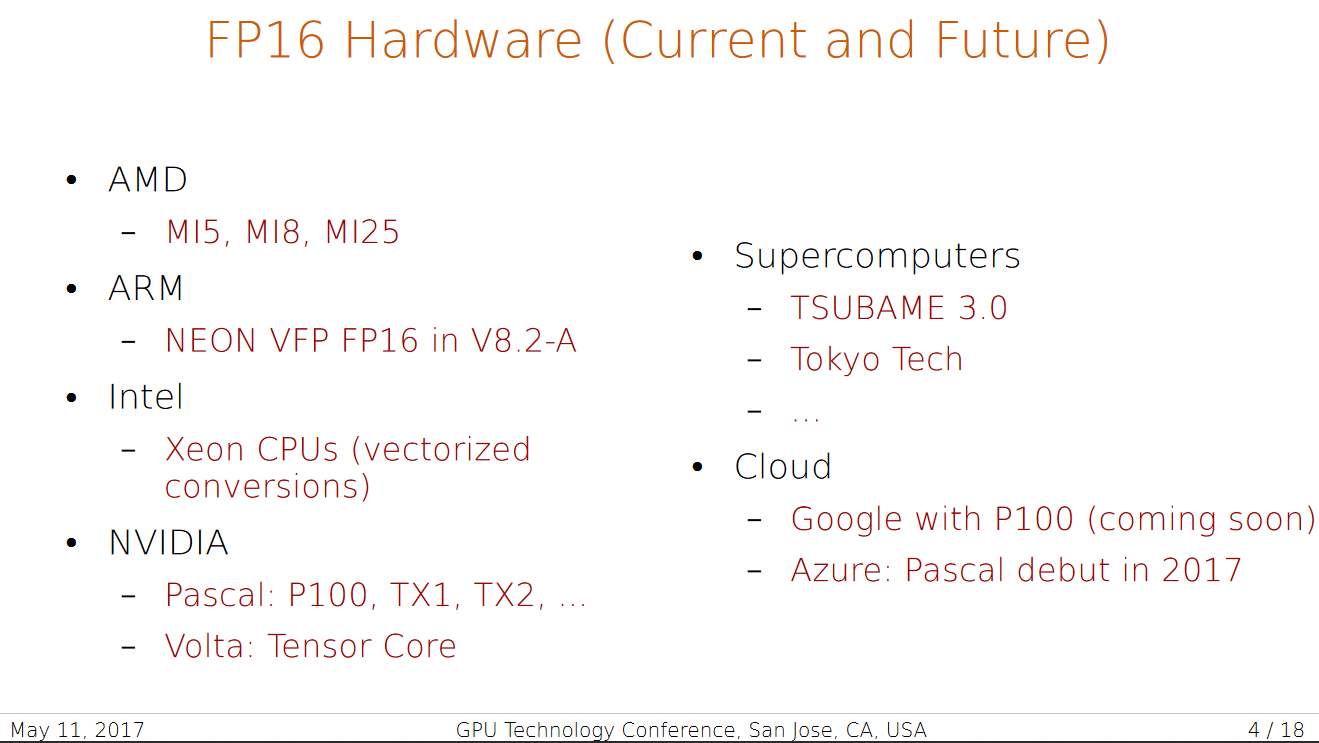

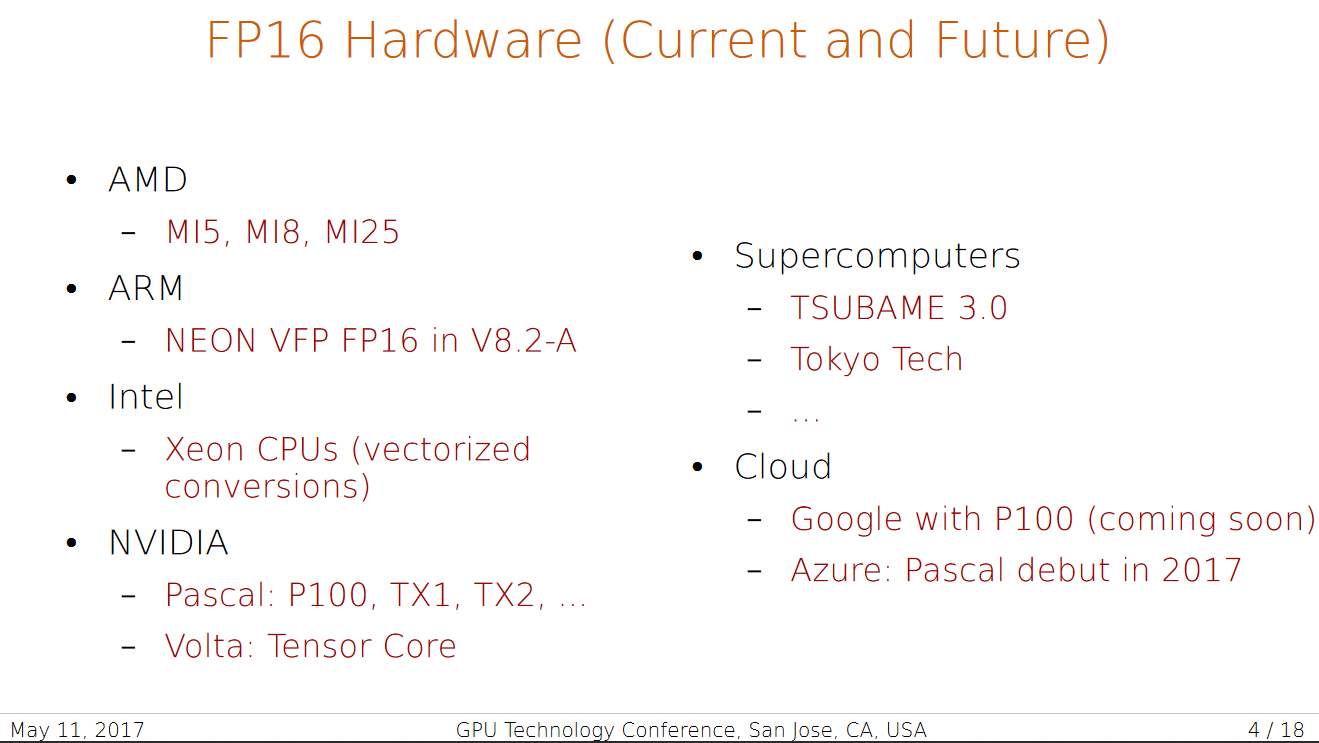

Half or reduced precision is another means of achieving better performance, through faster memory transfers, lower memory usage and more operations per second. The higher theoretical FLOPS of FP16 over FP32 depends on the hardware used: if there is no dedicated FP16 "unit", the operation has to be done in FP32 precision. The following image shows FP16 hardware (Half Precision Benchmarking for HPC):

Specialized hardware for deep learning allows the memory model and the instruction set to be completely optimized for this workload:

| 2 | No.2 Revision |


See also Mixed-Precision Training of Deep Neural Networks:

Mixed-precision training lowers the required resources by using lower-precision arithmetic, which has the following benefits.

- Decrease the required amount of memory. Half-precision floating point format (FP16) uses 16 bits, compared to 32 bits for single precision (FP32). Lowering the required memory enables training of larger models or training with larger minibatches.
- Shorten the training or inference time. Execution time can be sensitive to memory or arithmetic bandwidth. Half-precision halves the number of bytes accessed, thus reducing the time spent in memory-limited layers. NVIDIA GPUs offer up to 8x more half precision arithmetic throughput when compared to single-precision, thus speeding up math-limited layers.
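One reason training is usually "mixed" rather than pure FP16 is that small weight updates can be rounded away in half precision. This is general background rather than something stated in the quote above. The sketch below simulates it with Python's standard `struct` module. The helper names, the starting weight and the update size are hypothetical.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x):
    """Round a Python float to the nearest single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


update = 1e-4  # hypothetical small weight update
w16 = to_fp16(1.0)
w32 = to_fp32(1.0)
for _ in range(1000):
    # Accumulate the same update, rounding to the storage precision each step.
    w16 = to_fp16(w16 + to_fp16(update))
    w32 = to_fp32(w32 + to_fp32(update))

print("FP16 weight after 1000 updates:", w16)
print("FP32 weight after 1000 updates:", w32)
```

Near 1.0 the spacing between FP16 values is about 0.001, so each 0.0001 update is lost and the FP16 weight never moves, while the FP32 copy ends up close to 1.1. Keeping an FP32 copy of the weights while doing the heavy arithmetic in FP16 avoids this.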

Specialized hardware for deep learning allows the memory model and the instruction set to be completely optimized for this workload: