| 1 | initial version |

@Paul Kuo, the problem is that the graph was saved in training mode. I think there is a placeholder similar to isTraining that should be set to False before the graph is saved (note that this concerns the graph, not the checkpoint).

Moreover, you may see some unusual transformations applied to the input image.

Could you try to find that isTraining flag, set it to False, and save the graph again with tf.train.write_graph? Then freeze the same checkpoint files with the new version of the graph. Our first goal is to remove the train/test switches from the graph. Thank you!

| 2 | No.2 Revision |

UPDATE

@Paul Kuo, the following steps create a graph without training/testing switches. Extra steps are required to import it into OpenCV.

Create a script with the following code in the root folder of Age-Gender-Estimate-TF.

    import tensorflow as tf
    import inception_resnet_v1

    # Build the network in inference mode only (phase_train=False).
    inp = tf.placeholder(tf.float32, shape=[None, 160, 160, 3], name='input_image')
    age_logits, gender_logits, _ = inception_resnet_v1.inference(inp, keep_probability=0.8,
                                                                 phase_train=False,
                                                                 weight_decay=1e-5)

    print(age_logits)     # logits/age/BiasAdd
    print(gender_logits)  # logits/gender/BiasAdd

    # Save the graph definition (structure only, no weights) in binary form.
    with tf.Session() as sess:
        graph_def = sess.graph.as_graph_def()
        tf.train.write_graph(graph_def, "", 'inception_resnet_v1.pb', as_text=False)

Here we create a graph definition for testing mode only (phase_train=False). Next, freeze this graph together with the checkpoint:

    python ~/tensorflow/tensorflow/python/tools/freeze_graph.py \
        --input_graph=inception_resnet_v1.pb \
        --input_checkpoint=savedmodel.ckpt \
        --output_graph=frozen_inception_resnet_v1.pb \
        --output_node_names="logits/age/BiasAdd,logits/gender/BiasAdd" \
        --input_binary

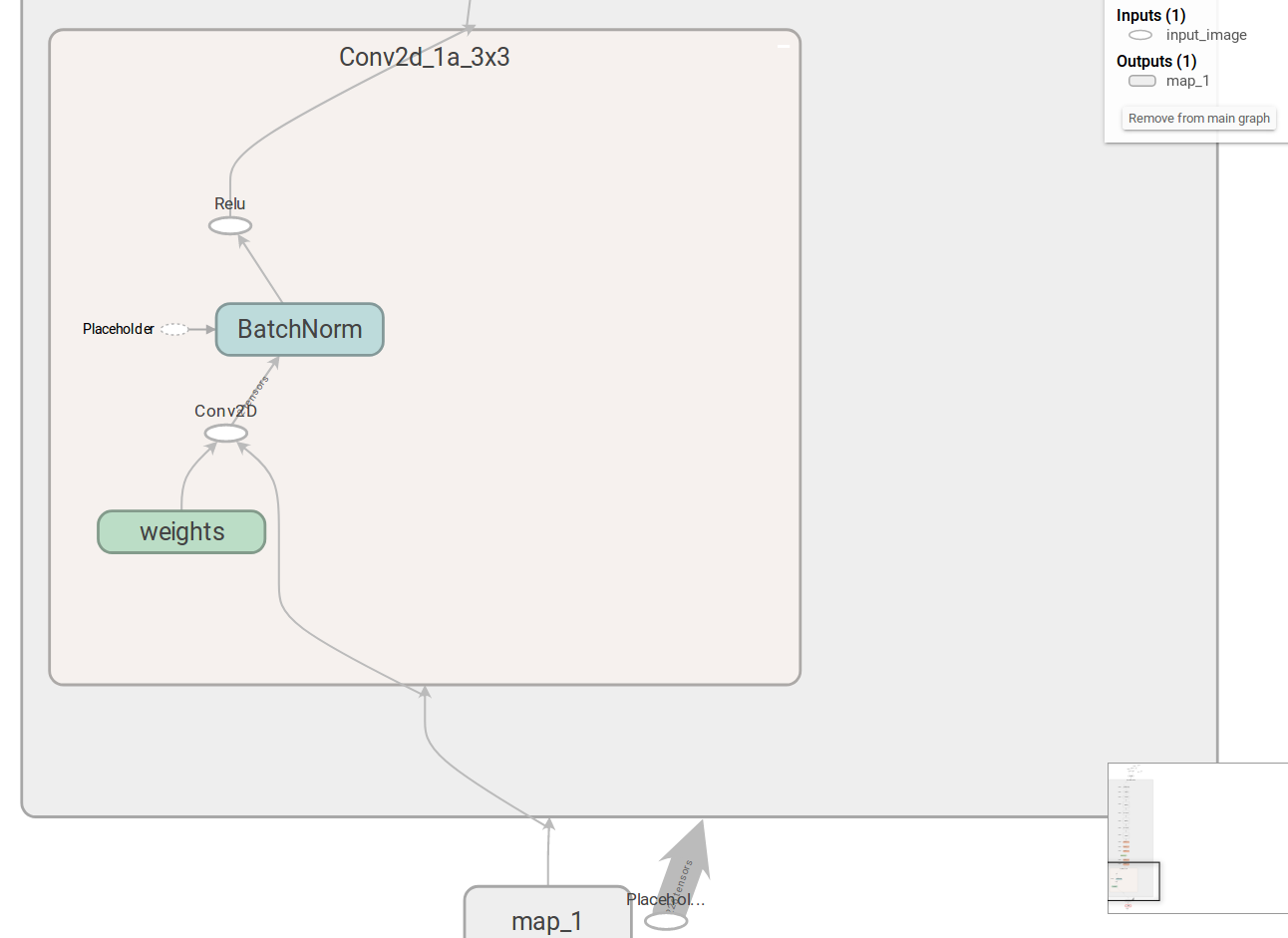

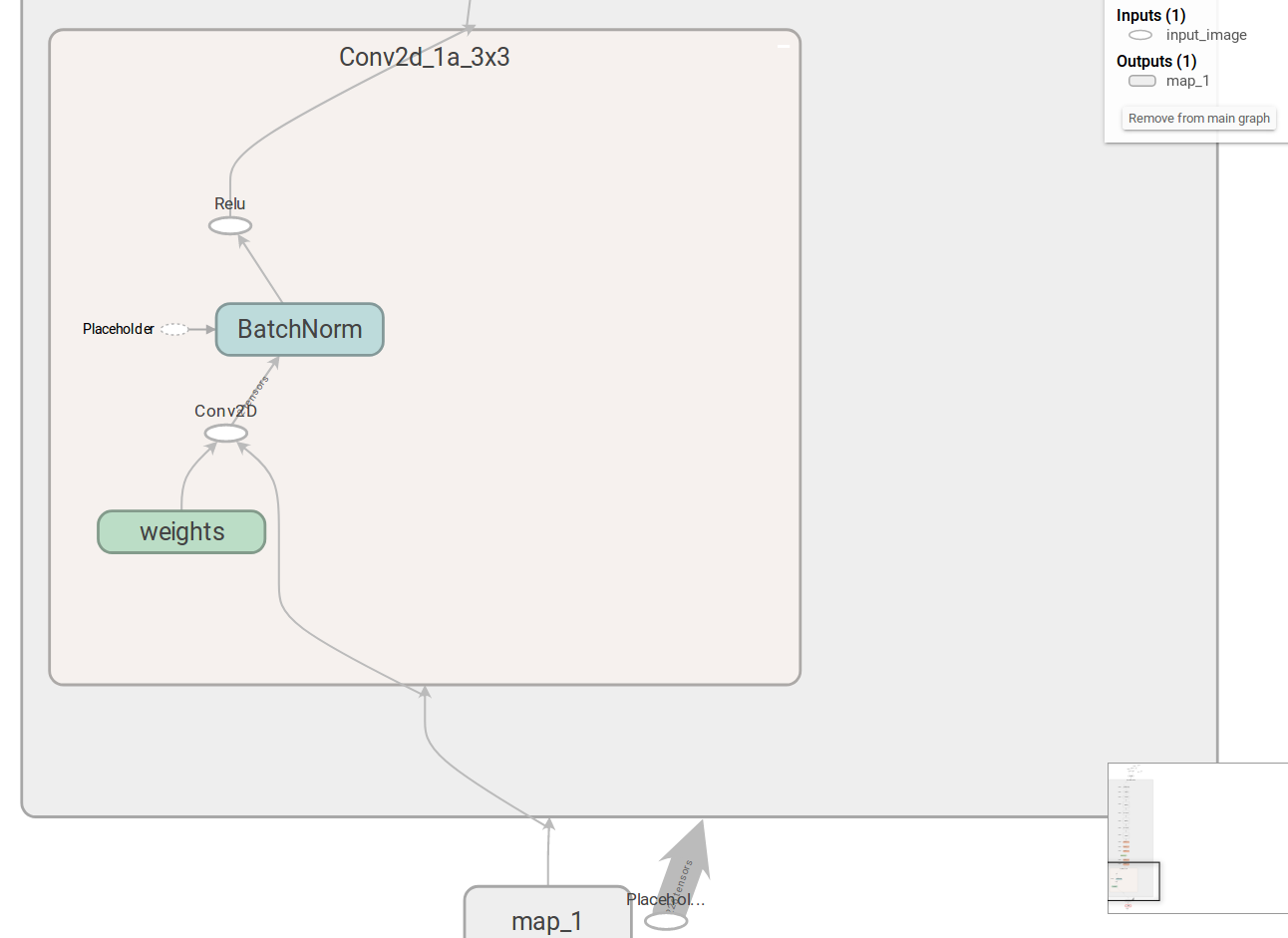

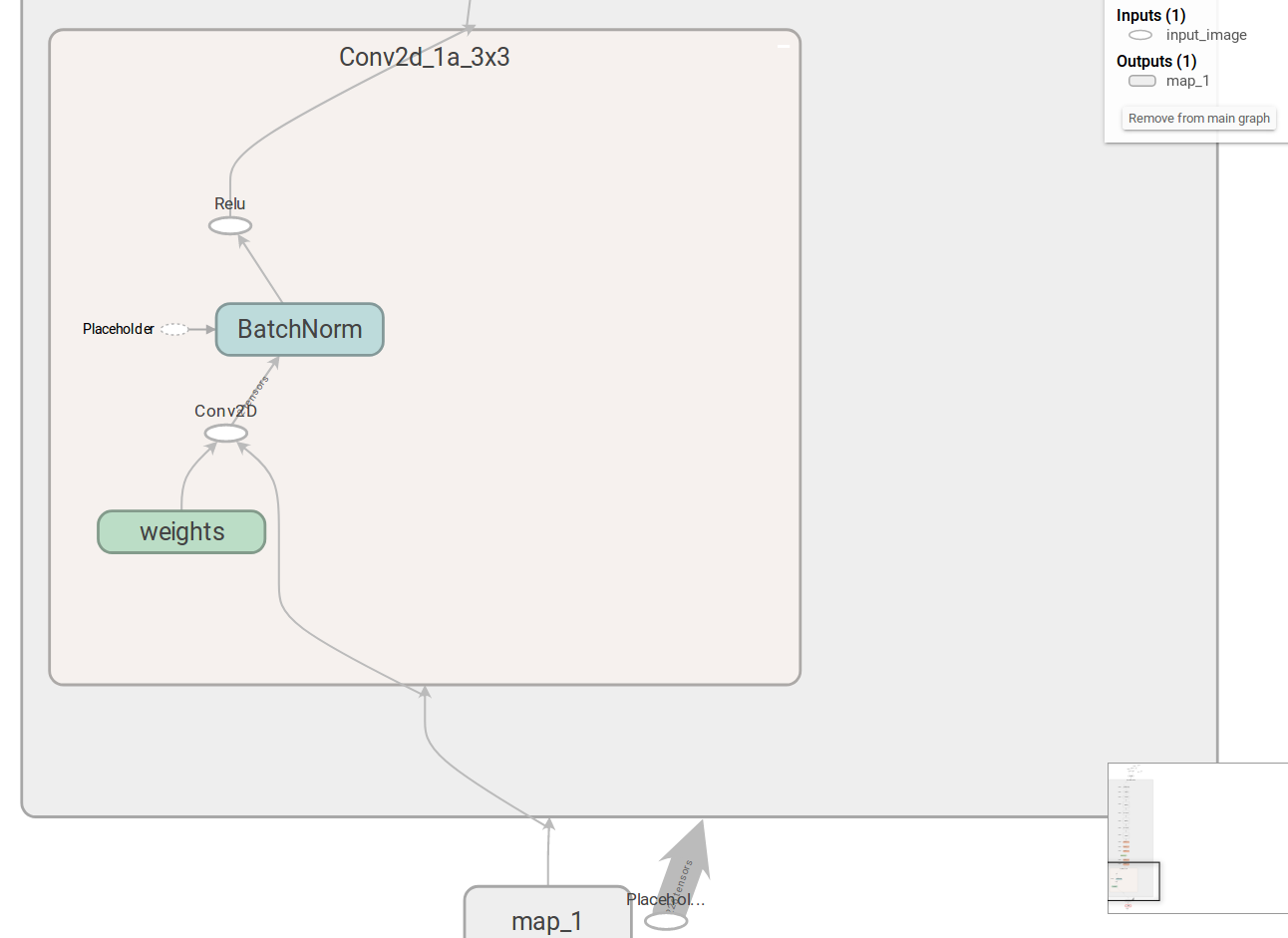

Using TensorBoard, check that our graph has no training subgraphs (compare with images above).

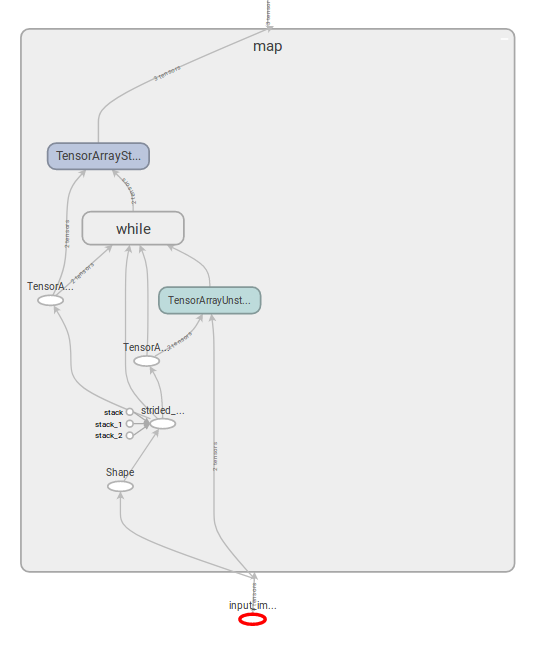

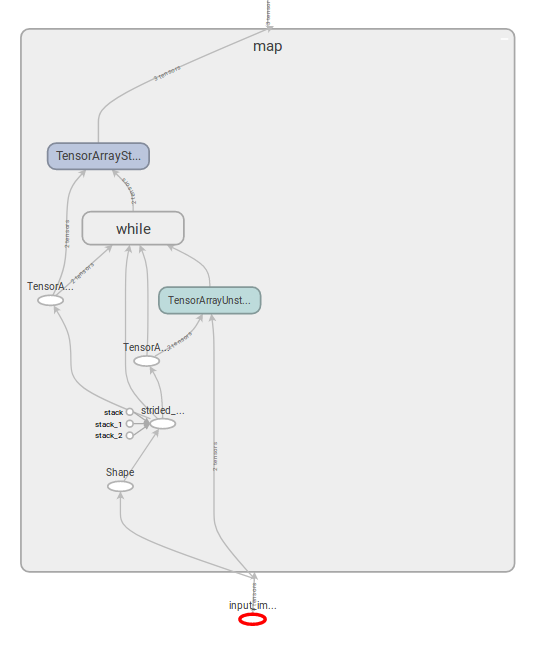

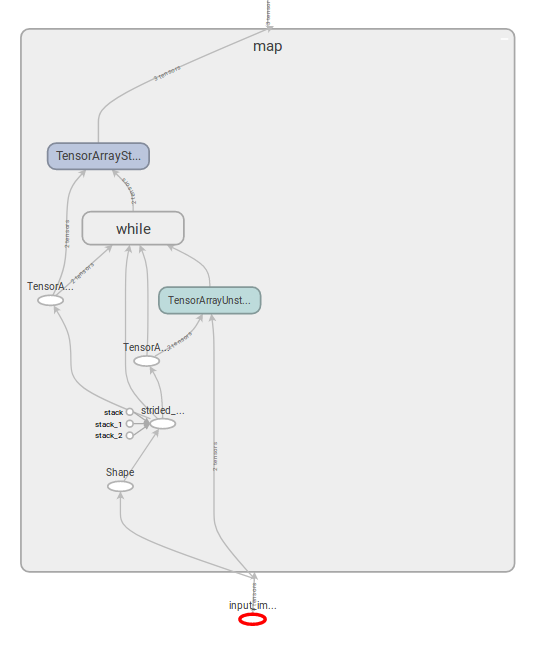

Unfortunately, the current version of OpenCV cannot interpret this graph correctly because of a single Reshape layer that takes a dynamically computed target shape.

Actually, it is just a flattening: a reshape from a 4-dimensional blob to a 2-dimensional one that keeps the same batch size. We cannot manage it during graph definition because it happens outside the user's code:

    # inception_resnet_v1.py, line 262:
    net = slim.fully_connected(net, bottleneck_layer_size, activation_fn=None,
                               scope='Bottleneck', reuse=False)
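To make the flattening concrete, here is a minimal pure-Python sketch of what such a reshape does to a blob's shape and contents. The helper names `flatten_shape` and `flatten_blob` and the example shape are assumptions for illustration; they are not part of TensorFlow, OpenCV, or the model's code.

```python
from functools import reduce
from operator import mul


def flatten_shape(shape):
    """Return the 2-D shape after flattening: (batch, product of the rest)."""
    batch, rest = shape[0], shape[1:]
    return (batch, reduce(mul, rest, 1))


def flatten_blob(blob):
    """Flatten every sample of a nested-list blob into a single row."""
    def walk(x):
        if isinstance(x, list):
            for item in x:
                for leaf in walk(item):
                    yield leaf
        else:
            yield x
    return [list(walk(sample)) for sample in blob]


# Illustrative shape only; it is not taken from the actual network.
example_shape = (2, 3, 3, 4)
flat_shape = flatten_shape(example_shape)
```

The batch dimension is preserved and all remaining dimensions collapse into one, which is why the target shape does not really need to be computed dynamically.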

But we can help OpenCV to manage it by modifying a text graph.

    import tensorflow as tf

    # Read the frozen graph (binary protobuf).
    with tf.gfile.FastGFile('frozen_inception_resnet_v1.pb', 'rb') as f:
        graph_def = tf.GraphDef()
        graph_def.ParseFromString(f.read())

    # Remove Const nodes and strip attributes that are not needed in the text graph.
    for i in reversed(range(len(graph_def.node))):
        if graph_def.node[i].op == 'Const':
            del graph_def.node[i]
            # Index i now refers to a node that was already processed (or is out of range).
            continue
        for attr in ['T', 'data_format', 'Tshape', 'N', 'Tidx', 'Tdim',
                     'use_cudnn_on_gpu', 'Index', 'Tperm', 'is_training',
                     'Tpaddings']:
            if attr in graph_def.node[i].attr:
                del graph_def.node[i].attr[attr]

    # Save as text.
    tf.train.write_graph(graph_def, "", "frozen_inception_resnet_v1.pbtxt", as_text=True)

Remove the Shape node:

    node {
      name: "InceptionResnetV1/Bottleneck/BatchNorm/Shape"
      op: "Shape"
      input: "InceptionResnetV1/Bottleneck/MatMul"
      attr {
        key: "out_type"
        value {
          type: DT_INT32
        }
      }
    }

Replace Reshape with Identity:

from

    node {
      name: "InceptionResnetV1/Bottleneck/BatchNorm/Reshape_1"
      op: "Reshape"
      input: "InceptionResnetV1/Bottleneck/BatchNorm/FusedBatchNorm"
      input: "InceptionResnetV1/Bottleneck/BatchNorm/Shape"
    }

to

    node {
      name: "InceptionResnetV1/Bottleneck/BatchNorm/Reshape_1"
      op: "Identity"
      input: "InceptionResnetV1/Bottleneck/BatchNorm/FusedBatchNorm"
    }

Also replace the following node:

from

    node {
      name: "InceptionResnetV1/Bottleneck/BatchNorm/Reshape"
      op: "Reshape"
      input: "InceptionResnetV1/Bottleneck/MatMul"
      input: "InceptionResnetV1/Bottleneck/BatchNorm/Reshape/shape"
    }

to

    node {
      name: "InceptionResnetV1/Bottleneck/BatchNorm/Reshape"
      op: "Identity"
      input: "InceptionResnetV1/Bottleneck/MatMul"
    }

Additionally, remove the nodes with these names:

    InceptionResnetV1/Logits/Flatten/flatten/Shape
    InceptionResnetV1/Logits/Flatten/flatten/strided_slice
    InceptionResnetV1/Logits/Flatten/flatten/Reshape/shape

Replace the node named InceptionResnetV1/Logits/Flatten/flatten/Reshape:

from

    node {
      name: "InceptionResnetV1/Logits/Flatten/flatten/Reshape"
      op: "Reshape"
      input: "InceptionResnetV1/Logits/AvgPool_1a_8x8/AvgPool"
      input: "InceptionResnetV1/Logits/Flatten/flatten/Reshape/shape"
    }

to

    node {
      name: "InceptionResnetV1/Logits/Flatten/flatten/Reshape"
      op: "Flatten"
      input: "InceptionResnetV1/Logits/AvgPool_1a_8x8/AvgPool"
    }
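The manual edits above follow one pattern: drop the shape-computing nodes, then rewrite each Reshape so that it keeps only its data input. A rough sketch of that pattern is shown here on plain Python dicts. The dict layout and the `simplify_nodes` helper are assumptions standing in for the .pbtxt node entries; this is not the protobuf API, and only three of the nodes are included.

```python
def simplify_nodes(nodes, remove, replace_op):
    """Drop the nodes named in `remove` and rewrite ops listed in `replace_op`.

    `nodes` is a list of dicts with 'name', 'op' and 'input' keys, a
    hypothetical stand-in for the node entries of the .pbtxt file.
    Rewritten nodes keep only their first (data) input, which discards
    the dynamically computed target shape.
    """
    result = []
    for node in nodes:
        if node['name'] in remove:
            continue
        node = dict(node)  # copy so the caller's list is left untouched
        if node['name'] in replace_op:
            node['op'] = replace_op[node['name']]
            node['input'] = node['input'][:1]
        result.append(node)
    return result


prefix = 'InceptionResnetV1/'
nodes = [
    {'name': prefix + 'Bottleneck/BatchNorm/Shape', 'op': 'Shape',
     'input': [prefix + 'Bottleneck/MatMul']},
    {'name': prefix + 'Bottleneck/BatchNorm/Reshape_1', 'op': 'Reshape',
     'input': [prefix + 'Bottleneck/BatchNorm/FusedBatchNorm',
               prefix + 'Bottleneck/BatchNorm/Shape']},
    {'name': prefix + 'Logits/Flatten/flatten/Reshape', 'op': 'Reshape',
     'input': [prefix + 'Logits/AvgPool_1a_8x8/AvgPool',
               prefix + 'Logits/Flatten/flatten/Reshape/shape']},
]

edited = simplify_nodes(
    nodes,
    remove={prefix + 'Bottleneck/BatchNorm/Shape'},
    replace_op={
        prefix + 'Bottleneck/BatchNorm/Reshape_1': 'Identity',
        prefix + 'Logits/Flatten/flatten/Reshape': 'Flatten',
    },
)
```

Applying the same idea to the real text graph would still require editing the .pbtxt file itself, by hand or with a script.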

Then check that OpenCV and TensorFlow produce the same outputs:

    import numpy as np
    import cv2 as cv
    import tensorflow as tf

    graph = 'frozen_inception_resnet_v1.pb'
    config = 'frozen_inception_resnet_v1.pbtxt'

    cvNet = cv.dnn.readNetFromTensorflow(graph, config)

    with tf.gfile.FastGFile(graph, 'rb') as f:
        graph_def = tf.GraphDef()
        graph_def.ParseFromString(f.read())

    with tf.Session() as sess:
        # Restore session
        sess.graph.as_default()
        tf.import_graph_def(graph_def, name='')

        # Run TensorFlow on a fixed random input.
        np.random.seed(324)
        inp = np.random.standard_normal([1, 160, 160, 3]).astype(np.float32)
        out = sess.run([sess.graph.get_tensor_by_name('logits/age/BiasAdd:0'),
                        sess.graph.get_tensor_by_name('logits/gender/BiasAdd:0')],
                       feed_dict={'input_image:0': inp})

        # Run OpenCV on the same input, converted from NHWC to NCHW.
        cvNet.setInput(inp.transpose(0, 3, 1, 2))
        cvOut = cvNet.forward(['logits/age/MatMul', 'logits/gender/MatMul'])

        # Maximum absolute difference between the two frameworks.
        print(np.max(np.abs(cvOut[0] - out[0])))
        print(np.max(np.abs(cvOut[1] - out[1])))
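The transpose(0, 3, 1, 2) call in the script above is needed because TensorFlow feeds the image as NHWC while OpenCV expects NCHW. A small pure-Python sketch of that reordering and of the maximum-difference check follows. The helpers `nhwc_to_nchw` and `max_abs_diff` are hypothetical names used only for illustration.

```python
def nhwc_to_nchw(blob):
    """Reorder a nested-list blob from [N][H][W][C] to [N][C][H][W]."""
    out = []
    for sample in blob:
        height = len(sample)
        width = len(sample[0])
        channels = len(sample[0][0])
        out.append([[[sample[y][x][c] for x in range(width)]
                     for y in range(height)]
                    for c in range(channels)])
    return out


def max_abs_diff(a, b):
    """Largest absolute element-wise difference between two flat sequences."""
    return max(abs(x - y) for x, y in zip(a, b))


# One 1x2 "image" with 3 channels: pixels (1, 2, 3) and (4, 5, 6).
nhwc = [[[[1, 2, 3], [4, 5, 6]]]]
nchw = nhwc_to_nchw(nhwc)
```

After the reordering, each channel becomes its own 1x2 plane, which is the layout passed to setInput.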

Note that this is a rather crude solution, but at least it works with the latest master branch. You may download the resulting .pbtxt here. BTW, you can also skip all the steps above and use frozenGraph_ageGender.pb with this text file (cvNet = cv.dnn.readNetFromTensorflow('frozenGraph_ageGender.pb', 'frozen_inception_resnet_v1.pbtxt')).
