|

2015-10-06 15:52:22 -0600

| answered a question | Insert binary threshold image (CV_8UC1) into a ROI of a coloured mat (CV_8UC4)? |

|

2015-10-04 05:04:52 -0600

| asked a question | Insert binary threshold image (CV_8UC1) into a ROI of a coloured mat (CV_8UC4)?

Hello. I've got a sequence of images of type CV_8UC4, each of HD size (1280x720).

I'm running background/foreground segmentation (MOG2 specifically) on a ROI of each image.

When the algorithm finishes, I get a binary image of type CV_8UC1 that has the size of the ROI.

I want to insert this binary image back into the original big image. How can I do this? Here's what I'm doing (the code is simplified for the sake of readability):

```cpp
// cvImage is the big Mat coming from outside
cv::Mat roi(cvImage, cv::Rect(200, 200, 400, 400));

cv::Ptr<cv::BackgroundSubtractorMOG2> mog2 = cv::createBackgroundSubtractorMOG2();

cv::Mat fgMask;

mog2->apply(roi, fgMask); // Here fgMask is the binary Mat that corresponds to the ROI size
```

So, how can I insert fgMask back into the original image?

How do I do this CV_8UC1 -> CV_8UC4 conversion only for the ROI? Thank you. |
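In OpenCV, `roi` is a header that shares pixels with `cvImage` and already has the right size and type (400x400, CV_8UC4). That means `cv::cvtColor(fgMask, roi, cv::COLOR_GRAY2BGRA)` should write the converted mask straight into `cvImage` without reallocating; it is worth verifying this on your OpenCV version. The sketch below is plain C++ with no OpenCV. It shows what that in-place write amounts to: each mask value is copied into B, G and R of the matching pixel inside the rectangle, and alpha is set to 255. `Image8u` and `insertMaskIntoRoi` are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for a continuous 8-bit image (like CV_8UC1 or CV_8UC4):
// row-major pixels, channels interleaved.
struct Image8u {
    int rows, cols, channels;
    std::vector<std::uint8_t> data;

    Image8u(int r, int c, int ch)
        : rows(r), cols(c), channels(ch), data(static_cast<std::size_t>(r) * c * ch, 0) {}

    std::uint8_t* ptr(int y, int x) {
        return &data[(static_cast<std::size_t>(y) * cols + x) * channels];
    }
    const std::uint8_t* ptr(int y, int x) const {
        return &data[(static_cast<std::size_t>(y) * cols + x) * channels];
    }
};

// Write a 1-channel mask into the rectangle starting at (x0, y0) of a 4-channel
// image: B, G and R all receive the mask value and alpha is set to 255.
// Pixels outside the rectangle are left untouched. Returns false if the
// channel counts are wrong or the rectangle does not fit.
bool insertMaskIntoRoi(const Image8u& mask, Image8u& dst, int x0, int y0) {
    if (mask.channels != 1 || dst.channels != 4) return false;
    if (x0 < 0 || y0 < 0 || x0 + mask.cols > dst.cols || y0 + mask.rows > dst.rows)
        return false;
    for (int y = 0; y < mask.rows; ++y) {
        for (int x = 0; x < mask.cols; ++x) {
            const std::uint8_t v = *mask.ptr(y, x);
            std::uint8_t* px = dst.ptr(y0 + y, x0 + x);
            px[0] = v;    // B
            px[1] = v;    // G
            px[2] = v;    // R
            px[3] = 255;  // alpha: opaque
        }
    }
    return true;
}
```

The function writes through offsets into the big buffer instead of building a separate 4-channel copy. An OpenCV ROI header relies on the same idea: the rectangle is a view into the big image's memory.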

|

2015-09-23 05:47:09 -0600

| commented answer | Xcode 7 does not build project with opencv 3.0.0 framework

No, this does not help.

OpenCV 2.4.10 for iOS works fine in exactly the same setup. |

|

2015-09-23 04:23:23 -0600

| received badge | ● Editor


|

|

2015-09-23 04:21:02 -0600

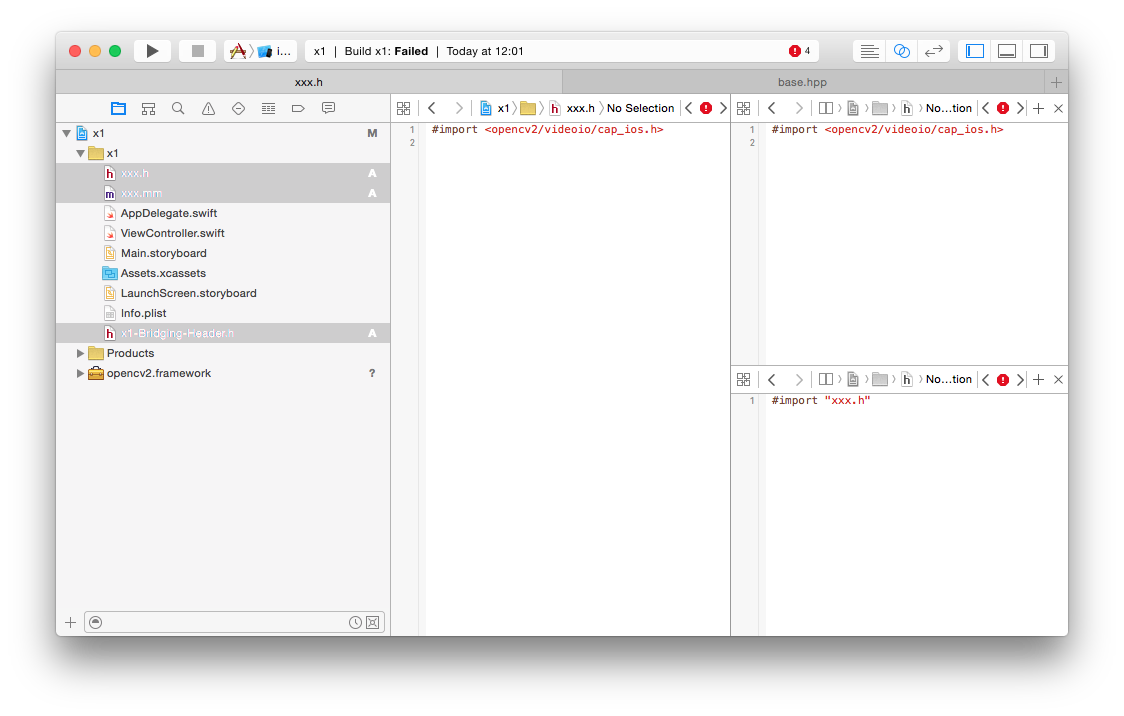

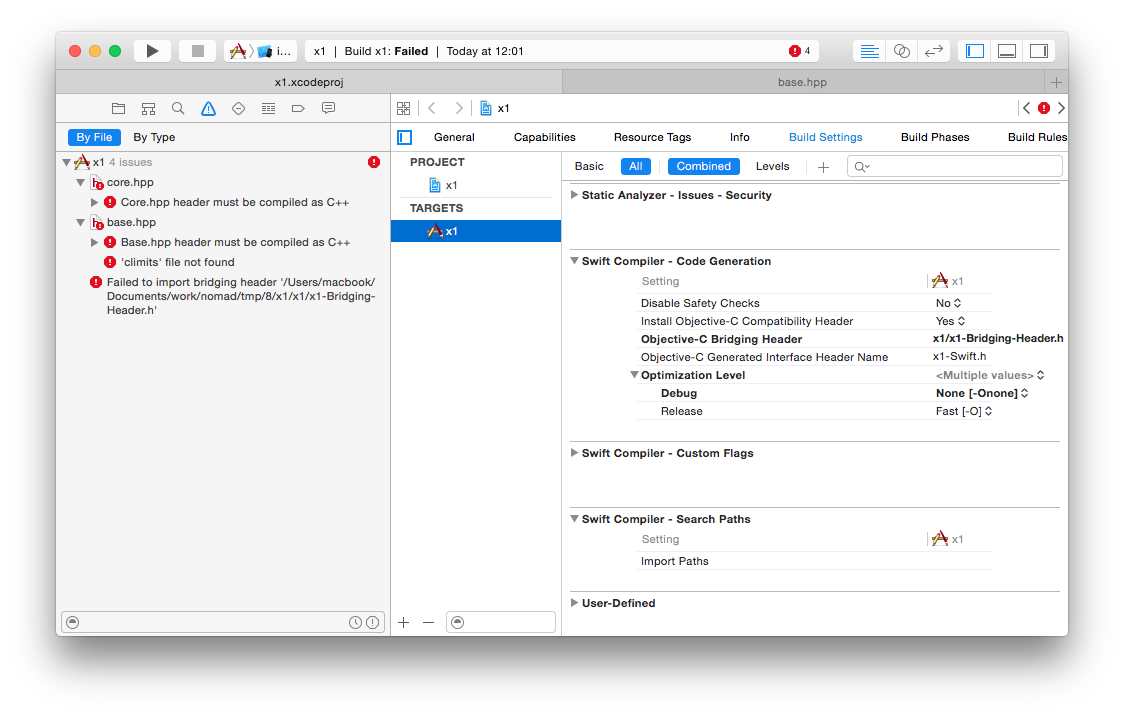

| asked a question | Xcode 7 does not build project with opencv 3.0.0 framework I can't build project with a new version of the opencv 3.0.0 framework (version 2 did not have this problem).

Xcode 7 does not compile c++ sources as c++. Here's the simplest setup that is not building: - Download the 3.0.0 framework from here http://netix.dl.sourceforge.net/proje...

- Create the simplest ios project with Swift language.

- Drag-and-drop the framework to the project.

- Create the

Objective-C++ source and headers and add them to the project. - Create the bridging header.

Here's the setup:

- Build.

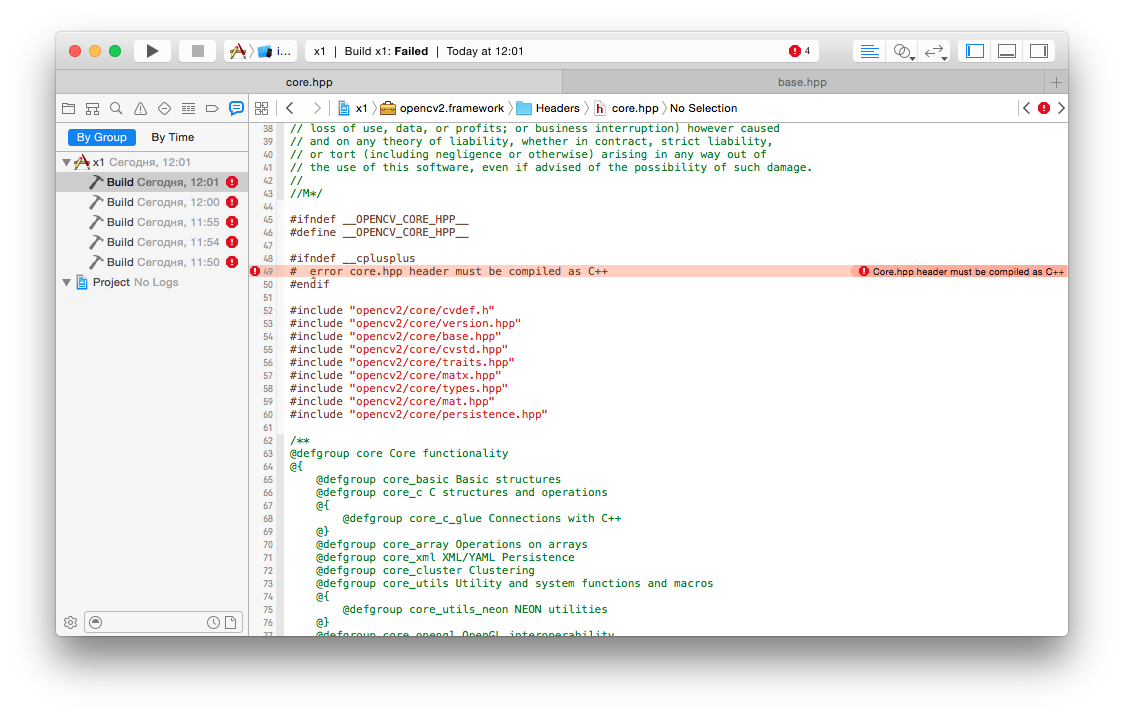

And here's what the compiler says: opencv2.framework/Headers/core/base.hpp:49:4: error: base.hpp header must be compiled as C++

Why is that? I've got the .mm file and it is supposed to compile as the Objective-C++ source. |

|

2015-09-23 02:10:16 -0600

| received badge | ● Enthusiast

|

|

2015-08-20 04:58:34 -0600

| commented question | What are additions in OpenCV4Tegra?

Steven, could you give a few more details on "optimized for architecture"? Does it mean optimized for the CPU or for the GPU? Here's NVIDIA's list http://docs.nvidia.com/gameworks/inde... and it says nothing about GPU optimizations. I'm a bit confused. Thanks. |

|

2015-08-19 07:15:12 -0600

| asked a question | What are additions in OpenCV4Tegra?

Hello. I'm studying OpenCV and running the examples on an NVIDIA Jetson TK1 dev board, which has the Tegra K1 SoC. NVIDIA's SDK comes with its own OpenCV distribution, OpenCV4Tegra. As I understand it, it is actually OpenCV 2.4.10 with some additions from NVIDIA. So what's the difference between standard OpenCV 2.4.10 and OpenCV4Tegra? Thank you. |

|

2015-08-07 09:13:49 -0600

| received badge | ● Scholar


|

|

2015-08-07 09:12:02 -0600

| commented answer | GaussianBlur and Canny execution times are much longer on T-API

Laurent, thank you for such helpful comments. I will mark your comment as the answer.

Regarding your question: I actually don't know. I'm just starting out, so I can't tell yet whether it's the card or something else. I will investigate it further. By the way, I'm going to test all of this on an NVIDIA Jetson TK1 with the Tegra chip. I can share the results with you if you want. |

|

2015-08-07 08:51:14 -0600

| commented answer | GaussianBlur and Canny execution times are much longer on T-API

OK, now I used the aloeL image, and the results changed dramatically in favor of OpenCL. Here are the results without OpenCL / with OpenCL for cvtColor (0), Blur (1), Canny (2):

```
test 0 = 2.43725(+/-0.370315) /0.204953(+/-0.219866)
test 1 = 6.33891(+/-1.01956) /2.03366(+/-14.2318)
test 2 = 26.6822(+/-2.07312) /2.30163(+/-8.92984)
```

So what's the verdict? Does OpenCL have a large overhead when processing small images? Correct me if I'm wrong. |
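The (+/-) values above are spreads over repeated runs. With OpenCL, the Blur and Canny spreads are several times larger than their means. For non-negative timings, that suggests a few very slow runs dominate; one-time setup such as OpenCL kernel compilation on the first call is a plausible cause, though the thread does not confirm it. Below is a minimal sketch of summarizing timings with the first `warmup` samples excluded. `summarize`, `TimingSummary` and the use of the population standard deviation are assumptions for illustration, not necessarily what the test program computes.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct TimingSummary {
    double mean = 0.0;
    double stddev = 0.0;  // population standard deviation
};

// Summarize per-run timings, skipping the first `warmup` samples
// (for example, a first call that also pays one-time setup costs).
TimingSummary summarize(const std::vector<double>& samples, std::size_t warmup = 0) {
    TimingSummary s;
    if (samples.size() <= warmup) return s;  // nothing left to summarize
    const std::size_t n = samples.size() - warmup;
    double sum = 0.0;
    for (std::size_t i = warmup; i < samples.size(); ++i) sum += samples[i];
    s.mean = sum / n;
    double sq = 0.0;
    for (std::size_t i = warmup; i < samples.size(); ++i) {
        const double d = samples[i] - s.mean;
        sq += d * d;
    }
    s.stddev = std::sqrt(sq / n);
    return s;
}
```

Reporting results both with and without the warm-up samples would show how much of the OpenCL spread comes from the first call alone.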

|

2015-08-07 08:06:30 -0600

| commented answer | GaussianBlur and Canny execution times are much longer on T-API

Here are my results of your test:

```
1 GPU devices are detected.
name : GeForce GT 330M
available : 1
imageSupport : 1
OpenCL_C_Version : OpenCL C 1.1
getNumberOfCPUs [4] getNumThreads [512]
...
Without opencl/ with opencl for cvtColor(0),Blur(1),Canny(2)
test 0 = 0.193865(+/-0.113415) /0.393258(+/-0.792693)
test 1 = 0.391398(+/-0.222591) /1.38872(+/-1.74931)
test 2 = 0.908895(+/-0.257745) /2.11392(+/-4.36291)
```

And what are yours? |

|

2015-08-07 05:56:53 -0600

| commented answer | GaussianBlur and Canny execution times are much longer on T-API

The file size is 15K. It is actually the lena.jpg that comes with the OpenCV samples. The sizes of the matrices, as shown in the debugger:

```
image cv::Mat
  dims int 2 2
  rows int 225 225
  cols int 200 200
uimage cv::UMat
  dims int 2 2
  rows int 225 225
  cols int 200 200
```

|

|

2015-08-07 04:10:03 -0600

| received badge | ● Student


|

|

2015-08-07 04:04:00 -0600

| commented answer | GaussianBlur and Canny execution times are much longer on T-API

I did exactly what you said, and the results are even more confusing:

```
cvtColor ms [0.242088]
GaussianBlur ms [0.580397]
Canny ms [1.18715]
= Total [2.00964]

TAPI results
TAPI cvtColor ms [14.6621]
TAPI GaussianBlur ms [14.7547]
TAPI Canny ms [181.67]
= Total [211.087]
```

My understanding was that the Mat versions should run slower than the UMat versions, even with OpenCL explicitly turned off in the first case. But it's exactly the other way around. I'm really confused. |
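One plausible explanation, not confirmed in this thread, is that each number comes from a single call. The first UMat call of a function usually includes building its OpenCL kernel, and UMat calls may return before the GPU work has finished, so single-shot timings can mislead in either direction. Below is a minimal, OpenCV-free sketch of a timing helper. It runs untimed warm-up iterations first and then records each timed run separately. `timeRunsMs` is a hypothetical name.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <vector>

// Call `op` `warmup` times without timing it (absorbing one-time costs such as
// lazy initialization), then time `runs` further calls individually.
// Returns the duration of each timed call in milliseconds.
template <typename Op>
std::vector<double> timeRunsMs(Op&& op, std::size_t warmup, std::size_t runs) {
    using Clock = std::chrono::steady_clock;
    for (std::size_t i = 0; i < warmup; ++i) op();
    std::vector<double> ms;
    ms.reserve(runs);
    for (std::size_t i = 0; i < runs; ++i) {
        const auto t0 = Clock::now();
        op();
        const auto t1 = Clock::now();
        ms.push_back(std::chrono::duration<double, std::milli>(t1 - t0).count());
    }
    return ms;
}
```

For the UMat path, the callable might look like `[&] { cv::Canny(ugray, uedges, 0, 50); cv::ocl::finish(); }`, where `uedges` is a hypothetical separate output UMat. `cv::ocl::finish()` waits for queued OpenCL commands, so the measured time then includes the GPU work itself.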

|

2015-08-07 03:24:23 -0600

| asked a question | GaussianBlur and Canny execution times are much longer on T-API

Hello. I've just started learning OpenCV 3. I'm on OS X Yosemite. Here's the GPU part of my clinfo output:

```
Device Name GeForce GT 330M
Device Vendor NVIDIA
Device Vendor ID 0x1022600
Device Version OpenCL 1.0
Driver Version 10.0.31 310.90.10.05b12
Device OpenCL C Version OpenCL C 1.1
Device Type GPU
Device Profile FULL_PROFILE
Max compute units 6
Max clock frequency 1100MHz
Max work item dimensions 3
Max work item sizes 512x512x64
Max work group size 512
Preferred work group size multiple 32
Preferred / native vector sizes
  char 1 / 1
  short 1 / 1
  int 1 / 1
  long 1 / 1
  half 0 / 0 (n/a)
  float 1 / 1
  double 0 / 0 (n/a)
Half-precision Floating-point support (n/a)
Single-precision Floating-point support (core)
  Denormals No
  Infinity and NANs Yes
  Round to nearest Yes
  Round to zero Yes
  Round to infinity Yes
  IEEE754-2008 fused multiply-add No
  Support is emulated in software No
  Correctly-rounded divide and sqrt operations No
Double-precision Floating-point support (n/a)
Address bits 32, Little-Endian
Global memory size 268435456 (256MiB)
Error Correction support No
Max memory allocation 134217728 (128MiB)
Unified memory for Host and Device No
Minimum alignment for any data type 128 bytes
Alignment of base address 1024 bits (128 bytes)
Global Memory cache type None
Image support Yes
  Max number of samplers per kernel 16
  Max 2D image size 4096x4096 pixels
  Max 3D image size 2048x2048x2048 pixels
  Max number of read image args 128
  Max number of write image args 8
Local memory type Local
Local memory size 16384 (16KiB)
Max constant buffer size 65536 (64KiB)
Max number of constant args 9
Max size of kernel argument 4352 (4.25KiB)
Queue properties
  Out-of-order execution No
  Profiling Yes
Profiling timer resolution 1000ns
Execution capabilities
  Run OpenCL kernels Yes
  Run native kernels No
Device Available Yes
Compiler Available Yes
Device Extensions cl_APPLE_SetMemObjectDestructor cl_APPLE_ContextLoggingFunctions cl_APPLE_clut cl_APPLE_query_kernel_names cl_APPLE_gl_sharing cl_khr_gl_event cl_khr_byte_addressable_store cl_khr_global_int32_base_atomics cl_khr_global_int32_extended_atomics cl_khr_local_int32_base_atomics cl_khr_local_int32_extended_atomics
```

I wrote a little program to test the T-API, and it turns out that GaussianBlur and Canny take much longer to execute with the T-API. Here's the code. It loads an image, converts it to grayscale and applies the two filters, first without and then with the T-API:

```cpp
double totalTime = 0;

int64 start = getTickCount();
cvtColor(image, gray, COLOR_BGR2GRAY);
double timeMs = (getTickCount() - start) / getTickFrequency() * 1000;
totalTime += timeMs;
cout << "cvtColor ms [" << timeMs << "]" << endl;

start = getTickCount();
GaussianBlur(gray, gray, Size(7, 7), 1.5);
timeMs = (getTickCount() - start) / getTickFrequency() * 1000;
totalTime += timeMs;
cout << "GaussianBlur ms [" << timeMs << "]" << endl;

start = getTickCount();
Canny(gray, gray, 0, 50);
timeMs = (getTickCount() - start) / getTickFrequency() * 1000;
totalTime += timeMs;
cout << "Canny ms [" << timeMs << "]" << endl;

cout << "= Total [" << totalTime << "]" << endl;

// TAPI
cout << endl << "TAPI results" << endl;
totalTime = 0;

UMat uimage;
UMat ugray;
imread(argv[1], CV_LOAD_IMAGE_COLOR).copyTo(uimage);

start = getTickCount();
cvtColor(uimage, ugray, COLOR_BGR2GRAY);
timeMs = (getTickCount() - start) / getTickFrequency() * 1000;
totalTime += timeMs;
cout << "TAPI cvtColor ms [" << timeMs << "]" << endl;

start = getTickCount();
GaussianBlur(ugray, ugray, Size(7, 7), 1.5);
timeMs = (getTickCount() - start) / getTickFrequency() * 1000;
totalTime += timeMs;
cout << "TAPI GaussianBlur ms [" << timeMs << "]" << endl;

start = getTickCount();
Canny(ugray, ugray, 0, 50);
timeMs = (getTickCount() - start) / getTickFrequency ...
```

|