I have a fisheye lens with a field of view of about 180 degrees. The goal is to detect faces as accurately as possible (especially near the edges) by first undistorting the image. I understand it's unrealistic to expect a correction that reaches all the way to the edges of a 180-degree image, but I'd like to at least get relatively close.

In OpenCV (v3.1 if that matters), I tried to use calibrate.py to do this. It detects the checkerboard pattern in many of the calibration images I took, but it has trouble with the ones where the distortion is more extreme. The end result is a camera matrix and distortion coefficients that correct the center fairly well but do little for the edges.

Is there some straightforward way to manually tweak the matrices to get the desired correction or is there another good approach to this? I am hoping for an intuitive way to tweak the numbers to do it qualitatively without having to understand it much from a math standpoint.

What I got from calibrate.py is a camera matrix of

[[537.04, 0, 961.19], [0, 536.42 , 506.01], [0, 0, 1]]

and distortion coefficients of

[-2.897e-01, 7.527e-02, 0, 0, 0]
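
For reference, a minimal sketch of what these numbers mean, assuming OpenCV's standard (non-fisheye) model, where the five coefficients are ordered (k1, k2, p1, p2, k3) and an ideal pinhole ray at angle theta off-axis sits at normalized radius r = tan(theta). The helper names `radial_scale` and `pixel_offset` are made up for illustration, and only the horizontal focal length is used.

```python
import math

FX = 537.04  # horizontal focal length in pixels, from the camera matrix above
K1, K2, P1, P2, K3 = -2.897e-01, 7.527e-02, 0.0, 0.0, 0.0  # OpenCV order: k1, k2, p1, p2, k3


def radial_scale(r):
    """Radial factor 1 + k1*r^2 + k2*r^4 + k3*r^6 applied to an ideal radius r."""
    r2 = r * r
    return 1.0 + K1 * r2 + K2 * r2 ** 2 + K3 * r2 ** 3


def pixel_offset(theta_deg):
    """Distance in pixels from the principal point where the model places a ray
    that is theta_deg off the optical axis (hypothetical helper)."""
    r = math.tan(math.radians(theta_deg))  # ideal pinhole radius, normalized
    return FX * r * radial_scale(r)


for theta_deg in (10, 30, 45, 60, 75):
    print("%2d deg off-axis -> %.1f px from the principal point"
          % (theta_deg, pixel_offset(theta_deg)))
```

Because r = tan(theta) grows without bound as theta approaches 90 degrees, the ideal pinhole image of a 180-degree view is infinitely large, which is why a full correction out to the edges is not possible with this model.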

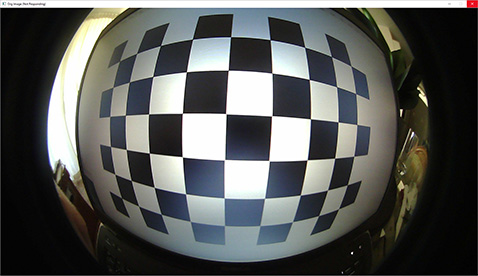

This takes an image like this (shown here at 25% size):

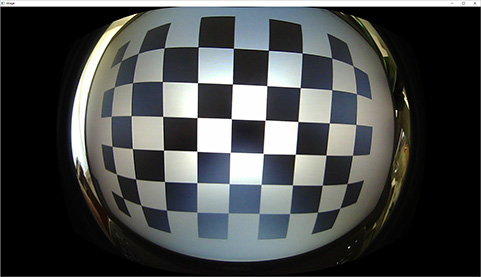

And corrects it to something like

This is certainly better than nothing, but not quite the desired level of correction.

In case it's helpful, here's the code to undistort the image (OpenCV 3.1 + Python 2.7). NOTE: this expects the images to be 1920x1080 (i.e. the native resolution of the camera).

    import cv2
    import numpy as np

    # Camera matrix and distortion coefficients reported by calibrate.py
    cammatrix = np.array([[537.04, 0, 961.19], [0, 536.42, 506.01], [0, 0, 1]])
    distcoeffs = np.array([-2.897e-01, 7.527e-02, 0, 0, 0])

    image = cv2.imread("PATH_TO_IMAGE.jpg")
    h, w = image.shape[:2]

    # alpha=1 keeps every source pixel in the undistorted output
    newcam, roi = cv2.getOptimalNewCameraMatrix(cammatrix, distcoeffs, (w, h), 1)
    newimage = cv2.undistort(image, cammatrix, distcoeffs, None, newcam)

    cv2.imshow("Orig Image", image)
    cv2.imshow("Image", newimage)
    cv2.waitKey(0)