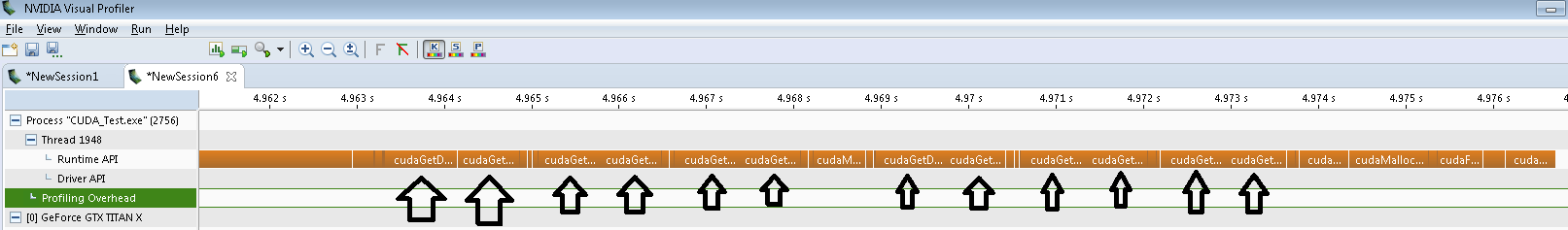

I am trying to move some of my processing to the GPU, and it is taking much longer than I expected. The NVIDIA profiler shows large gaps between the segments of work in the stream, and those gaps line up almost exactly with calls to getDeviceProperties, as shown in the profiler screenshot above.

Each call to getDeviceProperties takes about 300 µs, and there are two of them per operation, so that adds over half a millisecond of idle time between consecutive CUDA calls.

The operations in this section are a few calls to meanStdDev, subtract, scaleAdd, and convertTo (with scale and offset), so the delay affects more than one method. I can't find anywhere that getDeviceProperties is called directly, but it could be called implicitly somewhere in the call chain. meanStdDev is fairly easy to trace, but the call stacks of the arithmetic methods are hard to follow through all the templates and other indirection.

My own hand-written kernels make no call to getDeviceProperties, and they start with no delay after the previous operation.

Has anyone else seen this? Does anyone know why it happens, or how to stop it?