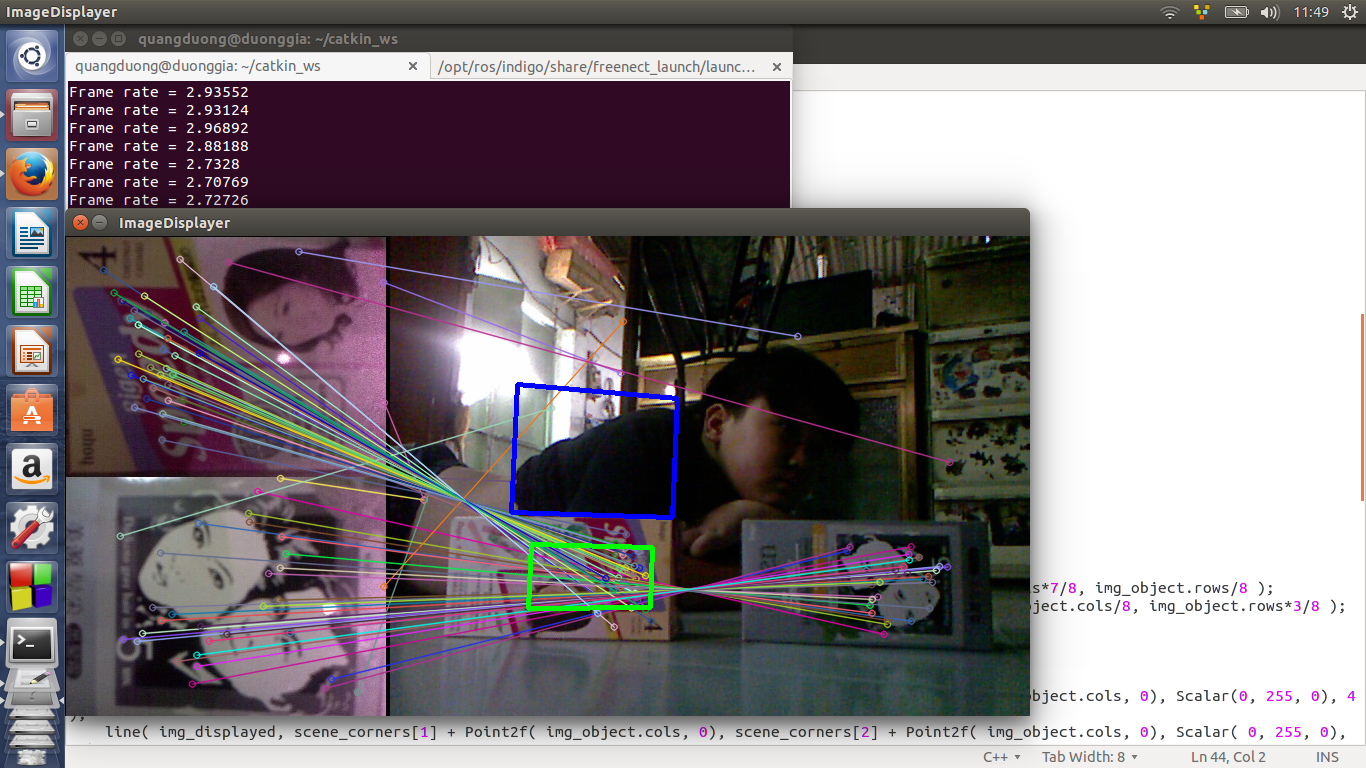

I would like to detect two objects using the SIFT algorithm, but I can't distinguish between the two objects. Can anyone help me?

This is my code:

#include <ros/ros.h>
#include <cv_bridge/cv_bridge.h>
#include <sensor_msgs/image_encodings.h>
#include <opencv2/opencv.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/nonfree/features2d.hpp>
#include <opencv2/features2d/features2d.hpp>

using namespace cv;
using namespace std;

SiftFeatureDetector detector;
SiftDescriptorExtractor extractor;
FlannBasedMatcher matcher;

Mat img_object, img_scene;
Mat img_object_g, img_scene_g;

vector<KeyPoint> keypoints_object, keypoints_scene;
Mat descriptors_object, descriptors_scene;

//////////////////////////////////////////////////////////////////////////////////
cv_bridge::CvImagePtr cv_ptr;

void image_cb (const sensor_msgs::ImageConstPtr& msg) {

double t0 = getTickCount ();

cv_ptr = cv_bridge::toCvCopy (msg, sensor_msgs::image_encodings::BGR8);

img_scene = cv_ptr->image;

cvtColor (img_scene, img_scene_g, CV_BGR2GRAY);

detector.detect (img_scene_g, keypoints_scene);

extractor.compute (img_scene_g, keypoints_scene, descriptors_scene);

std::vector< DMatch > matches;

matcher.match( descriptors_object, descriptors_scene, matches );

double max_dist = 0; double min_dist = 100;

for( int i = 0; i < descriptors_object.rows; i++ ) {

double dist = matches[i].distance;

if( dist < min_dist ) min_dist = dist;

if( dist > max_dist ) max_dist = dist;

}

std::vector< DMatch > good_matches;

for( int i = 0; i < descriptors_object.rows; i++ ) {

if( matches[i].distance < 3*min_dist ) {

good_matches.push_back( matches[i]);

}

}

Mat img_displayed;

Mat img_displayed_1;

// Draw the match lines and leave out the poor matches
drawMatches( img_object, keypoints_object, img_scene, keypoints_scene, good_matches, img_displayed,
    Scalar::all(-1), Scalar::all(-1), vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

//-- Localize the object

std::vector<Point2f> obj;

std::vector<Point2f> scene;

for( int i = 0; i < good_matches.size(); i++ ) {

obj.push_back( keypoints_object[ good_matches[i].queryIdx ].pt );

scene.push_back( keypoints_scene[ good_matches[i].trainIdx ].pt );

}

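// findHomography needs at least 4 point pairs; skip this frame otherwise
if( good_matches.size() < 4 ) return;

Mat H = findHomography( obj, scene, CV_RANSAC );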

//-- Get the corners from the image_1 ( the object to be "detected" )

std::vector<Point2f> obj_corners(4);

obj_corners[0] = cvPoint( img_object.cols/8, img_object.rows/8 );

obj_corners[1] = cvPoint( img_object.cols*7/8, img_object.rows/8 );

obj_corners[2] = cvPoint( img_object.cols*7/8, img_object.rows*3/8 );

obj_corners[3] = cvPoint( img_object.cols/8, img_object.rows*3/8 );

std::vector<Point2f> scene_corners(4);

perspectiveTransform( obj_corners, scene_corners, H);

//-- Draw lines between the corners (the mapped object in the scene - image_2 )

line( img_displayed, scene_corners[0] + Point2f( img_object.cols, 0), scene_corners[1] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );

line( img_displayed, scene_corners[1] + Point2f( img_object.cols, 0), scene_corners[2] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );

line( img_displayed, scene_corners[2] + Point2f( img_object.cols, 0), scene_corners[3] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );

line( img_displayed, scene_corners[3] + Point2f( img_object.cols, 0), scene_corners[0] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );

//-- Get the corners from the image_2 ( the object to be "detected" )

std::vector<Point2f> obj_corners_2(4);

obj_corners_2[0] = cvPoint( 0, img_object.rows/2 );

obj_corners_2[1] = cvPoint( img_object.cols, img_object.rows/2 );

obj_corners_2[2] = cvPoint( img_object.cols, img_object.rows );

obj_corners_2[3] = cvPoint( 0, img_object.rows );

std::vector<Point2f> scene_corners_2(4);

perspectiveTransform( obj_corners_2, scene_corners_2, H);

//-- Draw lines between the corners (the mapped object in the scene - image_2 )

line( img_displayed, scene_corners_2[0] + Point2f( img_object.cols, 0), scene_corners_2[1] + Point2f( img_object.cols, 0), Scalar( 255, 0, 0), 4 );

line( img_displayed, scene_corners_2[1] + Point2f( img_object.cols, 0), scene_corners_2[2] + Point2f( img_object.cols, 0), Scalar( 255, 0, 0), 4 );

line( img_displayed, scene_corners_2[2] + Point2f( img_object.cols, 0), scene_corners_2[3] + Point2f( img_object.cols, 0), Scalar( 255, 0, 0), 4 );

line( img_displayed, scene_corners_2[3] + Point2f( img_object.cols, 0), scene_corners_2[0] + Point2f( img_object.cols, 0), Scalar( 255, 0, 0), 4 );

//imshow ("ImageDisplayer_1", img_displayed_1);

imshow ("ImageDisplayer", img_displayed);

cout << "Frame rate = " << getTickFrequency () / (getTickCount () - t0) << endl;

}

int main( int argc, char** argv ) {

ros::init (argc, argv, "VisionBasedLineFollowing");

ros::NodeHandle nh;

ros::Subscriber image_sub = nh.subscribe ("/camera/rgb/image_color", 1, image_cb);

namedWindow ("ImageDisplayer", CV_WINDOW_AUTOSIZE);

img_object = imread ("imageDst1.jpg");

cvtColor (img_object, img_object_g, CV_BGR2GRAY);

detector.detect (img_object_g, keypoints_object);

extractor.compute (img_object_g, keypoints_object, descriptors_object);

while (char (waitKey (1)) != 'q') {

ros::spinOnce ();

}

}
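One thing that may explain the problem: both boxes above are mapped into the scene with the same homography `H`, so they can only move together. A possible direction, sketched here under assumptions rather than tested in the node, is to split the good matches by which region of the reference image their object keypoint lies in, and then estimate one homography per group. The `Pt`, `Match`, `Region`, `PointPairs` types and the `splitMatchesByRegion` helper are hypothetical stand-ins (for `cv::Point2f`, `cv::DMatch`, and so on) so that the sketch compiles without OpenCV.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-ins for cv::Point2f and cv::DMatch (assumption:
// only the fields used below matter for this sketch).
struct Pt { float x, y; };
struct Match { int queryIdx, trainIdx; };

// Axis-aligned region of the reference image that contains one object.
struct Region {
    float x0, y0, x1, y1;
    bool contains(const Pt& p) const {
        return p.x >= x0 && p.x < x1 && p.y >= y0 && p.y < y1;
    }
};

// Point pairs (reference image -> scene) that belong to one object.
struct PointPairs {
    std::vector<Pt> obj;
    std::vector<Pt> scene;
};

// Split matches by the reference-image region their query keypoint lies in.
// Matches whose keypoint falls in no region are dropped.
std::vector<PointPairs> splitMatchesByRegion(
    const std::vector<Match>& matches,
    const std::vector<Pt>& objectPts,   // e.g. keypoints_object[i].pt
    const std::vector<Pt>& scenePts,    // e.g. keypoints_scene[i].pt
    const std::vector<Region>& regions)
{
    std::vector<PointPairs> groups(regions.size());
    for (std::size_t m = 0; m < matches.size(); ++m) {
        const Pt& o = objectPts[matches[m].queryIdx];
        const Pt& s = scenePts[matches[m].trainIdx];
        for (std::size_t r = 0; r < regions.size(); ++r) {
            if (regions[r].contains(o)) {
                groups[r].obj.push_back(o);
                groups[r].scene.push_back(s);
                break;  // each match goes to at most one object
            }
        }
    }
    return groups;
}
```

In the node, the two regions would presumably be the same rectangles used for `obj_corners` and `obj_corners_2`. Each group with at least 4 pairs could then be passed to its own `findHomography( ..., CV_RANSAC )` call, and each box transformed with its own matrix instead of the shared `H`. Using a separate reference image per object would be another option along the same lines.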