Hello everyone,

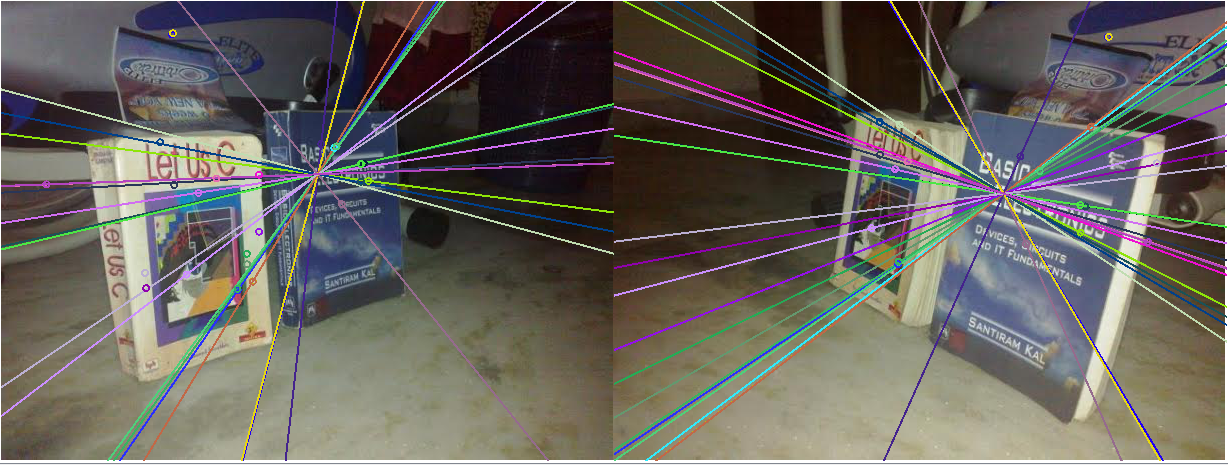

I was following the OpenCV Python tutorial on epipolar geometry and wanted to port it to C++ (Qt environment) in a small test program. Running it on the standard OpenCV dataset produces the scene shown above. The epilines do not seem to converge correctly. The fundamental matrix F was computed with the least-median (LMedS) algorithm; switching to the 8-point algorithm made little difference.

Can anyone shed some light on what might be going wrong?

Thanks in advance,

    int minHessian = 400;
    Ptr<SurfFeatureDetector> surf_ = SURF::create( minHessian );
    FlannBasedMatcher matcher;

    std::tie(img1, imgp1) = m_images[ui->cmbImage1->currentText()];
    std::tie(img2, imgp2) = m_images[ui->cmbImage2->currentText()];

    cvut::convertToGray(img1, img_gray_1);
    cvut::convertToGray(img2, img_gray_2);

    surf_->detect(img_gray_1, keypoints_1);
    surf_->detect(img_gray_2, keypoints_2);
    surf_->compute(img_gray_1, keypoints_1, descriptors_1);
    surf_->compute(img_gray_2, keypoints_2, descriptors_2);

    matcher.match( descriptors_1, descriptors_2, matches );

    vector< DMatch > good_matches;
    cvut::getGoodMatchesWithMinDist(matches, good_matches);

    DMatch match;
    for( size_t m = 0; m < good_matches.size(); m++ )
    {
        match = good_matches[m];
        pts1.push_back( keypoints_1[match.queryIdx].pt );
        pts2.push_back( keypoints_2[match.trainIdx].pt );
    }

    F = findFundamentalMat(pts1, pts2, FM_LMEDS);

    computeCorrespondEpilines(pts1, 1, F, lines2);
    computeCorrespondEpilines(pts2, 2, F, lines1);
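One check that might help separate an estimation problem from a drawing problem is to measure how far each matched point lies from its epiline before anything is drawn. The sketch below is plain C++ without OpenCV types. `Pt`, `Mat3`, `Vec3`, `epilineInImage2`, `pointLineDistance` and `meanEpipolarDistance` are hypothetical names introduced only for illustration. It assumes the convention documented for `findFundamentalMat(points1, points2)`, that is `[p2; 1]^T * F * [p1; 1] = 0`, so the epiline in image 2 for a point `p1` is `F * p1`.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper types for this sketch (not OpenCV types).
using Mat3 = std::array<std::array<double, 3>, 3>;
using Vec3 = std::array<double, 3>;
struct Pt { double x, y; };

// Epiline in image 2 for a point in image 1: l2 = F * [x, y, 1]^T,
// scaled so that a^2 + b^2 = 1 (a degenerate line is left unscaled).
Vec3 epilineInImage2(const Mat3& F, const Pt& p1)
{
    Vec3 l{};
    for (int r = 0; r < 3; ++r)
        l[r] = F[r][0] * p1.x + F[r][1] * p1.y + F[r][2];
    const double n = std::hypot(l[0], l[1]);
    if (n > 0.0)
        for (double& v : l) v /= n;
    return l;
}

// Distance in pixels from p to a line (a, b, c) with a^2 + b^2 = 1.
double pointLineDistance(const Vec3& l, const Pt& p)
{
    return std::abs(l[0] * p.x + l[1] * p.y + l[2]);
}

// Mean distance of each point in image 2 from the epiline of its match.
double meanEpipolarDistance(const Mat3& F,
                            const std::vector<Pt>& pts1,
                            const std::vector<Pt>& pts2)
{
    assert(pts1.size() == pts2.size());
    if (pts1.empty()) return 0.0;
    double sum = 0.0;
    for (std::size_t i = 0; i < pts1.size(); ++i)
        sum += pointLineDistance(epilineInImage2(F, pts1[i]), pts2[i]);
    return sum / static_cast<double>(pts1.size());
}
```

If the mean distance comes out at many pixels, the estimated F or outliers among the matches would be the first suspects. If it is small, the drawing code is the more likely place to look.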

And the drawing code:

    QPointF pt0, pt1;
    Vec3f r;
    qreal c0 = m_bbImg1.width();
    qreal c = m_bbImg1.width();

    for(int i = 0; i < lines1.size(); i++)
    {
        r = lines1[i];
        pt0 = QPointF(0, -r[2]/r[1]);
        pt1 = QPointF(c, -(r[2]+r[0]*c)/r[1]);
        m_epilines1.push_back(QLineF(pt0,pt1));
    }

    c = m_bbImg2.width();

    for(int i = 0; i < lines2.size(); i++)
    {
        r = lines2[i];
        pt0 = QPointF(c0, -r[2]/r[1]);
        pt1 = QPointF(c0+c, -(r[2]+r[0]*c)/r[1]);
        m_epilines2.push_back(QLineF(pt0,pt1));
    }
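The drawing code above divides by `r[1]`, which becomes unstable for near-vertical epilines, and it evaluates each line at the bounding-box width, which matches the line coefficients only if that width is in image pixels. As a point of comparison, here is a minimal sketch of clipping a line `a*x + b*y + c = 0` to the image rectangle. `Pt` and `clipEpiline` are hypothetical names, the coefficients are assumed to be in image pixel coordinates, and `xOffset` mirrors the `c0` shift used for the second image.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <initializer_list>
#include <vector>

struct Pt { double x, y; };  // hypothetical stand-in for QPointF

// Clips the line l = (a, b, c), meaning a*x + b*y + c = 0, to the rectangle
// [0, width] x [0, height]. On success writes the two end points (shifted
// horizontally by xOffset) and returns true; returns false if the line
// misses the rectangle or only touches a single corner.
bool clipEpiline(const std::array<double, 3>& l, double width, double height,
                 Pt& p0, Pt& p1, double xOffset = 0.0)
{
    assert(width > 0.0 && height > 0.0);
    const double a = l[0], b = l[1], c = l[2];
    const double eps = 1e-12;

    std::vector<Pt> hits;
    auto addUnique = [&hits](double x, double y) {
        for (const Pt& h : hits)
            if (std::abs(h.x - x) < 1e-9 && std::abs(h.y - y) < 1e-9) return;
        hits.push_back({x, y});
    };

    if (std::abs(b) > eps) {            // left and right borders
        for (double x : {0.0, width}) {
            const double y = -(a * x + c) / b;
            if (y >= 0.0 && y <= height) addUnique(x, y);
        }
    }
    if (std::abs(a) > eps) {            // top and bottom borders
        for (double y : {0.0, height}) {
            const double x = -(b * y + c) / a;
            if (x >= 0.0 && x <= width) addUnique(x, y);
        }
    }

    if (hits.size() < 2) return false;
    p0 = {hits[0].x + xOffset, hits[0].y};
    p1 = {hits[1].x + xOffset, hits[1].y};
    return true;
}
```

In the Qt code, the two returned points could then be turned into a `QLineF`. If the images are displayed scaled, the same scale factor would also need to be applied to both end points.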