Hi

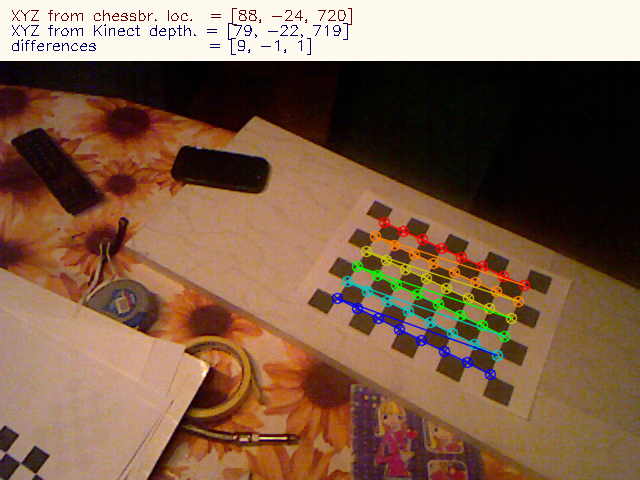

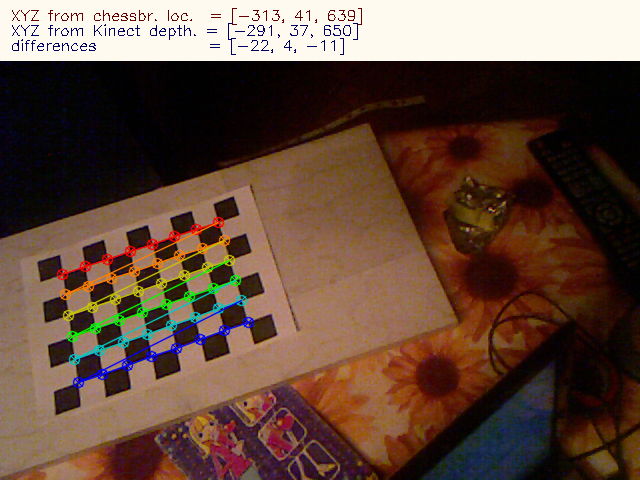

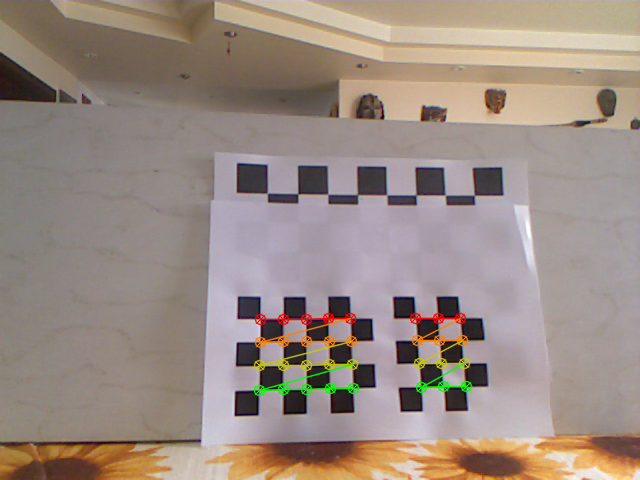

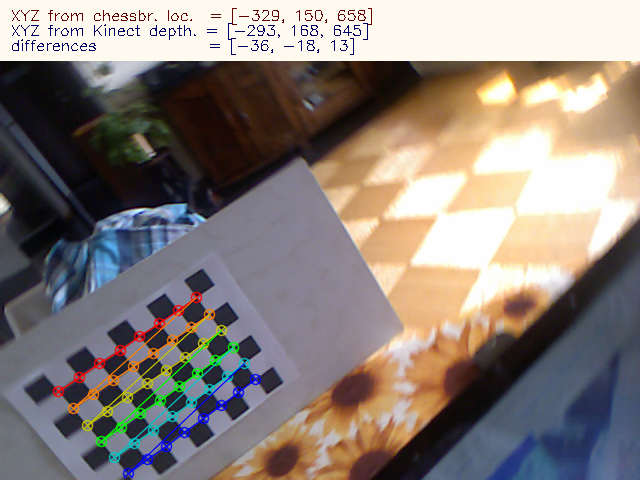

I am trying to verify the accuracy of Kinect depth estimation. I am using OpenNI to capture the Kinect data, and the RGB and depth images are pixel-aligned. To get the true XYZ coordinates of a pixel, I use a chessboard and localize it in the standard way. The RGB camera was calibrated beforehand. I believe the chessboard localization is quite precise after calibration: I printed two small chessboards on a sheet of paper, localized them, and calculated the distance between their origins. The errors were less than 1 mm. Here is the image:

These two chessboards are less than 140 mm apart (as measured with a ruler), and the Euclidean distance between their detected origins in 3D space comes out at 139.5 ± 0.5 mm. That seems correct.
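For reference, the distance check described above amounts to taking the Euclidean norm of the difference between the two origin positions in the camera frame. A minimal sketch, assuming each chessboard pose yields a translation vector in millimetres; the function name `origin_distance` and the coordinates are illustrative placeholders, not the measured values:

```python
import math

def origin_distance(origin_a, origin_b):
    """Euclidean distance between two 3D points given as (x, y, z) tuples."""
    return math.dist(origin_a, origin_b)

# Placeholder chessboard origins in the RGB camera frame, in mm (not real data).
board_a = (0.0, 0.0, 500.0)
board_b = (30.0, 40.0, 500.0)

print(origin_distance(board_a, board_b))
```

Because only the difference of the two positions enters the result, this check is sensitive to scale errors in the calibration but not to a constant offset shared by both poses.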

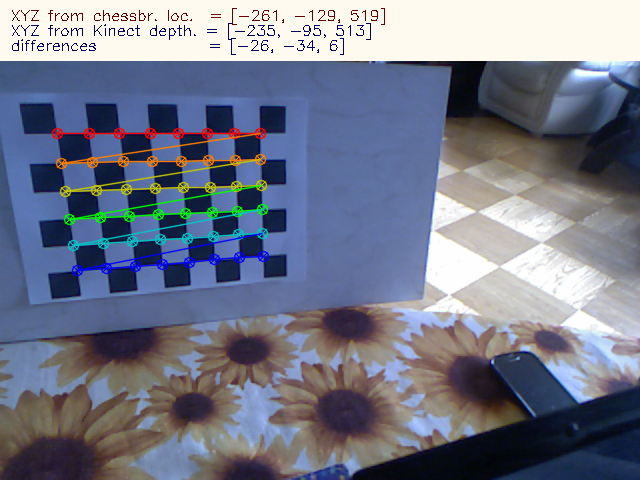

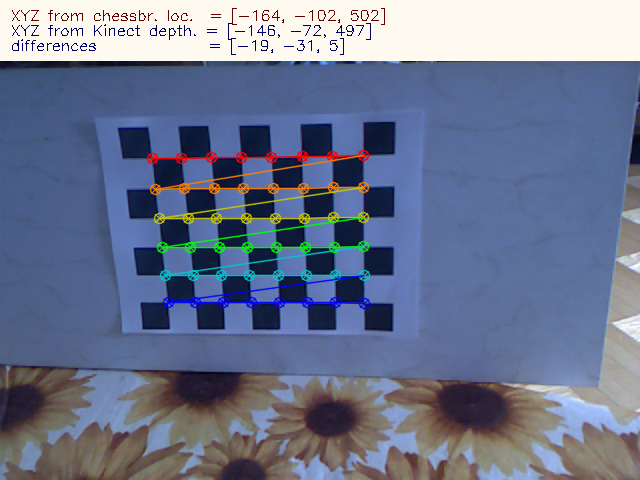

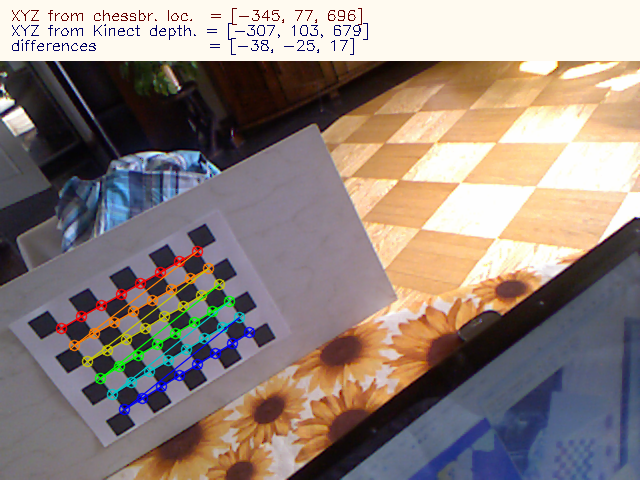

Now, since the RGB and depth images are aligned and I know the pixel coordinates of my chessboard origin, I can get the depth, or the full XYZ coordinates, of this point. The results are, unfortunately, slightly inconsistent:

From chessboard localization I get similar but clearly different results:

(I inverted the depth-based Y coordinate for consistency with the chessboard measurement.) As you can see, the results are slightly off, and the differences are not constant.
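To make the comparison concrete, here is a sketch of how a depth pixel is typically back-projected to XYZ with the pinhole model. It assumes the depth value is the distance along the optical axis in millimetres and that, because the depth map is registered to the RGB image, the RGB camera intrinsics apply. The function name `depth_pixel_to_xyz` and the intrinsics below are placeholders of the kind often quoted as nominal Kinect values, not my calibrated parameters:

```python
def depth_pixel_to_xyz(u, v, depth_mm, fx, fy, cx, cy, flip_y=True):
    """Back-project pixel (u, v) with depth Z (mm) to camera-frame XYZ (mm).

    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    flip_y negates Y to match a Y-up convention (image rows grow downward).
    """
    x = (u - cx) * depth_mm / fx
    y = (v - cy) * depth_mm / fy
    if flip_y:
        y = -y
    return (x, y, depth_mm)

# Placeholder intrinsics (assumed, not calibrated values).
FX, FY, CX, CY = 525.0, 525.0, 319.5, 239.5

# A pixel 105 px right of the principal point at 1000 mm depth.
print(depth_pixel_to_xyz(CX + 105.0, CY, 1000.0, FX, FY, CX, CY))
```

If the intrinsics used here differ from the ones used for the chessboard localization, X and Y will disagree by an amount that grows with depth and with distance from the principal point, which would look like a non-constant offset.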

What am I doing wrong? My understanding is that, since the images are pixel-aligned, I do not need to transform between the coordinate frames of the RGB and IR cameras, as the Kinect effectively does this already. Or is it possible that this transformation is good enough for pixel alignment but still lacks some precision for the XYZ calculation?

If I could grab the IR image instead of the RGB image, the problem would not exist, as I would get the depth map and the chessboard from the same camera. But I don't think I can do that with OpenNI... or am I wrong?

Your comments will be appreciated.

Witek