I'm writing my own Sobel filter and am having trouble getting it to work properly. I apply a vertical and a horizontal convolution to a grayscale image. Each one appears to produce a result when applied separately, but when I combine them I only get a slightly darkened version of the original image.

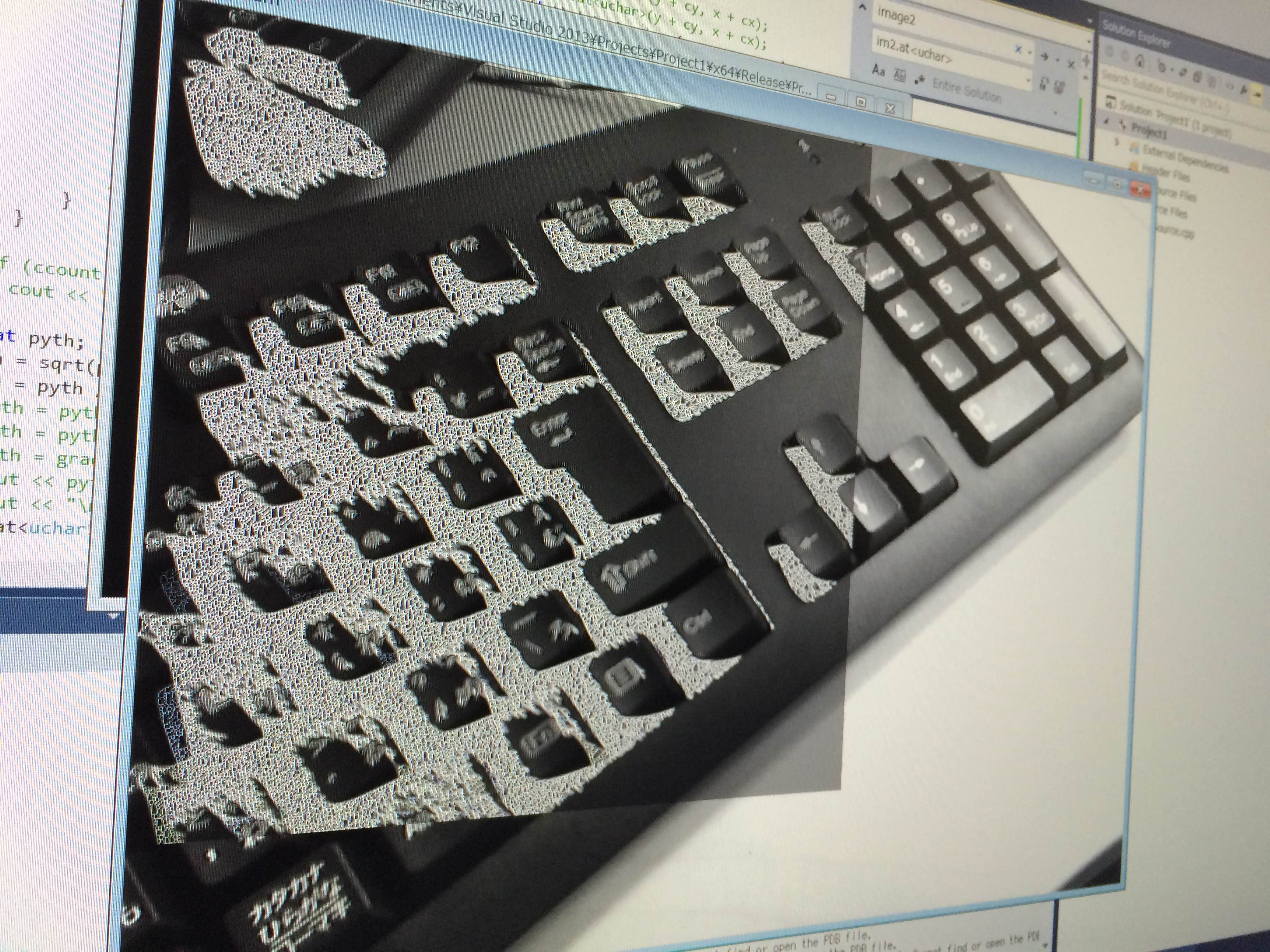

As you can tell from the commented-out lines in my code, I've had trouble working out how to normalize the results to the range 0-255. I believe 255/1442 is the correct multiplier for grayscale. If I change the multiplier, I get white patches around corners, but I also get a lot of noise that seems to extend towards the lower left corner. In the image above, I've applied the filter to only a section of the image.
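
For reference, this is how I arrive at the 1442 figure. It is only a sketch of the arithmetic, and the helper names are made up for illustration. The positive weights of each 3x3 Sobel kernel sum to 4, so a single direction is bounded by 4 * 255 = 1020. Treating both directions as if they could reach that bound at the same pixel gives 1020 * sqrt(2), roughly 1442.5. As far as I can tell this is an upper bound, not a value the filter necessarily reaches.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper: largest response one 3x3 Sobel kernel can give on
// 8-bit data. The positive weights are 1, 2, 1, so their sum is 4.
constexpr int max_single_gradient() {
    return (1 + 2 + 1) * 255;  // 1020
}

// Hypothetical helper: bound on sqrt(gx^2 + gy^2), assuming both directions
// could reach their individual maximum at once.
inline double max_magnitude_bound() {
    const double g = max_single_gradient();
    return std::sqrt(g * g + g * g);  // about 1442.5
}

// Scale a gradient magnitude into 0-255 using that bound.
inline double normalize_magnitude(double magnitude) {
    assert(magnitude >= 0.0);
    return magnitude * 255.0 / max_magnitude_bound();
}
```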

Am I using the right multiplier? Is there another problem I'm encountering?

Hopefully I'm not making a pixel-coordinate mistake or something similar. I've stepped through a lot of the code in the debugger, and it all seems to match the algorithm descriptions I've read.

Thanks in advance for any help or advice.

    void sobel_filter(Mat im1, Mat im2, int x_size, int y_size){
        int hweight[3][3] = { { -1, 0, 1 },
                              { -2, 0, 2 },
                              { -1, 0, 1 } };
        int vweight[3][3] = { { -1, -2, -1 },
                              { 0, 0, 0 },
                              { 1, 2, 1 } };

        //Apply filter
        float gradx;
        float grady;
        for (int x = 0; x < x_size; x++){
            for (int y = 0; y < y_size; y++){
                gradx = 0;
                grady = 0;
                for (int cx = -1; cx < 2; cx++){
                    for (int cy = -1; cy < 2; cy++){
                        if (x + cx > 0 && y + cy > 0){
                            gradx = gradx + hweight[cx + 1][cy + 1] * (int) im1.at<uchar>(y + cy, x + cx);
                            grady = grady + vweight[cx + 1][cy + 1] * (int) im1.at<uchar>(y + cy, x + cx);
                        }
                    }
                }

                //Use pythagorean theorem to combine both directions.
                float pyth;
                pyth = sqrt(pow(gradx, 2) + pow(grady, 2));
                //pyth = pyth / 3;
                pyth = pyth * 255/1442;
                //pyth = pyth * 255 / (1442 * 3);
                //pyth = gradx * 255.0 / 1020.0;
                im2.at<uchar>(y, x) = (int) pyth;
            }
        }
    }
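
For comparison, here is a minimal standalone sketch of the same gradient-magnitude computation. It works on a plain row-major 8-bit buffer instead of `cv::Mat` so that it compiles without OpenCV, and the function name and signature are hypothetical. It differs from the code above in three ways, all of which are assumptions about what might matter, not a confirmed diagnosis: neighbours are checked against both the lower and upper image bounds, the output is a separate buffer so the input is never overwritten while it is still being read, and the result is clamped before it is stored in a byte.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Sketch only: hypothetical helper operating on a row-major 8-bit buffer.
std::vector<unsigned char> sobel_magnitude(const std::vector<unsigned char>& src,
                                           int width, int height) {
    assert(width > 0 && height > 0);
    assert(src.size() == static_cast<std::size_t>(width) * height);

    // Both kernels are indexed as [row offset + 1][column offset + 1].
    static const int kx[3][3] = { { -1, 0, 1 }, { -2, 0, 2 }, { -1, 0, 1 } };
    static const int ky[3][3] = { { -1, -2, -1 }, { 0, 0, 0 }, { 1, 2, 1 } };

    // Separate output buffer, initialised to zero.
    std::vector<unsigned char> dst(src.size(), 0);

    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            int gx = 0;
            int gy = 0;
            for (int dy = -1; dy <= 1; ++dy) {
                for (int dx = -1; dx <= 1; ++dx) {
                    const int sx = x + dx;
                    const int sy = y + dy;
                    // Skip neighbours that fall outside the image on any side.
                    if (sx < 0 || sx >= width || sy < 0 || sy >= height) continue;
                    const int p = src[static_cast<std::size_t>(sy) * width + sx];
                    gx += kx[dy + 1][dx + 1] * p;
                    gy += ky[dy + 1][dx + 1] * p;
                }
            }
            // Same 255/1442 scaling as in the question, then clamp to 0-255.
            const double mag =
                std::sqrt(double(gx) * gx + double(gy) * gy) * 255.0 / 1442.0;
            dst[static_cast<std::size_t>(y) * width + x] =
                static_cast<unsigned char>(std::min(255.0, mag));
        }
    }
    return dst;
}
```

Skipping out-of-range neighbours behaves like zero padding, so pixels on the image border can show a strong response even in a flat region. Replicating the edge pixels, or leaving a one-pixel border untouched, would be alternative choices.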