Hi there,

I have a LI-USB30-V024STEREO board which interlaces the grayscale images from two cameras into a single image. I am reading the image and displaying it in several windows, including the original image, but I am unable to properly grab the grayscale video from the source.

I have gotten some information and code from Leopard Imaging, but I think there is still a disconnect. They have provided what they tell me is required to extract the two images from a frame. I am told that this card sends one byte from the left camera and the next byte from the second camera. There is a third byte which doesn't seem to do anything, so that is probably the problem. I tried blacking out one camera and saturating the other to identify what is going on, but the result seems to bleed data between the cameras. It also seems to skip lines.

Their code works in that it displays the four images (original color and three grayscale byte-offset images), but the grayscale images are incorrect. I have included the code below, as well as before and after images of a frame. I am calling their code from ImageProcessing::process(). Any help you may provide would be most appreciated.
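To make the byte layout described above concrete, here is a minimal sketch in plain C++ (no OpenCV). It assumes the frame is one contiguous buffer in which byte 0 of each group belongs to the left camera and byte 1 to the right camera. The names `StereoPair` and `splitInterleaved` are hypothetical, and the group size (`stride`) is a parameter because the thread has not settled whether the card sends two or three bytes per pixel.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical container for the two de-interleaved planes.
struct StereoPair {
    std::vector<uint8_t> left;
    std::vector<uint8_t> right;
};

// Splits a buffer whose samples repeat every `stride` bytes, taking byte 0 of
// each group as "left" and byte 1 as "right". Any further bytes in the group
// (for example an unused third byte) are ignored. This layout is an assumption.
StereoPair splitInterleaved(const std::vector<uint8_t>& frame, std::size_t stride) {
    StereoPair out;
    if (stride < 2) {
        return out;  // need at least one left and one right byte per group
    }
    const std::size_t groups = frame.size() / stride;
    out.left.reserve(groups);
    out.right.reserve(groups);
    for (std::size_t g = 0; g < groups; ++g) {
        out.left.push_back(frame[g * stride]);
        out.right.push_back(frame[g * stride + 1]);
    }
    return out;
}
```

Calling it with `stride` 2 and then 3 on the same captured frame, and comparing the two results, may help show which grouping the hardware actually uses.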

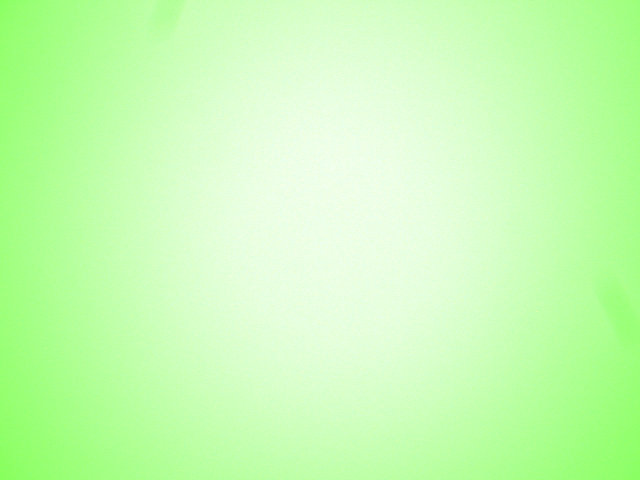

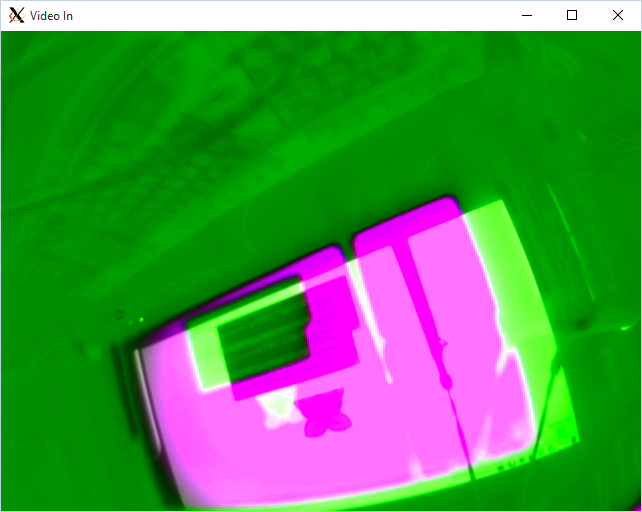

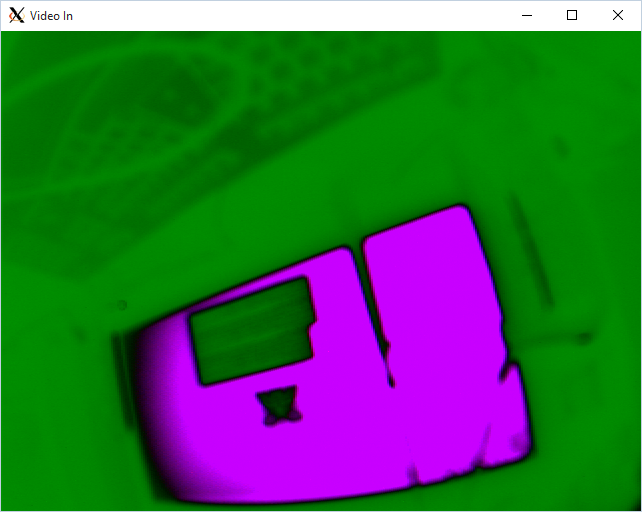

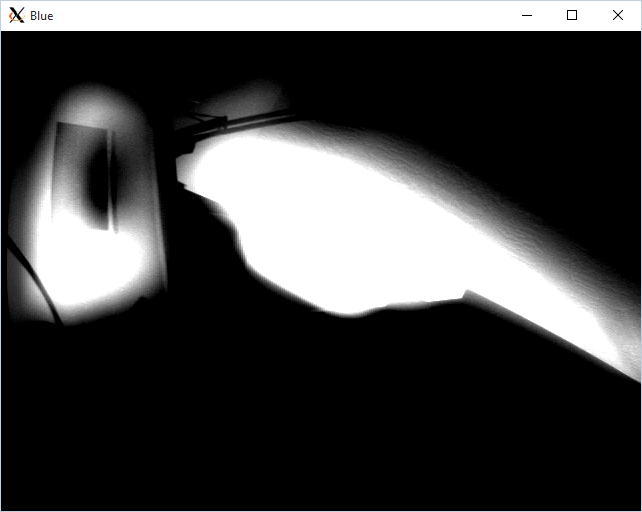

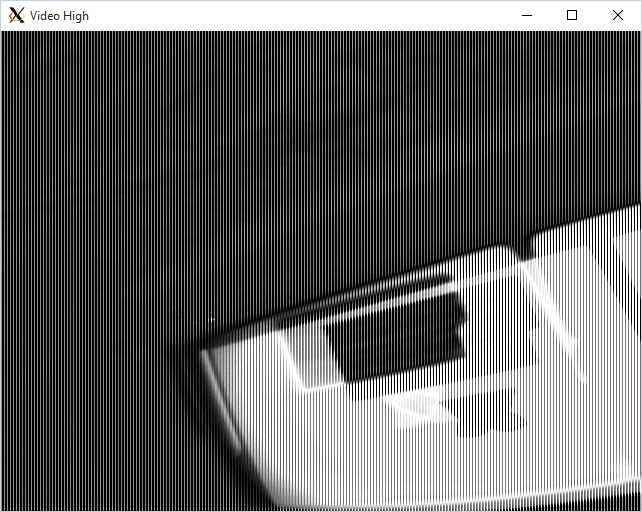

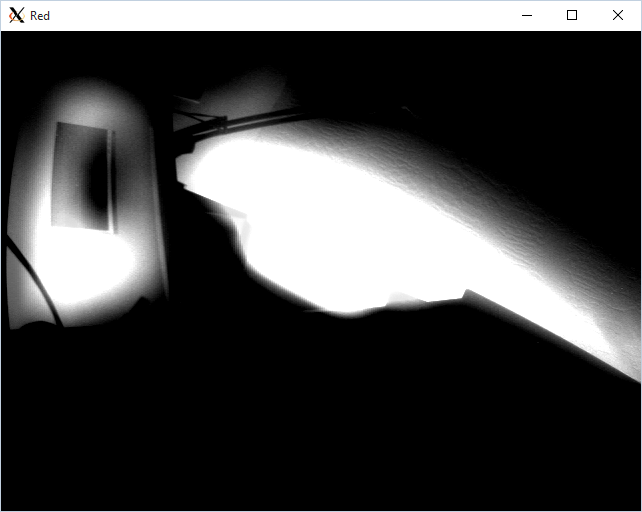

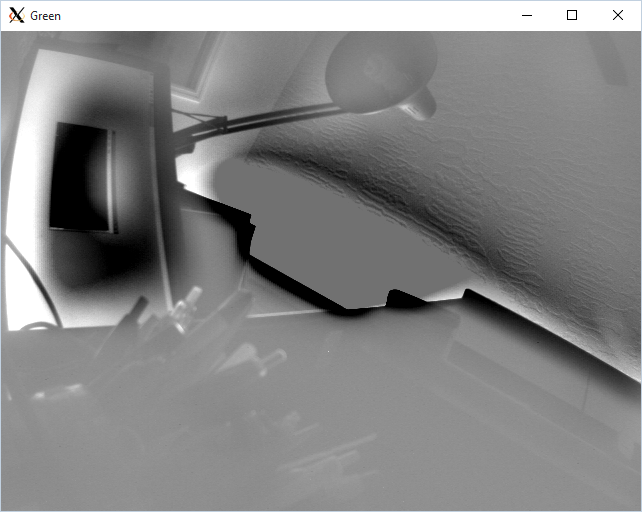

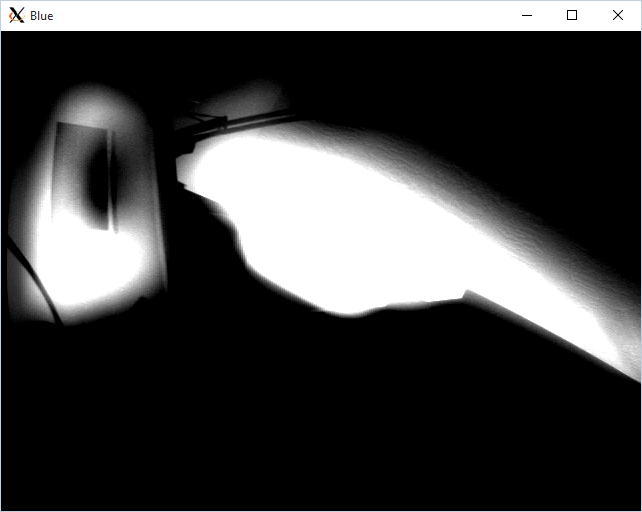

Raw, original image:

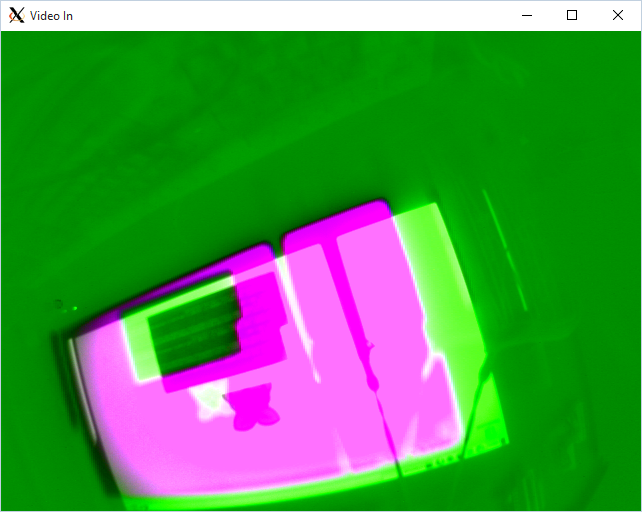

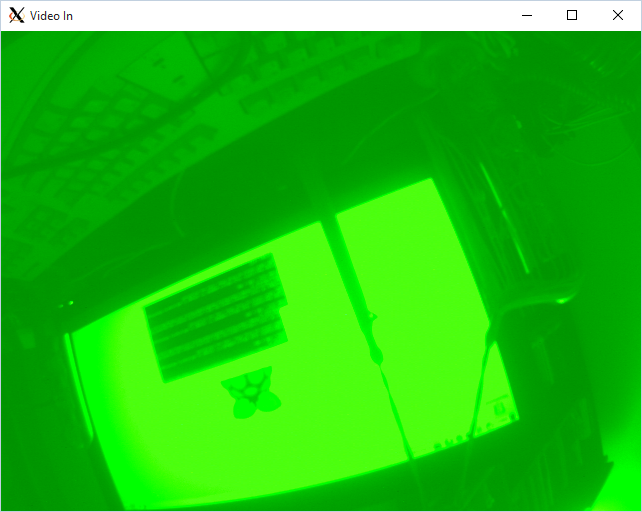

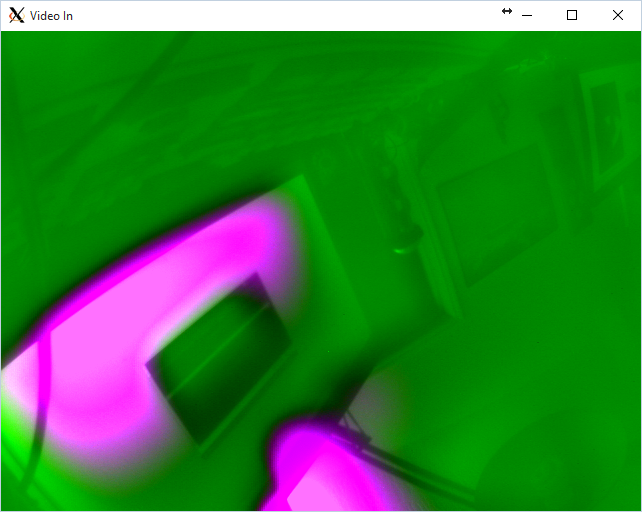

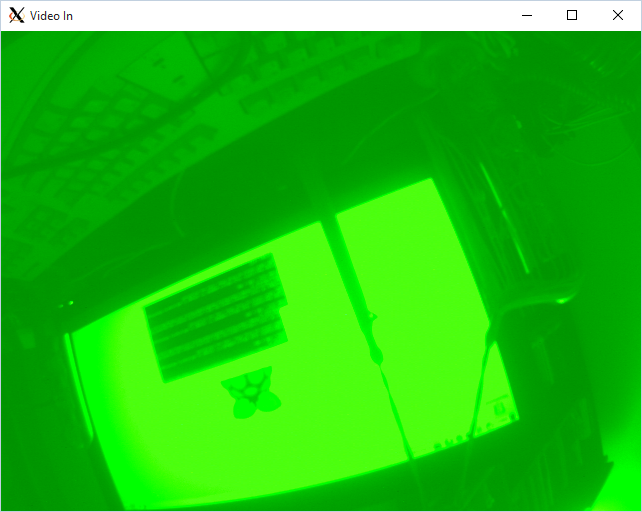

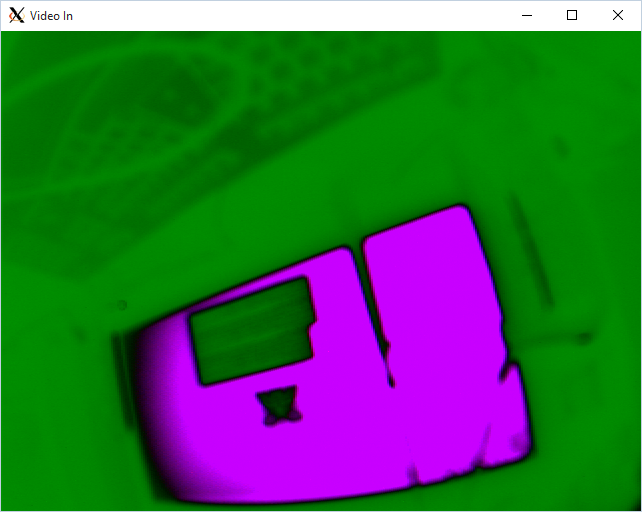

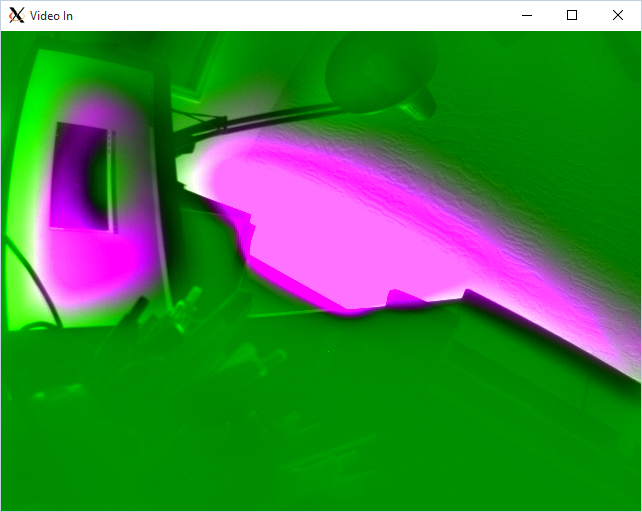

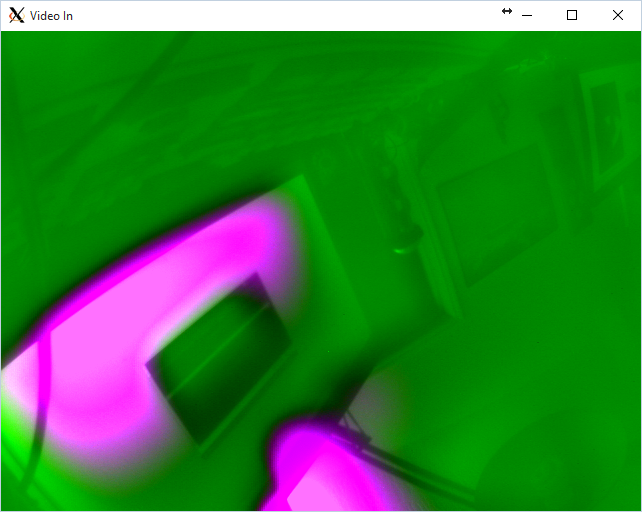

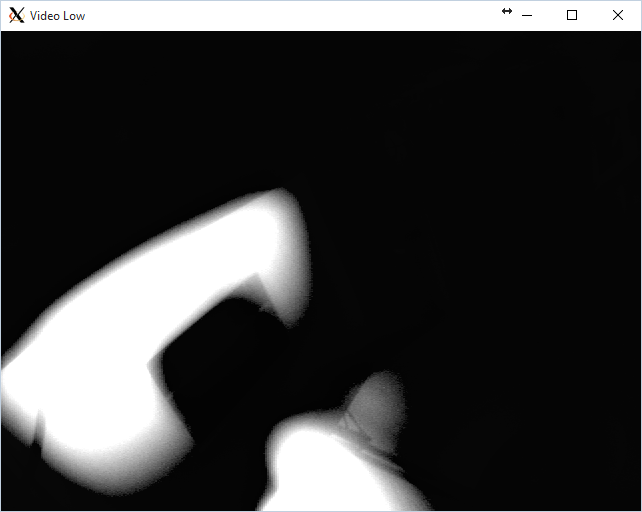

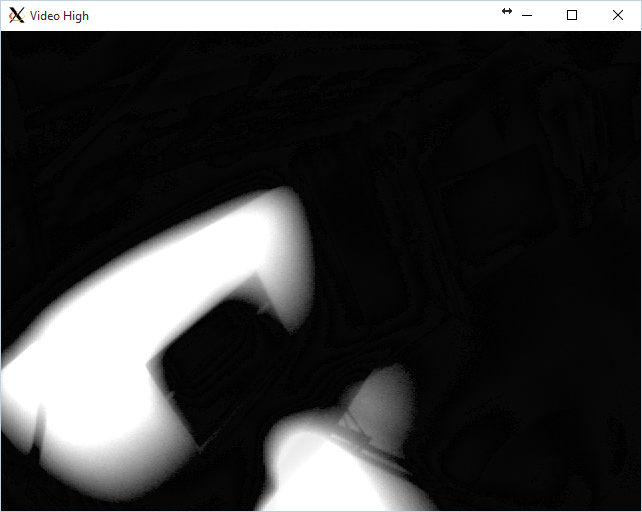

Processed Image:

VideoCapture cap(0); // open the video camera no. 0

#include "ImageProcessor.h"

#include "../handlers/menu/Menu.h"

#include "../utils/WindowUtils.h"

#include <vector>

#include <iostream>

#include <opencv2/highgui/highgui.hpp>

#include <stdio.h>

#include <SDL/SDL.h>

using namespace cv;

using namespace std;

string ImageProcessor::theWindowName = "Video";

ImageProcessor::ImageProcessor()

: videoCapture(0), myScreen(0), myFrameNumber(0) {

isRunningX = WindowUtils::isRunningX();

if (!cap.isOpened()) // if not success, exit program

(!videoCapture.isOpened()) {

cout << "Cannot open the video cam" << endl;

return }

if (isRunningX) {

namedWindow(theWindowName.c_str(), CV_WINDOW_FULLSCREEN);

cvNamedWindow(theWindowName.c_str(), CV_WINDOW_NORMAL);

cvSetWindowProperty(theWindowName.c_str(), CV_WND_PROP_FULLSCREEN, CV_WINDOW_FULLSCREEN);

} else {

SDL_Init(SDL_INIT_EVERYTHING);

SDL_ShowCursor(0);

}

}

#define BYTE uchar

#define WORD ushort

#define TRUE 1

#define FALSE 0

static unsigned short linear_to_gamma[65536];

static double gammaValue = -1;

}

static int gBPP = 0;

void bayer_to_rgb24(uint8_t *pBay, uint8_t *pRGB24, int width, int height, int pix_order);

int raw_to_bmp_mono(BYTE* in_bytes, BYTE* out_bytes, int width, int height, int bpp,

bool GammaEna, double dWidth = cap.get(CV_CAP_PROP_FRAME_WIDTH); //get the width of frames of the video

double dHeight = cap.get(CV_CAP_PROP_FRAME_HEIGHT); //get the height of frames of the video

cout << "Frame size : " << dWidth << " x " << dHeight << endl;

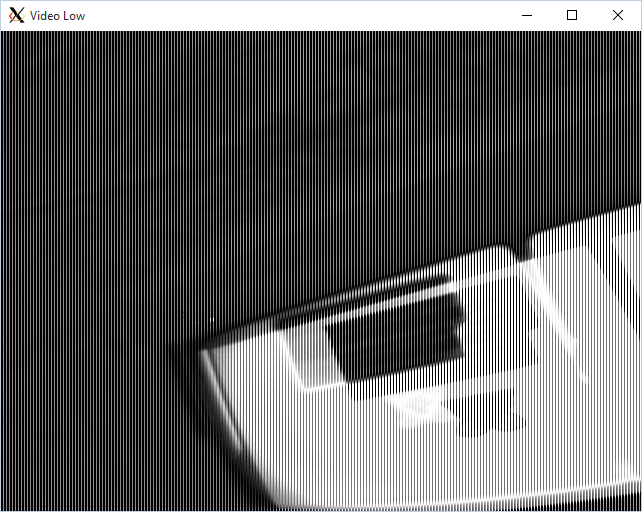

string stringLow("Video Low");

string stringMid("Video Mid");

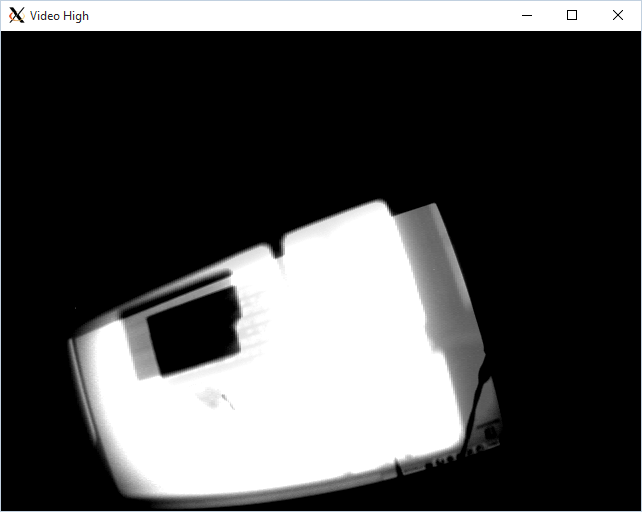

string stringHigh("Video High");

string stringRaw("Video Raw");

namedWindow(stringRaw.c_str(), CV_WINDOW_AUTOSIZE); //create a window called "MyVideo"

cvNamedWindow(stringLow.c_str(), CV_WINDOW_AUTOSIZE);

cvNamedWindow(stringMid.c_str(), CV_WINDOW_AUTOSIZE);

cvNamedWindow(stringHigh.c_str(), CV_WINDOW_AUTOSIZE);

while (1) {

Mat matIn;

if (!cap.read(matIn)) //if not success, break loop

{

cout << "Cannot read a frame from video stream" << endl;

break;

}

Mat matLow(matIn.cols, matIn.rows, CV_8UC1);

Mat matMid(matIn.cols, matIn.rows, CV_8UC1);

Mat matHigh(matIn.cols, matIn.rows, CV_8UC1);

uchar* pLow = matLow.data;

uchar* pMid = matMid.data;

uchar* pHigh = matHigh.data;

gamma);

bool ImageProcessor::process(Mat& matOut) {

// Leopard Imaging

unsigned char* ptr = (unsigned char*) malloc(myMat.cols * myMat.rows * 3);

unsigned char* ptr2 = (unsigned char*) malloc(myMat.cols * myMat.rows * 3);

bayer_to_rgb24(myMat.data, ptr, myMat.cols, myMat.rows, 1);

raw_to_bmp_mono(ptr, ptr2, myMat.cols, myMat.rows, 8, true, 1.6);

int i = 0;

for (int row = 0; row < matIn.rows; matOut.rows; row++) {

for (int col = 0; col < matIn.cols; matOut.cols; col++) {

Vec3b pixelIn = matIn.at<Vec3b>(row, col);

*pLow = pixelIn[0];

*pMid = pixelIn[1];

*pHigh = pixelIn[2];

// printf("%02X %02X %02X ", *pLow, *pMid, *pHigh);

// colorVect;

colorVect.val[0] = ptr2[i];

colorVect.val[1] = ptr2[i];

colorVect.val[2] = ptr2[i];

matOut.at<Vec3b>(Point(col, row)) = colorVect;

i+=2;

}

}

if ((x + 1) % 640 (myFrameNumber == 0) {

// printf("\n");

// printf("%4d ", x);

// }

pLow++;

pMid++;

pHigh++;

}

}

imshow(stringRaw, matIn); //show the frame in "MyVideo" window

imshow(stringLow, matLow);

imshow(stringMid, matMid);

imshow(stringHigh, matHigh);

if (waitKey(30) == 27) //wait for 'esc' key press for 30ms. If 'esc' key is pressed, break loop

{

10) {

writePngImage("/tmp/image.png", matOut, false);

writeInfo("/tmp/image.csv", matOut, false);

cout << "esc key is pressed by user" "image snapped" << endl;

break;

}

}

That was a good catch, LBerger. I made the changes you suggested (below) and did not see any difference in the displayed images (which really surprised me.) I tried the following:

Mat matLow(matIn.rows, matIn.cols, CV_8UC1);

Mat matMid(matIn.rows, matIn.rows, CV_8UC1);

Mat matHigh(matIn.rows, matIn.cols, CV_8UC1);

uchar* pLow = matLow.data;

uchar* pMid = matMid.data;

uchar* pHigh = matHigh.data;

uchar* pIn = matIn.data;

for (int row = 0; row < matIn.rows; row++) {

for (int col = 0; col < matIn.cols; col++) {

*pLow = *pIn;

pLow++;

pIn++;

*pMid = *pIn;

// pMid++;

pIn++;

*pHigh = *pIn;

pHigh++;

pIn++;

}

}

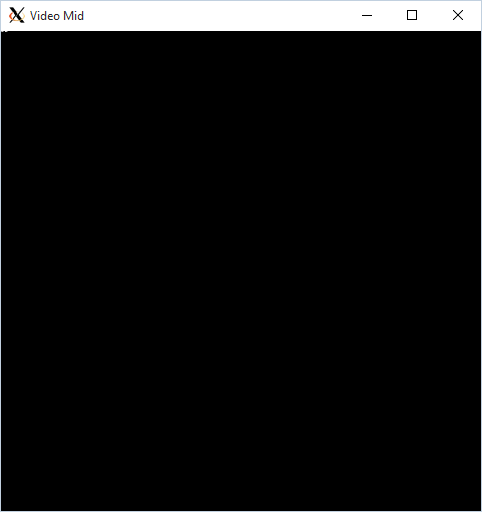

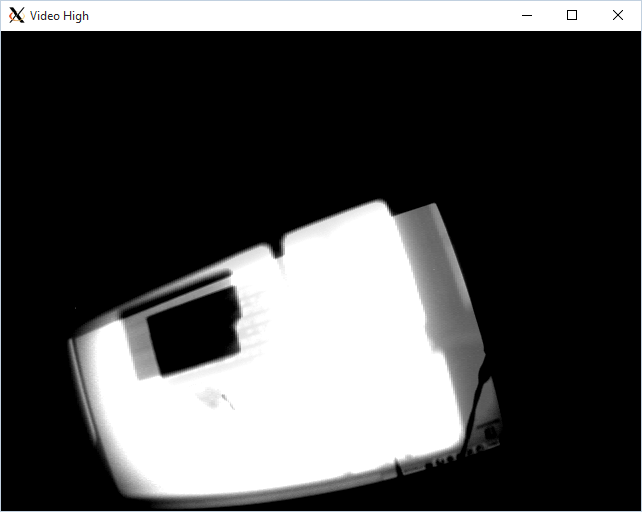

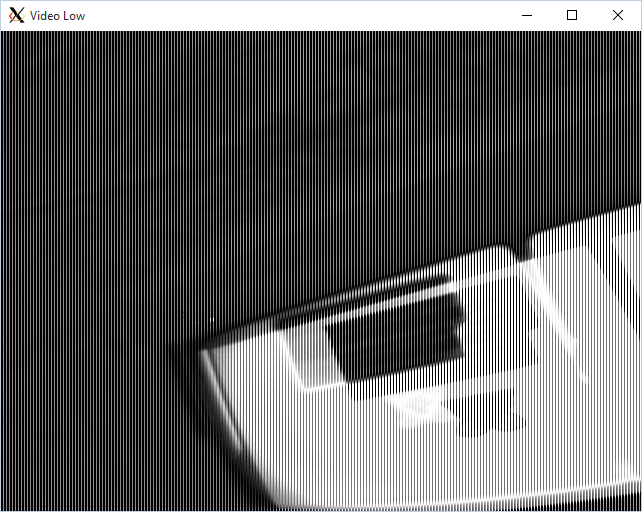

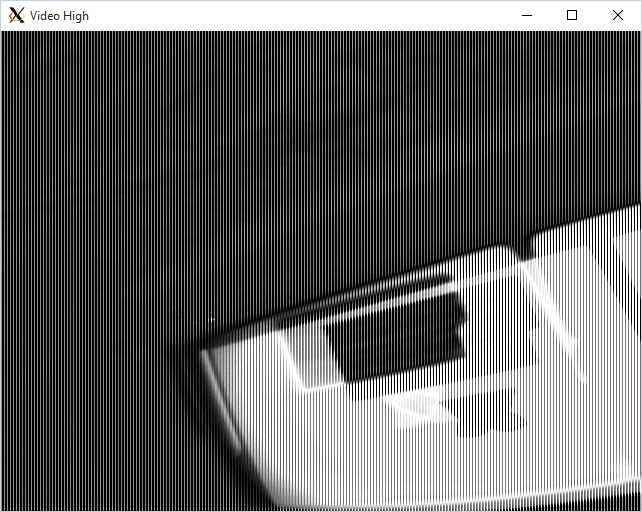

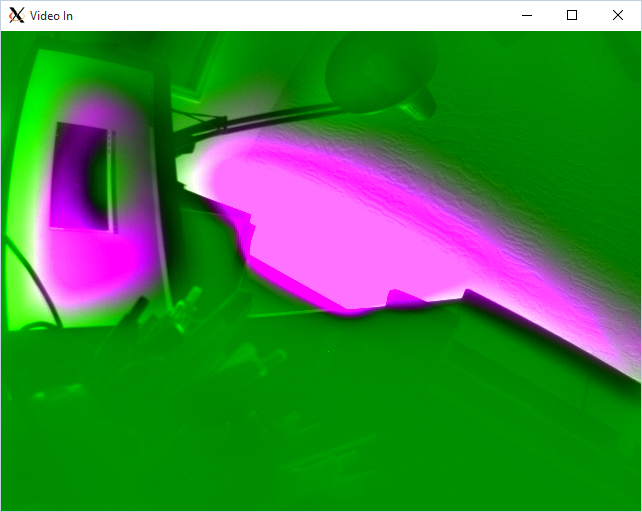

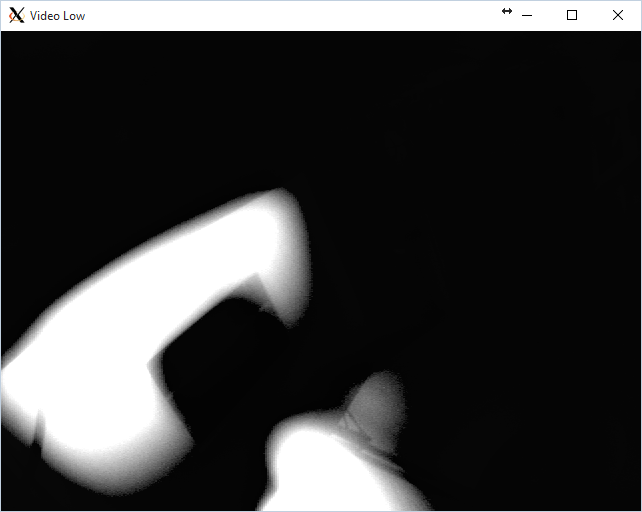

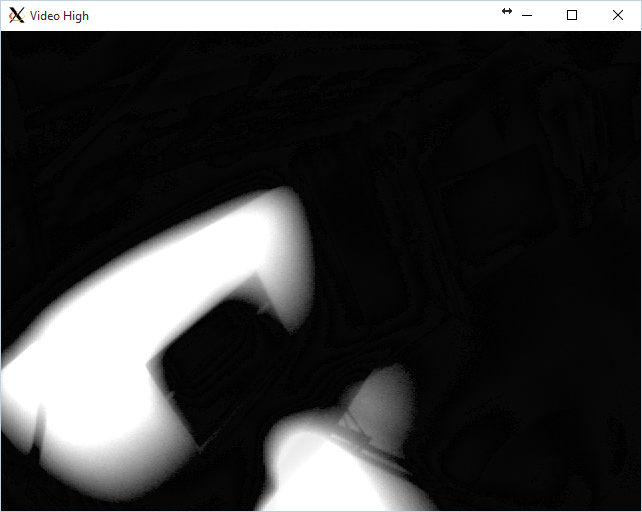

This is as close as I've gotten. I have attached screenshots of the three windows to see if that sheds any light on this.

I realize that "Video Mid" should be black as I am not writing to it, but I was surprised to see it had different dimensions than the other two.

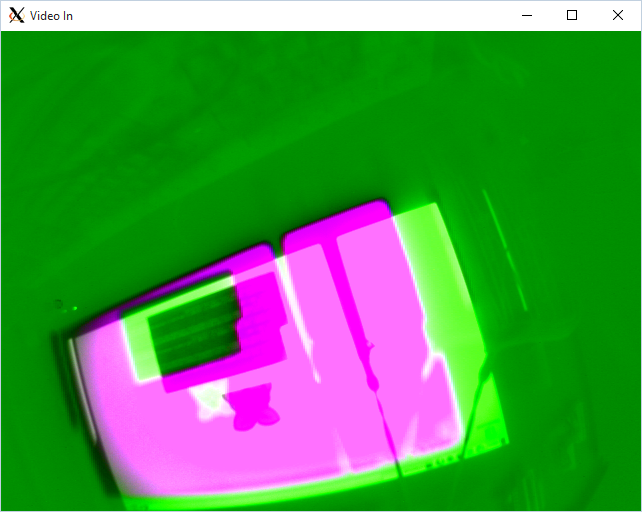

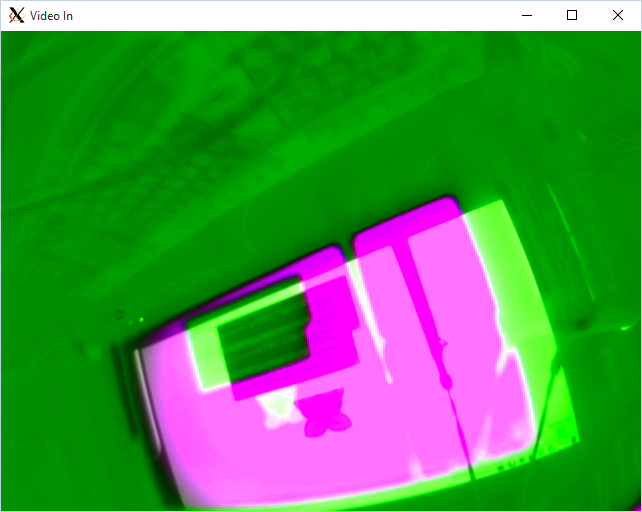

- Note that "Video In" looks exactly like I am expecting and have seen LeopardImaging examples of.

- The other two images are extremely dark and both seem to track a single camera.

- If I uncomment the line where *pMid is incremented, the application crashes(!)
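One possible reading of the crash and of the odd "Video Mid" dimensions, assuming `matMid` really was constructed as `(matIn.rows, matIn.rows)` as in the snippet above: that plane holds fewer bytes than the loop writes once `pMid++` is enabled. The sketch below is plain C++ that only does the size arithmetic. The function names are hypothetical, and the 640x480 frame size in the usage note is an assumption (the thread only hints at a 640-pixel width).

```cpp
#include <cstddef>

// Bytes available in a single-channel, 8-bit plane of the given shape.
std::size_t planeBytes(std::size_t rows, std::size_t cols) {
    return rows * cols;
}

// True when a loop that writes one byte per source pixel stays inside a
// destination plane of shape dstRows x dstCols.
bool writesFit(std::size_t srcRows, std::size_t srcCols,
               std::size_t dstRows, std::size_t dstCols) {
    return planeBytes(srcRows, srcCols) <= planeBytes(dstRows, dstCols);
}
```

For a 480-row, 640-column source, `writesFit(480, 640, 480, 480)` is false (307200 bytes written into a 230400-byte plane), while `writesFit(480, 640, 480, 640)` is true. Constructing all three planes with the same `(rows, cols)` pair would avoid the mismatch.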

I made the suggestions you added and it is a little better. I seem to have grayscale images, but it looks like I am missing some columns.

The other issue that has been plaguing me all along is that when I cover one of the sensors, the color image changes, but the other two images just become dimmer (see attached images below.)

Left Sensor Covered:

Right Sensor Covered:

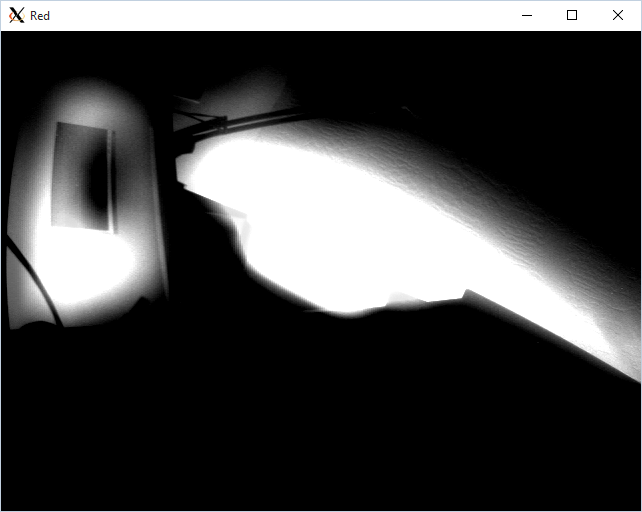

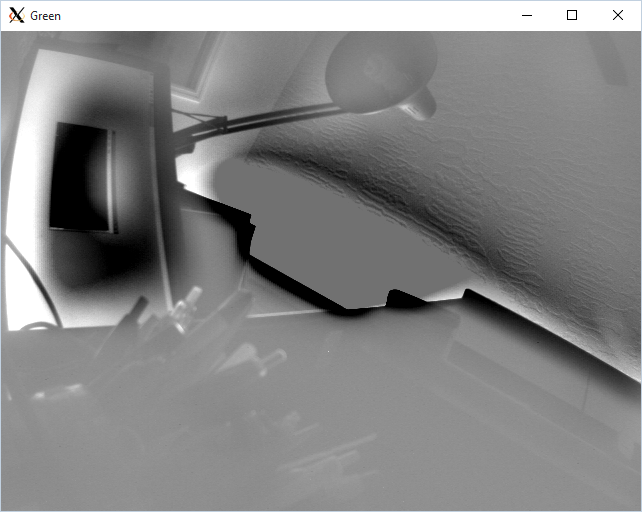

Using the code you suggested:

vector<Mat> colorPlane;

split(matIn, colorPlane);

imshow("Blue", colorPlane[0]);

imshow("Green", colorPlane[1]);

imshow("Red", colorPlane[2]);

I got the following images:

When I cover up one sensor, they all are affected as before.
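A rough way to quantify the covered-sensor experiment, rather than judging it by eye, is to average each byte position of the raw frame separately. The following is a sketch under the assumption that the frame is a contiguous buffer of repeating 3-byte groups; the function name `meanPerBytePosition` is hypothetical.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <vector>

// Mean value of each byte position within repeating 3-byte groups.
// Trailing bytes that do not form a full group are ignored.
std::array<double, 3> meanPerBytePosition(const std::vector<uint8_t>& frame) {
    std::array<double, 3> sums = {0.0, 0.0, 0.0};
    const std::size_t groups = frame.size() / 3;
    for (std::size_t g = 0; g < groups; ++g) {
        for (std::size_t k = 0; k < 3; ++k) {
            sums[k] += frame[g * 3 + k];
        }
    }
    if (groups > 0) {
        for (double& s : sums) {
            s /= static_cast<double>(groups);
        }
    }
    return sums;
}
```

If one byte position carried a single sensor, its mean should drop toward zero when that sensor is covered while the others stay roughly unchanged. If all three means drop together, as the screenshots suggest, the bytes are probably not a simple per-camera split.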

I believe you are asking for what I called "Video In"? If not, please explain.

The following does not work (it cannot find a writer for the specified extension):

imwrite("test.yml",matIn);

I see that there are a number of supported extensions:

- Windows bitmaps - \*.bmp, \*.dib (always supported)

- JPEG files - \*.jpeg, \*.jpg, \*.jpe (see the *Notes* section)

- JPEG 2000 files - \*.jp2 (see the *Notes* section)

- Portable Network Graphics - \*.png (see the *Notes* section)

- WebP - \*.webp (see the *Notes* section)

- Portable image format - \*.pbm, \*.pgm, \*.ppm (always supported)

- Sun rasters - \*.sr, \*.ras (always supported)

- TIFF files - \*.tiff, \*.tif (see the *Notes* section)

Which one would you prefer?

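Using the following:

imwrite("alpha.png", matIn);

I got this file:

C:\fakepath\alpha.png

"My" code now looks like this:

VideoCapture cap(0);

if (!cap.isOpened()) {

cout << "Cannot open the video cam" << endl;

return -1;

}

string stringLow("Video Low");

string stringHigh("Video High");

string stringIn("Video In");

namedWindow(stringIn.c_str(), CV_WINDOW_AUTOSIZE);

cvNamedWindow(stringLow.c_str(), CV_WINDOW_AUTOSIZE);

cvNamedWindow(stringHigh.c_str(), CV_WINDOW_AUTOSIZE);

while (1) {

Mat matIn;

if (!cap.read(matIn)) //if not success, break loop

{

cout << "Cannot read a frame from video stream" << endl;

break;

}

Mat matLow(matIn.rows, matIn.cols, CV_8UC1);

Mat matHigh(matIn.rows, matIn.cols, CV_8UC1);

uchar *pSrc;

unsigned short *pDst1, *pDst2;

for (int i = 0; i < matIn.rows; i++) {

pDst1 = (unsigned short*) matLow.ptr(i);

pDst2 = (unsigned short*) matHigh.ptr(i);

pSrc = matIn.ptr(i);

for (int j = 0; j < matIn.cols; j++) {

unsigned short v = *pSrc++;

unsigned short v1 = (*pSrc & 0xF0) >> 4;

v = v * 16 + v1;

v = pow(v / 4096.0, 1.0 / 1.6)*4096;

*pDst1++ = v * 16;

v1 = (*pSrc & 0x0F);

pSrc++;

v = v1 + (*pSrc++)*16;

v = pow(v / 4096.0, 1.0 / 1.6)*4096;

*pDst2++ = (v * 16);

}

}

cout << "done" << endl << flush;

imshow(stringIn, matIn);

imshow(stringLow, matLow);

imshow(stringHigh, matHigh);

}

===================

myFrameNumber++;

free(ptr);

free(ptr2);

return true;

}

void ImageProcessor::writePngImage(const string & fileName,

const Mat& mat, bool isColor) {

vector<int> compression_params;

compression_params.push_back(IMWRITE_PNG_COMPRESSION);

if (isColor) {

compression_params.push_back(9);

} else {

compression_params.push_back(3);

}

try {

imwrite(fileName, mat, compression_params);

} catch (...) {

fprintf(stderr, "Exception converting image to PNG format\n");

exit(0);

}

}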

void ImageProcessor::writeInfo(const std::string & fileName,

const Mat& mat, bool isColor) {

FILE* file = fopen(fileName.c_str(), "w");

uchar* ptr = mat.data;

// write the header row

for (int col = 0; col < mat.cols; col++) {

if (isColor) {

fprintf(file, "%3d[0]\t%3d[1]\t%3d[2]", col, col, col);

} else {

fprintf(file, "%3d[0]\t%3d[1]", col, col);

}

if (col < mat.cols - 1) {

fprintf(file, "\t");

}

}

fprintf(file, "\n");

int val = 2;

switch (val) {

case 1:

// write the data using the class

for (int row = 0; row < mat.rows; row++) {

for (int col = 0; col < mat.cols; col++) {

Vec3b color = mat.at<Vec3b>(Point(col, row));

if (isColor) {

fprintf(file, "%02X\t%02X\t%02X", color[0], color[1], color[2]);

} else {

fprintf(file, "%02X\t%02X", color[0], color[1]);

}

if (col < mat.cols - 1) {

fprintf(file, "\t");

}

}

fprintf(file, "\n");

}

break;

case 2:

// write the data using pointers

for (int row = 0; row < mat.rows; row++) {

for (int col = 0; col < mat.cols; col++) {

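if (isColor) {

// read by index and advance afterwards: several ptr++ in one call are evaluated in an unspecified order

fprintf(file, "%02X\t%02X\t%02X", ptr[0], ptr[1], ptr[2]);

ptr += 3;

} else {

fprintf(file, "%02X\t%02X", ptr[0], ptr[1]);

ptr += 2;

}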

if (col < mat.cols - 1) {

fprintf(file, "\t");

}

}

fprintf(file, "\n");

}

break;

}

fclose(file);

}

void ImageProcessor::update() {

videoCapture.grab();

videoCapture.retrieve(myMat);

Mat mat(myMat.rows, myMat.cols, myMat.type()); //CV_8U);

process(mat);

myMenu.update(mat);

if (isRunningX) {

imshow(theWindowName, mat);

} else {

IplImage opencvimg = (IplImage) mat;

if (myScreen == 0) {

myScreen = SDL_SetVideoMode(mat.cols, mat.rows, 32, SDL_HWSURFACE);

}

SDL_LockSurface(myScreen);

SDL_Surface* currentFrame = SDL_CreateRGBSurfaceFrom(opencvimg.imageData,

opencvimg.width, opencvimg.height,

opencvimg.depth * opencvimg.nChannels,

opencvimg.widthStep,

0xff0000, 0x00ff00, 0x0000ff, 0);

SDL_UnlockSurface(myScreen);

if (currentFrame) {

SDL_BlitSurface(currentFrame, NULL, myScreen, NULL);

}

SDL_Flip(myScreen);

SDL_FreeSurface(currentFrame);

}

}

// create gamma table

static void initGammaTable(double gamma, int bpp) {

int result;

double dMax;

int iMax;

unsigned short addr, value;

if (bpp > 12)

return;

dMax = pow(2, (double) bpp);

iMax = (int) dMax;

for (int i = 0; i < iMax; i++) {

result = (int) (pow((double) i / dMax, 1.0 / gamma) * dMax);

linear_to_gamma[i] = result;

}

gammaValue = gamma;

gBPP = bpp;

}

static void gammaCorrection(BYTE* in_bytes, BYTE* out_bytes, int width, int height, int bpp, double gamma) {

int i;

WORD *srcShort;

WORD *dstShort;

if (gamma != gammaValue || gBPP != bpp)

initGammaTable(gamma, bpp);

if (bpp > 8) {

srcShort = (WORD *) (in_bytes);

dstShort = (WORD *) (out_bytes);

for (i = 0; i < width * height; i++)

*dstShort++ = linear_to_gamma[*srcShort++];

} else {

for (i = 0; i < width * height; i++)

*out_bytes++ = linear_to_gamma[*in_bytes++];

}

}

int raw_to_bmp_mono(BYTE* in_bytes, BYTE* out_bytes, int width, int height, int bpp,

bool GammaEna, double gamma) {

int i, j;

int shift = bpp - 8;

unsigned short tmp;

BYTE* dst = out_bytes;

BYTE* src = in_bytes;

WORD* srcWord = (WORD *) in_bytes;

if (GammaEna) {

src = in_bytes;

gammaCorrection(src, src, width, height, bpp, gamma);

}

if (bpp > 8) {

srcWord = (WORD *) in_bytes;

// convert 16bit bayer to 8bit bayer

for (i = 0; i < height; i++)

for (j = 0; j < width; j++) {

tmp = (*srcWord++) >> shift;

*dst++ = (BYTE) tmp;

*dst++ = (BYTE) tmp;

*dst++ = (BYTE) tmp;

}

} else {

for (i = 0; i < height; i++)

for (j = 0; j < width; j++) {

tmp = (*src++);

*dst++ = (BYTE) tmp;

*dst++ = (BYTE) tmp;

*dst++ = (BYTE) tmp;

}

}

return 0;

}

void convert_border_bayer_line_to_bgr24(uint8_t* bayer, uint8_t* adjacent_bayer,

uint8_t *bgr, int width, int start_with_green, int blue_line) {

int t0, t1;

if (start_with_green) {

/* First pixel */

if (blue_line) {

*bgr++ = bayer[1];

*bgr++ = bayer[0];

*bgr++ = adjacent_bayer[0];

} else {

*bgr++ = adjacent_bayer[0];

*bgr++ = bayer[0];

*bgr++ = bayer[1];

}

/* Second pixel */

t0 = (bayer[0] + bayer[2] + adjacent_bayer[1] + 1) / 3;

t1 = (adjacent_bayer[0] + adjacent_bayer[2] + 1) >> 1;

if (blue_line) {

*bgr++ = bayer[1];

*bgr++ = t0;

*bgr++ = t1;

} else {

*bgr++ = t1;

*bgr++ = t0;

*bgr++ = bayer[1];

}

bayer++;

adjacent_bayer++;

width -= 2;

} else {

/* First pixel */

t0 = (bayer[1] + adjacent_bayer[0] + 1) >> 1;

if (blue_line) {

*bgr++ = bayer[0];

*bgr++ = t0;

*bgr++ = adjacent_bayer[1];

} else {

*bgr++ = adjacent_bayer[1];

*bgr++ = t0;

*bgr++ = bayer[0];

}

width--;

}

if (blue_line) {

for (; width > 2; width -= 2) {

t0 = (bayer[0] + bayer[2] + 1) >> 1;

*bgr++ = t0;

*bgr++ = bayer[1];

*bgr++ = adjacent_bayer[1];

bayer++;

adjacent_bayer++;

t0 = (bayer[0] + bayer[2] + adjacent_bayer[1] + 1) / 3;

t1 = (adjacent_bayer[0] + adjacent_bayer[2] + 1) >> 1;

*bgr++ = bayer[1];

*bgr++ = t0;

*bgr++ = t1;

bayer++;

adjacent_bayer++;

}

} else {

for (; width > 2; width -= 2) {

t0 = (bayer[0] + bayer[2] + 1) >> 1;

*bgr++ = adjacent_bayer[1];

*bgr++ = bayer[1];

*bgr++ = t0;

bayer++;

adjacent_bayer++;

t0 = (bayer[0] + bayer[2] + adjacent_bayer[1] + 1) / 3;

t1 = (adjacent_bayer[0] + adjacent_bayer[2] + 1) >> 1;

*bgr++ = t1;

*bgr++ = t0;

*bgr++ = bayer[1];

bayer++;

adjacent_bayer++;

}

}

if (width == 2) {

/* Second to last pixel */

t0 = (bayer[0] + bayer[2] + 1) >> 1;

if (blue_line) {

*bgr++ = t0;

*bgr++ = bayer[1];

*bgr++ = adjacent_bayer[1];

} else {

*bgr++ = adjacent_bayer[1];

*bgr++ = bayer[1];

*bgr++ = t0;

}

/* Last pixel */

t0 = (bayer[1] + adjacent_bayer[2] + 1) >> 1;

if (blue_line) {

*bgr++ = bayer[2];

*bgr++ = t0;

*bgr++ = adjacent_bayer[1];

} else {

*bgr++ = adjacent_bayer[1];

*bgr++ = t0;

*bgr++ = bayer[2];

}

} else {

/* Last pixel */

if (blue_line) {

*bgr++ = bayer[0];

*bgr++ = bayer[1];

*bgr++ = adjacent_bayer[1];

} else {

*bgr++ = adjacent_bayer[1];

*bgr++ = bayer[1];

*bgr++ = bayer[0];

}

}

}

static void bayer_to_rgbbgr24(uint8_t *bayer,

uint8_t *bgr, int width, int height,

int start_with_green, int blue_line) {

/* render the first line */

convert_border_bayer_line_to_bgr24(bayer, bayer + width, bgr, width,

start_with_green, blue_line);

bgr += width * 3;

/* reduce height by 2 because of the special case top/bottom line */

for (height -= 2; height; height--) {

int t0, t1;

/* (width - 2) because of the border */

uint8_t *bayerEnd = bayer + (width - 2);

if (start_with_green) {

/* OpenCV has a bug in the next line, which was

t0 = (bayer[0] + bayer[width * 2] + 1) >> 1; */

t0 = (bayer[1] + bayer[width * 2 + 1] + 1) >> 1;

/* Write first pixel */

t1 = (bayer[0] + bayer[width * 2] + bayer[width + 1] + 1) / 3;

if (blue_line) {

*bgr++ = t0;

*bgr++ = t1;

*bgr++ = bayer[width];

} else {

*bgr++ = bayer[width];

*bgr++ = t1;

*bgr++ = t0;

}

/* Write second pixel */

t1 = (bayer[width] + bayer[width + 2] + 1) >> 1;

if (blue_line) {

*bgr++ = t0;

*bgr++ = bayer[width + 1];

*bgr++ = t1;

} else {

*bgr++ = t1;

*bgr++ = bayer[width + 1];

*bgr++ = t0;

}

bayer++;

} else {

/* Write first pixel */

t0 = (bayer[0] + bayer[width * 2] + 1) >> 1;

if (blue_line) {

*bgr++ = t0;

*bgr++ = bayer[width];

*bgr++ = bayer[width + 1];

} else {

*bgr++ = bayer[width + 1];

*bgr++ = bayer[width];

*bgr++ = t0;

}

}

if (blue_line) {

for (; bayer <= bayerEnd - 2; bayer += 2) {

t0 = (bayer[0] + bayer[2] + bayer[width * 2] +

bayer[width * 2 + 2] + 2) >> 2;

t1 = (bayer[1] + bayer[width] +

bayer[width + 2] + bayer[width * 2 + 1] +

2) >> 2;

*bgr++ = t0;

*bgr++ = t1;

*bgr++ = bayer[width + 1];

t0 = (bayer[2] + bayer[width * 2 + 2] + 1) >> 1;

t1 = (bayer[width + 1] + bayer[width + 3] +

1) >> 1;

*bgr++ = t0;

*bgr++ = bayer[width + 2];

*bgr++ = t1;

}

} else {

for (; bayer <= bayerEnd - 2; bayer += 2) {

t0 = (bayer[0] + bayer[2] + bayer[width * 2] +

bayer[width * 2 + 2] + 2) >> 2;

t1 = (bayer[1] + bayer[width] +

bayer[width + 2] + bayer[width * 2 + 1] +

2) >> 2;

*bgr++ = bayer[width + 1];

*bgr++ = t1;

*bgr++ = t0;

t0 = (bayer[2] + bayer[width * 2 + 2] + 1) >> 1;

t1 = (bayer[width + 1] + bayer[width + 3] +

1) >> 1;

*bgr++ = t1;

*bgr++ = bayer[width + 2];

*bgr++ = t0;

}

}

if (bayer < bayerEnd) {

/* write second to last pixel */

t0 = (bayer[0] + bayer[2] + bayer[width * 2] +

bayer[width * 2 + 2] + 2) >> 2;

t1 = (bayer[1] + bayer[width] +

bayer[width + 2] + bayer[width * 2 + 1] +

2) >> 2;

if (blue_line) {

*bgr++ = t0;

*bgr++ = t1;

*bgr++ = bayer[width + 1];

} else {

*bgr++ = bayer[width + 1];

*bgr++ = t1;

*bgr++ = t0;

}

/* write last pixel */

t0 = (bayer[2] + bayer[width * 2 + 2] + 1) >> 1;

if (blue_line) {

*bgr++ = t0;

*bgr++ = bayer[width + 2];

*bgr++ = bayer[width + 1];

} else {

*bgr++ = bayer[width + 1];

*bgr++ = bayer[width + 2];

*bgr++ = t0;

}

bayer++;

} else {

/* write last pixel */

t0 = (bayer[0] + bayer[width * 2] + 1) >> 1;

t1 = (bayer[1] + bayer[width * 2 + 1] + bayer[width] + 1) / 3;

if (blue_line) {

*bgr++ = t0;

*bgr++ = t1;

*bgr++ = bayer[width + 1];

} else {

*bgr++ = bayer[width + 1];

*bgr++ = t1;

*bgr++ = t0;

}

}

/* skip 2 border pixels */

bayer += 2;

blue_line = !blue_line;

start_with_green = !start_with_green;

}

/* render the last line */

convert_border_bayer_line_to_bgr24(bayer + width, bayer, bgr, width,

!start_with_green, !blue_line);

}

/*convert bayer raw data to rgb24

* args:

* pBay: pointer to buffer containing Raw bayer data

* pRGB24: pointer to buffer containing rgb24 data

* width: picture width

* height: picture height

* pix_order: bayer pixel order (0=gb/rg 1=gr/bg 2=bg/gr 3=rg/bg)

*/

void bayer_to_rgb24(uint8_t *pBay, uint8_t *pRGB24, int width, int height, int pix_order) {

switch (pix_order) {

/*conversion functions are build for bgr, by switching b and r lines we get rgb*/

case 0: /* gbgbgb... | rgrgrg... (V4L2_PIX_FMT_SGBRG8)*/

bayer_to_rgbbgr24(pBay, pRGB24, width, height, TRUE, FALSE);

break;

case 1: /* grgrgr... | bgbgbg... (V4L2_PIX_FMT_SGRBG8)*/

bayer_to_rgbbgr24(pBay, pRGB24, width, height, TRUE, TRUE);

break;

case 2: /* bgbgbg... | grgrgr... (V4L2_PIX_FMT_SBGGR8)*/

bayer_to_rgbbgr24(pBay, pRGB24, width, height, FALSE, FALSE);

break;

case 3: /* rgrgrg... ! gbgbgb... (V4L2_PIX_FMT_SRGGB8)*/

bayer_to_rgbbgr24(pBay, pRGB24, width, height, FALSE, TRUE);

break;

default: /* default is 0*/

bayer_to_rgbbgr24(pBay, pRGB24, width, height, TRUE, FALSE);

break;

}

}

===================

main

#include "handlers/menu/Menu.h"

#include "handlers/menu/MainMenu.h"

#include "handlers/Button.h"

#include "utils/ImageProcessor.h"

#include <opencv2/core/core.hpp>

#include <iostream>

#include <vector>

#include <unistd.h> // usleep

using namespace std;

using namespace cv;

int main(int argc, char* argv[]) {

// calibrate(argc, argv);

// exit(0);

ImageProcessor imageProcessor; // no parentheses: "imageProcessor()" would declare a function

while (1) {

imageProcessor.update();

usleep(10000);

}

}

The output looks like this, and no window is ever displayed:

done

done

Segmentation fault

Unfortunately, without a core file, I am not sure where it went south.

With your change to:

Mat matLow(matIn.rows, matIn.cols, CV_16UC1);

Mat matHigh(matIn.rows, matIn.cols, CV_16UC1);

I am not getting any windows displayed at all, but "done" is repeatedly printed to the screen.
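For reference, the bit arithmetic in the 16-bit version of the loop appears to treat every three source bytes as two packed 12-bit samples. Here is that unpacking step in isolation, as a sketch in plain C++. The function name `unpack12` is hypothetical, and whether the card really packs its data this way is an assumption taken from the suggested code, not something confirmed in this thread.

```cpp
#include <cstdint>
#include <utility>

// Unpacks three bytes into two 12-bit samples (each in the range 0..4095):
//   first  = b0 * 16 + (b1 >> 4)      // b0 is the high 8 bits, top nibble of b1 the low 4
//   second = (b1 & 0x0F) + b2 * 16    // low nibble of b1 is the low 4 bits, b2 the high 8
std::pair<uint16_t, uint16_t> unpack12(uint8_t b0, uint8_t b1, uint8_t b2) {
    const uint16_t first = static_cast<uint16_t>(b0 * 16 + (b1 >> 4));
    const uint16_t second = static_cast<uint16_t>((b1 & 0x0F) + b2 * 16);
    return {first, second};
}
```

Each result needs a 16-bit destination element. Writing `unsigned short` values through a pointer into a `CV_8UC1` row puts twice as many bytes into the row as it holds, which might explain the earlier segmentation fault; the `CV_16UC1` planes avoid that.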

Now I get the following images. (BTW, I had put a sleep(10) into the loop, but it did not work; waitKey(20); does.)

Unfortunately I am running on a Raspberry Pi. I don't have Visual Studio. I believe that the image is coming into the library correctly as that is what it looks like when I run it on my Windows PC with the standard camera app.

Here are the files:

I looked at the pictures again and I think I must have sent you two of the same. This time I also uploaded the images to make sure!

Here is the left one saturated and right black:

Left

Here is the right one saturated and the left black:

Right