Hello everyone,

I'm having trouble training an SVM, and I'm wondering whether it has to do with the size of each input vector. As usual, I'm computing some descriptors to pass to the classifier (vector<float> descriptors). Everything goes fine (the SVM trains without problems) until the vector gets too big (around 220000 elements; that's just an approximation, I haven't found the exact threshold yet), at which point an exception is thrown (an error at some memory location).

So... is there any known maximum size for those input vectors? I haven't found anything in the OpenCV docs, nor in the LibSVM docs (the OpenCV implementation is based on it).

Just to clarify: 1. the exception does not indicate running out of memory, there's plenty of memory available, and 2. I'm using OpenCV 2.4.10 on a 64-bit Windows 7 computer with 16GB RAM.

UPDATE 1:

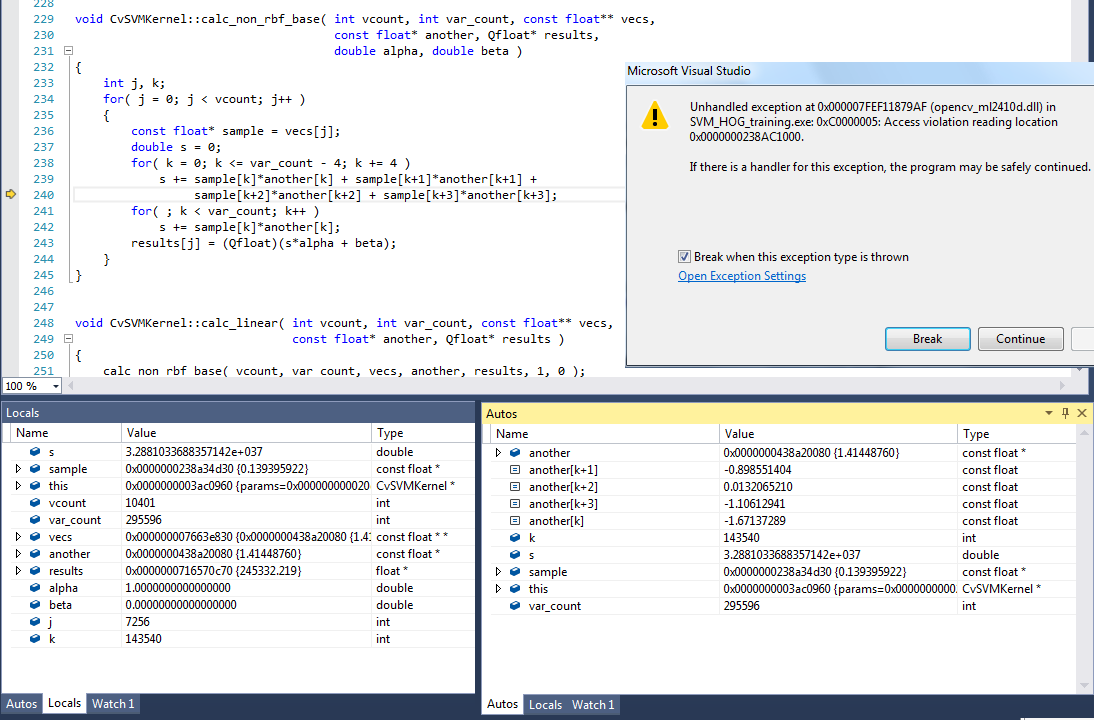

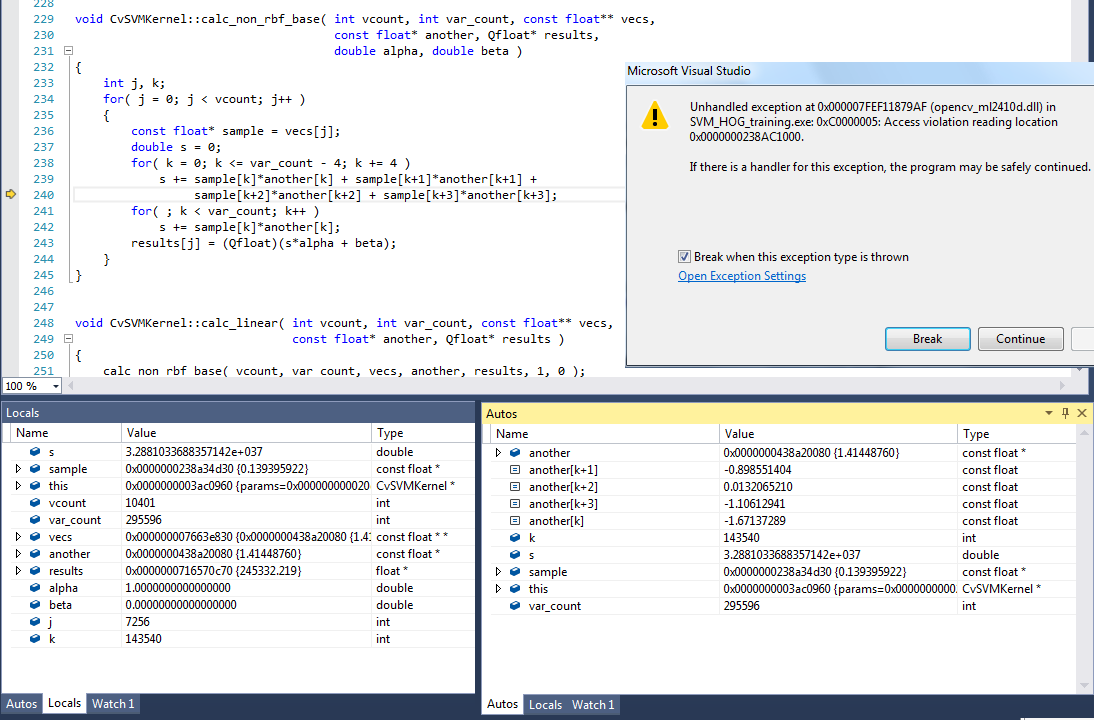

I've been debugging the program and the error arises in the calc_non_rbf_base function in svm.cpp. I still don't know the reason for the crash. Something seems to be going wrong at a low level, but my skills at that level are not enough to understand what's going on.

I'm posting one screen capture with the values of all variables at the exception point, and another with the exception error, as I think it's the best way to show all the relevant info. It seems something goes wrong while accessing the samples variable.

More details about my code:

- The capture was taken while using 10400 samples, each represented by a descriptor of size 295596. However, as you can see, the exception arises while processing sample number 7256 (j variable) and component number 143540 (k variable). That's quite interesting and unexpected, as I have successfully trained the SVM using 10400 samples x ~185000 descriptor size.

- I've successfully trained an SVM with dummy data of size 50 samples x 1,000,000 descriptor size. So I guess it has to do not only with the descriptor size but with a combination of both sizes.

- Using OpenCV 2.4.10. I haven't tested the issue with 2.4.11 or 3.0.0.

I really hope some of the gurus of the forum can take a look and give some insights on the issue, because it could be a quite huge hiccup in my research. Of course, ask whatever extra info you want. I'll be incredibly thankful for all help

(EDIT: changed title of question to better address issue)

UPDATE 2:

I've been doing more tests and I've come across new findings. First of all, using the same code as in Update 1, I tested different lengths of the feature vector (all with 10401 samples), with these results (screenshots available if someone needs them):

- Features length 295596 -> crashes at j variable = 7256

- Features length 238140 -> crashes at j variable = 9008

- Features length 220000 -> crashes at j variable = 9752

- Features length 210000 -> crashes at j variable = 10217

- Features length 208000 -> crashes at j variable = 10315

- Features length 205000 -> no crash, training goes on

There's clearly some kind of tradeoff between the number of samples and the length of the features per sample. I tested both with my own original data and with randomly generated data, with the same results (so the error is independent of the data values).
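To put a number on that tradeoff, here is a minimal sketch that multiplies each feature length by the j at which the crash occurred, using only the values listed above. The struct and function names are hypothetical, and the comparison against the largest 32-bit signed integer is just an assumption about what might be worth checking, not something confirmed in svm.cpp.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>
#include <vector>

// One observation from the list above: feature length and the sample
// index j at which the crash occurred. (Hypothetical helper type.)
struct CrashPoint {
    std::int64_t featureLength;
    std::int64_t crashSample;
};

// Number of float elements that precede sample j if the samples were laid
// out row by row. 64-bit arithmetic keeps this product from overflowing.
std::int64_t elementsBeforeCrash(const CrashPoint& p) {
    assert(p.featureLength >= 0 && p.crashSample >= 0);
    return p.featureLength * p.crashSample;
}

// Distance between that product and the largest 32-bit signed integer.
// Assumption: this limit is only a candidate explanation, not a verified cause.
std::int64_t marginBelowInt32Max(const CrashPoint& p) {
    const std::int64_t int32Max = std::numeric_limits<std::int32_t>::max();
    return int32Max - elementsBeforeCrash(p);
}

// Values copied from the test results listed in this post.
std::vector<CrashPoint> observedCrashes() {
    return std::vector<CrashPoint>{
        {295596, 7256},
        {238140, 9008},
        {220000, 9752},
        {210000, 10217},
        {208000, 10315},
    };
}
```

Calling `elementsBeforeCrash` on each entry of `observedCrashes()` gives products between roughly 2.1448e9 and 2.1456e9, all less than 3 million below 2^31 - 1, while the non-crashing 205000 x 10401 case totals 2,132,205,000. Whether that limit is actually related to the crash is unverified.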

So the next thing I did was to create standalone code so that others can easily reproduce the error. Then I tested with length 208000 and... the program crashed at j=5125!!! This variation made no sense at all, so I tried to figure out where the difference could be and... major finding: the error depends on the proportion of positive and negative samples in the dataset. That means that changing that proportion changes the j at which the program crashes, if it crashes at all. For example, using a 10401x206000 dataset, the program crashes when there is a 50-50 proportion, but does not crash when the number of positive samples is just 10401/40. I have also verified that changing the label values does not affect the outcome (using pairs such as (0,1), (-1,1), (0,2)).

I'm extremely puzzled, to say the least. I'd appreciate it if someone could test and reproduce the issue. Some code to do so can be found here (needs a minimum of 12GB RAM). As I don't know whether this is OS/version dependent, it might be necessary to play around a little with the numbers to reproduce the error. If someone can reproduce this, I'll open a bug ticket.
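For anyone who wants to vary the positive/negative proportion themselves, here is a minimal sketch of the dataset setup described above. It is not the linked code: `makeLabels` and `sampleMatrixBytes` are hypothetical helpers, it uses only the standard library, and putting the positive labels first is an arbitrary assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: builds numSamples labels where the first numPositive
// entries get posLabel and the rest get negLabel. The ordering (positives
// first) is an assumption, not taken from the original test code.
std::vector<float> makeLabels(std::size_t numSamples, std::size_t numPositive,
                              float negLabel, float posLabel) {
    assert(numPositive <= numSamples);
    std::vector<float> labels(numSamples, negLabel);
    for (std::size_t i = 0; i < numPositive; ++i) {
        labels[i] = posLabel;
    }
    return labels;
}

// Raw size in bytes of a dense numSamples x featureLength matrix of floats.
// This counts the sample data only, not any working memory used in training.
std::uint64_t sampleMatrixBytes(std::uint64_t numSamples,
                                std::uint64_t featureLength) {
    return numSamples * featureLength * sizeof(float);
}
```

For example, `makeLabels(10401, 10401 / 2, -1.0f, 1.0f)` gives the roughly 50-50 case and `makeLabels(10401, 10401 / 40, -1.0f, 1.0f)` gives the case with few positives, while `sampleMatrixBytes(10401, 206000)` shows why the test needs a machine with plenty of RAM.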