I have been trying to port some Python code on GitHub for iris recognition to OpenCV in C++ for the past few days. The port has gone well so far, but now I am stuck.

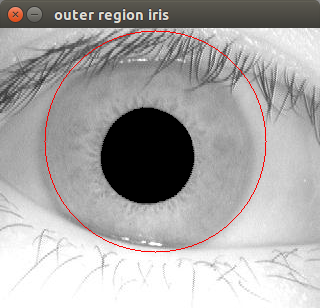

On his test image, the original author gets a pretty decent result:

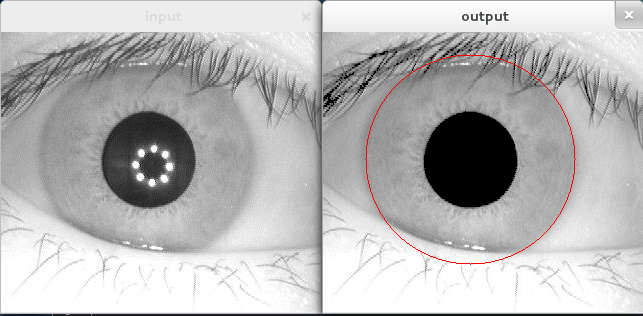

However, I get the result shown after the code below.

This is the code I am using:

```cpp
// -----------------------------------
// STEP 2: find the iris outer contour
// -----------------------------------
// Detect iris outer border
// Apply a Canny edge filter to look for borders,
// then clean it a bit with a smoothing filter to reduce noise
Mat blacked_canny, preprocessed;
Canny(blacked_pupil, blacked_canny, 5, 70, 3);
GaussianBlur(blacked_canny, preprocessed, Size(7,7), 0, 0);

// Now run a set of HoughCircles detections with different parameters.
// We increase the accumulator threshold (param2) until a single circle is left
// and take that one for granted.
int i = 80;
Vec3f found_circle(0, 0, 0);
bool circle_found = false;   // guards against drawing an uninitialised circle
while (i < 151){
    vector< Vec3f > storage;
    // If you use other data than the database provided, tweaking of these parameters will be necessary
    HoughCircles(preprocessed, storage, CV_HOUGH_GRADIENT, 2, 100.0, 30, i, 100, 140);
    if (storage.size() == 1){
        found_circle = storage[0];
        circle_found = true;
        break;
    }
    i++;
}

// Now draw the outer circle of the iris.
// For that we need a 3-channel BGR image, else we cannot draw in color,
// so copy the grayscale image into all three channels.
Mat blacked_c(blacked_pupil.rows, blacked_pupil.cols, CV_8UC3);
Mat in[] = { blacked_pupil, blacked_pupil, blacked_pupil };
int from_to[] = { 0,0, 1,1, 2,2 };
mixChannels( in, 3, &blacked_c, 1, from_to, 3 );

if (circle_found)
    circle(blacked_c, Point(cvRound(found_circle[0]), cvRound(found_circle[1])),
           cvRound(found_circle[2]), Scalar(0,0,255), 1);

imshow("outer region iris", blacked_c); waitKey(0);
```

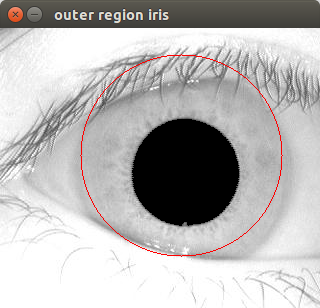

Getting the black pupil area was no problem and works flawlessly. I am guessing it goes wrong when detecting the edges with the Hough transform. I like the idea of increasing the accumulator threshold until a single circle remains, but my result does not match his.
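To make the "increase the threshold until one circle remains" loop easier to inspect, it can be pulled out into a small function. This is only a sketch, assuming C++17 for `std::optional`; the `Circle` struct and `findSingleCircle` are hypothetical names, and `detect` stands for a wrapper that would call `HoughCircles` with `param2 = threshold`. It keeps the same 80..150 range as the loop above and returns `std::nullopt` instead of leaving the circle uninitialised when no threshold yields exactly one circle.

```cpp
#include <functional>
#include <optional>
#include <vector>

// Hypothetical circle type: centre (x, y) and radius, same layout as cv::Vec3f.
struct Circle {
    float x, y, r;
};

// Calls `detect` with an increasing accumulator threshold in [first, last]
// and returns the first result that contains exactly one circle.
// `detect` is an assumed wrapper; in the real program it would run
// HoughCircles on the preprocessed image with param2 = threshold.
// Returns std::nullopt if no threshold in the range gives a single circle.
std::optional<Circle> findSingleCircle(
    const std::function<std::vector<Circle>(int)>& detect,
    int first = 80, int last = 150)
{
    for (int threshold = first; threshold <= last; ++threshold) {
        std::vector<Circle> circles = detect(threshold);
        if (circles.size() == 1) {
            return circles.front();  // first threshold with a unique circle
        }
    }
    return std::nullopt;  // count went from several straight to zero, or never reached one
}
```

Keeping the detector separate from the search makes it easy to log how many circles each threshold produces. That may help show whether the count jumps from several circles straight to none, in which case the original loop never finds a single circle and `found_circle` is never set.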

Does anyone have an idea how I could improve the result?