Hello,

I am working on dense reconstruction using multiview geometry, based heavily on this book:

ftp://vista.eng.tau.ac.il/dropbox/aviad/Hartley,%20Zisserman%20-%20Multiple%20View%20Geometry%20in%20Computer%20Vision.pdf

Currently, I have a sequence of 7 images from a calibrated camera. Across this sequence, all of the features have been matched and triangulated to create a point cloud. In creating the point cloud, we have generated a rotation matrix and a translation vector for each view. This information yields a fuzzy point cloud when visualized with OpenGL.

(Original, view 1 with points, view 2 with points, addWeighted blend of view 1 and view 2 after warpPerspective with the homography)

Next, I used the LevMarqSparse bundle adjuster to optimise the point cloud and camera matrices. When displayed in OpenGL, the result is a much cleaner point cloud.

Now we want to create a dense reconstruction of the scene. With all of the information available, I can find the fundamental matrices between views and the associated homographies. With a perspective transform, I can remove the rotation from one frame to the next. The x or y translation can be suppressed by setting the appropriate rotation matrix value to 0.
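For reference, here is a minimal sketch of how a fundamental matrix can be derived from two of the bundle-adjusted poses. It assumes each view's rotation and translation map world points into the camera frame (x = K(RX + t)) and that all views share one intrinsic matrix K. The function names `skew` and `fundamental_from_poses` are illustrative, not part of any library.

```python
import numpy as np


def skew(t):
    """Return the 3x3 cross-product matrix [t]_x of a 3-vector."""
    tx, ty, tz = t
    return np.array([[0.0, -tz, ty],
                     [tz, 0.0, -tx],
                     [-ty, tx, 0.0]])


def fundamental_from_poses(K, R1, t1, R2, t2):
    """Fundamental matrix F with x2^T F x1 = 0 for two calibrated views.

    Assumes world-to-camera poses: x_i = K (R_i X + t_i), with t_i of shape (3,).
    The two camera centres must differ, otherwise F is the zero matrix.
    """
    # Relative motion taking view-1 camera coordinates to view-2 coordinates.
    R_rel = R2 @ R1.T
    t_rel = t2 - R_rel @ t1

    # Essential matrix, then undo the calibration on both sides.
    E = skew(t_rel) @ R_rel
    K_inv = np.linalg.inv(K)
    F = K_inv.T @ E @ K_inv

    # F is only defined up to scale; normalise for numerical convenience.
    return F / np.linalg.norm(F)
```

A quick sanity check is to project one triangulated point into both views and confirm that x2^T F x1 is close to zero.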

So far I have attempted the following.

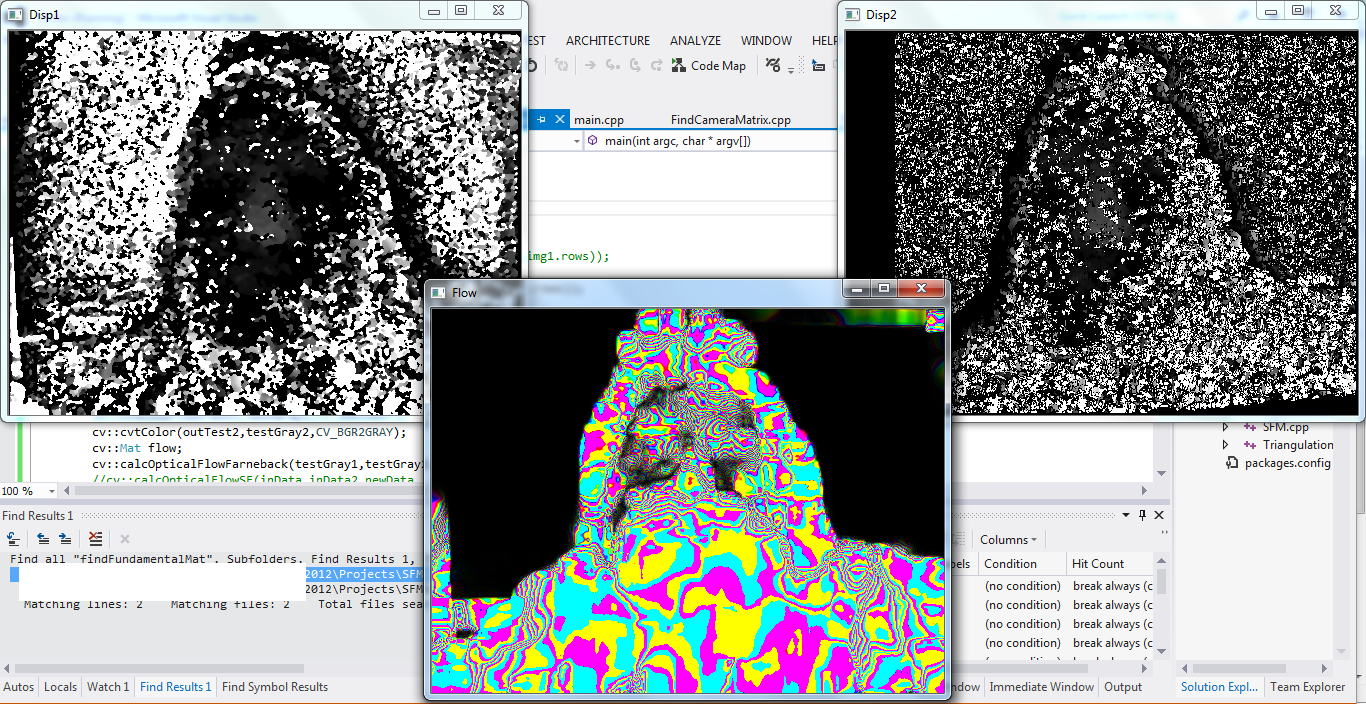

1) Using the homography, aligned the images for stereo matching with StereoBM and StereoSGBM.

2) Ran stereo matching for many ndisparities and window sizes.

3) Ran dense optical flow on the original aligned images to get the global motion.

We have the overall movement in the scene and the position of the frames relative to one another.

Any pointers on what I should be doing here? This part seems more than possible, but there is very little material available to help with the techniques.