Hi all,

I am using the ROS Mapstitch package, which is based on OpenCV, to find the transformation between maps (grayscale images of the same size and resolution) so that I can combine them.

The implementation is based on OpenCV's estimateRigidTransform function, ORB feature extraction, and matching by the distance between pairwise feature candidates.
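To make the last step concrete, here is a minimal plain-Python sketch of fitting a rigid transform to already-matched keypoint pairs. It assumes rotation plus translation only and a plain least-squares fit with no outlier rejection; it is not the OpenCV or Mapstitch code, and `fit_rigid_2d` is a hypothetical name used only for illustration.

```python
import math

def fit_rigid_2d(src, dst):
    """Least-squares rotation + translation mapping src points onto dst points.

    src and dst are equal-length lists of (x, y) pairs, where src[i] is
    assumed to be matched to dst[i]. Returns (theta, tx, ty).
    """
    n = len(src)
    if n < 2 or n != len(dst):
        raise ValueError("need at least two matched point pairs")

    # Centroids of both point sets.
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n

    # Accumulate dot and cross products of the centred pairs.
    dot = 0.0
    cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay = ax - sx, ay - sy
        bx, by = bx - dx, by - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx

    # Rotation angle that best aligns the centred sets.
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)

    # Translation that maps the rotated source centroid onto the destination centroid.
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty
```

Because every pair contributes to the sums, a fit like this is pulled away from the true transform by wrong matches, e.g. `fit_rigid_2d([(0, 0), (1, 0), (0, 1)], [(2, 3), (2, 4), (1, 3)])` recovers the transform only when all three pairs really correspond.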

For my input files the result from estimateRigidTransform is not correct.

Does anybody have a suggestion, or know of any other existing method, to improve the result?

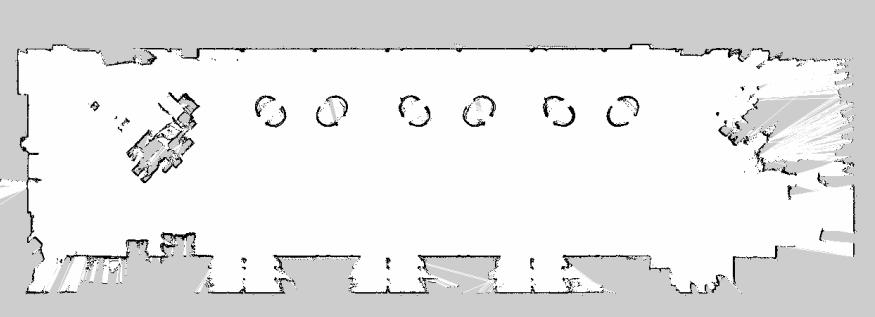

input image 1

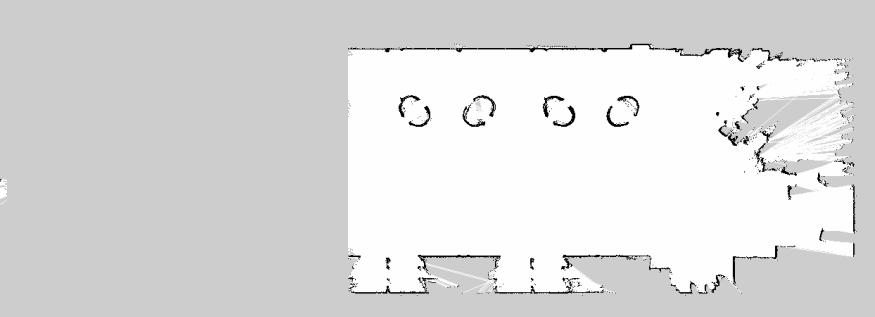

input image 2

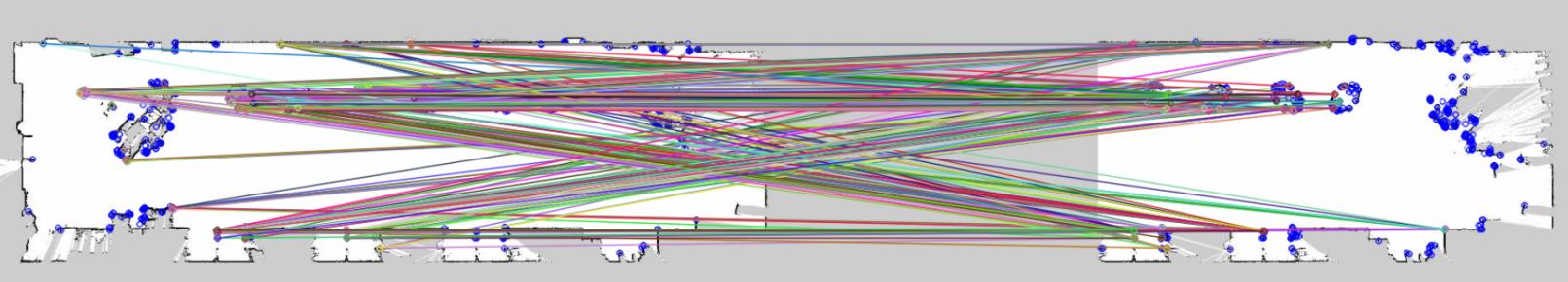

keypoint matching

I can see that keypoints are being matched to the wrong locations. In the ideal case, a keypoint on the upper wall should match a keypoint on the upper wall.

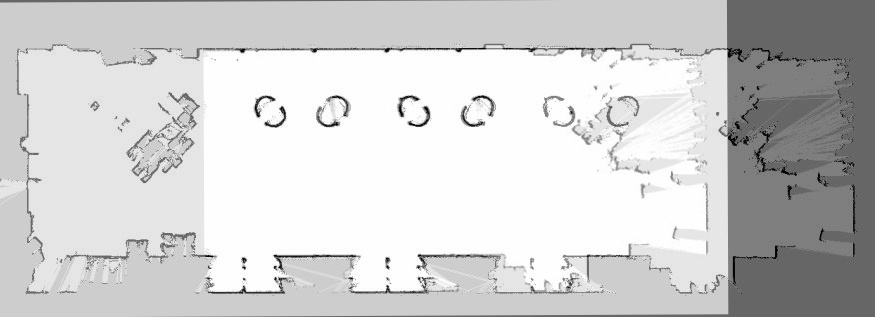

result

Thanks.