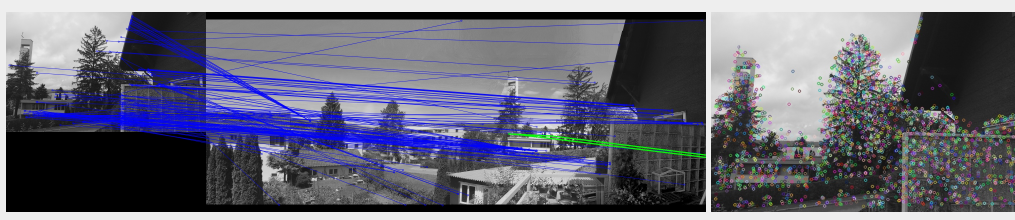

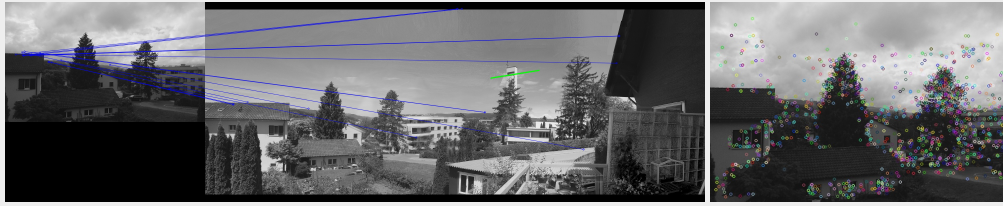

I'm trying to use features2d SURF to detect a given image (a video frame) in a bigger panorama image. But either SURF isn't suitable for this task or I couldn't use it correctly. What I get as output are images that look like this:

which seems pretty poor to me. I did filter only the good matches. I used this tutorial: homography tutorial

Along with this Java code from another question: homography tutorial in Java

To explain my code: panoramaImage is the big image I want to match every video frame against, and smallMats is a List of Mats holding the video frames.

Now my question: (1) Is anything wrong with my code (besides it being ugly in places), or (2) is SURF just not good for this task? If (2), please suggest alternatives :)

Your answers are greatly appreciated, thanks!

Isa

```java
import java.util.ArrayList;
import java.util.LinkedList;
import java.util.List;

import org.opencv.calib3d.Calib3d;
import org.opencv.core.Core;
import org.opencv.core.CvType;
import org.opencv.core.Mat;
import org.opencv.core.MatOfByte;
import org.opencv.core.MatOfDMatch;
import org.opencv.core.MatOfKeyPoint;
import org.opencv.core.MatOfPoint2f;
import org.opencv.core.Point;
import org.opencv.core.Scalar;
import org.opencv.features2d.DMatch;     // OpenCV 2.4.x package (org.opencv.core in 3.x+)
import org.opencv.features2d.Features2d;
import org.opencv.features2d.KeyPoint;   // OpenCV 2.4.x package (org.opencv.core in 3.x+)

// Defined elsewhere in my class (not shown): log, TAG, videoSize, panoramaImage, smallMats,
// SURFfeatureDetector, SURFextractor, FLANNmatcher, SURFPointedPanoramaImage,
// SURFPointedSmallImages, matchedBigMatsSURF, locationsSURFMatch, clearList(), Vector2.

log.append(TAG + "----Sampling using SURF algo-----" + "\n");

// 1. Detect SURF keypoints in the panorama and in every video frame
log.append(TAG + "----Detecting SURF keypoints-----" + "\n");
MatOfKeyPoint keypointsPanorama = new MatOfKeyPoint();
List<MatOfKeyPoint> keypointsVideo = new ArrayList<MatOfKeyPoint>(); // indexed access below
SURFfeatureDetector.detect(panoramaImage, keypointsPanorama);
for (int i = 0; i < videoSize; i++) {
    MatOfKeyPoint keypoints = new MatOfKeyPoint();
    SURFfeatureDetector.detect(smallMats.get(i), keypoints);
    keypointsVideo.add(keypoints);
}

// 2. Draw the keypoints (debug output only)
log.append(TAG + "----Drawing SURF keypoints-----" + "\n");
SURFPointedPanoramaImage.release();
Features2d.drawKeypoints(panoramaImage, keypointsPanorama, SURFPointedPanoramaImage);
clearList(SURFPointedSmallImages);
for (int i = 0; i < videoSize; i++) {
    Mat out = new Mat();
    Features2d.drawKeypoints(smallMats.get(i), keypointsVideo.get(i), out);
    SURFPointedSmallImages.add(out);
}

// 3. Compute SURF descriptors for the detected keypoints
log.append(TAG + "----Extracting SURF keypoints-----" + "\n");
Mat descriptorPanorama = new Mat();
List<Mat> descriptorVideo = new ArrayList<Mat>();
SURFextractor.compute(panoramaImage, keypointsPanorama, descriptorPanorama);
for (int i = 0; i < videoSize; i++) {
    Mat descriptorVid = new Mat();
    SURFextractor.compute(smallMats.get(i), keypointsVideo.get(i), descriptorVid);
    descriptorVideo.add(descriptorVid);
}

// 4. Match each frame (query) against the panorama (train), keep only good matches
log.append(TAG + "----Matching SURF keypoints-----" + "\n");
List<MatOfDMatch> matches = new ArrayList<MatOfDMatch>();
for (int i = 0; i < videoSize; i++) {
    MatOfDMatch match = new MatOfDMatch();
    FLANNmatcher.match(descriptorVideo.get(i), descriptorPanorama, match);
    List<DMatch> matchesList = match.toList();
    match.release();

    double max_dist = 0.0;
    double min_dist = 100.0;
    for (DMatch m : matchesList) {
        min_dist = Math.min(min_dist, m.distance);
        max_dist = Math.max(max_dist, m.distance);
    }
    log.append(TAG + "-- Extracting good matches from video object nr: " + i + "\n");
    log.append(TAG + "-- Max dist : " + max_dist + "\n");
    log.append(TAG + "-- Min dist : " + min_dist + "\n");

    // Keep matches whose distance is below 3 * min_dist
    List<DMatch> good_matches = new LinkedList<DMatch>();
    for (DMatch m : matchesList) {
        if (m.distance < 3 * min_dist) {
            good_matches.add(m);
        }
    }
    MatOfDMatch goodMatch = new MatOfDMatch();
    goodMatch.fromList(good_matches);
    matches.add(goodMatch);
}

// 5. Estimate a homography per frame, draw the frame outline, store its centre
log.append(TAG + "----Drawing SURF matches-----" + "\n");
clearList(matchedBigMatsSURF);
locationsSURFMatch.clear();
for (int i = 0; i < videoSize; i++) {
    Mat frame = smallMats.get(i);
    Mat img_matches = new Mat();
    // flag 2 = NOT_DRAW_SINGLE_POINTS
    Features2d.drawMatches(frame, keypointsVideo.get(i), panoramaImage, keypointsPanorama,
            matches.get(i), img_matches, new Scalar(255, 0, 0), new Scalar(0, 0, 255),
            new MatOfByte(), 2);

    // queryIdx indexes the frame keypoints, trainIdx the panorama keypoints
    List<KeyPoint> keypoints_objectList = keypointsVideo.get(i).toList();
    List<KeyPoint> keypoints_sceneList = keypointsPanorama.toList();
    List<Point> objList = new ArrayList<Point>();
    List<Point> sceneList = new ArrayList<Point>();
    for (DMatch m : matches.get(i).toList()) {
        objList.add(keypoints_objectList.get(m.queryIdx).pt);
        sceneList.add(keypoints_sceneList.get(m.trainIdx).pt);
    }
    MatOfPoint2f obj = new MatOfPoint2f();
    obj.fromList(objList);
    MatOfPoint2f scene = new MatOfPoint2f();
    scene.fromList(sceneList);

    // RANSAC (3 px reprojection threshold) rejects outlier matches; the two-argument
    // overload uses all point pairs, so wrong matches distort the result.
    // findHomography needs at least 4 point pairs.
    Mat hg = Calib3d.findHomography(obj, scene, Calib3d.RANSAC, 3);

    // Project the four frame corners into the panorama
    Mat obj_corners = new Mat(4, 1, CvType.CV_32FC2);
    Mat scene_corners = new Mat(4, 1, CvType.CV_32FC2);
    obj_corners.put(0, 0, new double[] { 0, 0 });
    obj_corners.put(1, 0, new double[] { frame.cols(), 0 });
    obj_corners.put(2, 0, new double[] { frame.cols(), frame.rows() });
    obj_corners.put(3, 0, new double[] { 0, frame.rows() });
    Core.perspectiveTransform(obj_corners, scene_corners, hg);

    Point[] corners = new Point[4];
    double x = 0, y = 0;
    for (int k = 0; k < 4; k++) {
        corners[k] = new Point(scene_corners.get(k, 0));
        x += corners[k].x;
        y += corners[k].y;
    }
    // Centroid (vertex average) in panorama coordinates: the sum must be divided by 4
    x /= 4;
    y /= 4;

    // drawMatches puts the frame on the left, so shift panorama points right by its width
    for (int k = 0; k < 4; k++) {
        Point a = corners[k];
        Point b = corners[(k + 1) % 4];
        Core.line(img_matches, new Point(a.x + frame.cols(), a.y),
                new Point(b.x + frame.cols(), b.y), new Scalar(0, 255, 0), 4);
    }

    obj.release();
    scene.release();
    hg.release();
    obj_corners.release();
    scene_corners.release();

    locationsSURFMatch.add(new Vector2((int) x, (int) y));
    matchedBigMatsSURF.add(img_matches);
}
```
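On the good-match filter: `distance < 3 * min_dist` depends entirely on the single best match. If `min_dist` is 0 (an exact descriptor match), nothing passes, and in a panorama with repetitive texture a small distance does not by itself mean a correct match. A common alternative is Lowe's ratio test, which keeps a match only when its nearest neighbour is clearly closer than the second-nearest; in the OpenCV Java API both distances can come from `DescriptorMatcher.knnMatch(query, train, matchList, 2)`. The sketch below is a hypothetical pure-Java helper (`MatchFilters` is not an OpenCV class) working on plain distance arrays so it runs without OpenCV. Its minimum-distance variant uses `<=` plus a floor value, and both the floor and the 0.75 ratio in the usage are illustrative assumptions (values around 0.7 to 0.8 are typical for the ratio).

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical illustration of two match filters on plain distance arrays (not OpenCV code). */
final class MatchFilters {
    private MatchFilters() {}

    /**
     * Minimum-distance filter with a floor: keeps index j if dist[j] <= max(3 * minDist, floor).
     * Without the floor, minDist == 0 collapses the threshold to 0.
     */
    static List<Integer> minDistanceFilter(float[] dist, float floor) {
        float minDist = Float.MAX_VALUE;
        for (float d : dist) {
            minDist = Math.min(minDist, d);
        }
        float threshold = Math.max(3 * minDist, floor);
        List<Integer> kept = new ArrayList<>();
        for (int j = 0; j < dist.length; j++) {
            if (dist[j] <= threshold) {
                kept.add(j);
            }
        }
        return kept;
    }

    /**
     * Lowe's ratio test: keeps query j only if best[j] < ratio * second[j], where best and
     * second are the distances to the two nearest train descriptors (a k = 2 search).
     */
    static List<Integer> ratioTest(float[] best, float[] second, float ratio) {
        List<Integer> kept = new ArrayList<>();
        for (int j = 0; j < best.length; j++) {
            if (best[j] < ratio * second[j]) {
                kept.add(j);
            }
        }
        return kept;
    }
}
```

For example, with best distances `{0.1, 0.3}` and second-best `{0.5, 0.32}`, a ratio of 0.75 keeps only the first query: its nearest neighbour is unambiguous, while the second one's two candidates are almost equally close.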
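The final step projects the four frame corners with `Core.perspectiveTransform` and averages them. The sketch below reproduces that arithmetic in plain Java so it can be checked by hand: for a 3x3 homography H stored row-major as h0..h8, a point (x, y) maps to ((h0 x + h1 y + h2) / w, (h3 x + h4 y + h5) / w) with w = h6 x + h7 y + h8, and the centroid of the four corners (their vertex average) is the coordinate sum divided by 4. It also includes a convexity check, a common heuristic rather than anything OpenCV does for you: a video frame seen in the panorama should map to a convex quadrilateral, so a self-intersecting or degenerate outline suggests the homography came from bad matches. `QuadGeometry` and its methods are hypothetical helpers.

```java
/** Hypothetical helpers mirroring the corner projection and centroid step (not OpenCV code). */
final class QuadGeometry {
    private QuadGeometry() {}

    /** Applies a row-major 3x3 homography h to (x, y), dividing by the homogeneous w. */
    static double[] project(double[] h, double x, double y) {
        double w = h[6] * x + h[7] * y + h[8];
        return new double[] {
            (h[0] * x + h[1] * y + h[2]) / w,
            (h[3] * x + h[4] * y + h[5]) / w
        };
    }

    /** Projects the frame corners in the same order as obj_corners: TL, TR, BR, BL. */
    static double[][] projectCorners(double[] h, int cols, int rows) {
        double[][] src = { {0, 0}, {cols, 0}, {cols, rows}, {0, rows} };
        double[][] out = new double[4][];
        for (int k = 0; k < 4; k++) {
            out[k] = project(h, src[k][0], src[k][1]);
        }
        return out;
    }

    /** Vertex average: coordinate sums divided by the number of corners (4). */
    static double[] vertexCentroid(double[][] quad) {
        double sx = 0, sy = 0;
        for (double[] p : quad) {
            sx += p[0];
            sy += p[1];
        }
        return new double[] { sx / quad.length, sy / quad.length };
    }

    /**
     * For a quadrilateral: true if all four turns along the outline have the same non-zero
     * direction, i.e. the outline is convex and not self-intersecting.
     */
    static boolean isConvex(double[][] quad) {
        int sign = 0;
        for (int k = 0; k < quad.length; k++) {
            double[] a = quad[k];
            double[] b = quad[(k + 1) % quad.length];
            double[] c = quad[(k + 2) % quad.length];
            double cross = (b[0] - a[0]) * (c[1] - b[1]) - (b[1] - a[1]) * (c[0] - b[0]);
            if (cross == 0) {
                return false; // three collinear corners: degenerate outline
            }
            int s = cross > 0 ? 1 : -1;
            if (sign == 0) {
                sign = s;
            } else if (s != sign) {
                return false;
            }
        }
        return true;
    }
}
```

With H = scale 2 plus translation (100, 50) and a 320x240 frame, the corners land at (100, 50), (740, 50), (740, 530), (100, 530) and the centroid is (420, 290). Summing without dividing would report (1680, 1160), far outside the projected frame.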
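On the homography estimate itself: `Calib3d.findHomography(obj, scene)` with no method argument uses every point pair, with no outlier rejection, so each wrong match that survives filtering pulls the result. The overload `Calib3d.findHomography(obj, scene, Calib3d.RANSAC, 3)` instead looks for the model supported by the most pairs within a reprojection threshold (3 px here is an assumed value). The sketch below illustrates the RANSAC idea on a deliberately simplified model, a pure translation (dx, dy), so it stays short and runs without OpenCV; a real homography needs 4-point minimal samples and a linear solve per iteration. `TranslationRansac` is a hypothetical class, not the algorithm inside OpenCV.

```java
import java.util.Random;

/** Hypothetical illustration of RANSAC with a pure-translation model (not OpenCV code). */
final class TranslationRansac {
    private TranslationRansac() {}

    /** True if s shifted by (dx, dy) lands within threshold of d. */
    private static boolean isInlier(double[] s, double[] d, double dx, double dy, double threshold) {
        double ex = s[0] + dx - d[0];
        double ey = s[1] + dy - d[1];
        return ex * ex + ey * ey <= threshold * threshold;
    }

    /**
     * Hypothesise a translation from one random pair, count the pairs that agree with it,
     * keep the best hypothesis, then refit on its inliers. Returns {dx, dy, inlierCount}.
     * Requires src.length > 0, iterations > 0 and threshold > 0.
     */
    static double[] estimate(double[][] src, double[][] dst, double threshold, int iterations, long seed) {
        Random rng = new Random(seed);
        int bestCount = -1;
        double bestDx = 0, bestDy = 0;
        for (int it = 0; it < iterations; it++) {
            int k = rng.nextInt(src.length); // minimal sample: one pair fixes a translation
            double dx = dst[k][0] - src[k][0];
            double dy = dst[k][1] - src[k][1];
            int count = 0;
            for (int j = 0; j < src.length; j++) {
                if (isInlier(src[j], dst[j], dx, dy, threshold)) {
                    count++;
                }
            }
            if (count > bestCount) {
                bestCount = count;
                bestDx = dx;
                bestDy = dy;
            }
        }
        // Refit: mean offset over the inliers of the best hypothesis
        double sx = 0, sy = 0;
        int n = 0;
        for (int j = 0; j < src.length; j++) {
            if (isInlier(src[j], dst[j], bestDx, bestDy, threshold)) {
                sx += dst[j][0] - src[j][0];
                sy += dst[j][1] - src[j][1];
                n++;
            }
        }
        return new double[] { sx / n, sy / n, n };
    }

    /** Least squares over all pairs, no outlier rejection: for a translation, the mean offset. */
    static double[] leastSquares(double[][] src, double[][] dst) {
        double sx = 0, sy = 0;
        for (int j = 0; j < src.length; j++) {
            sx += dst[j][0] - src[j][0];
            sy += dst[j][1] - src[j][1];
        }
        return new double[] { sx / src.length, sy / src.length };
    }
}
```

With 8 correct pairs offset by (100, 50) and 3 wrong pairs with offsets (-300, 20), (500, -400) and (7, 900), `estimate` should return (100, 50) with 8 inliers, while `leastSquares` is pulled to about (91.5, 83.6). The same effect, in more dimensions, is what a few bad SURF matches do to the non-RANSAC homography.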