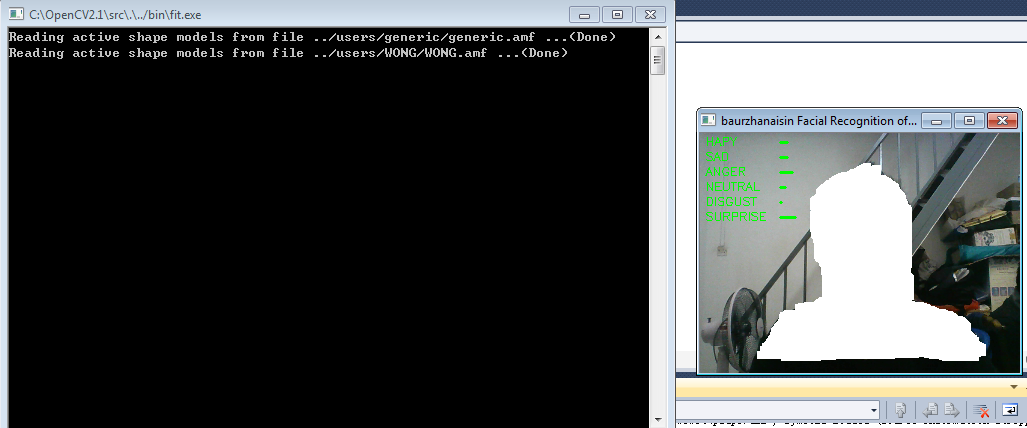

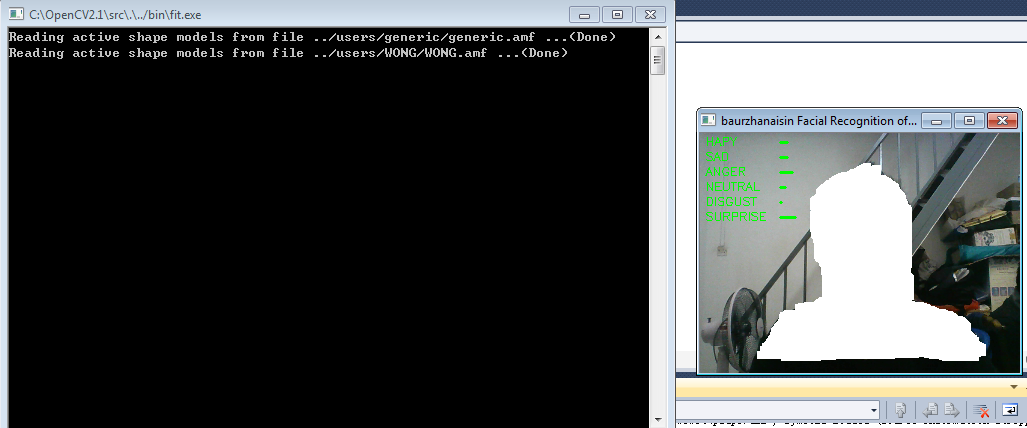

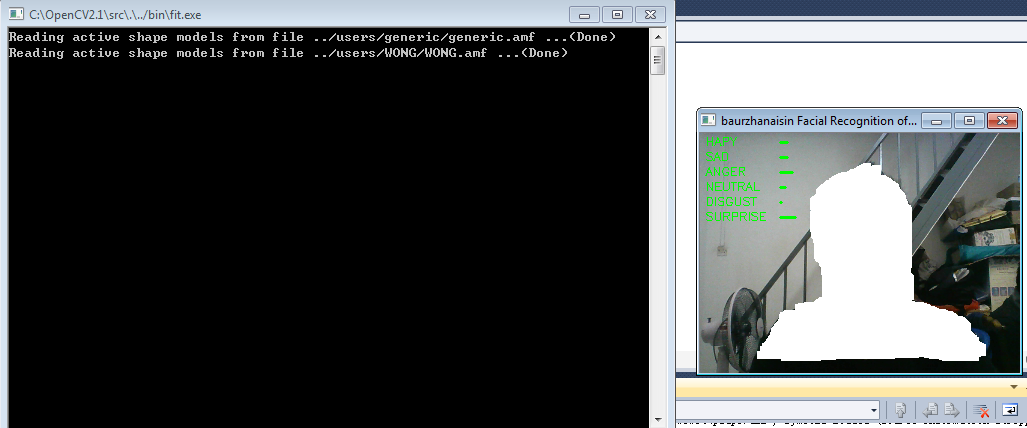

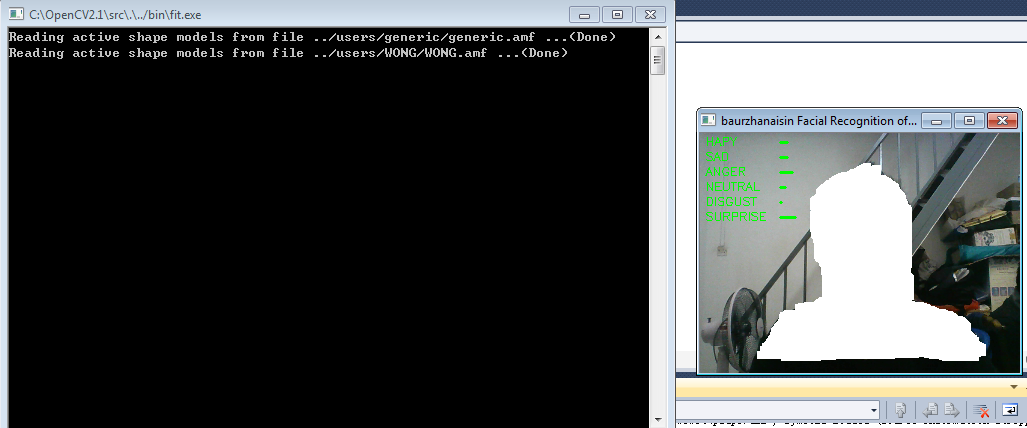

This application recognizes a person's emotion: happy, anger, sad, neutral, disgust, or surprise. For example, when a person smiles, the "happy" bar grows.

The images above show my current work, and my code is below. I want to add a percentage to each bar, or maybe show just the percentage instead of the bar. How can I do so?

I searched the Q&A but couldn't find anything related.

Thank you in advance.

#include <vector>

#include <string>

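#include <iostream>

#include <fstream> // ofstream, used in write_features

#include <sstream> // ostringstream, used in takePhoto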

#include <conio.h>

#include <direct.h>

#include "asmfitting.h"

#include "vjfacedetect.h"

#include "video_camera.h"

#include "F_C.h"

#include "util.h"

#include "scandir.h"

using namespace std;

#define N_SHAPES_FOR_FILTERING 3

#define MAX_FRAMES_UNDER_THRESHOLD 15

#define NORMALIZE_POSE_PARAMS 0

#define CAM_WIDTH 320

#define CAM_HEIGHT 240

#define FRAME_TO_START_DECISION 50

#define PRINT_TIME_TICKS 0

#define PRINT_FEATURES 0

#define PRINT_FEATURE_SCALES 0

#define UP 2490368

#define DOWN 2621440

#define RIGHT 2555904

#define LEFT 2424832

const char* WindowsName = "baurzhanaisin Facial Recognition of Emotions.";

const char* ProcessedName = "Results";

IplImage *tmpCimg1=0;

IplImage *tmpGimg1=0, *tmpGimg2=0;

IplImage *tmpSimg1 = 0, *tmpSimg2 = 0;

IplImage *tmpFimg1 = 0, *tmpFimg2 = 0;

IplImage *sobel1=0, *sobel2=0, *sobelF1=0, *sobelF2=0;

cv::Mat features, featureScales, expressions;

CvFont font;

int showTrackerGui = 1, showProcessedGui = 0, showRegionsOnGui = 0, showFeatures = 0, showShape = 0;

void setup_tracker(IplImage* sampleCimg)

{

if(showTrackerGui) cvNamedWindow(WindowsName,1);

if(showProcessedGui) cvNamedWindow(ProcessedName, 1);

tmpCimg1 = cvCreateImage(cvGetSize(sampleCimg), sampleCimg->depth, 3);

tmpGimg1 = cvCreateImage(cvGetSize(sampleCimg), sampleCimg->depth, 1);

tmpGimg2 = cvCreateImage(cvGetSize(sampleCimg), sampleCimg->depth, 1);

tmpSimg1 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_16S, 1);

tmpSimg2 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_16S, 1);

sobel1 = cvCreateImage(cvGetSize(sampleCimg), sampleCimg->depth, 1);

sobel2 = cvCreateImage(cvGetSize(sampleCimg), sampleCimg->depth, 1);

sobelF1 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_32F, 1);

sobelF2 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_32F, 1);

tmpFimg1 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_32F, 1);

tmpFimg2 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_32F, 1);

features = cv::Mat(1,N_FEATURES, CV_32FC1);

featureScales = cv::Mat(1,N_FEATURES, CV_32FC1);

featureScales.setTo(cv::Scalar(0)); // zero the accumulator without taking the address of a temporary CvMat

}

void exit_tracker() {

cvReleaseImage(&tmpCimg1);

cvReleaseImage(&tmpGimg1);

cvReleaseImage(&tmpGimg2);

cvReleaseImage(&tmpSimg1);

cvReleaseImage(&tmpSimg2);

cvReleaseImage(&tmpFimg1);

cvReleaseImage(&tmpFimg2);

cvReleaseImage(&sobel1);

cvReleaseImage(&sobel2);

cvReleaseImage(&sobelF1);

cvReleaseImage(&sobelF2);

cvDestroyAllWindows();

}

void extract_features_and_display(IplImage* img, asm_shape shape){

int iFeature = 0;

CvScalar red = cvScalar(0,0,255);

CvScalar green = cvScalar(0,255,0);

CvScalar blue = cvScalar(255,0,0);

CvScalar gray = cvScalar(150,150,150);

CvScalar lightgray = cvScalar(150,150,150);

CvScalar white = cvScalar(255,255,255);

cvCvtColor(img, tmpCimg1, CV_RGB2HSV);

cvSplit(tmpCimg1, 0, 0, tmpGimg1, 0);

cvSobel(tmpGimg1, tmpSimg1, 1, 0, 3);

cvSobel(tmpGimg1, tmpSimg2, 0, 1, 3);

cvConvertScaleAbs(tmpSimg1, sobel1);

cvConvertScaleAbs(tmpSimg2, sobel2);

cvConvertScale(sobel1, sobelF1, 1/255.);

cvConvertScale(sobel2, sobelF2, 1/255.);

int nPoints[1];

CvPoint **faceComponent;

faceComponent = (CvPoint **) cvAlloc (sizeof (CvPoint *));

faceComponent[0] = (CvPoint *) cvAlloc (sizeof (CvPoint) * 10);

for(int i = 0; i < shape.NPoints(); i++)

if(showRegionsOnGui)

cvCircle(img, cvPoint((int)shape[i].x, (int)shape[i].y), 0, lightgray);

CvPoint eyeBrowMiddle1 = cvPoint((int)(shape[55].x+shape[63].x)/2, (int) (shape[55].y+shape[63].y)/2);

CvPoint eyeBrowMiddle2 = cvPoint((int)(shape[69].x+shape[75].x)/2, (int)(shape[69].y+shape[75].y)/2);

if(showRegionsOnGui) {

cvLine(img, cvPoint((int)(shape[53].x+shape[65].x)/2, (int)(shape[53].y+shape[65].y)/2), eyeBrowMiddle1, red, 2);

cvLine(img, eyeBrowMiddle1, cvPoint((int)(shape[58].x+shape[60].x)/2, (int)(shape[58].y+shape[60].y)/2), red, 2);

cvLine(img, cvPoint((int)(shape[66].x+shape[78].x)/2, (int)(shape[66].y+shape[78].y)/2), eyeBrowMiddle2, red, 2);

cvLine(img, eyeBrowMiddle2, cvPoint((int)(shape[71].x+shape[73].x)/2, (int)(shape[71].y+shape[73].y)/2), red, 2);

}

CvPoint eyeMiddle1 = cvPoint((int)(shape[3].x+shape[9].x)/2, (int)(shape[3].y+shape[9].y)/2);

CvPoint eyeMiddle2 = cvPoint((int)(shape[29].x+shape[35].x)/2, (int)(shape[29].y+shape[35].y)/2);

if(showRegionsOnGui){

cvCircle(img, eyeMiddle1, 2, lightgray, 1);

cvCircle(img, eyeMiddle2, 2, lightgray, 1);

}

double eyeBrowDist1 = (cvSqrt((double)(eyeMiddle1.x-eyeBrowMiddle1.x)*(eyeMiddle1.x-eyeBrowMiddle1.x)+

(eyeMiddle1.y-eyeBrowMiddle1.y)*(eyeMiddle1.y-eyeBrowMiddle1.y))

/ shape.GetWidth());

double eyeBrowDist2 = (cvSqrt((double)(eyeMiddle2.x-eyeBrowMiddle2.x)*(eyeMiddle2.x-eyeBrowMiddle2.x)+

(eyeMiddle2.y-eyeBrowMiddle2.y)*(eyeMiddle2.y-eyeBrowMiddle2.y))

/ shape.GetWidth());

features.at<float>(0,iFeature) = (float)((eyeBrowDist1+eyeBrowDist2)/2);

++iFeature;

nPoints[0] = 4;

faceComponent[0][0] = cvPoint((int)(6*shape[63].x-shape[101].x)/5, (int)(6*shape[63].y-shape[101].y)/5);

faceComponent[0][1] = cvPoint((int)(6*shape[69].x-shape[105].x)/5, (int)(6*shape[69].y-shape[105].y)/5);

faceComponent[0][2] = cvPoint((int)(21*shape[69].x-shape[105].x)/20, (int)(21*shape[69].y-shape[105].y)/20);

faceComponent[0][3] = cvPoint((int)(21*shape[63].x-shape[101].x)/20, (int)(21*shape[63].y-shape[101].y)/20);

if(showRegionsOnGui)

cvPolyLine(img, faceComponent, nPoints, 1, 1, blue);

cvSetZero(tmpFimg1);

cvSetZero(tmpFimg2);

cvFillPoly(tmpFimg1, faceComponent, nPoints, 1, cvScalar(1,1,1));

cvMultiplyAcc(tmpFimg1, sobelF2, tmpFimg2);

CvScalar area = cvSum(tmpFimg1);

CvScalar sum = cvSum(tmpFimg2);

features.at<float>(0,iFeature) = (float)(sum.val[0]/area.val[0]);

++iFeature;

nPoints[0] = 4;

faceComponent[0][0] = cvPoint((int)(29*shape[59].x+shape[102].x)/30, (int)(29*shape[59].y+shape[102].y)/30);

faceComponent[0][1] = cvPoint((int)(29*shape[79].x+shape[104].x)/30, (int)(29*shape[79].y+shape[104].y)/30);

faceComponent[0][2] = cvPoint((int)(11*shape[79].x-shape[104].x)/10, (int)(11*shape[79].y-shape[104].y)/10);

faceComponent[0][3] = cvPoint((int)(11*shape[59].x-shape[102].x)/10, (int)(11*shape[59].y-shape[102].y)/10);

if(showRegionsOnGui)

cvPolyLine(img, faceComponent, nPoints, 1, 1, green);

cvSetZero(tmpFimg1);

cvSetZero(tmpFimg2);

cvFillPoly(tmpFimg1, faceComponent, nPoints, 1, cvScalar(1,1,1));

cvMultiplyAcc(tmpFimg1, sobelF1, tmpFimg2);

area = cvSum(tmpFimg1);

sum = cvSum(tmpFimg2);

features.at<float>(0,iFeature) = (float)(sum.val[0]/area.val[0]);

++iFeature;

nPoints[0] = 4;

faceComponent[0][0] = cvPoint((int)(shape[80].x+4*shape[100].x)/5, (int)(shape[80].y+4*shape[100].y)/5);

faceComponent[0][1] = cvPoint((int)(shape[111].x+9*shape[96].x)/10, (int)(shape[111].y+9*shape[96].y)/10);

faceComponent[0][2] = cvPoint((int)(9*shape[111].x+shape[96].x)/10, (int)((9*shape[111].y+shape[96].y)/10 + shape.GetWidth()/20));

faceComponent[0][3] = cvPoint((int)(4*shape[80].x+shape[100].x)/5, (int)(4*shape[80].y+shape[100].y)/5);

if(showRegionsOnGui)

cvPolyLine(img, faceComponent, nPoints, 1, 1, blue);

cvSetZero(tmpFimg1);

cvSetZero(tmpFimg2);

cvFillPoly(tmpFimg1, faceComponent, nPoints, 1, cvScalar(1,1,1));

cvMultiplyAcc(tmpFimg1, sobelF1, tmpFimg2);

cvMultiplyAcc(tmpFimg1, sobelF2, tmpFimg2);

area = cvSum(tmpFimg1);

sum = cvSum(tmpFimg2);

features.at<float>(0,iFeature) = (float)(sum.val[0]/area.val[0]);

++iFeature;

faceComponent[0][0] = cvPoint((int)(shape[88].x+4*shape[106].x)/5, (int)(shape[88].y+4*shape[106].y)/5);

faceComponent[0][1] = cvPoint((int)(shape[115].x+9*shape[110].x)/10, (int)(shape[115].y+9*shape[110].y)/10);

faceComponent[0][2] = cvPoint((int)(9*shape[115].x+shape[110].x)/10, (int)((9*shape[115].y+shape[110].y)/10 + shape.GetWidth()/20));

faceComponent[0][3] = cvPoint((int)(4*shape[88].x+shape[106].x)/5, (int)(4*shape[88].y+shape[106].y)/5);

if(showRegionsOnGui)

cvPolyLine(img, faceComponent, nPoints, 1, 1, blue);

cvSetZero(tmpFimg1);

cvSetZero(tmpFimg2);

cvFillPoly(tmpFimg1, faceComponent, nPoints, 1, cvScalar(1,1,1));

cvMultiplyAcc(tmpFimg1, sobelF1, tmpFimg2);

cvMultiplyAcc(tmpFimg1, sobelF2, tmpFimg2);

area = cvSum(tmpFimg1);

sum = cvSum(tmpFimg2);

features.at<float>(0,iFeature) = (float)(sum.val[0]/area.val[0]);

++iFeature;

CvPoint lipHeightL = cvPoint((int)shape[92].x, (int)shape[92].y);

CvPoint lipHeightH = cvPoint((int)shape[84].x, (int)shape[84].y);

if(showRegionsOnGui)

cvLine(img, lipHeightL, lipHeightH, red, 2);

double lipDistH = (cvSqrt((shape[92].x-shape[84].x)*(shape[92].x-shape[84].x)+

(shape[92].y-shape[84].y)*(shape[92].y-shape[84].y))

/ shape.GetWidth());

features.at<float>(0,iFeature) = (float)lipDistH;

++iFeature;

CvPoint lipR = cvPoint((int)shape[80].x, (int)shape[80].y);

CvPoint lipL = cvPoint((int)shape[88].x, (int)shape[88].y);

if(showRegionsOnGui)

cvLine(img, lipR, lipL, red, 2);

double lipDistW = (cvSqrt((shape[80].x-shape[88].x)*(shape[80].x-shape[88].x)+

(shape[80].y-shape[88].y)*(shape[80].y-shape[88].y))

/ shape.GetWidth());

features.at<float>(0,iFeature) = (float)lipDistW;

++iFeature;

cvFree(&faceComponent[0]); // release the point array

cvFree(&faceComponent); // release the outer pointer array as well

}

void initialize(IplImage* img, int iFrame)

{

if(iFrame >= 5) {

for(int iFeature = 0; iFeature < N_FEATURES; iFeature++){

featureScales.at<float>(0,iFeature) += features.at<float>(0,iFeature) / (FRAME_TO_START_DECISION-5);

}

}

if(showProcessedGui)

{

cvFlip(tmpGimg1, NULL, 1);

cvShowImage(ProcessedName, tmpGimg1);

}

if(showTrackerGui)

{

CvScalar expColor = cvScalar(0,0,0);

cvFlip(img, NULL, 1);

if(iFrame%5 != 0)

cvPutText(img, "Initializing", cvPoint(5, 12), &font, expColor);

}

}

void normalizeFeatures(IplImage* img)

{

cvFlip(img, NULL, 1);

for(int iFeature = 0; iFeature < N_FEATURES; iFeature++)

featureScales.at<float>(0,iFeature) = 1 / featureScales.at<float>(0,iFeature);

}

void track(IplImage* img)

{

for(int iFeature = 0; iFeature < N_FEATURES; iFeature++){

features.at<float>(0,iFeature) = features.at<float>(0,iFeature) * featureScales.at<float>(0,iFeature);

}

if(PRINT_FEATURES)

{

for(int i=0; i<N_FEATURES; i++)

printf("%3.2f ", features.at<float>(0,i));

printf("\n");

}

if(PRINT_FEATURE_SCALES)

{

for(int i=0; i<N_FEATURES; i++)

printf("%3.2f ", featureScales.at<float>(0,i));

printf("\n");

}

if(showProcessedGui)

{

cvFlip(sobelF1, NULL, 1);

cvShowImage(ProcessedName, sobelF1);

cvFlip(sobelF2, NULL, 1);

cvShowImage("ada", sobelF2);

}

if(showTrackerGui) {

CvScalar expColor = cvScalar(0,254,0);

cvFlip(img, NULL, 1);

int start=12, step=15, current;

current = start;

for(int i = 0; i < N_EXPRESSIONS; i++, current+=step)

{

cvPutText(img, EXP_NAMES[i], cvPoint(5, current), &font, expColor);

}

expressions = get_class_weights(features);

current = start - 3;

for(int i = 0; i < N_EXPRESSIONS; i++, current+=step)

{

cvLine(img, cvPoint(80, current), cvPoint((int)(80+expressions.at<double>(0,i)*50), current), expColor, 2);

}

current += step + step;

if(showFeatures == 1)

{

for(int i=0; i<N_FEATURES; i++)

{

current += step;

char buf[32]; // "%.2f" needs at least 5 bytes including the terminator; 4 overflowed

sprintf(buf, "%.2f", features.at<float>(0,i));

cvPutText(img, buf, cvPoint(5, current), &font, expColor);

}

}

}

}

int write_features(string filename, int j, int numFeats, IplImage* img)

{

CvScalar expColor = cvScalar(0,0,0);

cvFlip(img, NULL, 1);

if(j%100 == 0)

{

ofstream file(filename, ios::app);

if(!file.is_open()){

cout << "error opening file: " << filename << endl;

exit(1);

}

cvPutText(img, "Features Taken:", cvPoint(5, 12), &font, expColor);

for(int i=0; i<N_FEATURES; i++)

{

char buf[32]; // "%.2f" needs at least 5 bytes including the terminator; 4 overflowed

sprintf(buf, "%.2f", features.at<float>(0,i) * featureScales.at<float>(0,i));

printf("%3.2f, ", features.at<float>(0,i) * featureScales.at<float>(0,i));

file << buf << " ";

}

file << endl;

printf("\n");

cvShowImage("taken photo", img);

numFeats++;

file.close();

}

if(j%100 != 0)

{

cvPutText(img, "Current Expression: ", cvPoint(5, 12), &font, expColor);

cvPutText(img, EXP_NAMES[numFeats/N_SAMPLES], cvPoint(5, 27), &font, expColor);

cvPutText(img, "Features will be taken when the counter is 0.", cvPoint(5, 42), &font, expColor);

char buf[12];

sprintf(buf, "Counter: %2d", 100-(j%100));

cvPutText(img, buf, cvPoint(5, 57), &font, expColor);

}

return numFeats;

}

int select(int selection, int range)

{

int deneme = cvWaitKey();

if(deneme == UP)

{

selection = (selection+range-1)%range;

}

else if(deneme == DOWN)

{

selection = (selection+range+1)%range;

}

else if(deneme == 13)

{

selection += range;

}

else if(deneme == 27)

{

exit(0);

}

return selection;

}

int selectionMenu(IplImage* img, string title, vector<string> lines)

{

CvScalar expColor = cvScalar(0,0,0);

CvScalar mark = cvScalar(255,255,0);

int selection = 0, range = lines.size(), step = 15, current = 12;

IplImage* print = cvCreateImage( cvSize(img->width, img->height ), img->depth, img->nChannels );

do{

current=12;

cvCopy(img, print);

if(title != ""){

cvPutText(print, title.c_str(), cvPoint(5, current), &font, expColor);

current+=step;

}

cvRectangle(print, cvPoint(0, current-4+selection*step), cvPoint(319, current-7+selection*step), mark, 12);

for(int i=0 ; i<range; i++){

cvPutText(print, lines.at(i).c_str(), cvPoint(5, current), &font, expColor);

current += step;

}

cvShowImage(WindowsName, print);

selection = select(selection, range);

if(selection>=range){

return selection - range;

}

}while(1);

}

string getKey(string word, char key)

{

if(key == 27){

exit(1);

}

else if(key == 8 && word.size()>0)

{

word.pop_back();

}

else if(word.size()<10 && ((key>='a' && key<='z') || (key>='A' && key<='Z') || (key>='0' && key<='9' || (key=='_')))){

word.push_back(key);

}

return word;

}

bool fitImage(IplImage *image, char *pts_name, char* model_name, int n_iteration, bool show_result)

{

asmfitting fit_asm;

if(fit_asm.Read(model_name) == false)

exit(0);

int nFaces;

asm_shape *shapes = NULL, *detshapes = NULL;

bool flag = detect_all_faces(&detshapes, nFaces, image);

if(flag)

{

shapes = new asm_shape[nFaces];

for(int i = 0; i < nFaces; i++)

{

InitShapeFromDetBox(shapes[i], detshapes[i], fit_asm.GetMappingDetShape(), fit_asm.GetMeanFaceWidth());

}

}

else

{

return false;

}

fit_asm.Fitting2(shapes, nFaces, image, n_iteration);

for(int i = 0; i < nFaces; i++){

fit_asm.Draw(image, shapes[i]);

save_shape(shapes[i], pts_name);

}

if(showTrackerGui && show_result) {

cvNamedWindow("Fitting", 1);

cvShowImage("Fitting", image);

cvWaitKey(0);

cvReleaseImage(&image);

}

delete[] shapes;

free_shape_memeory(&detshapes);

return true;

}

bool takePhoto(int counter, bool printCounter, string folder)

{

CvScalar expColor = cvScalar(0,0,0);

while(1)

{

IplImage* image = read_from_camera();

cvFlip(image, NULL, 1);

char key = cvWaitKey(20);

if(key==32 || key==13)

{

std::ostringstream imageName, ptsName, xmlName;

imageName << folder << "/" << counter << ".jpg";

ptsName << folder << "/" << counter << ".pts";

xmlName << folder << "/" << counter << ".xml";

IplImage* temp = cvCreateImage( cvSize(image->width, image->height ), image->depth, image->nChannels );

cvCopy(image, temp);

if(fitImage(image, _strdup(ptsName.str().c_str()), "../users/generic/generic.amf", 30, false))

{

cvSaveImage(imageName.str().c_str(), temp);

string command = "python ../annotator/converter.py pts2xml " + ptsName.str() + " " + xmlName.str();

system(command.c_str());

cvShowImage("Photo", temp);

return true;

}

}

else if(key == 27)

{

cvDestroyWindow(WindowsName);

cvDestroyWindow("Photo");

return false;

}

cvPutText(image, "Press ENTER or SPACE to take photos.", cvPoint(5, 12), &font, expColor);

cvPutText(image, "To finish taking photos press ESCAPE.", cvPoint(5, 27), &font, expColor);

if(printCounter)

{

std::ostringstream counterText;

counterText << "Number of photos taken: " << counter;

cvPutText(image, counterText.str().c_str(), cvPoint(5, 42), &font, expColor);

}

cvShowImage(WindowsName, image);

}

}

void takePhotos(string folder)

{

int counter = 0;

while(takePhoto(counter, true, folder)){

counter++;

};

}

void fineTune(string imageFolder)

{

string command = "python ../annotator/annotator.py " + imageFolder;

system(command.c_str());

filelists conv = ScanNSortDirectory(imageFolder.c_str(), ".xml");

for(size_t i=0 ; i<conv.size() ; i++){

string ptsName = conv.at(i).substr(0, conv.at(i).size()-4) + ".pts";

command = "python ../annotator/converter.py xml2pts " + conv.at(i) + " " + ptsName;

system(command.c_str());

}

}

string createUser(IplImage* img)

{

CvScalar expColor = cvScalar(0,0,0);

CvScalar mark = cvScalar(255,255,0);

IplImage* print = cvCreateImage( cvSize(img->width, img->height ), img->depth, img->nChannels );

int step = 15, current = 12;

string userName;

char key;

do{

cvCopy(img, print);

cvPutText(print, "Enter name to create a new user.", cvPoint(5, current), &font, expColor);

cvPutText(print, userName.c_str(), cvPoint(5, current+step), &font, expColor);

cvShowImage(WindowsName, print);

key = cvWaitKey();

if(key != 13){

userName = getKey(userName, key);

}

else if(userName.size() > 0)

{

break;

}

}while(1);

cout << userName;

string userFolder = "../users/" + userName;

string imageFolder = userFolder + "/photos";

_mkdir(userFolder.c_str());

_mkdir(imageFolder.c_str());

takePhotos(imageFolder);

fineTune(imageFolder);

string command ="..\\bin\\build.exe -p 4 -l 5 " + imageFolder + " jpg pts ../cascades/haarcascade_frontalface_alt2.xml " + userFolder + "/" + userName;

cout << endl << command << endl;

system(command.c_str());

return userName;

}

string selectUser(IplImage* img){

vector<string> userList = directoriesOf("../users/");

userList.push_back("Create New User");

int selection = selectionMenu(img, "Select or Create User", userList);

return userList.at(selection);

}

string menu1(IplImage* img, string userName)

{

vector<string> files = ScanNSortDirectory(("../users/" + userName).c_str(), "txt");

vector<string> lines;

for(size_t i=0 ; i<files.size() && i<20 ; i++)

{

lines.push_back(files.at(i).substr(10+userName.size(),files.at(i).size()-14-userName.size()));

}

lines.push_back("Create New Expression Class");

files.push_back("Create New Expression Class");

int selection = selectionMenu(img, "Select or Create Expression Class", lines);

return files.at(selection);

}

string createClassMenu(IplImage* img, string userName){

CvScalar expColor = cvScalar(0,0,0);

CvScalar mark = cvScalar(255,255,0);

IplImage* print = cvCreateImage( cvSize(img->width, img->height ), img->depth, img->nChannels );

int step = 15, current = 12;

string filename;

char key;

do{

cvCopy(img, print);

cvPutText(print, "Enter filename to create a new file.", cvPoint(5, current), &font, expColor);

cvPutText(print, filename.c_str(), cvPoint(5, current+step), &font, expColor);

cvShowImage(WindowsName, print);

key = cvWaitKey();

if(key != 13){

filename = getKey(filename, key);

}

else if(filename.size() > 0){ // proceed only if the filename is not empty

break;

}

}while(1);

string numOfExprStr = "Number Of Expressions: ";

cvPutText(print, numOfExprStr.c_str(), cvPoint(5, current+step*2), &font, expColor);

cvShowImage(WindowsName, print);

do{

key = cvWaitKey();

}while(key<'2' || key>'9');

numOfExprStr.push_back(key);

int numOfExpr = key -'0';

cout << numOfExpr << endl;

string numOfSampStr = "Number Of Samples per Expression: ";

cvPutText(print, numOfExprStr.c_str(), cvPoint(5, current+step*2), &font, expColor);

cvPutText(print, numOfSampStr.c_str(), cvPoint(5, current+step*3), &font, expColor);

cvShowImage(WindowsName, print);

do{

key = cvWaitKey();

}while(key<'2' || key>'9');

numOfSampStr.push_back(key);

int numOfSamp = key -'0';

cout << numOfSamp << endl;

cvPutText(print, numOfExprStr.c_str(), cvPoint(5, current+step*2), &font, expColor);

cvPutText(print, numOfSampStr.c_str(), cvPoint(5, current+step*3), &font, expColor);

cvPutText(print, "Expression Names:", cvPoint(5, current+step*5), &font, expColor);

string *exprNames = new string[numOfExpr];

for(int i=0 ; i<numOfExpr ; i++){

do{

cvCopy(img, print);

cvPutText(print, "Enter filename to create a new file.", cvPoint(5, current), &font, expColor);

cvPutText(print, filename.c_str(), cvPoint(5, current+step), &font, expColor);

cvPutText(print, numOfExprStr.c_str(), cvPoint(5, current+step*2), &font, expColor);

cvPutText(print, numOfSampStr.c_str(), cvPoint(5, current+step*3), &font, expColor);

cvPutText(print, "Expression Names:", cvPoint(5, current+step*5), &font, expColor);

for(int j=0 ; j<numOfExpr ; j++){

cvPutText(print, exprNames[j].c_str(), cvPoint(5, current+(step*(6+j))), &font, expColor);

}

cvShowImage(WindowsName, print);

key = cvWaitKey();

if(key != 13)

{

exprNames[i] = getKey(exprNames[i], key);

}

else if(exprNames[i].size() > 0)

{

break;

}

}while(1);

cout << exprNames[i] << endl;

}

filename = "../users/" + userName + "/" + filename + ".txt";

config(filename, numOfExpr, numOfSamp, exprNames);

return filename;

}

int main(int argc, char *argv[])

{

asmfitting fit_asm;

char* model_name = "../users/generic/generic.amf";

char* cascade_name = "../cascades/haarcascade_frontalface_alt2.xml";

char* VIfilename = NULL;

char* class_name = NULL;

char* shape_output_filename = NULL;

char* pose_output_filename = NULL;

char* features_output_filename = NULL;

char* expressions_output_filename = NULL;

int use_camera = 1;

int image_or_video = -1;

int i;

int n_iteration = 30;

int maxComponents = 10;

for(i = 1; i < argc; i++)

{

if(argv[i][0] != '-') usage_fit();

if(++i >= argc) usage_fit(); // argv[argc] is NULL, so every option needs a value before it

switch(argv[i-1][1])

{

case 'm':

model_name = argv[i];

break;

case 's':

break;

case 'h':

cascade_name = argv[i];

break;

case 'i':

if(image_or_video >= 0)

{

fprintf(stderr, "only process image/video/camera once\n");

usage_fit();

}

VIfilename = argv[i];

image_or_video = 'i';

use_camera = 0;

break;

case 'v':

if(image_or_video >= 0)

{

fprintf(stderr, "only process image/video/camera once\n");

usage_fit();

}

VIfilename = argv[i];

image_or_video = 'v';

use_camera = 0;

break;

case 'c':

class_name = argv[i];

break;

case 'H':

usage_fit();

break;

case 'n':

n_iteration = atoi(argv[i]);

break;

case 'S':

shape_output_filename = argv[i];

break;

case 'P':

pose_output_filename = argv[i];

break;

case 'F':

features_output_filename = argv[i];

break;

case 'E':

expressions_output_filename = argv[i];

break;

case 'g':

showTrackerGui = atoi(argv[i]);

break;

case 'e':

showProcessedGui = atoi(argv[i]);

break;

case 'r':

showRegionsOnGui = atoi(argv[i]);

break;

case 'f':

showFeatures = atoi(argv[i]);

break;

case 't':

showShape = atoi(argv[i]);

break;

case 'x':

maxComponents = atoi(argv[i]);

break;

default:

fprintf(stderr, "unknown options\n");

usage_fit();

}

}

if(fit_asm.Read(model_name) == false)

return -1;

if(init_detect_cascade(cascade_name) == false)

return -1;

cvInitFont(&font, CV_FONT_HERSHEY_SIMPLEX, 0.4, 0.4, 0, 1, CV_AA);

if(image_or_video == 'i')

{

IplImage * image = cvLoadImage(VIfilename, 1);

if(image == 0)

{

fprintf(stderr, "Can not Open image %s\n", VIfilename);

exit(0);

}

if(shape_output_filename == NULL){

shape_output_filename = _strdup(VIfilename); // assign the outer variable instead of shadowing it; the copy leaves VIfilename intact

shape_output_filename[strlen(shape_output_filename)-3]='p';

shape_output_filename[strlen(shape_output_filename)-2]='t';

shape_output_filename[strlen(shape_output_filename)-1]='s';

}

fitImage(image, shape_output_filename, model_name, n_iteration, true);

}

else if(image_or_video == 'v')

{

int frame_count;

asm_shape shape, detshape;

bool flagFace = false, flagShape = false;

int countFramesUnderThreshold = 0;

IplImage* image;

int j, key;

FILE *fpShape, *fpPose, *fpFeatures, *fpExpressions;

frame_count = open_video(VIfilename);

if(frame_count == -1) return -1;

if(shape_output_filename != NULL)

{

fopen_s(&fpShape, shape_output_filename, "w");

if (fpShape == NULL)

{

fprintf(stderr, "Can't open output file %s!\n", shape_output_filename);

exit(1);

}

}

if(pose_output_filename != NULL)

{

fopen_s(&fpPose, pose_output_filename, "w");

if (fpPose == NULL)

{

fprintf(stderr, "Can't open output file %s!\n", pose_output_filename);

exit(1);

}

}

if(features_output_filename != NULL) {

fopen_s(&fpFeatures, features_output_filename, "w");

if (fpFeatures == NULL) {

fprintf(stderr, "Can't open output file %s!\n", features_output_filename);

exit(1);

}

}

if(expressions_output_filename != NULL) {

fopen_s(&fpExpressions, expressions_output_filename, "w");

if (fpExpressions == NULL) {

fprintf(stderr, "Can't open output file %s!\n", expressions_output_filename);

exit(1);

}

}

asm_shape shapes[N_SHAPES_FOR_FILTERING]; // Will be used for median filtering

asm_shape shapeCopy, shapeAligned;

for(j = 0; j < frame_count; j ++)

{

double t = (double)cvGetTickCount();

if(PRINT_TIME_TICKS)

printf("Tracking frame %04i: ", j);

image = read_from_video(j);

if(j == 0)

setup_tracker(image);

if(flagShape == false)

{

flagFace = detect_one_face(detshape, image);

if(flagFace)

{

InitShapeFromDetBox(shape, detshape, fit_asm.GetMappingDetShape(), fit_asm.GetMeanFaceWidth());

}

else goto show;

}

flagShape = fit_asm.ASMSeqSearch(shape, image, j, true, n_iteration);

shapeCopy = shape;

if(j==0)

for(int k=0; k < N_SHAPES_FOR_FILTERING; k++)

shapes[k] = shapeCopy;

else {

for(int k=0; k < N_SHAPES_FOR_FILTERING-1; k++)

shapes[k] = shapes[k+1];

shapes[N_SHAPES_FOR_FILTERING-1] = shapeCopy;

}

shapeCopy = get_weighted_mean(shapes, N_SHAPES_FOR_FILTERING);

if(flagShape && showShape){

fit_asm.Draw(image, shapeCopy);

}

if(!flagShape)

{

if(countFramesUnderThreshold == 0)

flagShape = false;

else

flagShape = true;

countFramesUnderThreshold = (countFramesUnderThreshold + 1) % MAX_FRAMES_UNDER_THRESHOLD;

}

extract_features_and_display(image, shapeCopy);

if(j < FRAME_TO_START_DECISION){

initialize(image, j);

}

else if(j == FRAME_TO_START_DECISION){

normalizeFeatures(image);

read_config_from_file(class_name);

if(expressions_output_filename != NULL){

fprintf(fpExpressions, "FrameNo");

for(i=0; i<N_EXPRESSIONS-1; i++){

fprintf(fpExpressions, "%11s", EXP_NAMES[i]);

}

fprintf(fpExpressions, "%11s\n", EXP_NAMES[i]);

}

}

else{

track(image);

if(features_output_filename != NULL) write_vector(features, fpFeatures);

if(expressions_output_filename != NULL) write_expressions(expressions, fpExpressions, j);

}

cout<<j<<endl;

if(flagShape && showShape){

cvFlip(image, NULL, 1);

fit_asm.Draw(image, shapeCopy);

cvFlip(image, NULL, 1);

}

cvShowImage(WindowsName, image);

show: key = cv::waitKey(10); if(key == 27) break;

t = ((double)cvGetTickCount() - t )/ (cvGetTickFrequency()*1000.);

if(PRINT_TIME_TICKS)

printf("Time spent: %.2f millisec\n", t);

}

if(shape_output_filename != NULL) fclose(fpShape);

if(pose_output_filename != NULL) fclose(fpPose);

if(features_output_filename != NULL) fclose(fpFeatures);

if(expressions_output_filename != NULL) fclose(fpExpressions);

close_video();

}

else if(use_camera)

{

if(open_camera(0, CAM_WIDTH, CAM_HEIGHT) == false)

return -1;

int numFeats = 0;

bool create = false;

IplImage* image;

if(CAM_WIDTH==640) image = cvLoadImage("../images/BackgroundLarge.png");

else image = cvLoadImage("../images/Background.png");

string userName = selectUser(image);

if(userName == "Create New User"){

userName = createUser(image);

}

model_name = _strdup(("../users/" + userName + "/" + userName + ".amf").c_str());

if(fit_asm.Read(model_name) == false)

return -1;

string filename = menu1(image, userName);

if(filename == "Create New Expression Class"){

create = true;

filename = createClassMenu(image, userName);

}

asm_shape shape, detshape;

bool flagFace = false, flagShape = false;

int countFramesUnderThreshold = 0;

int j = 0, key;

asm_shape shapes[N_SHAPES_FOR_FILTERING];

asm_shape shapeCopy, shapeAligned;

while(1)

{

image = read_from_camera();

if(j == 0)

setup_tracker(image);

if(flagShape == false)

{

flagFace = detect_one_face(detshape, image);

if(flagFace)

InitShapeFromDetBox(shape, detshape, fit_asm.GetMappingDetShape(), fit_asm.GetMeanFaceWidth());

else

goto show2;

}

flagShape = fit_asm.ASMSeqSearch(shape, image, j, true, n_iteration);

shapeCopy = shape;

if(j==0)

for(int k=0; k < N_SHAPES_FOR_FILTERING; k++)

shapes[k] = shapeCopy;

else {

for(int k=0; k < N_SHAPES_FOR_FILTERING-1; k++)

shapes[k] = shapes[k+1];

shapes[N_SHAPES_FOR_FILTERING-1] = shapeCopy;

}

shapeCopy = get_weighted_mean(shapes, N_SHAPES_FOR_FILTERING);

if(!flagShape)

{

if(countFramesUnderThreshold == 0)

flagShape = false;

else

flagShape = true;

countFramesUnderThreshold = (countFramesUnderThreshold + 1) % MAX_FRAMES_UNDER_THRESHOLD;

}

extract_features_and_display(image, shapeCopy);

if(j < FRAME_TO_START_DECISION) {initialize(image, j); }

else if(j == FRAME_TO_START_DECISION) {normalizeFeatures(image); if(!create) read_config_from_file(filename); }

else if(numFeats < N_POINTS && create) {numFeats = write_features(filename, j, numFeats, image); }

else if(numFeats == N_POINTS && create) {read_config_from_file(filename); numFeats++; }

else {track(image); }

if(flagShape && showShape){

cvFlip(image, NULL, 1);

fit_asm.Draw(image, shapeCopy);

cvFlip(image, NULL, 1);

}

cvShowImage(WindowsName, image);

show2:

key = cvWaitKey(20);

if(key == 27)

break;

else if(key == 'r')

{

showRegionsOnGui = -1*showRegionsOnGui + 1;

}

else if(key == 't')

{

showShape = -1*showShape + 1;

}

else if(key == 's')

{

for(int i=0; i<N_FEATURES; i++)

printf("%3.2f, ", features.at<float>(0,i));

printf("\n");

}

j++;

}

close_camera();

}

exit_tracker();

return 0;

}

| 11 | No.11 Revision |

This application recognizes a person's emotion: happy, anger, sad, neutral, disgust, or surprise. For example, when a person smiles, the bar for happy grows.

The image and code below show my current work. I want to add a percentage to each bar, or maybe show just the percentage instead of the bar. How can I do so?

I searched the Q&A but couldn't find anything related.

Thank you in advance.

#include <vector>

#include <string>

#include <iostream>

#include <conio.h>

#include <direct.h>

#include "asmfitting.h"

#include "vjfacedetect.h"

#include "video_camera.h"

#include "F_C.h"

#include "util.h"

#include "scandir.h"

using namespace std;

#define N_SHAPES_FOR_FILTERING 3

#define MAX_FRAMES_UNDER_THRESHOLD 15

#define NORMALIZE_POSE_PARAMS 0

#define CAM_WIDTH 320

#define CAM_HEIGHT 240

#define FRAME_TO_START_DECISION 50

#define PRINT_TIME_TICKS 0

#define PRINT_FEATURES 0

#define PRINT_FEATURE_SCALES 0

#define UP 2490368

#define DOWN 2621440

#define RIGHT 2555904

#define LEFT 2424832

const char* WindowsName = "baurzhanaisin Facial Recognition of Emotions.";

const char* ProcessedName = "Results";

IplImage *tmpCimg1=0;

IplImage *tmpGimg1=0, *tmpGimg2=0;

IplImage *tmpSimg1 = 0, *tmpSimg2 = 0;

IplImage *tmpFimg1 = 0, *tmpFimg2 = 0;

IplImage *sobel1=0, *sobel2=0, *sobelF1=0, *sobelF2=0;

cv::Mat features, featureScales, expressions;

CvFont font;

int showTrackerGui = 1, showProcessedGui = 0, showRegionsOnGui = 0, showFeatures = 0, showShape = 0;

void setup_tracker(IplImage* sampleCimg)

{

if(showTrackerGui) cvNamedWindow(WindowsName,1);

if(showProcessedGui) cvNamedWindow(ProcessedName, 1);

tmpCimg1 = cvCreateImage(cvGetSize(sampleCimg), sampleCimg->depth, 3);

tmpGimg1 = cvCreateImage(cvGetSize(sampleCimg), sampleCimg->depth, 1);

tmpGimg2 = cvCreateImage(cvGetSize(sampleCimg), sampleCimg->depth, 1);

tmpSimg1 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_16S, 1);

tmpSimg2 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_16S, 1);

sobel1 = cvCreateImage(cvGetSize(sampleCimg), sampleCimg->depth, 1);

sobel2 = cvCreateImage(cvGetSize(sampleCimg), sampleCimg->depth, 1);

sobelF1 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_32F, 1);

sobelF2 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_32F, 1);

tmpFimg1 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_32F, 1);

tmpFimg2 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_32F, 1);

features = cv::Mat(1,N_FEATURES, CV_32FC1);

featureScales = cv::Mat(1,N_FEATURES, CV_32FC1);

cvZero(&(CvMat)featureScales);

}

void exit_tracker() {

cvReleaseImage(&tmpCimg1);

cvReleaseImage(&tmpGimg1);

cvReleaseImage(&tmpGimg2);

cvReleaseImage(&tmpSimg1);

cvReleaseImage(&tmpSimg2);

cvReleaseImage(&tmpFimg1);

cvReleaseImage(&tmpFimg2);

cvReleaseImage(&sobel1);

cvReleaseImage(&sobel2);

cvReleaseImage(&sobelF1);

cvReleaseImage(&sobelF2);

cvDestroyAllWindows();

}

void extract_features_and_display(IplImage* img, asm_shape shape){

int iFeature = 0;

CvScalar red = cvScalar(0,0,255);

CvScalar green = cvScalar(0,255,0);

CvScalar blue = cvScalar(255,0,0);

CvScalar gray = cvScalar(150,150,150);

CvScalar lightgray = cvScalar(150,150,150);

CvScalar white = cvScalar(255,255,255);

cvCvtColor(img, tmpCimg1, CV_RGB2HSV);

cvSplit(tmpCimg1, 0, 0, tmpGimg1, 0);

cvSobel(tmpGimg1, tmpSimg1, 1, 0, 3);

cvSobel(tmpGimg1, tmpSimg2, 0, 1, 3);

cvConvertScaleAbs(tmpSimg1, sobel1);

cvConvertScaleAbs(tmpSimg2, sobel2);

cvConvertScale(sobel1, sobelF1, 1/255.);

cvConvertScale(sobel2, sobelF2, 1/255.);

int nPoints[1];

CvPoint **faceComponent;

faceComponent = (CvPoint **) cvAlloc (sizeof (CvPoint *));

faceComponent[0] = (CvPoint *) cvAlloc (sizeof (CvPoint) * 10);

for(int i = 0; i < shape.NPoints(); i++)

if(showRegionsOnGui)

cvCircle(img, cvPoint((int)shape[i].x, (int)shape[i].y), 0, lightgray);

CvPoint eyeBrowMiddle1 = cvPoint((int)(shape[55].x+shape[63].x)/2, (int) (shape[55].y+shape[63].y)/2);

CvPoint eyeBrowMiddle2 = cvPoint((int)(shape[69].x+shape[75].x)/2, (int)(shape[69].y+shape[75].y)/2);

if(showRegionsOnGui) {

cvLine(img, cvPoint((int)(shape[53].x+shape[65].x)/2, (int)(shape[53].y+shape[65].y)/2), eyeBrowMiddle1, red, 2);

cvLine(img, eyeBrowMiddle1, cvPoint((int)(shape[58].x+shape[60].x)/2, (int)(shape[58].y+shape[60].y)/2), red, 2);

cvLine(img, cvPoint((int)(shape[66].x+shape[78].x)/2, (int)(shape[66].y+shape[78].y)/2), eyeBrowMiddle2, red, 2);

cvLine(img, eyeBrowMiddle2, cvPoint((int)(shape[71].x+shape[73].x)/2, (int)(shape[71].y+shape[73].y)/2), red, 2);

}

CvPoint eyeMiddle1 = cvPoint((int)(shape[3].x+shape[9].x)/2, (int)(shape[3].y+shape[9].y)/2);

CvPoint eyeMiddle2 = cvPoint((int)(shape[29].x+shape[35].x)/2, (int)(shape[29].y+shape[35].y)/2);

if(showRegionsOnGui){

cvCircle(img, eyeMiddle1, 2, lightgray, 1);

cvCircle(img, eyeMiddle2, 2, lightgray, 1);

}

double eyeBrowDist1 = (cvSqrt((double)(eyeMiddle1.x-eyeBrowMiddle1.x)*(eyeMiddle1.x-eyeBrowMiddle1.x)+

(eyeMiddle1.y-eyeBrowMiddle1.y)*(eyeMiddle1.y-eyeBrowMiddle1.y))

/ shape.GetWidth());

double eyeBrowDist2 = (cvSqrt((double)(eyeMiddle2.x-eyeBrowMiddle2.x)*(eyeMiddle2.x-eyeBrowMiddle2.x)+

(eyeMiddle2.y-eyeBrowMiddle2.y)*(eyeMiddle2.y-eyeBrowMiddle2.y))

/ shape.GetWidth());

features.at<float>(0,iFeature) = (float)((eyeBrowDist1+eyeBrowDist2)/2);

++iFeature;

nPoints[0] = 4;

faceComponent[0][0] = cvPoint((int)(6*shape[63].x-shape[101].x)/5, (int)(6*shape[63].y-shape[101].y)/5);

faceComponent[0][1] = cvPoint((int)(6*shape[69].x-shape[105].x)/5, (int)(6*shape[69].y-shape[105].y)/5);

faceComponent[0][2] = cvPoint((int)(21*shape[69].x-shape[105].x)/20, (int)(21*shape[69].y-shape[105].y)/20);

faceComponent[0][3] = cvPoint((int)(21*shape[63].x-shape[101].x)/20, (int)(21*shape[63].y-shape[101].y)/20);

if(showRegionsOnGui)

cvPolyLine(img, faceComponent, nPoints, 1, 1, blue);

cvSetZero(tmpFimg1);

cvSetZero(tmpFimg2);

cvFillPoly(tmpFimg1, faceComponent, nPoints, 1, cvScalar(1,1,1));

cvMultiplyAcc(tmpFimg1, sobelF2, tmpFimg2);

CvScalar area = cvSum(tmpFimg1);

CvScalar sum = cvSum(tmpFimg2);

features.at<float>(0,iFeature) = (float)(sum.val[0]/area.val[0]);

++iFeature;

nPoints[0] = 4;

faceComponent[0][0] = cvPoint((int)(29*shape[59].x+shape[102].x)/30, (int)(29*shape[59].y+shape[102].y)/30);

faceComponent[0][1] = cvPoint((int)(29*shape[79].x+shape[104].x)/30, (int)(29*shape[79].y+shape[104].y)/30);

faceComponent[0][2] = cvPoint((int)(11*shape[79].x-shape[104].x)/10, (int)(11*shape[79].y-shape[104].y)/10);

faceComponent[0][3] = cvPoint((int)(11*shape[59].x-shape[102].x)/10, (int)(11*shape[59].y-shape[102].y)/10);

if(showRegionsOnGui)

cvPolyLine(img, faceComponent, nPoints, 1, 1, green);

cvSetZero(tmpFimg1);

cvSetZero(tmpFimg2);

cvFillPoly(tmpFimg1, faceComponent, nPoints, 1, cvScalar(1,1,1));

cvMultiplyAcc(tmpFimg1, sobelF1, tmpFimg2);

area = cvSum(tmpFimg1);

sum = cvSum(tmpFimg2);

features.at<float>(0,iFeature) = (float)(sum.val[0]/area.val[0]);

++iFeature;

nPoints[0] = 4;

faceComponent[0][0] = cvPoint((int)(shape[80].x+4*shape[100].x)/5, (int)(shape[80].y+4*shape[100].y)/5);

faceComponent[0][1] = cvPoint((int)(shape[111].x+9*shape[96].x)/10, (int)(shape[111].y+9*shape[96].y)/10);

faceComponent[0][2] = cvPoint((int)(9*shape[111].x+shape[96].x)/10, (int)((9*shape[111].y+shape[96].y)/10 + shape.GetWidth()/20));

faceComponent[0][3] = cvPoint((int)(4*shape[80].x+shape[100].x)/5, (int)(4*shape[80].y+shape[100].y)/5);

if(showRegionsOnGui)

cvPolyLine(img, faceComponent, nPoints, 1, 1, blue);

cvSetZero(tmpFimg1);

cvSetZero(tmpFimg2);

cvFillPoly(tmpFimg1, faceComponent, nPoints, 1, cvScalar(1,1,1));

cvMultiplyAcc(tmpFimg1, sobelF1, tmpFimg2);

cvMultiplyAcc(tmpFimg1, sobelF2, tmpFimg2);

area = cvSum(tmpFimg1);

sum = cvSum(tmpFimg2);

features.at<float>(0,iFeature) = (float)(sum.val[0]/area.val[0]);

++iFeature;

faceComponent[0][0] = cvPoint((int)(shape[88].x+4*shape[106].x)/5, (int)(shape[88].y+4*shape[106].y)/5);

faceComponent[0][1] = cvPoint((int)(shape[115].x+9*shape[110].x)/10, (int)(shape[115].y+9*shape[110].y)/10);

faceComponent[0][2] = cvPoint((int)(9*shape[115].x+shape[110].x)/10, (int)((9*shape[115].y+shape[110].y)/10 + shape.GetWidth()/20));

faceComponent[0][3] = cvPoint((int)(4*shape[88].x+shape[106].x)/5, (int)(4*shape[88].y+shape[106].y)/5);

if(showRegionsOnGui)

cvPolyLine(img, faceComponent, nPoints, 1, 1, blue);

cvSetZero(tmpFimg1);

cvSetZero(tmpFimg2);

cvFillPoly(tmpFimg1, faceComponent, nPoints, 1, cvScalar(1,1,1));

cvMultiplyAcc(tmpFimg1, sobelF1, tmpFimg2);

cvMultiplyAcc(tmpFimg1, sobelF2, tmpFimg2);

area = cvSum(tmpFimg1);

sum = cvSum(tmpFimg2);

features.at<float>(0,iFeature) = (float)(sum.val[0]/area.val[0]);

++iFeature;

CvPoint lipHeightL = cvPoint((int)shape[92].x, (int)shape[92].y);

CvPoint lipHeightH = cvPoint((int)shape[84].x, (int)shape[84].y);

if(showRegionsOnGui)

cvLine(img, lipHeightL, lipHeightH, red, 2);

double lipDistH = (cvSqrt((shape[92].x-shape[84].x)*(shape[92].x-shape[84].x)+

(shape[92].y-shape[84].y)*(shape[92].y-shape[84].y))

/ shape.GetWidth());

features.at<float>(0,iFeature) = (float)lipDistH;

++iFeature;

CvPoint lipR = cvPoint((int)shape[80].x, (int)shape[80].y);

CvPoint lipL = cvPoint((int)shape[88].x, (int)shape[88].y);

if(showRegionsOnGui)

cvLine(img, lipR, lipL, red, 2);

double lipDistW = (cvSqrt((shape[80].x-shape[88].x)*(shape[80].x-shape[88].x)+

(shape[80].y-shape[88].y)*(shape[80].y-shape[88].y))

/ shape.GetWidth());

features.at<float>(0,iFeature) = (float)lipDistW;

++iFeature;

cvFree(faceComponent);

}

void initialize(IplImage* img, int iFrame)

{

if(iFrame >= 5) {

for(int iFeature = 0; iFeature < N_FEATURES; iFeature++){

featureScales.at<float>(0,iFeature) += features.at<float>(0,iFeature) / (FRAME_TO_START_DECISION-5);

}

}

if(showProcessedGui)

{

cvFlip(tmpGimg1, NULL, 1);

cvShowImage(ProcessedName, tmpGimg1);

}

if(showTrackerGui)

{

CvScalar expColor = cvScalar(0,0,0);

cvFlip(img, NULL, 1);

if(iFrame%5 != 0)

cvPutText(img, "Initializing", cvPoint(5, 12), &font, expColor);

}

}

void normalizeFeatures(IplImage* img)

{

cvFlip(img, NULL, 1);

for(int iFeature = 0; iFeature < N_FEATURES; iFeature++)

featureScales.at<float>(0,iFeature) = 1 / featureScales.at<float>(0,iFeature);

}

void track(IplImage* img)

{

for(int iFeature = 0; iFeature < N_FEATURES; iFeature++){

features.at<float>(0,iFeature) = features.at<float>(0,iFeature) * featureScales.at<float>(0,iFeature);

}

if(PRINT_FEATURES)

{

for(int i=0; i<N_FEATURES; i++)

printf("%3.2f ", features.at<float>(0,i));

printf("\n");

}

if(PRINT_FEATURE_SCALES)

{

for(int i=0; i<N_FEATURES; i++)

printf("%3.2f ", featureScales.at<float>(0,i));

printf("\n");

}

if(showProcessedGui)

{

cvFlip(sobelF1, NULL, 1);

cvShowImage(ProcessedName, sobelF1);

cvFlip(sobelF2, NULL, 1);

cvShowImage("ada", sobelF2);

}

if(showTrackerGui) {

CvScalar expColor = cvScalar(0,254,0);

cvFlip(img, NULL, 1);

int start=12, step=15, current;

current = start;

for(int i = 0; i < N_EXPRESSIONS; i++, current+=step)

{

cvPutText(img, EXP_NAMES[i], cvPoint(5, current), &font, expColor);

}

expressions = get_class_weights(features);

current = start - 3;

for(int i = 0; i < N_EXPRESSIONS; i++, current+=step)

{

cvLine(img, cvPoint(80, current), cvPoint((int)(80+expressions.at<double>(0,i)*50), current), expColor, 2);

}

current += step + step;

if(showFeatures == 1)

{

for(int i=0; i<N_FEATURES; i++)

{

current += step;

char buf[16]; // "%.2f" writes at least 5 bytes including the terminator, so 4 is too small

sprintf(buf, "%.2f", features.at<float>(0,i));

cvPutText(img, buf, cvPoint(5, current), &font, expColor);

}

}

}

}

int write_features(string filename, int j, int numFeats, IplImage* img)

{

CvScalar expColor = cvScalar(0,0,0);

cvFlip(img, NULL, 1);

if(j%100 == 0)

{

ofstream file(filename, ios::app);

if(!file.is_open()){

cout << "error opening file: " << filename << endl;

exit(1);

}

cvPutText(img, "Features Taken:", cvPoint(5, 12), &font, expColor);

for(int i=0; i<N_FEATURES; i++)

{

char buf[16]; // "%.2f" writes at least 5 bytes including the terminator, so 4 is too small

sprintf(buf, "%.2f", features.at<float>(0,i) * featureScales.at<float>(0,i));

printf("%3.2f, ", features.at<float>(0,i) * featureScales.at<float>(0,i));

file << buf << " ";

}

file << endl;

printf("\n");

cvShowImage("taken photo", img);

numFeats++;

file.close();

}

if(j%100 != 0)

{

cvPutText(img, "Current Expression: ", cvPoint(5, 12), &font, expColor);

cvPutText(img, EXP_NAMES[numFeats/N_SAMPLES], cvPoint(5, 27), &font, expColor);

cvPutText(img, "Features will be taken when the counter is 0.", cvPoint(5, 42), &font, expColor);

char buf[12];

sprintf(buf, "Counter: %2d", 100-(j%100));

cvPutText(img, buf, cvPoint(5, 57), &font, expColor);

}

return numFeats;

}

int select(int selection, int range)

{

int deneme = cvWaitKey();

if(deneme == UP)

{

selection = (selection+range-1)%range;

}

else if(deneme == DOWN)

{

selection = (selection+range+1)%range;

}

else if(deneme == 13)

{

selection += range;

}

else if(deneme == 27)

{

exit(0);

}

return selection;

}

int selectionMenu(IplImage* img, string title, vector<string> lines)

{

CvScalar expColor = cvScalar(0,0,0);

CvScalar mark = cvScalar(255,255,0);

int selection = 0, range = lines.size(), step = 15, current = 12;

IplImage* print = cvCreateImage( cvSize(img->width, img->height ), img->depth, img->nChannels );

do{

current=12;

cvCopy(img, print);

if(title != ""){

cvPutText(print, title.c_str(), cvPoint(5, current), &font, expColor);

current+=step;

}

cvRectangle(print, cvPoint(0, current-4+selection*step), cvPoint(319, current-7+selection*step), mark, 12);

for(int i=0 ; i<range; i++){

cvPutText(print, lines.at(i).c_str(), cvPoint(5, current), &font, expColor);

current += step;

}

cvShowImage(WindowsName, print);

selection = select(selection, range);

if(selection>=range){

return selection - range;

}

}while(1);

}

string getKey(string word, char key)

{

if(key == 27){

exit(1);

}

else if(key == 8 && word.size()>0)

{

word.pop_back();

}

else if(word.size()<10 && ((key>='a' && key<='z') || (key>='A' && key<='Z') || (key>='0' && key<='9' || (key=='_')))){

word.push_back(key);

}

return word;

}

bool fitImage(IplImage *image, char *pts_name, char* model_name, int n_iteration, bool show_result)

{

asmfitting fit_asm;

if(fit_asm.Read(model_name) == false)

exit(0);

int nFaces;

asm_shape *shapes = NULL, *detshapes = NULL;

bool flag = detect_all_faces(&detshapes, nFaces, image);

if(flag)

{

shapes = new asm_shape[nFaces];

for(int i = 0; i < nFaces; i++)

{

InitShapeFromDetBox(shapes[i], detshapes[i], fit_asm.GetMappingDetShape(), fit_asm.GetMeanFaceWidth());

}

}

else

{

return false;

}

fit_asm.Fitting2(shapes, nFaces, image, n_iteration);

for(int i = 0; i < nFaces; i++){

fit_asm.Draw(image, shapes[i]);

save_shape(shapes[i], pts_name);

}

if(showTrackerGui && show_result) {

cvNamedWindow("Fitting", 1);

cvShowImage("Fitting", image);

cvWaitKey(0);

cvReleaseImage(&image);

}

delete[] shapes;

free_shape_memeory(&detshapes);

return true;

}

bool takePhoto(int counter, bool printCounter, string folder)

{

CvScalar expColor = cvScalar(0,0,0);

while(1)

{

IplImage* image = read_from_camera();

cvFlip(image, NULL, 1);

char key = cvWaitKey(20);

if(key==32 || key==13)

{

std::ostringstream imageName, ptsName, xmlName;

imageName << folder << "/" << counter << ".jpg";

ptsName << folder << "/" << counter << ".pts";

xmlName << folder << "/" << counter << ".xml";

IplImage* temp = cvCreateImage( cvSize(image->width, image->height ), image->depth, image->nChannels );

cvCopy(image, temp);

if(fitImage(image, _strdup(ptsName.str().c_str()), "../users/generic/generic.amf", 30, false))

{

cvSaveImage(imageName.str().c_str(), temp);

string command = "python ../annotator/converter.py pts2xml " + ptsName.str() + " " + xmlName.str();

system(command.c_str());

cvShowImage("Photo", temp);

return true;

}

}

else if(key == 27)

{

cvDestroyWindow(WindowsName);

cvDestroyWindow("Photo");

return false;

}

cvPutText(image, "Press ENTER or SPACE to take photos.", cvPoint(5, 12), &font, expColor);

cvPutText(image, "To finish taking photos press ESCAPE.", cvPoint(5, 27), &font, expColor);

if(printCounter)

{

std::ostringstream counterText;

counterText << "Number of photos taken: " << counter;

cvPutText(image, counterText.str().c_str(), cvPoint(5, 42), &font, expColor);

}

cvShowImage(WindowsName, image);

}

}

void takePhotos(string folder)

{

int counter = 0;

while(takePhoto(counter, true, folder)){

counter++;

};

}

void fineTune(string imageFolder)

{

string command = "python ../annotator/annotator.py " + imageFolder;

system(command.c_str());

filelists conv = ScanNSortDirectory(imageFolder.c_str(), ".xml");

for(size_t i=0 ; i<conv.size() ; i++){

string ptsName = conv.at(i).substr(0, conv.at(i).size()-4) + ".pts";

command = "python ../annotator/converter.py xml2pts " + conv.at(i) + " " + ptsName;

system(command.c_str());

}

}

string createUser(IplImage* img)

{

CvScalar expColor = cvScalar(0,0,0);

CvScalar mark = cvScalar(255,255,0);

IplImage* print = cvCreateImage( cvSize(img->width, img->height ), img->depth, img->nChannels );

int step = 15, current = 12;

string userName;

char key;

do{

cvCopy(img, print);

cvPutText(print, "Enter name to create a new user.", cvPoint(5, current), &font, expColor);

cvPutText(print, userName.c_str(), cvPoint(5, current+step), &font, expColor);

cvShowImage(WindowsName, print);

key = cvWaitKey();

if(key != 13){

userName = getKey(userName, key);

}

else if(userName.size() > 0)

{

break;

}

}while(1);

cout << userName;

string userFolder = "../users/" + userName;

string imageFolder = userFolder + "/photos";

_mkdir(userFolder.c_str());

_mkdir(imageFolder.c_str());

takePhotos(imageFolder);

fineTune(imageFolder);

string command ="..\\bin\\build.exe -p 4 -l 5 " + imageFolder + " jpg pts ../cascades/haarcascade_frontalface_alt2.xml " + userFolder + "/" + userName;

cout << endl << command << endl;

system(command.c_str());

return userName;

}

string selectUser(IplImage* img){

vector<string> userList = directoriesOf("../users/");

userList.push_back("Create New User");

int selection = selectionMenu(img, "Select or Create User", userList);

return userList.at(selection);

}

string menu1(IplImage* img, string userName)

{

vector<string> files = ScanNSortDirectory(("../users/" + userName).c_str(), "txt");

vector<string> lines;

for(size_t i=0 ; i<files.size() && i<20 ; i++)

{

lines.push_back(files.at(i).substr(10+userName.size(),files.at(i).size()-14-userName.size()));

}

lines.push_back("Create New Expression Class");

files.push_back("Create New Expression Class");

int selection = selectionMenu(img, "Select or Create Expression Class", lines);

return files.at(selection);

}

string createClassMenu(IplImage* img, string userName){

CvScalar expColor = cvScalar(0,0,0);

CvScalar mark = cvScalar(255,255,0);

IplImage* print = cvCreateImage( cvSize(img->width, img->height ), img->depth, img->nChannels );

int step = 15, current = 12;

string filename;

char key;

do{

cvCopy(img, print);

cvPutText(print, "Enter filename to create a new file.", cvPoint(5, current), &font, expColor);

cvPutText(print, filename.c_str(), cvPoint(5, current+step), &font, expColor);

cvShowImage(WindowsName, print);

key = cvWaitKey();

if(key != 13){

filename = getKey(filename, key);

}

else if(filename.size() > 0){ // proceed only if filename is not empty

break;

}

}while(1);

string numOfExprStr = "Number Of Expressions: ";

cvPutText(print, numOfExprStr.c_str(), cvPoint(5, current+step*2), &font, expColor);

cvShowImage(WindowsName, print);

do{

key = cvWaitKey();

}while(key<'2' || key>'9');

numOfExprStr.push_back(key);

int numOfExpr = key -'0';

cout << numOfExpr << endl;

string numOfSampStr = "Number Of Samples per Expression: ";

cvPutText(print, numOfExprStr.c_str(), cvPoint(5, current+step*2), &font, expColor);

cvPutText(print, numOfSampStr.c_str(), cvPoint(5, current+step*3), &font, expColor);

cvShowImage(WindowsName, print);

do{

key = cvWaitKey();

}while(key<'2' || key>'9');

numOfSampStr.push_back(key);

int numOfSamp = key -'0';

cout << numOfSamp << endl;

cvPutText(print, numOfExprStr.c_str(), cvPoint(5, current+step*2), &font, expColor);

cvPutText(print, numOfSampStr.c_str(), cvPoint(5, current+step*3), &font, expColor);

cvPutText(print, "Expression Names:", cvPoint(5, current+step*5), &font, expColor);

string *exprNames = new string[numOfExpr];

for(int i=0 ; i<numOfExpr ; i++){

do{

cvCopy(img, print);

cvPutText(print, "Enter filename to create a new file.", cvPoint(5, current), &font, expColor);

cvPutText(print, filename.c_str(), cvPoint(5, current+step), &font, expColor);

cvPutText(print, numOfExprStr.c_str(), cvPoint(5, current+step*2), &font, expColor);

cvPutText(print, numOfSampStr.c_str(), cvPoint(5, current+step*3), &font, expColor);

cvPutText(print, "Expression Names:", cvPoint(5, current+step*5), &font, expColor);

for(int j=0 ; j<numOfExpr ; j++){

cvPutText(print, exprNames[j].c_str(), cvPoint(5, current+(step*(6+j))), &font, expColor);

}

cvShowImage(WindowsName, print);

key = cvWaitKey();

if(key != 13)

{

exprNames[i] = getKey(exprNames[i], key);

}

else if(exprNames[i].size() > 0)

{

break;

}

}while(1);

cout << exprNames[i] << endl;

}

filename = "../users/" + userName + "/" + filename + ".txt";

config(filename, numOfExpr, numOfSamp, exprNames);

return filename;

}

int main(int argc, char *argv[])

{

asmfitting fit_asm;

char* model_name = "../users/generic/generic.amf";

char* cascade_name = "../cascades/haarcascade_frontalface_alt2.xml";

char* VIfilename = NULL;

char* class_name = NULL;

char* shape_output_filename = NULL;

char* pose_output_filename = NULL;

char* features_output_filename = NULL;

char* expressions_output_filename = NULL;

int use_camera = 1;

int image_or_video = -1;

int i;

int n_iteration = 30;

int maxComponents = 10;

for(i = 1; i < argc; i++)

{

if(argv[i][0] != '-') usage_fit();

if(++i >= argc) usage_fit(); // every option needs a value, and argv[argc] is not a valid argument

switch(argv[i-1][1])

{

case 'm':

model_name = argv[i];

break;

case 's':

break;

case 'h':

cascade_name = argv[i];

break;

case 'i':

if(image_or_video >= 0)

{

fprintf(stderr, "only process image/video/camera once\n");

usage_fit();

}

VIfilename = argv[i];

image_or_video = 'i';

use_camera = 0;

break;

case 'v':

if(image_or_video >= 0)

{

fprintf(stderr, "only process image/video/camera once\n");

usage_fit();

}

VIfilename = argv[i];

image_or_video = 'v';

use_camera = 0;

break;

case 'c':

class_name = argv[i];

break;

case 'H':

usage_fit();

break;

case 'n':

n_iteration = atoi(argv[i]);

break;

case 'S':

shape_output_filename = argv[i];

break;

case 'P':

pose_output_filename = argv[i];

break;

case 'F':

features_output_filename = argv[i];

break;

case 'E':

expressions_output_filename = argv[i];

break;

case 'g':

showTrackerGui = atoi(argv[i]);

break;

case 'e':

showProcessedGui = atoi(argv[i]);

break;

case 'r':

showRegionsOnGui = atoi(argv[i]);

break;

case 'f':

showFeatures = atoi(argv[i]);

break;

case 't':

showShape = atoi(argv[i]);

break;

case 'x':

maxComponents = atoi(argv[i]);

break;

default:

fprintf(stderr, "unknown options\n");

usage_fit();

}

}

if(fit_asm.Read(model_name) == false)

return -1;

if(init_detect_cascade(cascade_name) == false)

return -1;

cvInitFont(&font, CV_FONT_HERSHEY_SIMPLEX, 0.4, 0.4, 0, 1, CV_AA);

if(image_or_video == 'i')

{

IplImage * image = cvLoadImage(VIfilename, 1);

if(image == 0)

{

fprintf(stderr, "Can not Open image %s\n", VIfilename);

exit(0);

}

if(shape_output_filename == NULL){

shape_output_filename = VIfilename; // assign the outer variable instead of shadowing it

shape_output_filename[strlen(shape_output_filename)-3]='p';

shape_output_filename[strlen(shape_output_filename)-2]='t';

shape_output_filename[strlen(shape_output_filename)-1]='s';

}

fitImage(image, shape_output_filename, model_name, n_iteration, true);

}

else if(image_or_video == 'v')

{

int frame_count;

asm_shape shape, detshape;

bool flagFace = false, flagShape = false;

int countFramesUnderThreshold = 0;

IplImage* image;

int j, key;

FILE *fpShape, *fpPose, *fpFeatures, *fpExpressions;

frame_count = open_video(VIfilename);

if(frame_count == -1) return -1;

if(shape_output_filename != NULL)

{

fopen_s(&fpShape, shape_output_filename, "w");

if (fpShape == NULL)

{

fprintf(stderr, "Can't open output file %s!\n", shape_output_filename);

exit(1);

}

}

if(pose_output_filename != NULL)

{

fopen_s(&fpPose, pose_output_filename, "w");

if (fpPose == NULL)

{

fprintf(stderr, "Can't open output file %s!\n", pose_output_filename);

exit(1);

}

}

if(features_output_filename != NULL) {

fopen_s(&fpFeatures, features_output_filename, "w");

if (fpFeatures == NULL) {

fprintf(stderr, "Can't open output file %s!\n", features_output_filename);

exit(1);

}

}

if(expressions_output_filename != NULL) {

fopen_s(&fpExpressions, expressions_output_filename, "w");

if (fpExpressions == NULL) {

fprintf(stderr, "Can't open output file %s!\n", expressions_output_filename);

exit(1);

}

}

asm_shape shapes[N_SHAPES_FOR_FILTERING]; // Will be used for median filtering

asm_shape shapeCopy, shapeAligned;

for(j = 0; j < frame_count; j ++)

{

double t = (double)cvGetTickCount();

if(PRINT_TIME_TICKS)

printf("Tracking frame %04i: ", j);

image = read_from_video(j);

if(j == 0)

setup_tracker(image);

if(flagShape == false)

{

flagFace = detect_one_face(detshape, image);

if(flagFace)

{

InitShapeFromDetBox(shape, detshape, fit_asm.GetMappingDetShape(), fit_asm.GetMeanFaceWidth());

}

else goto show;

}

flagShape = fit_asm.ASMSeqSearch(shape, image, j, true, n_iteration);

shapeCopy = shape;

if(j==0)

for(int k=0; k < N_SHAPES_FOR_FILTERING; k++)

shapes[k] = shapeCopy;

else {

for(int k=0; k < N_SHAPES_FOR_FILTERING-1; k++)

shapes[k] = shapes[k+1];

shapes[N_SHAPES_FOR_FILTERING-1] = shapeCopy;

}

shapeCopy = get_weighted_mean(shapes, N_SHAPES_FOR_FILTERING);

if(flagShape && showShape){

fit_asm.Draw(image, shapeCopy);

}

if(!flagShape)

{

if(countFramesUnderThreshold == 0)

flagShape = false;

else

flagShape = true;

countFramesUnderThreshold = (countFramesUnderThreshold + 1) % MAX_FRAMES_UNDER_THRESHOLD;

}

extract_features_and_display(image, shapeCopy);

if(j < FRAME_TO_START_DECISION){

initialize(image, j);

}

else if(j == FRAME_TO_START_DECISION){

normalizeFeatures(image);

read_config_from_file(class_name);

if(expressions_output_filename != NULL){

fprintf(fpExpressions, "FrameNo");

for(i=0; i<N_EXPRESSIONS-1; i++){

fprintf(fpExpressions, "%11s", EXP_NAMES[i]);

}

fprintf(fpExpressions, "%11s\n", EXP_NAMES[i]);

}

}

else{

track(image);

if(features_output_filename != NULL) write_vector(features, fpFeatures);

if(expressions_output_filename != NULL) write_expressions(expressions, fpExpressions, j);

}

cout<<j<<endl;

if(flagShape && showShape){

cvFlip(image, NULL, 1);

fit_asm.Draw(image, shapeCopy);

cvFlip(image, NULL, 1);

}

cvShowImage(WindowsName, image);

show: key = cv::waitKey(10); if(key == 27) break;

t = ((double)cvGetTickCount() - t )/ (cvGetTickFrequency()*1000.);

if(PRINT_TIME_TICKS)

printf("Time spent: %.2f millisec\n", t);

}

if(shape_output_filename != NULL) fclose(fpShape);

if(pose_output_filename != NULL) fclose(fpPose);

if(features_output_filename != NULL) fclose(fpFeatures);

if(expressions_output_filename != NULL) fclose(fpExpressions);

close_video();

}

else if(use_camera)

{

if(open_camera(0, CAM_WIDTH, CAM_HEIGHT) == false)

return -1;

int numFeats = 0;

boolean create = false;

IplImage* image;

if(CAM_WIDTH==640) image = cvLoadImage("../images/BackgroundLarge.png");

else image = cvLoadImage("../images/Background.png");

string userName = selectUser(image);

if(userName == "Create New User"){

userName = createUser(image);

}

model_name = _strdup(("../users/" + userName + "/" + userName + ".amf").c_str());

if(fit_asm.Read(model_name) == false)

return -1;

string filename = menu1(image, userName);

if(filename == "Create New Expression Class"){

create = true;

filename = createClassMenu(image, userName);

}

asm_shape shape, detshape;

bool flagFace = false, flagShape = false;

int countFramesUnderThreshold = 0;

int j = 0, key;

asm_shape shapes[N_SHAPES_FOR_FILTERING];

asm_shape shapeCopy, shapeAligned;

while(1)

{

image = read_from_camera();

if(j == 0)

setup_tracker(image);

if(flagShape == false)

{

flagFace = detect_one_face(detshape, image);

if(flagFace)

InitShapeFromDetBox(shape, detshape, fit_asm.GetMappingDetShape(), fit_asm.GetMeanFaceWidth());

else

goto show2;

}

flagShape = fit_asm.ASMSeqSearch(shape, image, j, true, n_iteration);

shapeCopy = shape;

if(j==0)

for(int k=0; k < N_SHAPES_FOR_FILTERING; k++)

shapes[k] = shapeCopy;

else {

for(int k=0; k < N_SHAPES_FOR_FILTERING-1; k++)

shapes[k] = shapes[k+1];

shapes[N_SHAPES_FOR_FILTERING-1] = shapeCopy;

}

shapeCopy = get_weighted_mean(shapes, N_SHAPES_FOR_FILTERING);

if(!flagShape)

{

if(countFramesUnderThreshold == 0)

flagShape = false;

else

flagShape = true;

countFramesUnderThreshold = (countFramesUnderThreshold + 1) % MAX_FRAMES_UNDER_THRESHOLD;

}

extract_features_and_display(image, shapeCopy);

if(j < FRAME_TO_START_DECISION) {initialize(image, j); }

else if(j == FRAME_TO_START_DECISION) {normalizeFeatures(image); if(!create) read_config_from_file(filename); }

else if(numFeats < N_POINTS && create) {numFeats = write_features(filename, j, numFeats, image); }

else if(numFeats == N_POINTS && create) {read_config_from_file(filename); numFeats++; }

else {track(image); }

if(flagShape && showShape){

cvFlip(image, NULL, 1);

fit_asm.Draw(image, shapeCopy);

cvFlip(image, NULL, 1);

}

cvShowImage(WindowsName, image);

show2:

key = cvWaitKey(20);

if(key == 27)

break;

else if(key == 'r')

{

showRegionsOnGui = -1*showRegionsOnGui + 1;

}

else if(key == 't')

{

showShape = -1*showShape + 1;

}

else if(key == 's')

{

for(int i=0; i<N_FEATURES; i++)

printf("%3.2f, ", features.at<float>(0,i));

printf("\n");

}

j++;

}

close_camera();

}

exit_tracker();

return 0;

}
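One possible way to get a percentage next to (or instead of) each bar is to turn every class weight into a short text label and draw it with the same `cvPutText` call the code already uses for the expression names. The sketch below is only an illustration: `formatPercent` is a hypothetical helper name, not part of the project, and it assumes that the values returned by `get_class_weights` lie between 0 and 1, which the bar length `expressions.at<double>(0,i)*50` suggests but the code does not confirm. Values outside that range are clamped.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdio>
#include <string>

// Hypothetical helper: converts a class weight into a label such as "42%".
// Assumption: weights are meant to lie in [0, 1]; anything outside is clamped.
std::string formatPercent(double weight)
{
    if (std::isnan(weight)) weight = 0.0;                    // treat NaN as 0%
    double clamped = std::min(1.0, std::max(0.0, weight));   // keep within [0, 1]
    long percent = std::lround(clamped * 100.0);             // round to nearest integer
    char buf[8];                                             // "100%" plus terminator fits easily
    std::snprintf(buf, sizeof(buf), "%ld%%", percent);       // "%%" prints a literal percent sign
    return std::string(buf);
}
```

Inside the second loop of `track`, next to the existing `cvLine` call, the label could then be drawn with something like `cvPutText(img, formatPercent(expressions.at<double>(0,i)).c_str(), cvPoint(135, current + 3), &font, expColor);`. The x offset of 135 is a guess that places the text just right of the longest bar (80 + 50 pixels). To show only the percentage, the `cvLine` call can be dropped and the label moved to x = 80.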

| 12 | No.12 Revision |

This application can recognize a person's emotion: happy, anger, sad, neutral, disgust, or surprise. For example, when a person smiles, the bar for happy increases.

The image below shows my current work and code, but I want to add a percentage to each bar, or maybe show just the percentage instead of the bar. How can I do so?

I searched the Q&A, but I couldn't find anything related.

Thank you in advance.
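
To make the request concrete, here is a minimal sketch of the label text being asked for. It assumes each emotion score is a float in the range 0 to 1; the posted code does not show where the bars are drawn or how the scores are scaled, so that range is an assumption. The helper name `percent_label` is hypothetical and not part of the posted code.

```cpp
#include <algorithm>
#include <string>

// Hypothetical helper (not in the posted code): builds a label such as
// "happy: 73%" from an emotion name and a score ASSUMED to lie in [0, 1].
std::string percent_label(const std::string& name, float score)
{
    // Clamp so that out-of-range scores still produce a sensible label.
    float clamped = std::min(1.0f, std::max(0.0f, score));

    // Round to the nearest whole percent.
    int percent = static_cast<int>(clamped * 100.0f + 0.5f);

    return name + ": " + std::to_string(percent) + "%";
}
```

The resulting string could then be drawn next to (or instead of) each bar with the OpenCV C API call `cvPutText(image, label.c_str(), cvPoint(x, y), &font, color)`, after `font` has been initialized with `cvInitFont`. The position `x, y` and the place in the code where the bars are rendered are not visible in the question, so those would need to be filled in from the actual drawing routine.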

#include <vector>

#include <string>

#include <iostream>

#include <conio.h>

#include <direct.h>

#include "asmfitting.h"

#include "vjfacedetect.h"

#include "video_camera.h"

#include "F_C.h"

#include "util.h"

#include "scandir.h"

using namespace std;

#define N_SHAPES_FOR_FILTERING 3

#define MAX_FRAMES_UNDER_THRESHOLD 15

#define NORMALIZE_POSE_PARAMS 0

#define CAM_WIDTH 320

#define CAM_HEIGHT 240

#define FRAME_TO_START_DECISION 50

#define PRINT_TIME_TICKS 0

#define PRINT_FEATURES 0

#define PRINT_FEATURE_SCALES 0

#define UP 2490368

#define DOWN 2621440

#define RIGHT 2555904

#define LEFT 2424832

const char* WindowsName = "baurzhanaisin Facial Recognition of Emotions.";

const char* ProcessedName = "Results";

IplImage *tmpCimg1=0;

IplImage *tmpGimg1=0, *tmpGimg2=0;

IplImage *tmpSimg1 = 0, *tmpSimg2 = 0;

IplImage *tmpFimg1 = 0, *tmpFimg2 = 0;

IplImage *sobel1=0, *sobel2=0, *sobelF1=0, *sobelF2=0;

cv::Mat features, featureScales, expressions;

CvFont font;

int showTrackerGui = 1, showProcessedGui = 0, showRegionsOnGui = 0, showFeatures = 0, showShape = 0;

void setup_tracker(IplImage* sampleCimg)

{

if(showTrackerGui) cvNamedWindow(WindowsName,1);

if(showProcessedGui) cvNamedWindow(ProcessedName, 1);

tmpCimg1 = cvCreateImage(cvGetSize(sampleCimg), sampleCimg->depth, 3);

tmpGimg1 = cvCreateImage(cvGetSize(sampleCimg), sampleCimg->depth, 1);

tmpGimg2 = cvCreateImage(cvGetSize(sampleCimg), sampleCimg->depth, 1);

tmpSimg1 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_16S, 1);

tmpSimg2 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_16S, 1);

sobel1 = cvCreateImage(cvGetSize(sampleCimg), sampleCimg->depth, 1);

sobel2 = cvCreateImage(cvGetSize(sampleCimg), sampleCimg->depth, 1);

sobelF1 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_32F, 1);

sobelF2 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_32F, 1);

tmpFimg1 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_32F, 1);

tmpFimg2 = cvCreateImage(cvGetSize(sampleCimg), IPL_DEPTH_32F, 1);

features = cv::Mat(1,N_FEATURES, CV_32FC1);

featureScales = cv::Mat(1,N_FEATURES, CV_32FC1);

featureScales.setTo(cv::Scalar(0)); // zero the scales without taking the address of a temporary CvMat

}

void exit_tracker() {

cvReleaseImage(&tmpCimg1);

cvReleaseImage(&tmpGimg1);

cvReleaseImage(&tmpGimg2);

cvReleaseImage(&tmpSimg1);

cvReleaseImage(&tmpSimg2);

cvReleaseImage(&tmpFimg1);

cvReleaseImage(&tmpFimg2);

cvReleaseImage(&sobel1);

cvReleaseImage(&sobel2);

cvReleaseImage(&sobelF1);

cvReleaseImage(&sobelF2);

cvDestroyAllWindows();

}

void extract_features_and_display(IplImage* img, asm_shape shape){

int iFeature = 0;

CvScalar red = cvScalar(0,0,255);

CvScalar green = cvScalar(0,255,0);

CvScalar blue = cvScalar(255,0,0);

CvScalar gray = cvScalar(150,150,150);

CvScalar lightgray = cvScalar(150,150,150);

CvScalar white = cvScalar(255,255,255);

cvCvtColor(img, tmpCimg1, CV_RGB2HSV);

cvSplit(tmpCimg1, 0, 0, tmpGimg1, 0);

cvSobel(tmpGimg1, tmpSimg1, 1, 0, 3);

cvSobel(tmpGimg1, tmpSimg2, 0, 1, 3);

cvConvertScaleAbs(tmpSimg1, sobel1);

cvConvertScaleAbs(tmpSimg2, sobel2);

cvConvertScale(sobel1, sobelF1, 1/255.);

cvConvertScale(sobel2, sobelF2, 1/255.);

int nPoints[1];

CvPoint **faceComponent;

faceComponent = (CvPoint **) cvAlloc (sizeof (CvPoint *));

faceComponent[0] = (CvPoint *) cvAlloc (sizeof (CvPoint) * 10);

for(int i = 0; i < shape.NPoints(); i++)

if(showRegionsOnGui)

cvCircle(img, cvPoint((int)shape[i].x, (int)shape[i].y), 0, lightgray);

CvPoint eyeBrowMiddle1 = cvPoint((int)(shape[55].x+shape[63].x)/2, (int) (shape[55].y+shape[63].y)/2);

CvPoint eyeBrowMiddle2 = cvPoint((int)(shape[69].x+shape[75].x)/2, (int)(shape[69].y+shape[75].y)/2);

if(showRegionsOnGui) {

cvLine(img, cvPoint((int)(shape[53].x+shape[65].x)/2, (int)(shape[53].y+shape[65].y)/2), eyeBrowMiddle1, red, 2);

cvLine(img, eyeBrowMiddle1, cvPoint((int)(shape[58].x+shape[60].x)/2, (int)(shape[58].y+shape[60].y)/2), red, 2);

cvLine(img, cvPoint((int)(shape[66].x+shape[78].x)/2, (int)(shape[66].y+shape[78].y)/2), eyeBrowMiddle2, red, 2);

cvLine(img, eyeBrowMiddle2, cvPoint((int)(shape[71].x+shape[73].x)/2, (int)(shape[71].y+shape[73].y)/2), red, 2);

}

CvPoint eyeMiddle1 = cvPoint((int)(shape[3].x+shape[9].x)/2, (int)(shape[3].y+shape[9].y)/2);

CvPoint eyeMiddle2 = cvPoint((int)(shape[29].x+shape[35].x)/2, (int)(shape[29].y+shape[35].y)/2);

if(showRegionsOnGui){

cvCircle(img, eyeMiddle1, 2, lightgray, 1);

cvCircle(img, eyeMiddle2, 2, lightgray, 1);

}

double eyeBrowDist1 = (cvSqrt((double)(eyeMiddle1.x-eyeBrowMiddle1.x)*(eyeMiddle1.x-eyeBrowMiddle1.x)+

(eyeMiddle1.y-eyeBrowMiddle1.y)*(eyeMiddle1.y-eyeBrowMiddle1.y))

/ shape.GetWidth());

double eyeBrowDist2 = (cvSqrt((double)(eyeMiddle2.x-eyeBrowMiddle2.x)*(eyeMiddle2.x-eyeBrowMiddle2.x)+

(eyeMiddle2.y-eyeBrowMiddle2.y)*(eyeMiddle2.y-eyeBrowMiddle2.y))

/ shape.GetWidth());