Hey,

I am new to OpenCV and I'm working on an image recognition/matching iPhone app. The aim is to take a picture, match it against a set of stored pictures, and find the correct one.

To save computation time, I wanted to store the descriptors of my images in a database, so I wrote a few small classes to handle that. But when I looked at the database, every entry was exactly the same. I don't think this is a fault in my code: I compared the cv::Mat descriptor output for all images and it looks exactly equal.

I know that my image recognition works quite well. But how can that work without different descriptors?

I am using ORB for both feature detection and descriptor extraction.

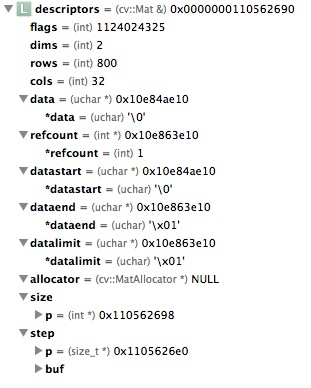

In the picture above you can see the output for one of my images. Which of these values are important for the BFMatcher or the FlannBasedMatcher when comparing images?

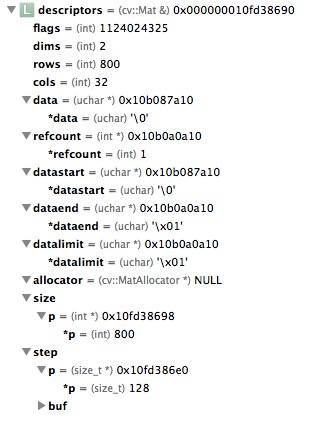

Here's an output of a different image:

As you can see its exactly the same.

So how can the matcher work with two exactly identical descriptors?
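To rule out that the two matrices only look alike in the debugger preview, I could compare them row by row. As far as I understand, ORB descriptors are binary strings (I'm assuming 32 bytes per keypoint here) and are usually compared by Hamming distance, so a distance of 0 would mean two rows are truly identical. Below is a minimal sketch in plain C++ without OpenCV; `DescriptorRow` and `hammingDistance` are hypothetical names of my own, not OpenCV API:

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One descriptor row as raw bytes (assumption: 32 bytes per ORB keypoint).
using DescriptorRow = std::vector<std::uint8_t>;

// Hypothetical helper, not an OpenCV function: counts the bits in which
// two equally long binary descriptors differ (their Hamming distance).
int hammingDistance(const DescriptorRow& a, const DescriptorRow& b) {
    assert(a.size() == b.size());
    int distance = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        // XOR leaves a 1 wherever the two bytes disagree; count those bits.
        const unsigned diff = static_cast<unsigned>(a[i] ^ b[i]);
        distance += static_cast<int>(std::bitset<8>(diff).count());
    }
    return distance;
}
```

Copying each row of the uchar descriptor matrix (before any conversion to CV_32F) into a `DescriptorRow` and calling `hammingDistance` on rows from two different images should show whether the stored data is really the same.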

Here's some sample code:

    - (cv::Mat)getDescriptorForImage:(cv::Mat)image andKeypoints:(std::vector<cv::KeyPoint>)keypoints {
        cv::Mat descriptors;
        cv::OrbDescriptorExtractor extractor;

        // Compute one descriptor row per keypoint.
        extractor.compute(image, keypoints, descriptors);

        if (descriptors.empty()) {
            cvError(0, "MatchFinder", "1st descriptor empty", __FILE__, __LINE__);
        }

        // Convert to 32-bit float if the descriptors are not already in that format.
        if (descriptors.type() != CV_32F) {
            descriptors.convertTo(descriptors, CV_32F);
        }

        return descriptors;
    }