I am working on a project that requires composing full 360-degree view images. To produce an output image with no visible seam, I adopted the graph-cut approach from the paper "Graphcut Textures: Image and Video Synthesis Using Graph Cuts", which is based on the max-flow/min-cut algorithm. I used the CPU implementation by Kolmogorov and Boykov, described in the paper "An Experimental Comparison of Min-Cut/Max-Flow Algorithms for Energy Minimization in Vision", to find the min-cut along which to stitch the images, and it gives a relatively good result.

However, I want a faster max-flow/min-cut solver. That is when I found a CUDA implementation described in "CudaCuts: Fast Graph Cuts on the GPU". I successfully ran the image segmentation examples from their project page and reproduced their results. However, when I applied it to my problem, it did not work properly. The only difference between the two problems is the way I construct the graph for the max-flow/min-cut solver. I constructed the graph as suggested in "Graphcut Textures: Image and Video Synthesis Using Graph Cuts": the terminal weights (t-links) are infinite for nodes that must belong to the source (or sink) and 0 otherwise, and the neighbor weights (n-links) follow the cost function in the paper. That graph representation worked well with the Boykov implementation on the CPU, but it did not work with the CUDA implementation by Vibhav Vineet and Narayanan.
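To make the graph construction concrete, here is a minimal sketch in plain Python (not the CUDA code) of the weights described above. It uses the matching cost from "Graphcut Textures", M(s, t, A, B) = |A(s) - B(s)| + |A(t) - B(t)|, for the n-links. Everything else is an assumption for illustration: the function names are hypothetical, the patches are grayscale nested lists covering the same overlap region, the leftmost column is forced to the source and the rightmost to the sink, and "infinite" is replaced by a large finite integer `LARGE`.

```python
# Hypothetical helpers; not part of CudaCuts or the Boykov-Kolmogorov library.
LARGE = 10**6  # assumption: finite stand-in for an "infinite" t-link capacity


def seam_cost(a, b, s, t):
    """Matching cost M(s, t, A, B) between adjacent pixels s and t."""
    (ys, xs), (yt, xt) = s, t
    return abs(a[ys][xs] - b[ys][xs]) + abs(a[yt][xt] - b[yt][xt])


def build_overlap_graph(a, b):
    """Return (t_source, t_sink, h_links, v_links) for the overlap of a and b.

    a and b are grayscale patches of identical size (the overlap region).
    Assumption: column 0 must come from A (source) and the last column
    must come from B (sink); all other t-links are 0.
    """
    h, w = len(a), len(a[0])
    t_source = [[LARGE if x == 0 else 0 for x in range(w)] for _ in range(h)]
    t_sink = [[LARGE if x == w - 1 else 0 for x in range(w)] for _ in range(h)]
    # h_links[y][x] is the n-link between (y, x) and (y, x + 1).
    h_links = [[seam_cost(a, b, (y, x), (y, x + 1)) for x in range(w - 1)]
               for y in range(h)]
    # v_links[y][x] is the n-link between (y, x) and (y + 1, x).
    v_links = [[seam_cost(a, b, (y, x), (y + 1, x)) for x in range(w)]
               for y in range(h - 1)]
    return t_source, t_sink, h_links, v_links
```

Calling `build_overlap_graph(a, b)` on two small patches gives four arrays that can be inspected by hand before they are handed to any solver, which makes it easier to see whether a mismatch comes from the weights or from how a particular solver interprets them.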

I am confused, since the CUDA implementation worked well for the image segmentation problem, which suggests that the core algorithm (max-flow/min-cut) is correct. Therefore, I guess I am doing something wrong in the graph construction. Could anyone who has experimented with this CUDA implementation give me a hint? Am I misunderstanding the meaning of dataTerm (terminal weights/t-links) and smoothTerm (neighbor weights/n-links) in their code?
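One way to separate "wrong graph" from "wrong use of the solver" is to cross-check a tiny grid against an independent reference. The sketch below is a plain Edmonds-Karp max-flow in Python. It is neither the Boykov-Kolmogorov algorithm nor the push-relabel scheme used on the GPU, just a simple stand-in that should give the same cut value on small inputs. The function name and the array layout (per-pixel t-links plus horizontal and vertical n-links on a 4-connected grid) are assumptions for illustration.

```python
from collections import deque


def min_cut_labels(t_source, t_sink, h_links, v_links):
    """Return (flow, labels); labels[y][x] is 0 on the source side, 1 otherwise."""
    h, w = len(t_source), len(t_source[0])
    src, snk = h * w, h * w + 1
    cap = [dict() for _ in range(h * w + 2)]  # residual capacities per node

    def add_edge(u, v, c_uv, c_vu):
        cap[u][v] = cap[u].get(v, 0) + c_uv
        cap[v][u] = cap[v].get(u, 0) + c_vu

    for y in range(h):
        for x in range(w):
            p = y * w + x
            add_edge(src, p, t_source[y][x], 0)   # t-link to the source
            add_edge(p, snk, t_sink[y][x], 0)     # t-link to the sink
            if x + 1 < w:                         # undirected horizontal n-link
                add_edge(p, p + 1, h_links[y][x], h_links[y][x])
            if y + 1 < h:                         # undirected vertical n-link
                add_edge(p, p + w, v_links[y][x], v_links[y][x])

    def bfs():
        """Breadth-first search over edges with remaining capacity."""
        parent = {src: None}
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        return parent

    flow = 0
    while True:
        parent = bfs()
        if snk not in parent:
            break  # no augmenting path left; parent holds the source side
        # Find the bottleneck capacity along the path, then push it.
        path, v = [], snk
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        bottleneck = min(cap[u][v] for u, v in path)
        for u, v in path:
            cap[u][v] -= bottleneck
            cap[v][u] += bottleneck
        flow += bottleneck

    labels = [[0 if (y * w + x) in parent else 1 for x in range(w)]
              for y in range(h)]
    return flow, labels
```

If the CPU library and this reference agree on a small grid while the GPU result differs for the same weights, the difference is more likely in how the weights are passed to the GPU code (for example integer ranges or array layout) than in the cost function itself.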

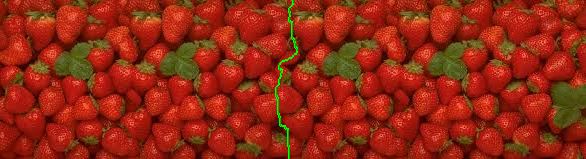

I take a copy of it and lay it over the original to make a bigger image without a visible seam. I got the following result.


The green line depicts the min-cut that Boykov's implementation produces.

