Hi

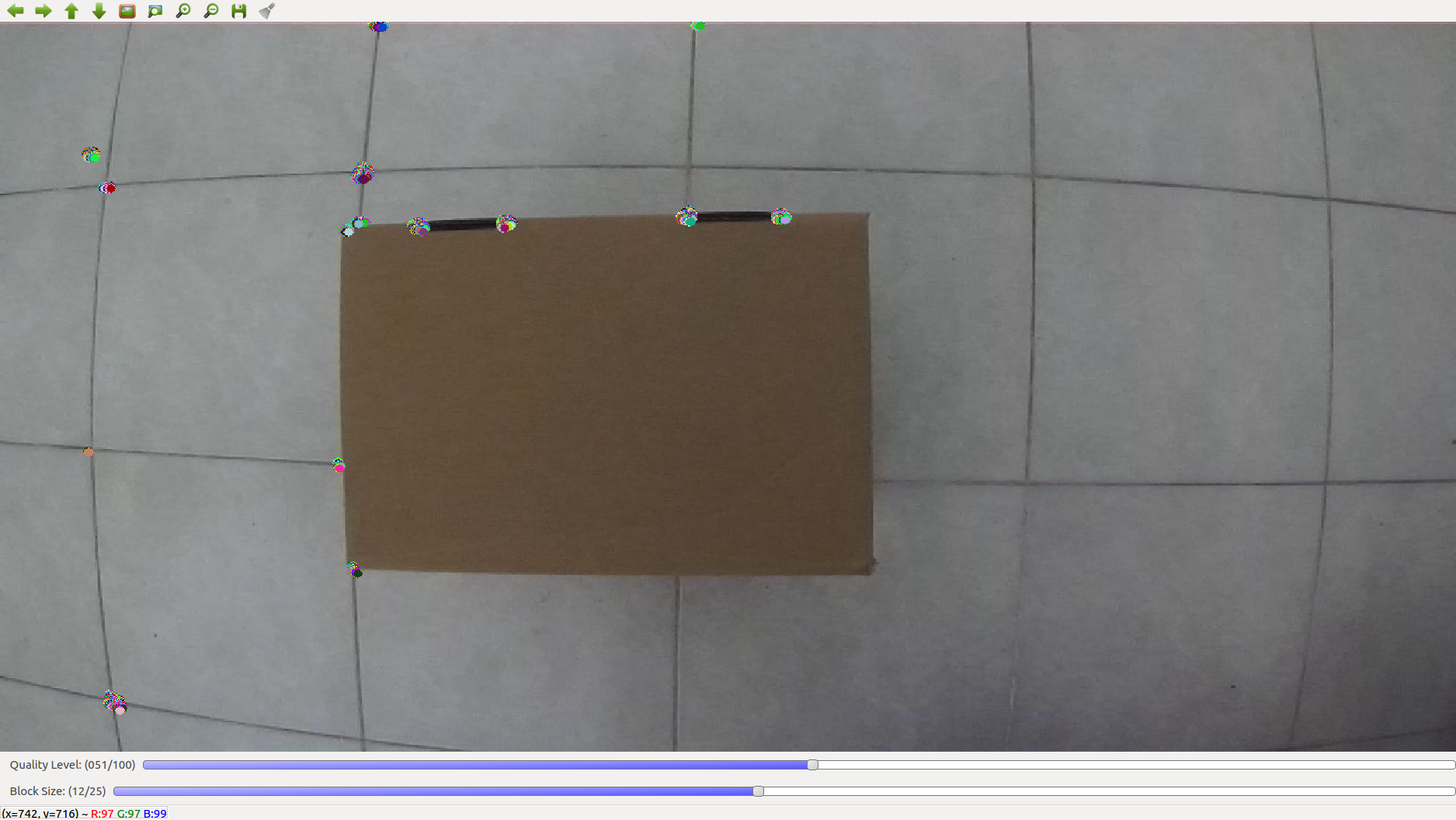

I have code that extracts 2D coordinates based on prior knowledge of the object, using e.g. Harris corners and the contour of the object. I'm using these features because the objects are textureless, so ORB, SIFT, or SURF are not going to work. My goal is to get the 2D correspondences for the points of my 3D CAD model and use them in solvePnPRansac to track the object and get its 6D pose in real time.

I wrote code for Harris corner detection and for contour detection. They are in two different C++ source files. Here is the C++ code for Harris corner detection:

    void CornerDetection::imageCB(const sensor_msgs::ImageConstPtr& msg)
    {
        if(blockSize_harris == 0)
            blockSize_harris = 1;

        cv::Mat img, img_gray, myHarris_dst;
        cv_bridge::CvImagePtr cvPtr;
        try
        {
            cvPtr = cv_bridge::toCvCopy(msg, sensor_msgs::image_encodings::BGR8);
        }
        catch (cv_bridge::Exception& e)
        {
            ROS_ERROR("cv_bridge exception: %s", e.what());
            return;
        }
        cvPtr->image.copyTo(img);
        cv::cvtColor(img, img_gray, cv::COLOR_BGR2GRAY);

        myHarris_dst = cv::Mat::zeros( img_gray.size(), CV_32FC(6) );
        Mc = cv::Mat::zeros( img_gray.size(), CV_32FC1 );
        cv::cornerEigenValsAndVecs( img_gray, myHarris_dst, blockSize_harris, apertureSize, cv::BORDER_DEFAULT );

        for( int j = 0; j < img_gray.rows; j++ )
            for( int i = 0; i < img_gray.cols; i++ )
            {
                float lambda_1 = myHarris_dst.at<cv::Vec6f>(j, i)[0];
                float lambda_2 = myHarris_dst.at<cv::Vec6f>(j, i)[1];
                Mc.at<float>(j,i) = lambda_1*lambda_2 - 0.04f*pow( ( lambda_1 + lambda_2 ), 2 );
            }

        cv::minMaxLoc( Mc, &myHarris_minVal, &myHarris_maxVal, 0, 0, cv::Mat() );
        this->myHarris_function(img, img_gray);
        cv::waitKey(2);
    }

    void CornerDetection::myHarris_function(cv::Mat img, cv::Mat img_gray)
    {
        myHarris_copy = img.clone();
        if( myHarris_qualityLevel < 1 )
            myHarris_qualityLevel = 1;

        for( int j = 0; j < img_gray.rows; j++ )
            for( int i = 0; i < img_gray.cols; i++ )
                if( Mc.at<float>(j,i) > myHarris_minVal + ( myHarris_maxVal - myHarris_minVal )*myHarris_qualityLevel/max_qualityLevel )
                    cv::circle( myHarris_copy, cv::Point(i,j), 4, cv::Scalar( rng.uniform(0,255), rng.uniform(0,255), rng.uniform(0,255) ), -1, 8, 0 );

        cv::imshow( harris_win, myHarris_copy );
    }

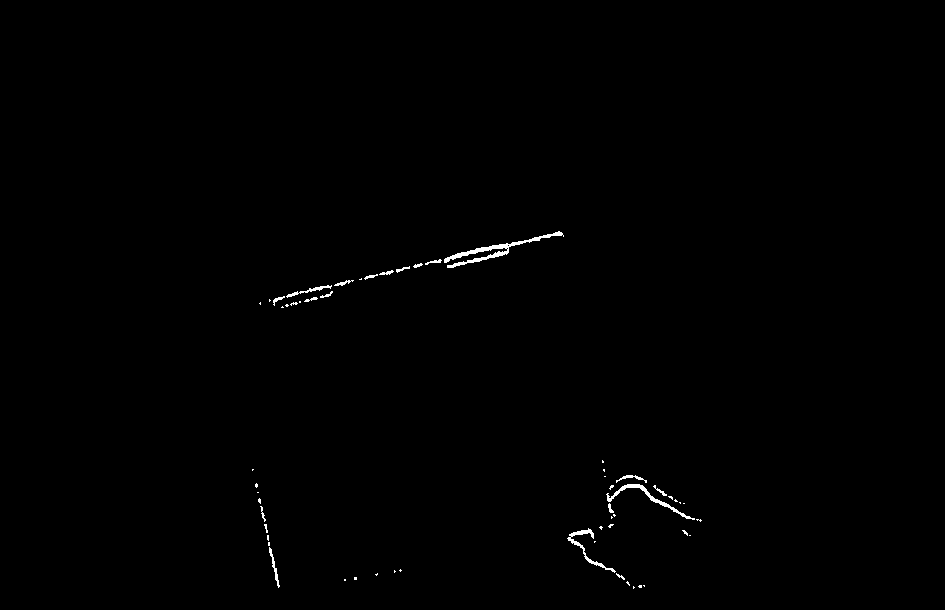
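
The Harris code above only draws a circle on every pixel that passes the quality threshold, so one physical corner usually produces a blob of many pixels. For pose estimation a list of discrete 2D points is needed instead. The following is a minimal sketch of one way to get such a list, written in plain C++ without OpenCV so the logic is easy to follow. The `Pixel` struct, `harrisResponse`, and `extractCorners` are hypothetical names introduced for illustration, the response map is assumed to be a row-major float buffer, and `quality` is assumed to be a fraction in [0, 1] that plays the role of `myHarris_qualityLevel/max_qualityLevel`.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical 2D pixel type, standing in for cv::Point.
struct Pixel { int x; int y; };

// Harris response from the two eigenvalues, with k = 0.04 as in the loop above.
inline float harrisResponse(float lambda1, float lambda2, float k = 0.04f)
{
    const float trace = lambda1 + lambda2;
    return lambda1 * lambda2 - k * trace * trace;
}

// Collect the pixels whose response is above the quality threshold and for
// which no pixel in the 3x3 neighbourhood has a larger response.
// `response` is assumed to be row-major with rows * cols entries.
// Border pixels are skipped to keep the neighbourhood lookup in bounds.
std::vector<Pixel> extractCorners(const std::vector<float>& response,
                                  int rows, int cols,
                                  float minVal, float maxVal,
                                  float quality)
{
    std::vector<Pixel> corners;
    const float threshold = minVal + (maxVal - minVal) * quality;

    for (int y = 1; y + 1 < rows; ++y)
    {
        for (int x = 1; x + 1 < cols; ++x)
        {
            const float r = response[static_cast<std::size_t>(y) * cols + x];
            if (r <= threshold)
                continue;

            // Simple 3x3 non-maximum suppression.
            bool isLocalMax = true;
            for (int dy = -1; dy <= 1 && isLocalMax; ++dy)
            {
                for (int dx = -1; dx <= 1; ++dx)
                {
                    if (dx == 0 && dy == 0)
                        continue;
                    const std::size_t idx =
                        static_cast<std::size_t>(y + dy) * cols + (x + dx);
                    if (response[idx] > r)
                    {
                        isLocalMax = false;
                        break;
                    }
                }
            }
            if (isLocalMax)
                corners.push_back(Pixel{x, y});
        }
    }
    return corners;
}
```

With OpenCV the same idea could be applied to `Mc` directly, and the resulting points would be the 2D candidates to match against the CAD model. Flat plateaus in the response can still yield several adjacent points, so a larger suppression window may be needed in practice.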

And here is the C++ function for contour detection:

    void TrackSequential::ContourDetection(cv::Mat thresh_in, cv::Mat &output_)
    {
        cv::Mat temp;
        cv::Rect objectBoundingRectangle = cv::Rect(0,0,0,0);
        thresh_in.copyTo(temp);

        std::vector<std::vector<cv::Point> > contours;
        cv::findContours(temp, contours, cv::RETR_EXTERNAL, cv::CHAIN_APPROX_SIMPLE);

        if(!contours.empty())
        {
            // findContours does not sort its output, so select the largest contour by area
            size_t largest = 0;
            for(size_t k = 1; k < contours.size(); k++)
                if(cv::contourArea(contours[k]) > cv::contourArea(contours[largest]))
                    largest = k;

            objectBoundingRectangle = cv::boundingRect(contours[largest]);

            // Mark the centre of the bounding box with a green circle
            int x = objectBoundingRectangle.x+objectBoundingRectangle.width/2;
            int y = objectBoundingRectangle.y+objectBoundingRectangle.height/2;
            cv::circle(output_,cv::Point(x,y),10,cv::Scalar(0,255,0),2);
        }
    }

I also have the 3D CAD model of the object that I would like to track and whose 6D pose I want to estimate. My question is: how can I use the 2D points detected by the Harris corner and contour functions in solvePnPRansac or solvePnP to track the object and get its 6D pose in real time?
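
To make the correspondence problem concrete, here is a rough sketch of one possible matching step, not a definitive solution. It assumes a pose prediction is already available (for example the pose from the previous frame, which means the very first frame needs some other initialisation), a pinhole camera without lens distortion, and known intrinsics. It projects the 3D model points with the predicted pose and pairs each one with the nearest detected 2D corner within a pixel radius. All types and function names (`Point2`, `Point3`, `Pose`, `Intrinsics`, `project`, `matchByReprojection`) are hypothetical and written in plain C++ so the logic does not depend on any library.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

struct Point2 { double x, y; };
struct Point3 { double x, y, z; };

// Hypothetical containers: row-major 3x3 rotation plus translation,
// and pinhole intrinsics (focal lengths and principal point, in pixels).
struct Pose { std::array<double, 9> R; std::array<double, 3> t; };
struct Intrinsics { double fx, fy, cx, cy; };

// Project a model point into the image. Returns false if the point ends up
// behind (or on) the camera plane. Lens distortion is ignored here.
bool project(const Point3& p, const Pose& pose, const Intrinsics& K, Point2& out)
{
    const std::array<double, 9>& R = pose.R;
    const double X = R[0] * p.x + R[1] * p.y + R[2] * p.z + pose.t[0];
    const double Y = R[3] * p.x + R[4] * p.y + R[5] * p.z + pose.t[1];
    const double Z = R[6] * p.x + R[7] * p.y + R[8] * p.z + pose.t[2];
    if (Z <= 0.0)
        return false;
    out.x = K.fx * X / Z + K.cx;
    out.y = K.fy * Y / Z + K.cy;
    return true;
}

// For every model point, find the nearest detected corner within
// maxPixelDist of its projection. Returns (modelIndex, cornerIndex) pairs.
// Note: two model points may be paired with the same corner; a real
// implementation would probably want to resolve such conflicts.
std::vector<std::pair<std::size_t, std::size_t> > matchByReprojection(
    const std::vector<Point3>& model,
    const std::vector<Point2>& corners,
    const Pose& predicted,
    const Intrinsics& K,
    double maxPixelDist)
{
    std::vector<std::pair<std::size_t, std::size_t> > matches;
    for (std::size_t i = 0; i < model.size(); ++i)
    {
        Point2 uv;
        if (!project(model[i], predicted, K, uv))
            continue;

        bool found = false;
        double best = maxPixelDist;
        std::size_t bestIdx = 0;
        for (std::size_t j = 0; j < corners.size(); ++j)
        {
            const double d = std::hypot(corners[j].x - uv.x, corners[j].y - uv.y);
            if (d <= best)
            {
                best = d;
                bestIdx = j;
                found = true;
            }
        }
        if (found)
            matches.emplace_back(i, bestIdx);
    }
    return matches;
}
```

The matched pairs would then fill two vectors in the same order, one with the 3D model points and one with the 2D corners, which is the input that `cv::solvePnPRansac(objectPoints, imagePoints, cameraMatrix, distCoeffs, rvec, tvec)` expects. RANSAC is meant to reject the wrong pairs that nearest-neighbour matching will inevitably produce, and in general at least four correspondences are required.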

Thanks