Hi,

I am still new to calibration with OpenCV. My setup is composed of three similar cameras (same lens and sensor), arranged as described below; the measurements are approximate. The rotation between the cameras should be approximately zero.

Camera 1->2

- X axis: -380 mm (±50 mm)
- Y axis: -290 mm (±50 mm)
- Z axis: -460 mm (±50 mm)

Camera 1->3

- X axis: -850 mm (±10 mm)
- Y axis: 0 mm (±50 mm)
- Z axis: 0 mm (±50 mm)

Methodology

I did the intrinsic calibration of each camera with the code below. For every camera, the returned RMS reprojection error was around 1.5 px.

```python
import pickle

import cv2
from cv2 import aruco as ar

# `data` is a dict loaded from a pickle file (loading code not shown) with the
# detections ('corners', 'ids', 'counter'), 'imsize' and the initial guesses.

ar_dict = ar.getPredefinedDictionary(ar.DICT_6X6_1000)
board = ar.GridBoard_create(markersX=6, markersY=9, markerLength=0.04,
                            markerSeparation=0.01, dictionary=ar_dict, firstMarker=108)

# Termination types are bit flags: combine them with |, not &
criteria = (cv2.TERM_CRITERIA_EPS | cv2.TERM_CRITERIA_COUNT, 10000, 1e-10)
flags = (cv2.CALIB_RATIONAL_MODEL | cv2.CALIB_USE_INTRINSIC_GUESS |
         cv2.CALIB_FIX_TANGENT_DIST | cv2.CALIB_FIX_K5 | cv2.CALIB_FIX_K6)

# calibrateCameraAruco returns five values: RMS error, K, dist, rvecs, tvecs
rms, camera_matrix, distortion_coefficients0, rvecs, tvecs = cv2.aruco.calibrateCameraAruco(
    data['corners'], data['ids'], data['counter'], board, data['imsize'],
    cameraMatrix=data['cam_init'], distCoeffs=data['dist_init'],
    flags=flags, criteria=criteria)
```
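A small illustration of why the first element of the `criteria` tuples in this post matters: the termination type is a bit mask, and in OpenCV `TERM_CRITERIA_COUNT` (same value as `TERM_CRITERIA_MAX_ITER`) is 1 while `TERM_CRITERIA_EPS` is 2. The sketch below uses those literal values so it runs without OpenCV installed; combining them with `&` yields 0, i.e. no criterion selected at all, whereas `|` yields 3 (stop on either the iteration count or epsilon). The same reasoning applies to the `criteria` passed to `cv2.stereoCalibrate`.

```python
# Literal values of OpenCV's termination-type flags (cv::TermCriteria::Type),
# used directly so this snippet runs without OpenCV installed.
TERM_CRITERIA_COUNT = 1   # same value as cv2.TERM_CRITERIA_MAX_ITER
TERM_CRITERIA_EPS = 2

# The type field is a bit mask, so flags are combined with a bitwise OR
bitwise_and = TERM_CRITERIA_EPS & TERM_CRITERIA_COUNT  # 0: no criterion selected
bitwise_or = TERM_CRITERIA_EPS | TERM_CRITERIA_COUNT   # 3: stop on max_iter OR eps

criteria = (bitwise_or, 10000, 1e-10)  # (type, max_iter, epsilon)
print(bitwise_and, bitwise_or)  # 0 3
```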

Here are the intrinsics I got for cameras 1 and 2, respectively. The lens focal length is 2.8 mm, the pixel size is 3 µm and the sensor resolution is 1928 (w) × 1208 (h) pixels:

```
Camera 1
camera matrix: [[951.48868149   0.           974.18640697]
                [  0.           949.82336112 615.18242086]
                [  0.           0.           1.          ]]
distortion: [[ 2.47752302e-01 -1.35352132e-02  0.00000000e+00  0.00000000e+00
               5.26418500e-04  6.09717199e-01  0.00000000e+00  0.00000000e+00
               0.00000000e+00  0.00000000e+00  0.00000000e+00  0.00000000e+00
               0.00000000e+00  0.00000000e+00]]

Camera 2
camera matrix: [[964.28616544   0.           961.13919655]
                [  0.           962.12057895 581.30821674]
                [  0.           0.           1.          ]]
distortion: [[ 0.34504854 -0.02941867  0.          0.          0.00217154  0.69914104
               0.          0.          0.          0.          0.          0.
               0.          0.        ]]
```
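As a rough plausibility check on these numbers, the datasheet values give a nominal focal length of 2.8 mm / 3 µm ≈ 933 px, with the image centre near (964, 604). The plain-NumPy sketch below (values copied from the output above) compares the calibrated fx, fy, cx, cy against those nominal values and labels the 14 distortion coefficients with OpenCV's ordering (k1, k2, p1, p2, k3, k4, k5, k6, s1 to s4, τx, τy), which makes it easy to see that p1, p2, k5 and k6 stayed at zero, as the flags requested. Treat the nominal values as approximate: datasheet focal lengths are nominal, and a real principal point is rarely exactly at the image centre.

```python
import numpy as np

# Datasheet values quoted in the post
focal_length_mm = 2.8
pixel_pitch_mm = 0.003            # 3 µm
width, height = 1928, 1208

f_nominal_px = focal_length_mm / pixel_pitch_mm          # ~933.3 px
centre_nominal = np.array([width / 2.0, height / 2.0])   # rough: (964, 604)

# (fx, fy, cx, cy) copied from the camera matrices above
calibrated = {
    "camera 1": (951.48868149, 949.82336112, 974.18640697, 615.18242086),
    "camera 2": (964.28616544, 962.12057895, 961.13919655, 581.30821674),
}

for name, (fx, fy, cx, cy) in calibrated.items():
    rel = 100.0 * (np.array([fx, fy]) - f_nominal_px) / f_nominal_px
    off = np.array([cx, cy]) - centre_nominal
    print(f"{name}: fx, fy vs nominal {rel.round(1)} %, "
          f"principal point offset {off.round(1)} px")

# OpenCV's order for a 14-element distortion vector
DIST_NAMES = ["k1", "k2", "p1", "p2", "k3", "k4", "k5", "k6",
              "s1", "s2", "s3", "s4", "tau_x", "tau_y"]
dist_cam1 = [2.47752302e-01, -1.35352132e-02, 0.0, 0.0,
             5.26418500e-04, 6.09717199e-01] + [0.0] * 8
print(dict(zip(DIST_NAMES, dist_cam1)))
```

With these values, camera 1's fx comes out about 2 % above nominal and camera 2's about 3 % above.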

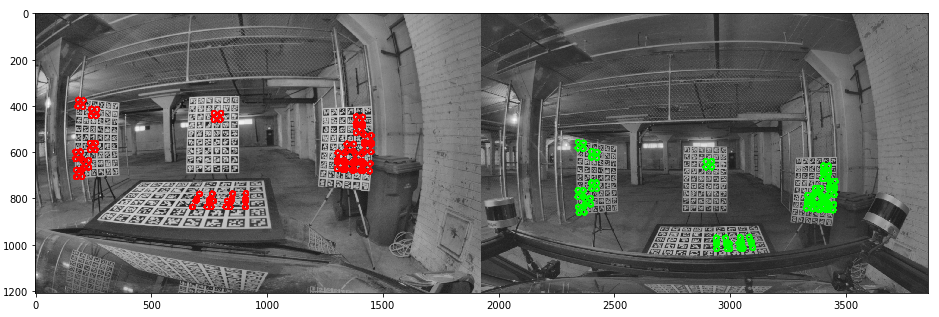

For the extrinsic calibration, I am using four ArUco charts, as shown in the images below, where I colored the detected image points.

Here's the code I am using for extrinsic calibration.

```python
import cv2

flags = cv2.CALIB_FIX_INTRINSIC | cv2.CALIB_RATIONAL_MODEL
# Termination types are bit flags: combine them with |, not &
criteria = (cv2.TERM_CRITERIA_EPS | cv2.TERM_CRITERIA_COUNT, 10000, 1e-10)

# Use the returned values instead of relying on preallocated output arrays.
# R and T map camera-1 coordinates to camera-2 coordinates: X2 = R @ X1 + T
rms, _, _, _, _, rot, tvec, essential, fundamental = cv2.stereoCalibrate(
    ext_calib12['obj_pts'], ext_calib12['img_pts'][0], ext_calib12['img_pts'][1],
    int_calib1['est_cam_mat'], int_calib1['est_dist'],
    int_calib2['est_cam_mat'], int_calib2['est_dist'],
    int_calib1['imsize'], flags=flags, criteria=criteria)
```

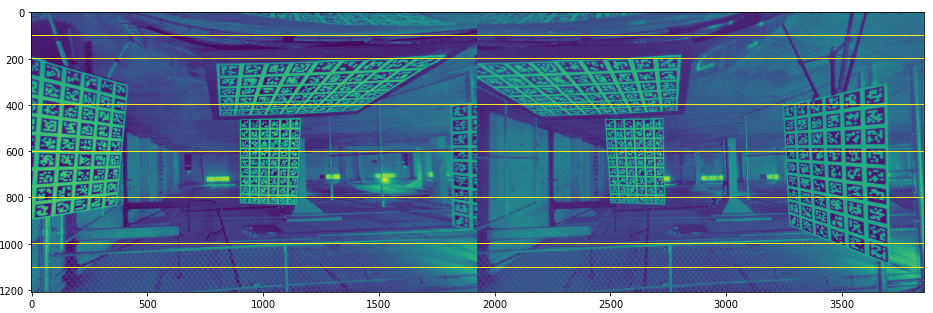

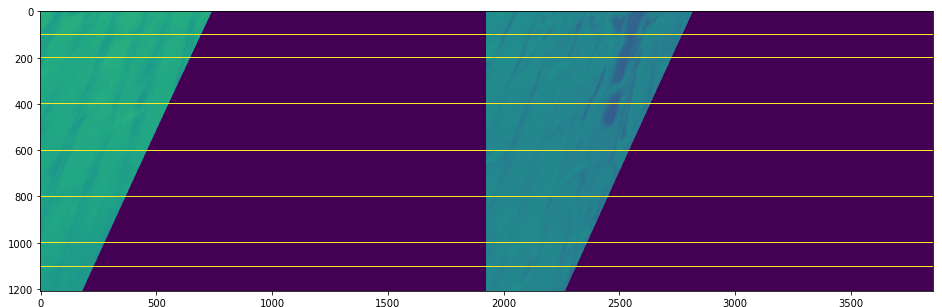

Then, to validate the calibration result, I rectify the images as follows:

```python
import cv2
import numpy as np

imsize = int_calib1["imsize"]
K1, D1 = int_calib1['est_cam_mat'], int_calib1['est_dist']
K2, D2 = int_calib2['est_cam_mat'], int_calib2['est_dist']

# stereoRectify returns R1, R2, P1, P2, Q and the two valid-pixel ROIs
R1, R2, P1, P2, Q, roi1, roi2 = cv2.stereoRectify(
    K1, D1, K2, D2, imsize, rot, tvec, flags=cv2.CALIB_ZERO_DISPARITY, alpha=-1)

# Build the undistort + rectify maps (cv2.CV_32FC1 == 5) and remap each image
mapx, mapy = cv2.initUndistortRectifyMap(K1, D1, R1, P1, imsize, cv2.CV_32FC1)
dst1 = cv2.remap(ext_calib12['img1'], mapx, mapy, cv2.INTER_LINEAR)

mapx, mapy = cv2.initUndistortRectifyMap(K2, D2, R2, P2, imsize, cv2.CV_32FC1)
dst2 = cv2.remap(ext_calib12['img2'], mapx, mapy, cv2.INTER_LINEAR)

# Side by side: corresponding points should lie on the same row
img_stereo = np.hstack((dst1, dst2))
```
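Eyeballing the side-by-side image can be complemented with a number: after a correct rectification, corresponding points lie on the same row, so the vertical offset y1 - y2 between matches should be close to zero. The sketch below assumes you already have matched points mapped into the rectified images, for example the detected chart corners passed through `cv2.undistortPoints(pts.reshape(-1, 1, 2), K1, D1, R=R1, P=P1)` (and `K2, D2, R2, P2` for the second camera). The function name `vertical_disparity_stats` is hypothetical, and the toy coordinates are made up.

```python
import numpy as np


def vertical_disparity_stats(pts1_rect, pts2_rect):
    """Row misalignment between matched points in a rectified stereo pair.

    pts1_rect, pts2_rect: corresponding points (N x 2, or N x 1 x 2 as returned
    by OpenCV) already mapped into the rectified images, e.g. with
    cv2.undistortPoints(pts.reshape(-1, 1, 2), K1, D1, R=R1, P=P1)
    for camera 1 and K2, D2, R2, P2 for camera 2.
    """
    p1 = np.asarray(pts1_rect, dtype=float).reshape(-1, 2)
    p2 = np.asarray(pts2_rect, dtype=float).reshape(-1, 2)
    if p1.shape != p2.shape:
        raise ValueError("point sets must have the same length")
    dy = p1[:, 1] - p2[:, 1]  # should be ~0 after a good rectification
    return {
        "mean_abs": float(np.mean(np.abs(dy))),
        "rms": float(np.sqrt(np.mean(dy ** 2))),
        "max_abs": float(np.max(np.abs(dy))),
    }


# Toy example with made-up rectified coordinates (not real data)
print(vertical_disparity_stats([[100.0, 10.0], [200.0, 20.0]],
                               [[90.0, 11.0], [185.0, 18.0]]))
```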

Result

The rectified image pair for 1->3 looks fairly good, and the extrinsic output is roughly within the error margin of my physical measurements:

tvec = [[843.28295989], [ 2.38669229], [-58.21281309]]

However, the rectified image pair for 1->2 is completely off, and the extrinsic result is not entirely within the margin of my measurements:

tvec = [[385.5120268 ], [368.36674983], [496.03076513]]
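When comparing `tvec` with the tape-measured offsets, keep in mind the convention of `cv2.stereoCalibrate`: `R` and `T` map a point from camera 1 coordinates to camera 2 coordinates (X2 = R·X1 + T), so camera 2's optical centre expressed in camera 1's frame is C = -Rᵀ·T, not T itself. The rotation matrices were not posted, so this sketch assumes R ≈ I (the rig is described as having roughly no rotation), and it assumes the measured offsets are the position of camera 2 (resp. camera 3) in camera 1's frame, in millimetres. The baseline length |T| does not depend on R, so it is a convention-free check. `camera_centre_in_first` is a hypothetical helper name.

```python
import numpy as np


def camera_centre_in_first(R, T):
    """Optical centre of the second camera expressed in the first camera's frame.

    cv2.stereoCalibrate returns R, T with X2 = R @ X1 + T, so the second
    camera's centre (X2 = 0) sits at X1 = -R.T @ T.
    """
    return -np.asarray(R, dtype=float).T @ np.asarray(T, dtype=float).reshape(3)


# Assumption: R ~ identity (the rotation matrices were not posted).
R = np.eye(3)
T12 = np.array([385.5120268, 368.36674983, 496.03076513])   # mm, from the post
T13 = np.array([843.28295989, 2.38669229, -58.21281309])    # mm, from the post

# Assumption: the measurements are camera 2/3 positions in camera 1's frame.
measured = {"1->2": np.array([-380.0, -290.0, -460.0]),
            "1->3": np.array([-850.0, 0.0, 0.0])}

for name, T in (("1->2", T12), ("1->3", T13)):
    C = camera_centre_in_first(R, T)
    print(name,
          "centre:", np.round(C, 1),
          "centre - measured:", np.round(C - measured[name], 1),
          "baseline |T|:", round(float(np.linalg.norm(T)), 1),
          "measured:", round(float(np.linalg.norm(measured[name])), 1))
```

With the posted values, the 1->3 baseline comes out at about 845 mm against 850 mm measured, while the 1->2 baseline is about 728 mm against about 663 mm measured, and the 1->2 centre differs from the measurement by roughly 78 mm along Y.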

I have two hypotheses to explain these poor results:

1. The intrinsic calibration is not good. I had a hard time performing it: I took around 100 images and selected a subset that maximized coverage of the camera's FOV. In the end, the resulting intrinsics varied a lot depending on which images I chose, and I tried the rectification with several of these intrinsic results but never got a good result. Is there a way to improve my intrinsic calibration, for example a flag I should add? Right now I use a single ArUco board with 54 markers (6 × 9) that I move around in front of the camera to cover every part of the FOV. Is there something specific I could do to improve this setup?

2. I need more charts for the extrinsic calibration. Right now at least 5 markers are detected on 3 orthogonal planes. Would it help to add more charts, or to detect more features (e.g. with more image pre-processing)?

Every time I run the extrinsic calibration on cameras that are only displaced along X, it works relatively well. However, as soon as there is also displacement along Y and Z, the calibration starts to be off.
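One way to make this observation concrete, under the same assumption that R ≈ I: `cv2.stereoRectify` (Bouguet's method) virtually rotates both cameras so that the baseline becomes parallel to the image rows (or columns), so the rotation it has to apply grows with the angle between the baseline and camera 1's x axis. Equivalently, the epipole (camera 2's centre projected into image 1) moves from far outside the image toward the image itself, and planar, homography-based rectification becomes very poorly conditioned when the epipole is inside or close to the image. The helper names below are hypothetical; the numbers are the ones posted above.

```python
import numpy as np

# Camera 1 intrinsics from the post; R assumed ~ identity (not posted).
K1 = np.array([[951.48868149, 0.0, 974.18640697],
               [0.0, 949.82336112, 615.18242086],
               [0.0, 0.0, 1.0]])
R = np.eye(3)
T12 = np.array([385.5120268, 368.36674983, 496.03076513])
T13 = np.array([843.28295989, 2.38669229, -58.21281309])


def rectification_angle_deg(T):
    """Angle between the baseline and the image x (or y) axis, in degrees.

    Uses the larger of |Tx| and |Ty|, roughly mirroring how cv2.stereoRectify
    chooses between horizontal and vertical rectification.
    """
    T = np.asarray(T, dtype=float).reshape(3)
    return float(np.degrees(np.arccos(max(abs(T[0]), abs(T[1])) / np.linalg.norm(T))))


def epipole_in_cam1(K, R, T):
    """Pixel position of camera 2's centre projected into image 1 (the epipole)."""
    C2 = -R.T @ np.asarray(T, dtype=float).reshape(3)  # camera 2 centre, frame 1
    e = K @ C2                                         # homogeneous pixel coords
    return e[:2] / e[2]


for name, T in (("1->2", T12), ("1->3", T13)):
    print(name, "angle:", round(rectification_angle_deg(T), 1), "deg",
          "epipole:", np.round(epipole_in_cam1(K1, R, T), 1))
```

With the posted values this gives roughly 4° for 1->3 but about 58° for 1->2, and the 1->2 epipole lands near (1714, 1321) px, just below the bottom edge of the 1928 × 1208 image, whereas the 1->3 epipole is more than 10,000 px to the side. That would be consistent with the 1->2 rectification looking broken even if the extrinsics were only moderately off.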

I am using OpenCV 4.0.0