I believe I am on the wrong track. I need to achieve something like a 1D->2D pose estimation, which I was hoping to reduce to the 3D pose estimation problem, for which widely used solutions exist, such as OpenCV's solvePnP() implementations.

What I am trying to solve:

My camera is pointed _along a surface_, i.e. it does not look down onto the surface but sees it edge-on from the side, and it sees everything that is on the surface. That means I already know the z-coordinate of every object: z = 0.

I have 2 cameras pointed at the surface, and I want to detect objects on the surface by intersecting their projection lines. For that, I need to know the 2D location of each camera with respect to the 2D coordinate system of the surface.
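A minimal sketch of the intersection step, assuming each camera's 2D position and the world-frame direction of its ray (an angle in radians, measured in the surface plane) are already known. `intersect_bearings` is a hypothetical helper name, not a library function.

```python
import math


def intersect_bearings(c1, angle1, c2, angle2, eps=1e-12):
    """Intersect two rays on the surface plane.

    c1, c2: (x, y) camera positions; angle1, angle2: world-frame ray
    directions in radians. Returns (x, y), or None if the rays are
    (nearly) parallel or would only meet behind one of the cameras.
    """
    d1 = (math.cos(angle1), math.sin(angle1))
    d2 = (math.cos(angle2), math.sin(angle2))
    # Solve c1 + s*d1 = c2 + k*d2 for s and k with Cramer's rule.
    det = -d1[0] * d2[1] + d1[1] * d2[0]
    if abs(det) < eps:
        return None  # parallel rays never meet
    rx, ry = c2[0] - c1[0], c2[1] - c1[1]
    s = (-rx * d2[1] + ry * d2[0]) / det
    k = (d1[0] * ry - d1[1] * rx) / det
    if s < 0 or k < 0:
        return None  # the lines cross behind at least one camera
    return (c1[0] + s * d1[0], c1[1] + s * d1[1])
```

For example, `intersect_bearings((0.0, 0.0), math.pi / 4, (10.0, 0.0), 3 * math.pi / 4)` returns approximately `(5.0, 5.0)`. With more than two cameras, a least-squares intersection of all rays would be the natural generalisation.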

The cameras are fixed, so I should be able to determine each camera's location by placing objects on the surface in a known arrangement and computing the camera location from the object positions in the image. Although my camera images are 2D, I think I can treat them as 1D, because the objects will all lie on the same image row, i.e. have the same y-coordinate in the image (assuming a level and aligned camera setup).
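Treating the image as 1D amounts to keeping only the pixel column of each object and turning it into a bearing angle with the calibrated focal length `fx` and principal point `cx`. A minimal sketch, assuming the column has already been undistorted and that world z points up out of the surface (both are assumptions; the function names are hypothetical). If distortion matters, OpenCV's `cv2.undistortPoints(points, cameraMatrix, distCoeffs)` returns normalised coordinates whose x component already equals `(u - cx) / fx`.

```python
import math


def pixel_to_bearing(u, fx, cx):
    # Angle (radians) between the optical axis and the ray through pixel
    # column u; positive means right of the principal point cx. Only the
    # column is used: this is the "treat the image as 1D" reduction.
    # u is assumed to be undistorted already.
    return math.atan2(u - cx, fx)


def bearing_to_world_angle(heading, bearing):
    # World-frame direction of that ray in the surface plane, given the
    # camera heading (direction of its optical axis). Assumes world z points
    # up out of the surface, so "right in the image" is clockwise from above.
    return heading - bearing
```

With hypothetical values `fx = 800` and `cx = 640`, pixel column 720 gives `atan(0.1)`, about 0.0997 rad (roughly 5.7°) to the right of the optical axis.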

So I believe the problem I need to solve is something like a 1D->2D pose estimation.
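For reference, this is the forward model such a 1D->2D pose estimation would have to invert: three unknowns (camera x, y and heading) and one measured pixel column per object. A minimal sketch, assuming an ideal, undistorted pinhole camera and world z pointing up; `project_1d` is a hypothetical name.

```python
import math


def project_1d(point, cam_pos, heading, fx, cx):
    """Project a point on the surface (z = 0) into a 1D pinhole camera.

    point, cam_pos: (x, y) in surface coordinates; heading: direction of
    the optical axis in radians. Returns the pixel column u, or None if
    the point is not in front of the camera.
    """
    dx, dy = point[0] - cam_pos[0], point[1] - cam_pos[1]
    depth = math.cos(heading) * dx + math.sin(heading) * dy    # along the axis
    lateral = math.sin(heading) * dx - math.cos(heading) * dy  # to the right
    if depth <= 0:
        return None
    return cx + fx * lateral / depth
```

For a camera at (20, -340) looking along +y (`heading = pi / 2`), a point straight ahead such as (20, 0) lands on the principal point column `cx`.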

I can't find a geometrical solution for determining the 2D location from a known set of 1D points, so I tried to use solvePnP(), feeding it only the dimensions I need. I have calibrated my camera to get the camera matrix and distortion vector, and I have also applied solvePnP() in 3D space, which gave me okay results and suggests that my implementation is alright.
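One possible linear route, sketched under these assumptions: an ideal, undistorted 1D pinhole camera; depth measured along (cos h, sin h); lateral offset measured to the right along (sin h, -cos h); world z pointing up. Requiring "lateral - s * depth = 0" for each point, where s = (u - cx) / fx, gives one equation that is linear in four auxiliary unknowns, so three or more points determine the pose via an SVD null vector. This is closely related to the classical three-point resection (Snellius-Pothenot) problem from surveying. `pose_from_1d` is a hypothetical helper, not an OpenCV function; with noisy data the linear estimate would usually be refined by nonlinear least squares on the reprojection error.

```python
import numpy as np


def pose_from_1d(world_pts, us, fx, cx):
    """Estimate a 1D camera's position and heading from >= 3 known points.

    world_pts: (N, 2) known surface coordinates of the objects (z = 0).
    us:        (N,) undistorted pixel columns of the same objects.
    Returns (position as array [x, y], heading in radians).
    """
    P = np.asarray(world_pts, dtype=float)
    if len(P) < 3:
        raise ValueError("need at least 3 points")
    s = (np.asarray(us, dtype=float) - cx) / fx  # normalised image coordinate
    X, Y = P[:, 0], P[:, 1]
    # "lateral - s * depth = 0" for every point is linear in the unknowns
    # (a, b, t1, t2) = (cos h, sin h, a*Cy - b*Cx, a*Cx + b*Cy).
    A = np.column_stack([-Y - s * X, X - s * Y, np.ones_like(s), s])
    v = np.linalg.svd(A)[2][-1]              # (least-squares) null vector of A
    a, b, t1, t2 = v / np.hypot(v[0], v[1])  # enforce a^2 + b^2 = 1
    cam = np.array([a * t2 - b * t1, b * t2 + a * t1])
    # The null vector's sign is arbitrary; flipping it keeps cam but turns
    # the heading by pi. Keep the heading that puts the points in front.
    if np.mean((P - cam) @ np.array([a, b])) < 0:
        a, b = -a, -b
    return cam, float(np.arctan2(b, a))
```

Feeding it the known object layout and the measured pixel columns of those objects returns the camera position in surface coordinates plus its heading.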

But when I provide the 1D image points (i.e. points which all have the same y-coordinate), the result is not usable. Once, by luck, I used points with slightly different y-coordinates, and that actually produced a valid camera location in world coordinates (z was near zero). So I think this is a limitation of the PnP solvers: they need some asymmetry in the image points. Treating the points as 1D puts them all on the same line, which gives the solvers too little to work with.
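One way to see why the planar case degenerates, assuming an ideal pinhole camera: for object points on z = 0 the image is related to the plane by the homography H = K [r1 r2 t], and det [r1 r2 t] = r3 · t = -C_z, the negated height of the camera centre above the surface. With the camera centre on the surface plane, H is singular and the whole plane maps onto a single image line, which is exactly the 1D image. OpenCV's documentation for the default `SOLVEPNP_ITERATIVE` method states that planar object points are initialised from a homography decomposition, so this configuration is degenerate for it, and a camera centre slightly off the plane may explain why the points with slightly different y-coordinates worked. The helper names below are hypothetical.

```python
import numpy as np


def camera_rotation(heading, pitch):
    """World-to-camera rotation (OpenCV axes: x right, y down, z forward),
    assuming world z points up out of the surface; pitch > 0 tilts down."""
    fwd = np.array([np.cos(pitch) * np.cos(heading),
                    np.cos(pitch) * np.sin(heading),
                    -np.sin(pitch)])
    right = np.array([np.sin(heading), -np.cos(heading), 0.0])
    down = np.cross(fwd, right)  # completes a right-handed camera frame
    return np.vstack([right, down, fwd])


def plane_homography_det(cam_pos, heading, pitch=0.0):
    """det of [r1 r2 t], the homography (without K) from the z = 0 plane
    to the image; it equals -cam_pos[2]."""
    R = camera_rotation(heading, pitch)
    t = -R @ np.asarray(cam_pos, dtype=float)
    return np.linalg.det(np.column_stack([R[:, 0], R[:, 1], t]))
```

`plane_homography_det((20, -340, 0), np.pi / 2)` is zero up to rounding, while raising the camera centre to z = 5 gives about -5, regardless of pitch.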

But now, what can I do? Shouldn't 1D->2D pose estimation be a simpler problem than the 2D->3D one? Can anyone help me think of a geometrical solution, or point me to another way of interpreting the problem of locating objects on a surface?
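A rough count of unknowns and equations, assuming a calibrated, distortion-free camera with world z pointing up, suggests the 1D problem is smaller but needs the same minimum number of points. With camera centre $\mathbf{C}$, heading $\theta$ and known object positions $\mathbf{P}_i$, each object gives one measured column $u_i$:

$$
u_i = c_x + f_x\,\frac{(\sin\theta,\,-\cos\theta)\cdot(\mathbf{P}_i-\mathbf{C})}{(\cos\theta,\,\sin\theta)\cdot(\mathbf{P}_i-\mathbf{C})},
\qquad i = 1,\dots,N
$$

$$
\text{1D}\to\text{2D: } 3 \text{ unknowns } (C_x, C_y, \theta),\ 1 \text{ equation per point} \;\Rightarrow\; N \ge 3
$$

$$
\text{2D}\to\text{3D: } 6 \text{ unknowns } (\mathbf{R}, \mathbf{t}),\ 2 \text{ equations per point} \;\Rightarrow\; N \ge 3
$$

With exactly three points, the position is not determined when the camera lies on the circle through them: by the inscribed angle theorem, every position on the same arc of that circle sees the same angles between the points.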

Any feedback, hints, discussions are highly appreciated!

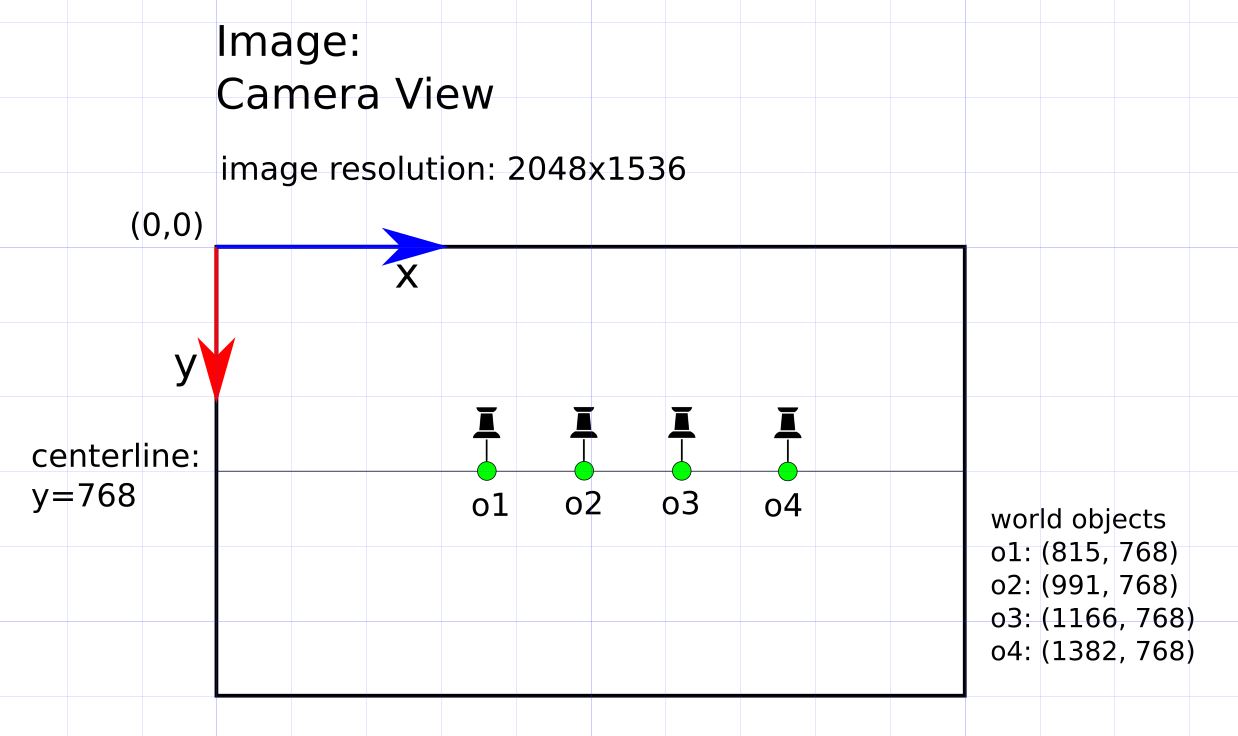

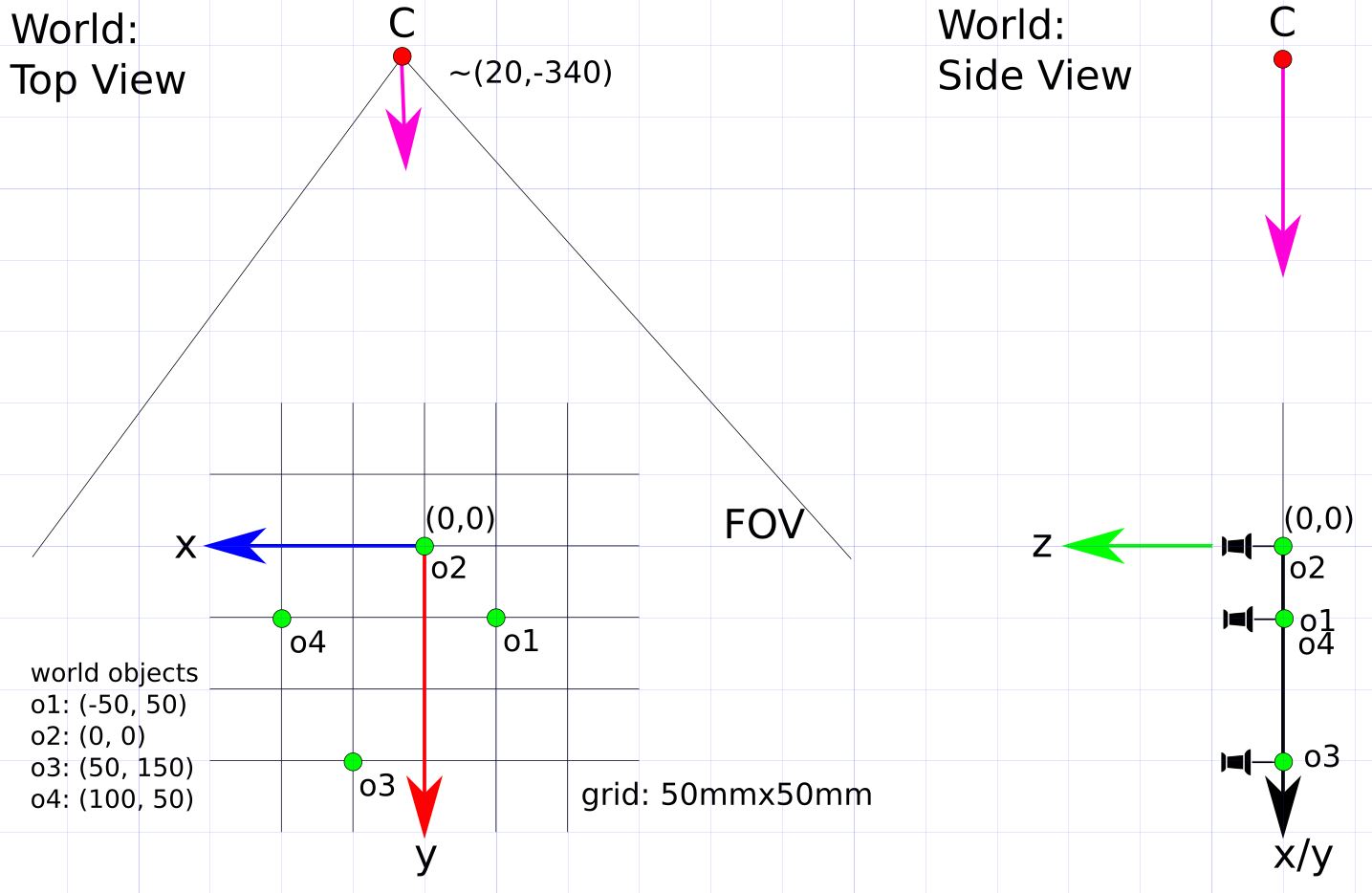

Here are some sketches that (hopefully) illustrate the setup and how I am trying to solve it with pose estimation.

The world, looking at the surface from the top, and from the side (as the camera).

- C: The camera, with its measured real-world location around (20, -340).
- o<N>: Object points 1-4. These could be pins or anything else.
- x/y/z: The world coordinate system, as I define it.
- FOV: Field of view of the camera.

The image, taken from camera C. All objects are roughly at the center/zero line.