I have been struggling to get the structured light examples working with OpenCV (Windows 10, C++, Visual Studio). I have tried multiple computers and projectors, and even the Java version of OpenCV. In every case, the Gray code patterns it generates seem to be wrong (or there is something in Gray code theory I am missing).

My understanding is that the Gray code patterns should look something like this, first for the horizontal direction:

- 0) left 50% of pixels black, right 50% white
- 0b) inverse of the previous
- 1) left 25% of pixels black, next 25% white, next 25% black, next 25% white
- 1b) inverse of the previous
- ... and so on until the stripes are a single pixel wide

then the same for the vertical direction:

- 0) top 50% of pixels black, bottom 50% white
- 0b) inverse of the previous
- 1) top 25% of pixels black, next 25% white, next 25% black, next 25% white
- 1b) inverse of the previous
- ... and so on until the stripes are a single pixel tall

and finally:

- all pixels black
- all pixels white

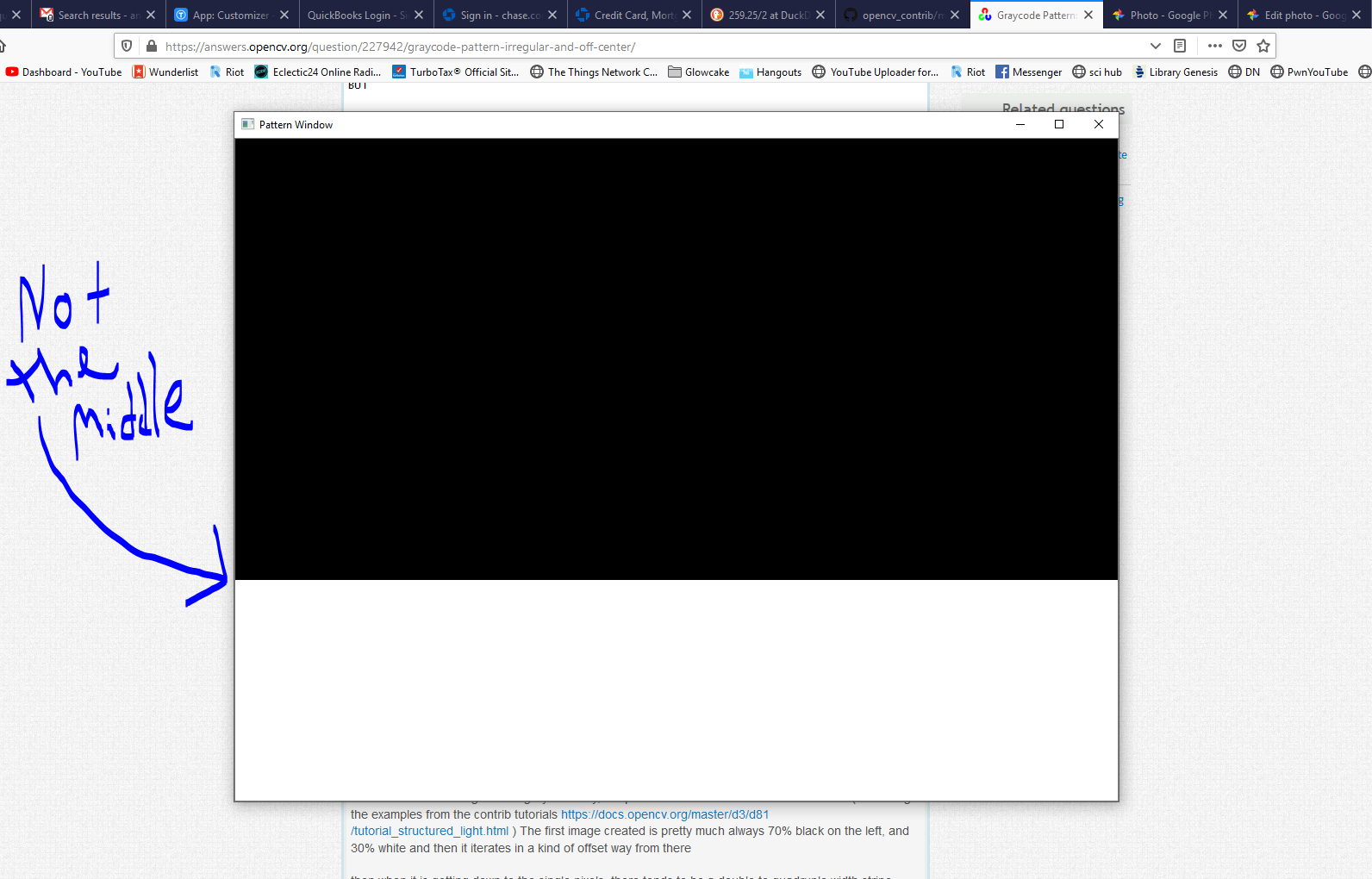

But in every version of the Gray code generation I try (I am using the examples from the contrib tutorials, https://docs.opencv.org/master/d3/d81/tutorial_structured_light.html), the pixels are never divided the way I would expect. The first image is almost always about 70% black on the left and 30% white on the right, and the following images continue in a kind of offset way from there.

Then, when it gets down to the single-pixel patterns, there tends to be a stripe of double to quadruple width among them.

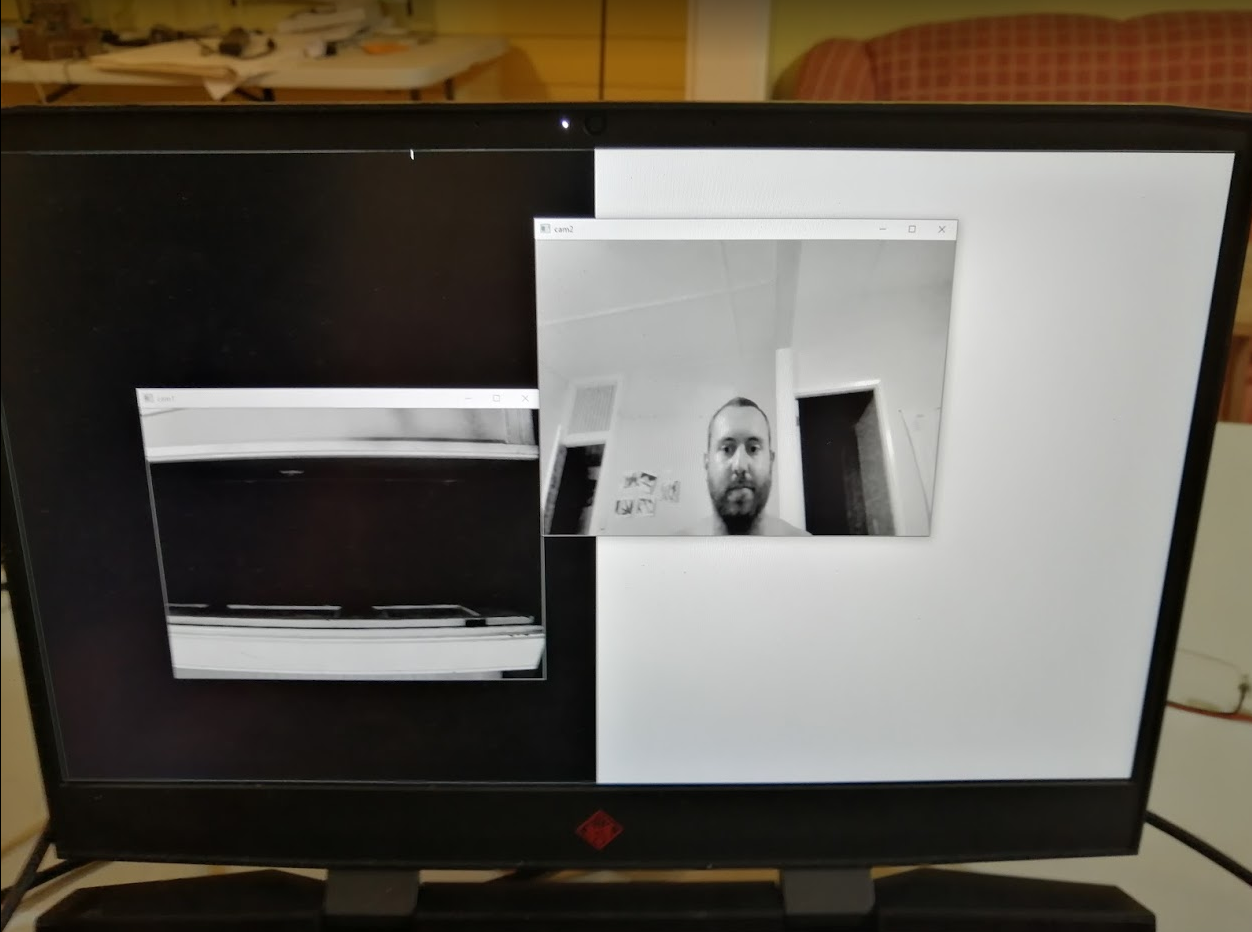

Attached are some images showing what happens.

By the way, I can run the full process and decode these pictures, but the results never come out correct: usually I just get a blank screen instead of a depth map.