I am using ORB in Python to detect features in images, in order to match a new image against a set of reference images. The new images have sub-images of interest against unknown backgrounds.

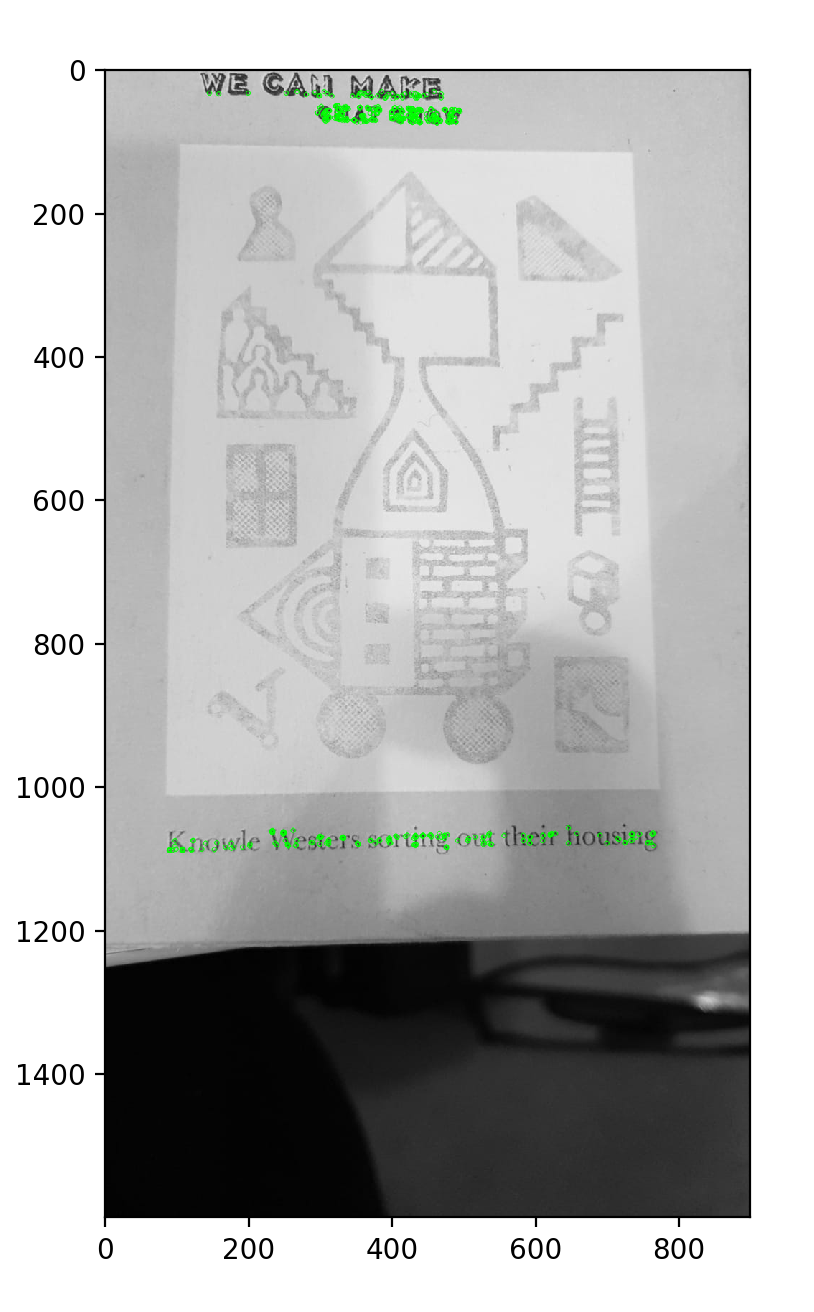

I am finding that, in some cases, many small keypoints are detected to the exclusion of the larger keypoints of interest. The image above is an example.

If I request the (default) 500 keypoints, none of them is in the region of interest. The writing in the image is actually 'background' to what I am trying to match, which is the large sub-image against a paler background. If I request far more keypoints, I start to get some in the region of interest, but both the detection and the matching then run correspondingly more slowly.

In this case, looking for larger features would help. What parameter(s) can I pass to `cv2.ORB_create()` so that it favors larger features, sized as a proportion of the overall image? (The images vary in size.) Or is this not possible?

And a bigger question (I am pretty new to computer vision): are there any heuristics people have tried for matching an object located somewhere inside a user-taken snapshot (from a mobile phone) when there are unknown objects in the background?

Thanks for your help.