I'm extracting the calibration parameters of the two cameras of a stereo vision setup via cv2.aruco.calibrateCameraCharucoExtended(). I'm currently using the cv2.undistortPoints() and cv2.triangulatePoints() functions to convert any pair of corresponding 2D points into a 3D point coordinate, which works perfectly fine.

However, I'm now looking for a way to convert the two 2D images, which can be seen under Approach 1, into one 3D image. I need this 3D image because I would like to determine the left-to-right order of the cups in both images, so that I can use the triangulatePoints function correctly. If I determine the order of the cups purely from the x-coordinates in each 2D image, I get different results for each camera (the cup on the front left corner of the table, for example, ends up in a different 'order' depending on the camera angle). I already tried some approaches, which are listed below.
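One way to pair the cups without relying on their left-to-right order is the epipolar constraint: a point seen in the left image must lie on a known line in the right image, given by the fundamental matrix F = K2^-T [T]x R K1^-1. The sketch below makes several assumptions. K1, K2, R and T (camera 1 to camera 2) are known. The cup centres are already undistorted, for example with `cv2.undistortPoints(..., P=K)`. All function names are hypothetical. It also breaks down when two cups lie on (nearly) the same epipolar line, which happens when cups stand in a row parallel to the camera baseline.

```python
import numpy as np


def skew(t):
    """Cross-product matrix [t]x, so that skew(t) @ v == np.cross(t, v)."""
    tx, ty, tz = np.asarray(t, dtype=float).ravel()
    return np.array([[0.0, -tz, ty],
                     [tz, 0.0, -tx],
                     [-ty, tx, 0.0]])


def fundamental_from_calibration(K1, K2, R, T):
    """F with p2^T F p1 = 0, assuming x2 = R @ x1 + T (camera 1 -> camera 2)."""
    E = skew(T) @ R  # essential matrix
    return np.linalg.inv(K2).T @ E @ np.linalg.inv(K1)


def match_by_epipolar_distance(pts_left, pts_right, F):
    """Greedily pair left/right detections (e.g. cup centres) by epipolar distance.

    pts_left: (N, 2), pts_right: (M, 2) undistorted pixel coordinates.
    Returns a list of (left_index, right_index) pairs.
    """
    pl = np.hstack([pts_left, np.ones((len(pts_left), 1))])
    pr = np.hstack([pts_right, np.ones((len(pts_right), 1))])
    lines = pl @ F.T                     # row i: epipolar line F @ pl[i] in image 2
    num = np.abs(lines @ pr.T)           # |line_i . pr_j|
    cost = num / np.linalg.norm(lines[:, :2], axis=1, keepdims=True)  # pixel distance

    pairs, used_l, used_r = [], set(), set()
    for flat in np.argsort(cost, axis=None):  # cheapest candidate pairs first
        i, j = np.unravel_index(flat, cost.shape)
        if i not in used_l and j not in used_r:
            pairs.append((int(i), int(j)))
            used_l.add(i)
            used_r.add(j)
    return pairs
```

Once the pairs are fixed, each pair can go straight into triangulatePoints. The cups can then be ordered by their triangulated 3D coordinates instead of by image x-coordinates.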

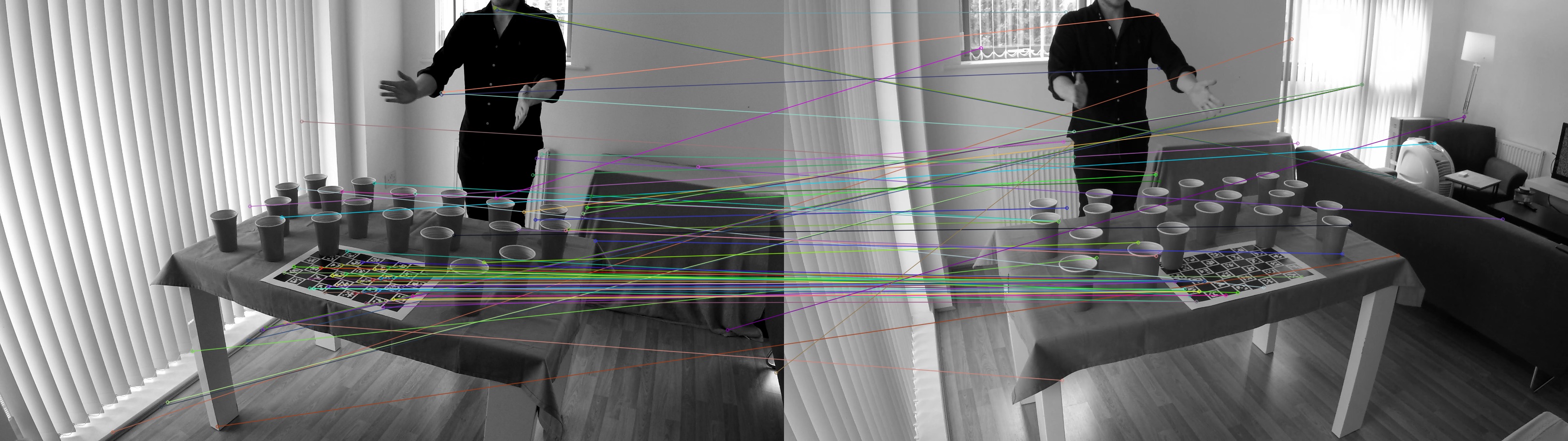

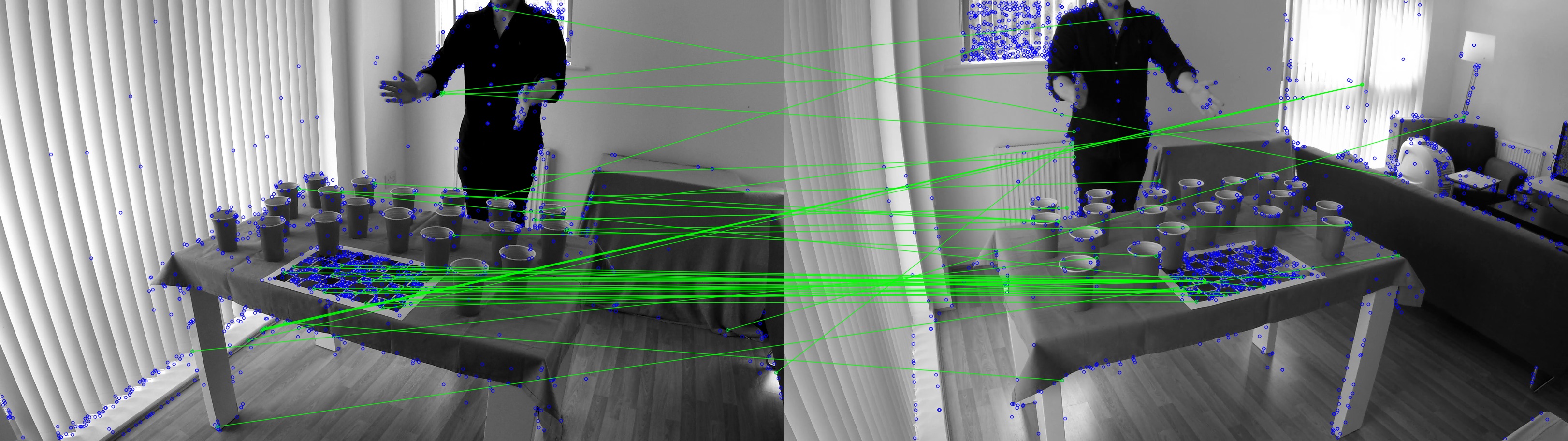

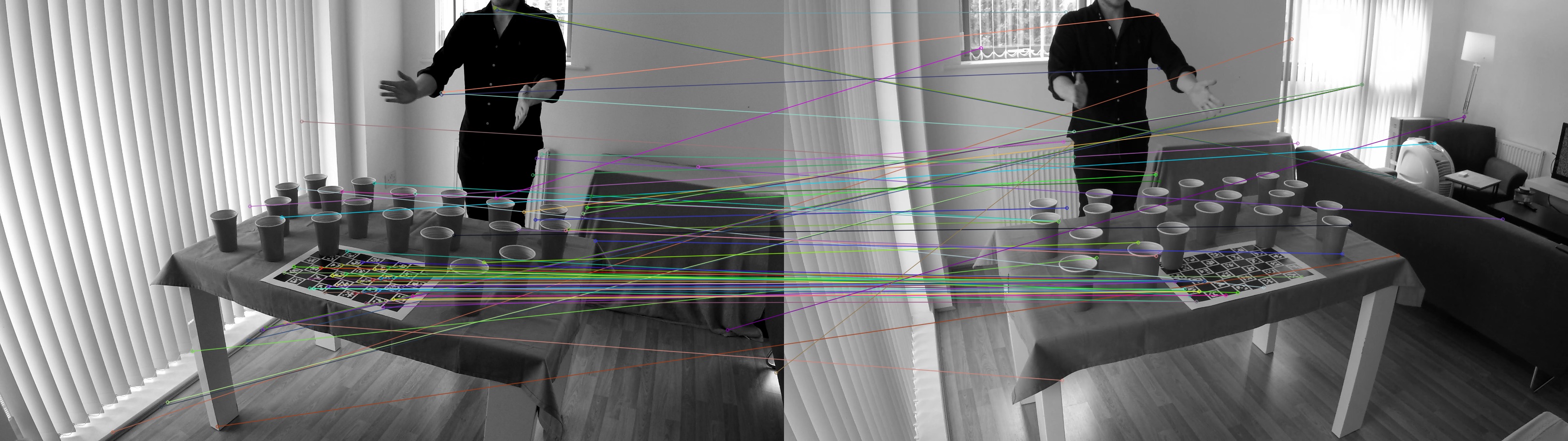

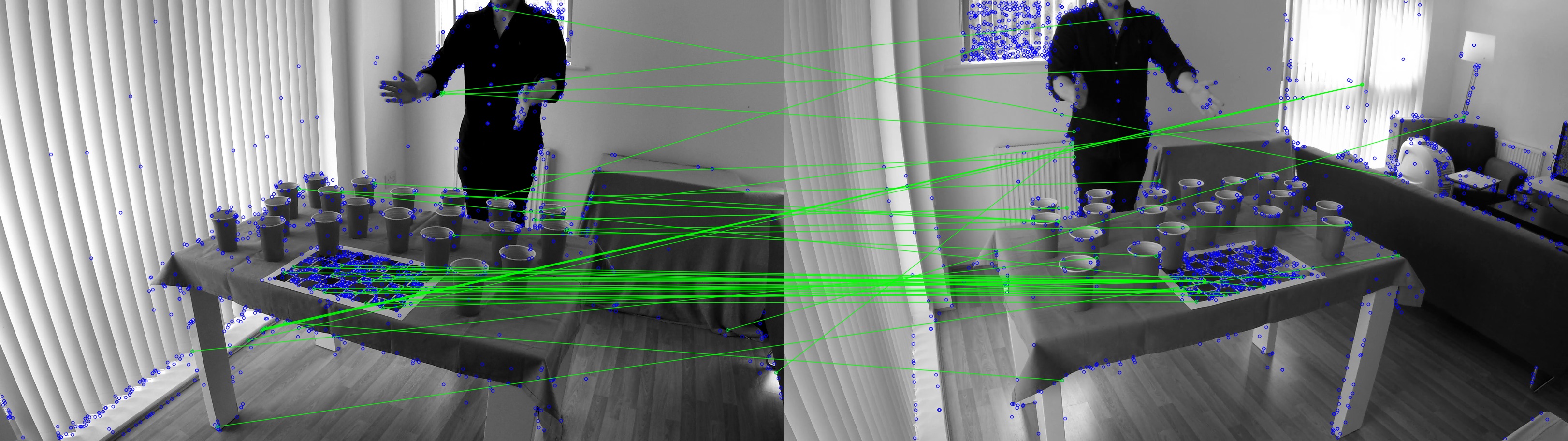

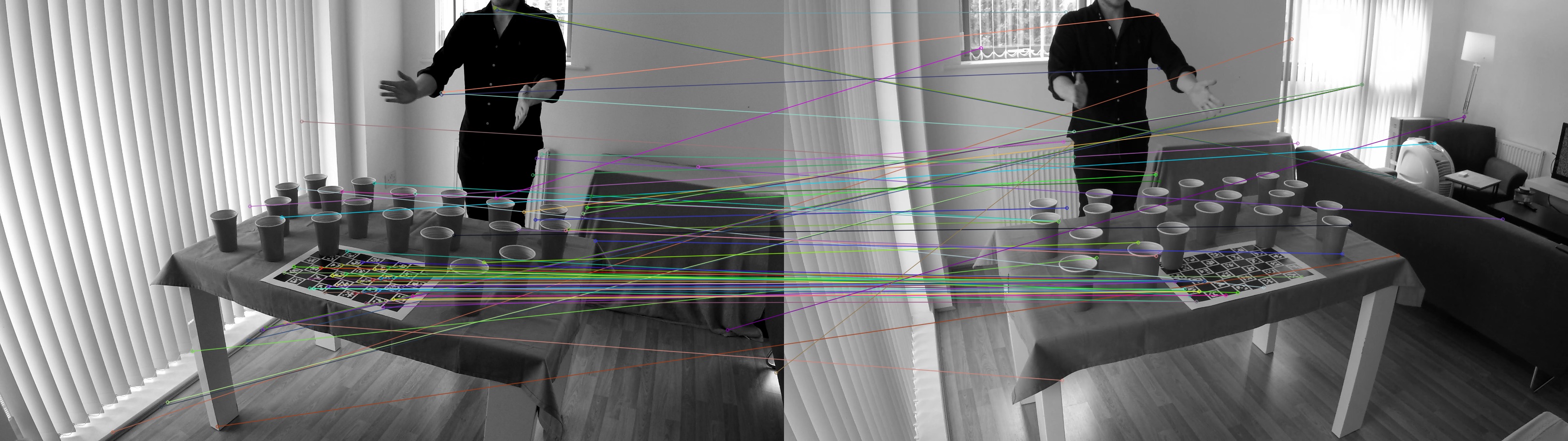

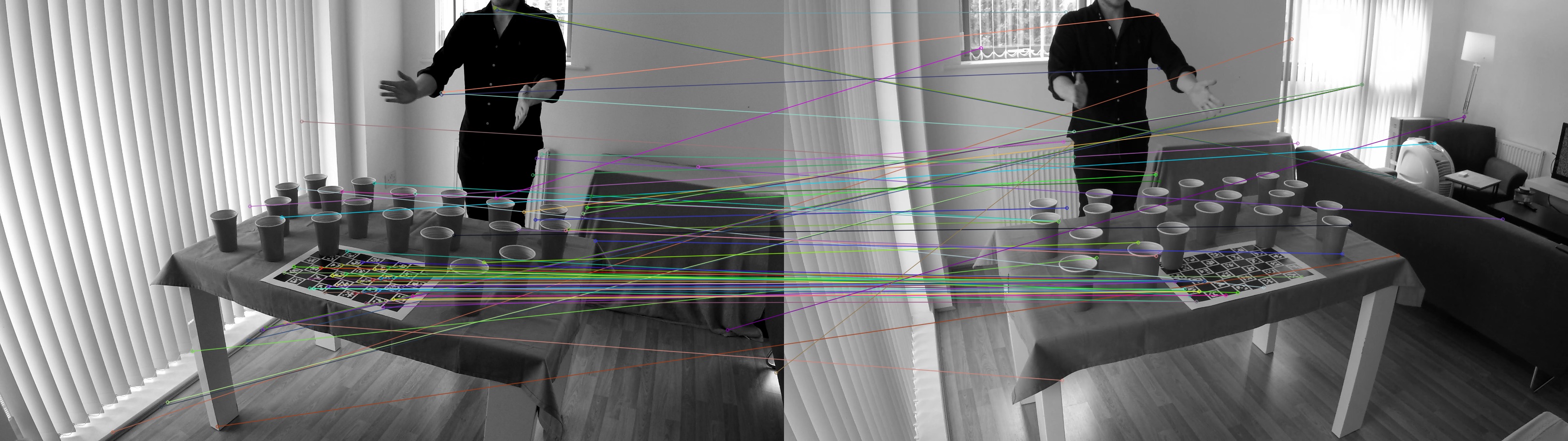

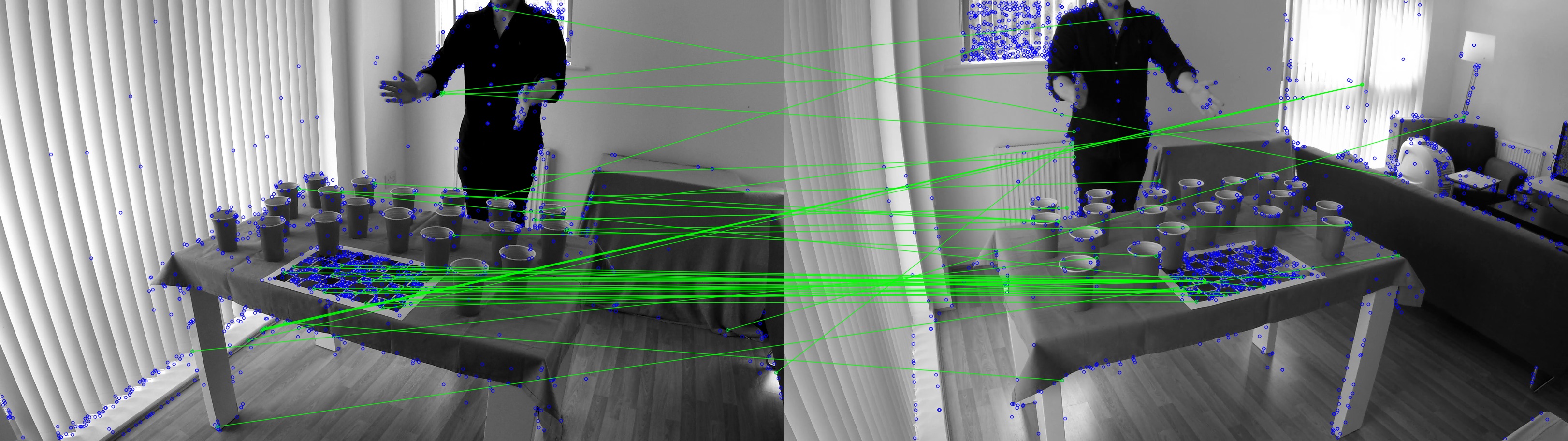

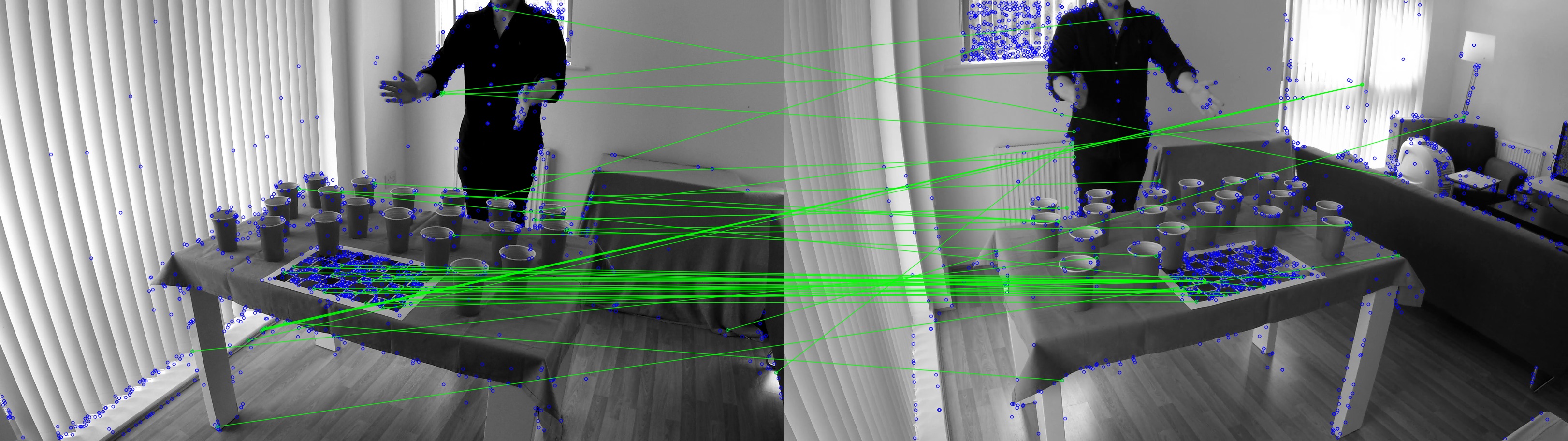

Approach 1: Keypoint Feature Matching

I was first thinking about using a keypoint feature extractor like SIFT or SURF, so I tried some keypoint extraction and matching. I tried both the Brute-Force matcher and the FLANN-based matcher, but the results are not very good:

Brute-Force

FLANN-based

I also tried swapping the images, but the results are more or less the same.

Approach 2: reprojectImageTo3D()

I looked further into the issue and I think I need the cv2.reprojectImageTo3D() function. However, to use this function, I first need the Q matrix, which is obtained with cv2.stereoRectify(). The stereoRectify function in turn expects a couple of parameters, most of which I can provide, but there are two I'm confused about:

- R: Rotation matrix between the coordinate systems of the first and the second cameras.
- T: Translation vector between the coordinate systems of the cameras.

I do have the rotation and translation vectors for each camera separately, but not the ones between the two cameras. Also, do I really need to run stereoRectify all over again when I already did a full calibration with ChArUco and already have the camera matrix, distortion coefficients, rotation vectors and translation vectors?

Some extra info that might be useful

I'm using 40 calibration images of the ChArUco board per camera. I first extract all corners and markers, after which I estimate the calibration parameters with the following code:

    (ret, camera_matrix, distortion_coefficients0,
     rotation_vectors, translation_vectors,
     stdDeviationsIntrinsics, stdDeviationsExtrinsics,
     perViewErrors) = cv2.aruco.calibrateCameraCharucoExtended(
        charucoCorners=allCorners,
        charucoIds=allIds,
        board=board,
        imageSize=imsize,
        cameraMatrix=cameraMatrixInit,
        distCoeffs=distCoeffsInit,
        flags=flags,
        # EPS and COUNT are bit flags: combine them with + (or |), not &.
        criteria=(cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_COUNT, 10000, 1e-9))

The board parameter is created with the following settings:

    CHARUCO_BOARD = aruco.CharucoBoard_create(
        squaresX=9,
        squaresY=6,
        squareLength=4.4,
        markerLength=3.5,
        dictionary=ARUCO_DICT)

Thanks a lot in advance!