Hi there!

I am using the calibrateHandEye function from OpenCV's calib3d module to compute the hand-eye calibration of a UR10 robot with a RealSense camera fixed to the end effector. After some initial problems, I think I have the function working properly. However, I am now seeing two things that do not quite fit, and I was hoping for an outside opinion.

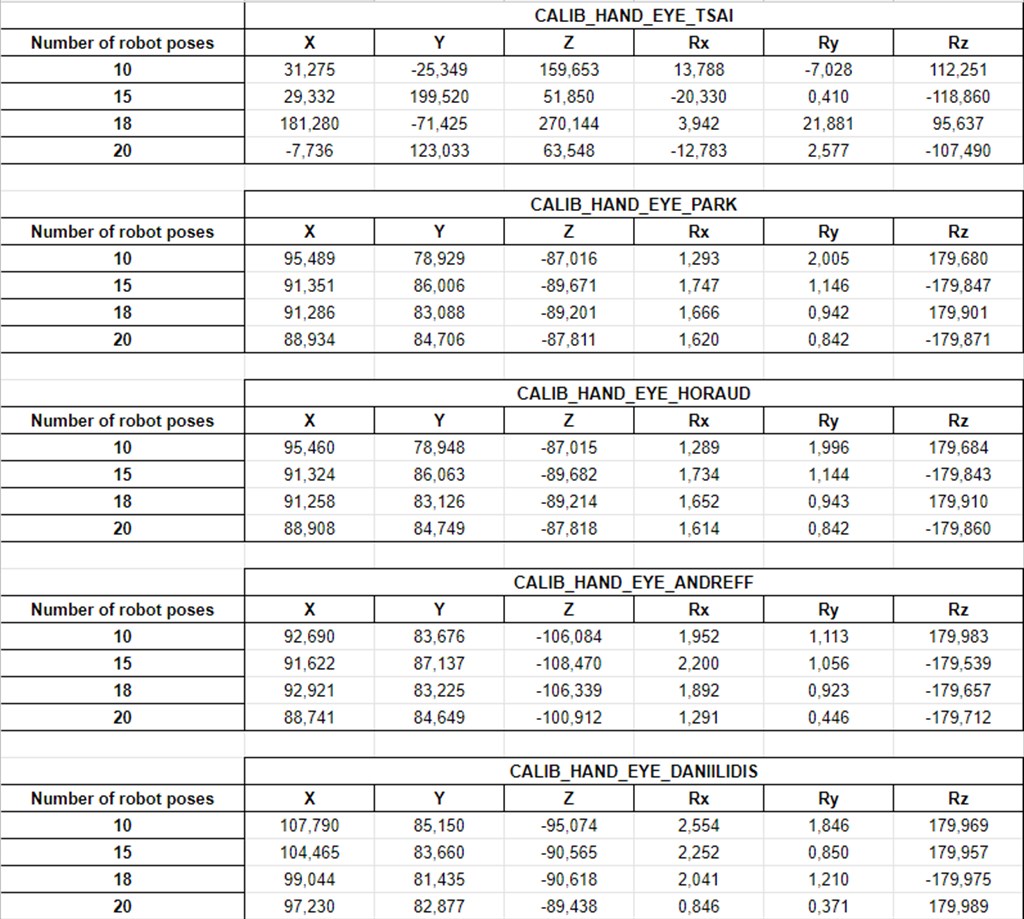

As you can see from the image above, I made four measurements for each of the five methods that the function offers. For each method I used 10, 15, 18 and 20 robot poses with their respective pictures. This is where I got a little confused:

- The first method (CALIB_HAND_EYE_TSAI) gives completely different values depending on the number of robot poses used. With the same input data, the other four methods each seem to converge to values that are reasonably close to one another. Why is that?

- Although the values of the last four methods seem to converge, there are still significant differences between the results of one method and another. Why is that?
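To put numbers on "close enough" and "important differences", here is a minimal sketch of how two hand-eye results could be compared. It assumes each result is a 3x3 rotation matrix and a 3-element translation, as returned by calibrateHandEye, converted to plain Python lists. The helper names `rotation_angle_deg` and `translation_distance` are hypothetical and not part of OpenCV.

```python
import math

def rotation_angle_deg(R_a, R_b):
    """Angle in degrees of the relative rotation R_a^T * R_b (3x3 nested lists)."""
    # trace(R_a^T * R_b) equals the element-wise sum of products of both matrices.
    trace = sum(R_a[i][j] * R_b[i][j] for i in range(3) for j in range(3))
    # Clamp against rounding noise before taking the arccosine.
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))

def translation_distance(t_a, t_b):
    """Euclidean distance between two translations, in the units of the inputs."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(t_a, t_b)))

# Illustrative values only, not my measurements:
# identity versus a 90 degree rotation about the z axis.
R_identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
R_z90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
angle = rotation_angle_deg(R_identity, R_z90)
dist = translation_distance([0.0, 0.0, 0.0], [3.0, 4.0, 0.0])
```

Applying this to every pair of methods, and to the same method at 10, 15, 18 and 20 poses, would give the disagreement as a single angle and a single distance instead of comparing matrix entries by eye.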

Thank you for the help!