Hi all,

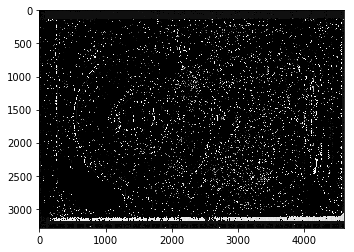

I'm going to try to imitate stereo imaging with a single fixed camera (4K resolution): I'll shift the object 3 cm left/right (the baseline), take two pictures, and then feed them into:

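    import cv2
    import numpy as np
    from matplotlib import pyplot as plt

    # Load both views as 8-bit grayscale, which StereoBM requires
    imgL = cv2.imread('left.png', cv2.IMREAD_GRAYSCALE)
    imgR = cv2.imread('right.png', cv2.IMREAD_GRAYSCALE)

    # Block matcher: numDisparities must be a multiple of 16, blockSize must be odd
    stereo = cv2.StereoBM_create(numDisparities=16, blockSize=15)

    # compute() returns fixed-point disparities scaled by 16, so convert to pixels
    disparity = stereo.compute(imgL, imgR).astype(np.float32) / 16.0

    plt.imshow(disparity, 'gray')
    plt.show()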

However, before I try this, I wanted to consult the vision experts here.

- Is OpenCV able to construct a depth map from two images taken from a single camera, with a shift of 3 cm or so?

- If this is possible, how accurate can the depth map get? Could I resolve depth differences of 3-4 mm? What kind of custom setup would I need to resolve gaps that small? How far should the camera be from the object, and how large should the baseline (the shift) be?
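
For context, here is my back-of-the-envelope attempt at the accuracy question. It assumes the usual rectified pinhole stereo relation Z = f·B/d, so that one disparity step corresponds to a depth change of roughly Z²/(f·B). The focal length of 3000 px is only a placeholder guess for a 4K camera, not a measured value, the working distances are made up, and the function names are my own. Please correct me if the model itself is wrong for a "move the object" setup.

```python
def disparity_px(depth_mm, baseline_mm, focal_px):
    """Expected disparity in pixels for a point at the given depth (d = f*B/Z)."""
    return focal_px * baseline_mm / depth_mm


def depth_resolution_mm(depth_mm, baseline_mm, focal_px, disparity_step_px=1.0):
    """Approximate depth change that shifts the disparity by one step.

    Follows from differentiating Z = f*B/d: |dZ| ~= Z**2 / (f*B) * |dd|.
    """
    return depth_mm ** 2 * disparity_step_px / (focal_px * baseline_mm)


if __name__ == "__main__":
    FOCAL_PX = 3000.0    # ASSUMPTION: placeholder focal length in pixels
    BASELINE_MM = 30.0   # the 3 cm shift from the post

    for depth_mm in (200.0, 300.0, 500.0):  # ASSUMPTION: example distances
        d = disparity_px(depth_mm, BASELINE_MM, FOCAL_PX)
        step_full = depth_resolution_mm(depth_mm, BASELINE_MM, FOCAL_PX)
        # StereoBM reports disparity in 1/16 px steps; real matching noise
        # will be worse than this ideal quantisation limit.
        step_sub = depth_resolution_mm(depth_mm, BASELINE_MM, FOCAL_PX, 1.0 / 16.0)
        print(f"Z={depth_mm:.0f} mm  disparity={d:.0f} px  "
              f"step(1 px)={step_full:.2f} mm  step(1/16 px)={step_sub:.3f} mm")
```

If these numbers are in the right ballpark, an object 300 mm away would shift by about 300 px between the two shots, so `numDisparities=16` would be far too small a search range for this geometry, and I would need a much larger value (still a multiple of 16).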

Thanks in advance.