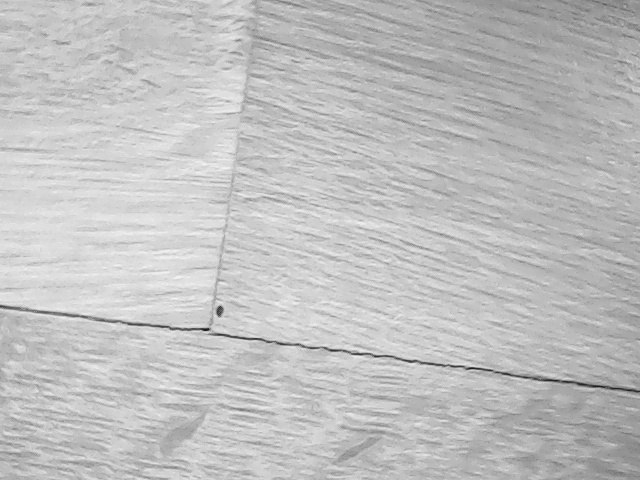

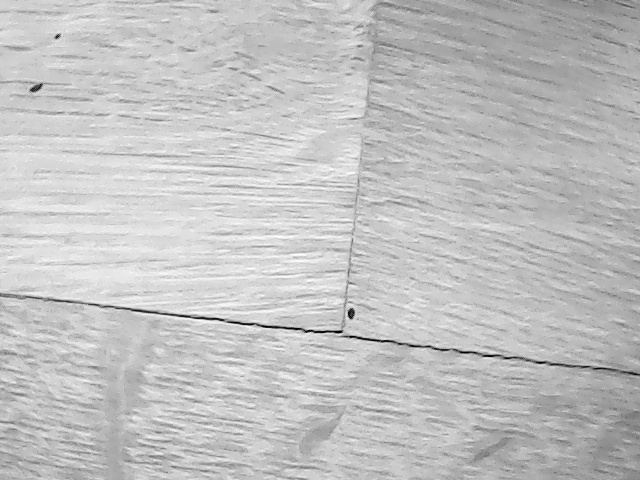

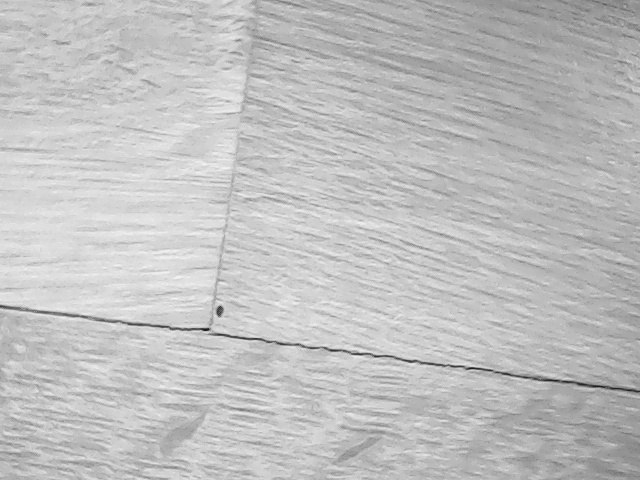

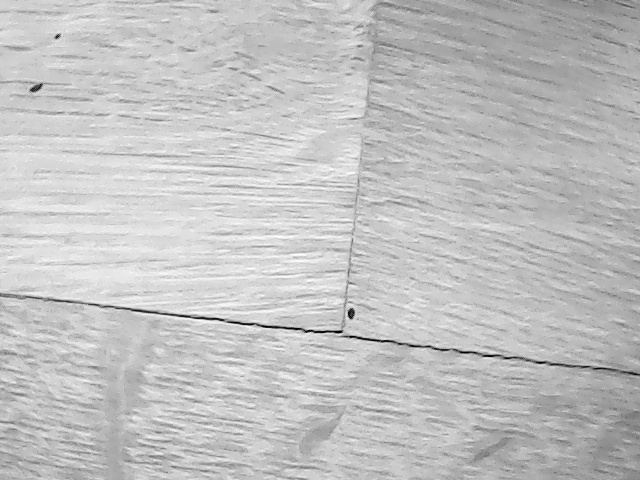

result of recoverPose for 2D shifted image

I am trying to get odometry values using a ground-facing cam (13 cm vertical distance) on a wooden floor. The cart has mecanum wheels and can move in 8 directions, and it can additionally rotate left and right. The wheels also inherently slide, slightly pushing against each other when moving, so measuring wheel rotation won't help much. I can adjust the speed to keep the image delta small.

I use feature_detector.detect on 2 consecutive images, then do a calcOpticalFlowPyrLK, findEssentialMat and recoverPose.

I thought I could use the result of recoverPose (R, t) to find the x/y distance travelled.

However, I end up with values that do not fit. The only thing I was able to verify is the Z-rotation (roll) between 2 images, using a rotationMatrixToEulerAngles function in combination with getRotationMatrix2D and my angles[2] value.

Example of images 1 and 2: image 2 is taken with the cam moved left. Distance to floor is 13 cm, the cam is 640x480 pix and covers about 11x7 cm of the floor, and the width of a wood bar is 7.5 cm. I expected a 2 cm x-movement to be detected, or equivalently a yaw between 8 and 9 degrees?
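The 8 to 9 degree figure presumably comes from treating the 2 cm sideways shift as an equivalent rotation seen from 13 cm above the floor. A minimal check of that arithmetic, assuming exactly these two distances (the variable names are only illustrative):

```python
import math

height_cm = 13.0  # camera distance to the floor, from the description
shift_cm = 2.0    # expected sideways movement between the two images

# Angle under which a 2 cm shift on the floor is seen from 13 cm above it
equivalent_angle_deg = math.degrees(math.atan2(shift_cm, height_cm))
print(f"{equivalent_angle_deg:.2f} degrees")
```

Under that assumption the angle lands between 8 and 9 degrees, which is the range quoted above.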

resulting t vector:

    [[ 0.8696361 ]
     [ 0.09754516]
     [-0.48396074]]

resulting R matrix:

    [[ 9.94251600e-01 -1.65265050e-02 -1.05785777e-01]
     [ 1.63426435e-02  9.99863057e-01 -2.60472019e-03]
     [ 1.05814337e-01  8.60927979e-04  9.94385531e-01]]

my calculated rotation angles in degrees:

    [-6.07408602 0.04960604 0.94169341]
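As a sanity check on the posted numbers, the sketch below recomputes them from the values above using only the standard library. The rotationMatrixToEulerAngles function itself is not shown in this post, so the atan2-based decomposition and the x/y/z output order used here are an assumption. It also computes the length of t, since recoverPose returns the translation only up to scale.

```python
import math

# Values copied from the output above
t = [0.8696361, 0.09754516, -0.48396074]
R = [
    [9.94251600e-01, -1.65265050e-02, -1.05785777e-01],
    [1.63426435e-02, 9.99863057e-01, -2.60472019e-03],
    [1.05814337e-01, 8.60927979e-04, 9.94385531e-01],
]

# Length of the translation vector returned by recoverPose
t_norm = math.sqrt(sum(c * c for c in t))

# atan2-based Euler angles (assumed convention, non-degenerate case only)
sy = math.hypot(R[0][0], R[1][0])
angle_x = math.degrees(math.atan2(R[2][1], R[2][2]))
angle_y = math.degrees(math.atan2(-R[2][0], sy))
angle_z = math.degrees(math.atan2(R[1][0], R[0][0]))

print(f"|t| = {t_norm:.4f}")
print(f"x = {angle_x:.4f}, y = {angle_y:.4f}, z = {angle_z:.4f} degrees")
```

Under these assumptions the three angles match the values listed above (about 0.05, -6.07 and 0.94 degrees), with the first two in swapped order. The length of t comes out as 1 to within rounding, so t carries a direction but not a distance in cm.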

my essential code:

    import cv2
    import numpy as np

    def evalDistance(img1, img2):
        # camera_matrix (3x3 intrinsics) is defined elsewhere, not shown here
        feature_detector = cv2.FastFeatureDetector_create(threshold=25, nonmaxSuppression=True)
        lk_params = dict(winSize=(21, 21),
                         criteria=(cv2.TERM_CRITERIA_EPS |
                                   cv2.TERM_CRITERIA_COUNT, 30, 0.03))

        keypoint1 = feature_detector.detect(img1, None)
        keypoint2 = feature_detector.detect(img2, None)  # not used below

        # FAST keypoints of img1 as an (N, 1, 2) float32 array, tracked into img2
        p1 = np.array(list(map(lambda x: [x.pt], keypoint1)), dtype=np.float32)
        # note: st (tracking status) is not used to filter p1/p2 here
        p2, st, err = cv2.calcOpticalFlowPyrLK(img1, img2, p1, None, **lk_params)

        E, mask = cv2.findEssentialMat(p2, p1, camera_matrix, cv2.RANSAC, 0.999, 1.0, None)
        points, R, t, mask = cv2.recoverPose(E, p2, p1, camera_matrix)