For the last few weeks I have been working on detecting and tracking motion in a video. My goal is simple: detect a dog as soon as it moves and track it with a bounding rectangle. I only want to track one motion (the dog's) and ignore any other motion. After many unsuccessful attempts with object- and motion-tracking algorithms and code using OpenCV, I came across something that I was able to modify to get closer to my goal. The only issue is that the code seems to keep the information from the first frame for the whole video, which causes it to detect and draw a rectangle around an empty area while ignoring the actual motion.

Here's the code I'm using:

    import imutils
    import time
    import cv2

    previousFrame = None

    def searchForMovement(cnts, frame, min_area):
        text = "Undetected"
        flag = 0

        for c in cnts:
            # if the contour is too small, ignore it
            if cv2.contourArea(c) < min_area:
                continue

            #Use the flag to prevent the detection of other motions in the video
            if flag == 0:
                (x, y, w, h) = cv2.boundingRect(c)
                #print("x y w h")
                #print(x,y,w,h)
                cv2.rectangle(frame, (x, y), (x+w, y+h), (0, 255, 0), 2)
                text = "Detected"
                flag = 1

        return frame, text

    def trackMotion(ret,frame, gaussian_kernel, sensitivity_value, min_area):
        if ret:
            # Convert to grayscale and blur it for better frame difference
            gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            gray = cv2.GaussianBlur(gray, (gaussian_kernel, gaussian_kernel), 0)

            global previousFrame

            if previousFrame is None:
                previousFrame = gray
                return frame, "Uninitialized", frame, frame

            frameDiff = cv2.absdiff(previousFrame, gray)
            thresh = cv2.threshold(frameDiff, sensitivity_value, 255, cv2.THRESH_BINARY)[1]
            thresh = cv2.dilate(thresh, None, iterations=2)
            _, cnts, _ = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

            frame, text = searchForMovement(cnts, frame, min_area)
            #previousFrame = gray

            return frame, text, thresh, frameDiff

    if __name__ == '__main__':
        video = "Track.avi"
        video0 = "Track.mp4"
        video1= "Ntest1.avi"
        video2= "Ntest2.avi"

        camera = cv2.VideoCapture(video1)
        time.sleep(0.25)
        min_area = 5000 #int(sys.argv[1])

        cv2.namedWindow("Security Camera Feed")

        while camera.isOpened():
            gaussian_kernel = 27
            sensitivity_value = 5
            min_area = 2500

            ret, frame = camera.read()

            #Check if the next camera read is not null
            if ret:
                frame, text, thresh, frameDiff = trackMotion(ret,frame, gaussian_kernel, sensitivity_value, min_area)
            else:
                print("Video Finished")
                break

            cv2.namedWindow('Thresh',cv2.WINDOW_NORMAL)
            cv2.namedWindow('Frame Difference',cv2.WINDOW_NORMAL)
            cv2.namedWindow('Security Camera Feed',cv2.WINDOW_NORMAL)

            cv2.resizeWindow('Thresh', 800,600)
            cv2.resizeWindow('Frame Difference', 800,600)
            cv2.resizeWindow('Security Camera Feed', 800,600)

            # uncomment to see the tresh and framedifference displays
            cv2.imshow("Thresh", thresh)
            cv2.imshow("Frame Difference", frameDiff)

            cv2.putText(frame, text, (10, 20), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 255), 2)
            cv2.imshow("Security Camera Feed", frame)

            key = cv2.waitKey(3) & 0xFF
            if key == 27 or key == ord('q'):
                print("Bye")
                break

        camera.release()
        cv2.destroyAllWindows()

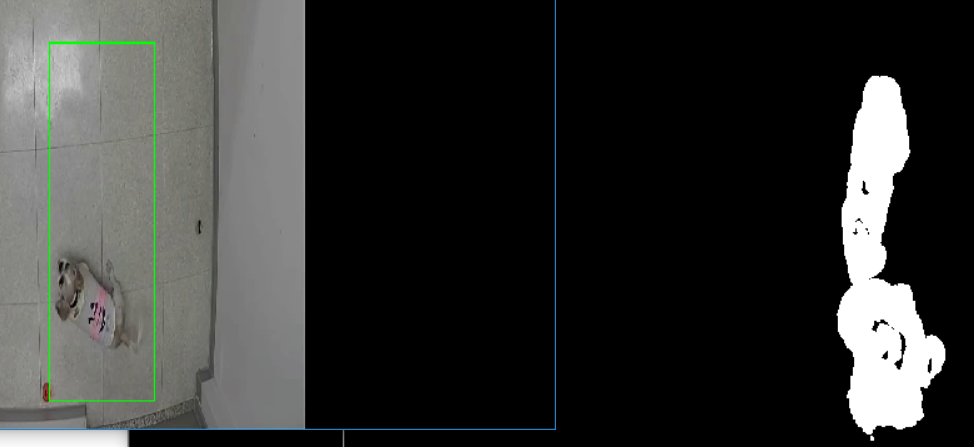

The following pictures show the problems I run into. Both Threshold and Frame difference keep displaying a result in the area where the dog was in the first frame, even after the dog is completely out of it. When I limit the tracking to only 1 motion, it starts ignoring the actual motion and keeps detecting that same area from the first frame.

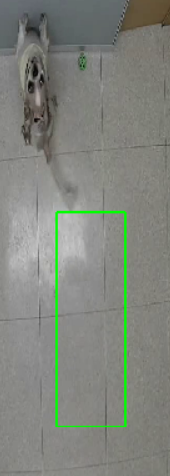

Second picture:
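To make the symptom concrete, here is a minimal synthetic sketch of what I suspect is happening. It assumes the cause is that the reference frame is never refreshed (the `previousFrame = gray` update in `trackMotion` is commented out). It uses plain Python lists as 1-D "frames" instead of OpenCV images, and `make_frame` and `changed_pixels` are made-up helper names for illustration only, not part of my code or of OpenCV.

```python
# Synthetic 1-D "frames": 0 is background, 200 is the dog. Pure Python, no OpenCV.
WIDTH = 12

def make_frame(dog_start, dog_len=3):
    """Return a 1-D frame with the dog occupying [dog_start, dog_start + dog_len)."""
    return [200 if dog_start <= i < dog_start + dog_len else 0 for i in range(WIDTH)]

def changed_pixels(reference, current, threshold=5):
    """Indices where |reference - current| exceeds the threshold
    (a stand-in for absdiff followed by a binary threshold)."""
    return [i for i, (r, c) in enumerate(zip(reference, current)) if abs(r - c) > threshold]

# The dog starts at the left and moves right in each frame.
frames = [make_frame(0), make_frame(4), make_frame(8)]

# Case 1: the reference stays at the first frame for the whole video.
fixed_reference = frames[0]
fixed_result = [changed_pixels(fixed_reference, f) for f in frames[1:]]

# Case 2: the reference is replaced by the previous frame after every comparison.
rolling_result = [changed_pixels(prev, cur) for prev, cur in zip(frames, frames[1:])]

print("fixed reference  :", fixed_result)
print("rolling reference:", rolling_result)
```

With the fixed reference, pixels 0 to 2 (where the dog was in the first frame) show up as "changed" in every later frame, which matches the stale region in my Thresh and Frame Difference windows. With the rolling reference, the old position only appears for one step and then moves along with the dog. Since `searchForMovement` draws a rectangle around the first contour that is large enough, that stale region may be the one that gets picked.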

I hope someone can help me solve this issue, or maybe suggest a better approach.

Thanks a lot!