I have 3 images generated from a CAD file. The images show various components from different angles. I have the following Python code that finds similar points between two images:

```python
import numpy as np
import cv2
from matplotlib import pyplot as plt

MIN_MATCH_COUNT = 4

img1 = cv2.imread('mutter_g_seite.png', 0)  # queryImage
img2 = cv2.imread('Bauteile/6.jpg', 0)  # trainImage

img1 = cv2.resize(img1, (800, 600))
img2 = cv2.resize(img2, (800, 600))

# Edge detection
img1 = cv2.Canny(img1, 50, 50)
img2 = cv2.Canny(img2, 50, 50)

# Laplacian
#img1 = cv2.Laplacian(img1, cv2.CV_64F)
#img2 = cv2.Laplacian(img2, cv2.CV_64F)

# Initiate SIFT detector (OpenCV 3.x contrib API; OpenCV >= 4.4 also offers cv2.SIFT_create())
sift = cv2.xfeatures2d.SIFT_create()

# find the keypoints and descriptors with SIFT
kp1, des1 = sift.detectAndCompute(img1, None)
kp2, des2 = sift.detectAndCompute(img2, None)

# FLANN parameters (in FLANN, 1 selects the KD-tree index; 0 is linear search)
FLANN_INDEX_KDTREE = 1
index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
search_params = dict(checks=100)  # or pass empty dictionary

flann = cv2.FlannBasedMatcher(index_params, search_params)
matches = flann.knnMatch(des1, des2, k=2)

# store all the good matches as per Lowe's ratio test.
good = []
for m, n in matches:
    if m.distance < 0.7 * n.distance:
        print(m.distance, 0.7 * n.distance)
        good.append(m)

if len(good) > MIN_MATCH_COUNT:
    src_pts = np.float32([kp1[m.queryIdx].pt for m in good]).reshape(-1, 1, 2)
    dst_pts = np.float32([kp2[m.trainIdx].pt for m in good]).reshape(-1, 1, 2)

    M, mask = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC, 5.0)
    matchesMask = mask.ravel().tolist()
    print(matchesMask)

    # Project the query image's corners into the train image and outline them
    h, w = img1.shape
    pts = np.float32([[0, 0], [0, h - 1], [w - 1, h - 1], [w - 1, 0]]).reshape(-1, 1, 2)
    dst = cv2.perspectiveTransform(pts, M)
    img2 = cv2.polylines(img2, [np.int32(dst)], True, 255, 3, cv2.LINE_AA)
else:
    print("Not enough matches are found - %d/%d" % (len(good), MIN_MATCH_COUNT))
    matchesMask = None

draw_params = dict(matchColor=(0, 255, 0),
                   singlePointColor=(255, 0, 0),
                   matchesMask=matchesMask,
                   flags=0)

# matchesMask has one entry per element of `good`, so draw `good` with drawMatches
# (drawMatchesKnn over all `matches` would need a nested per-neighbour mask)
img3 = cv2.drawMatches(img1, kp1, img2, kp2, good, None, **draw_params)

plt.imshow(img3)
plt.show()
```

Queryimage 1

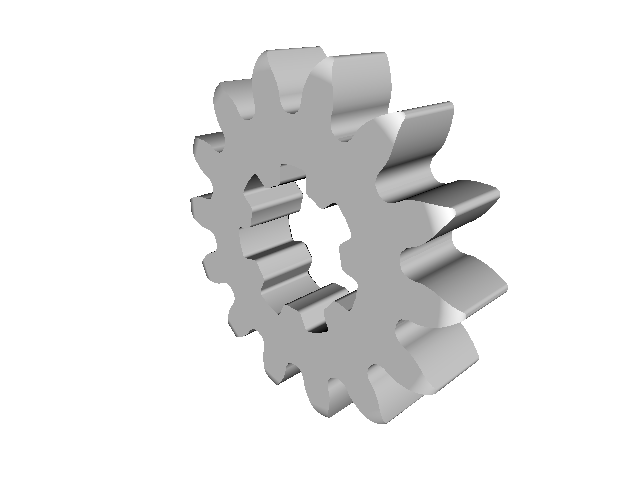

Queryimage 2

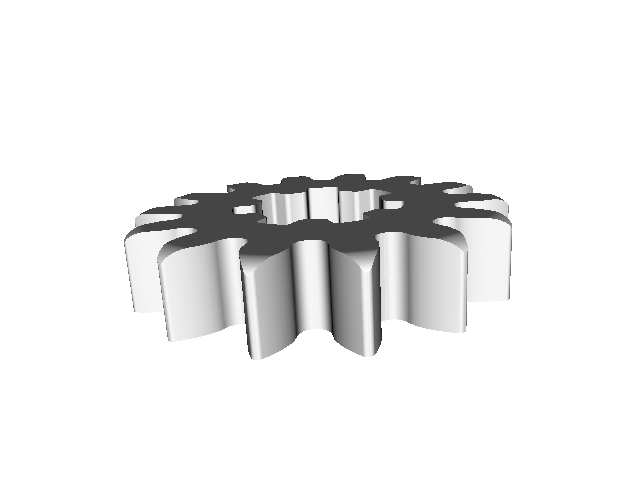

Queryimage 3

Train Image

As you can see, I have 3 query images, and I want to detect whether my train image contains the object. I use a minimum number of matched points (`MIN_MATCH_COUNT`) to decide whether the object is in the image. I have read that Lowe's ratio test and computing the homography help to get better results. That's what I have been trying to do, but I have run into the following issues:
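Since the goal is to decide which (if any) of the three query images appears in the train image, one option is to run the same ratio test against every query and compare the counts. A minimal sketch, assuming the SIFT descriptors are already available as NumPy arrays (for example `des1` and `des2` from `sift.detectAndCompute`). It swaps FLANN for a brute-force L2 2-nearest-neighbour search to stay dependency-light, and `ratio_test_count` and `detect_objects` are hypothetical helpers, not OpenCV API:

```python
import numpy as np


def ratio_test_count(query_desc, train_desc, ratio=0.7):
    """Count query descriptors whose best match passes Lowe's ratio test.

    Uses a brute-force L2 2-nearest-neighbour search instead of FLANN.
    """
    if query_desc is None or train_desc is None:
        return 0  # detectAndCompute returns None when it finds no keypoints
    query_desc = np.asarray(query_desc, dtype=np.float32)
    train_desc = np.asarray(train_desc, dtype=np.float32)
    if len(query_desc) == 0 or len(train_desc) < 2:
        return 0  # the ratio test needs a best and a second-best neighbour
    # Pairwise Euclidean distances, shape (n_query, n_train).
    dists = np.linalg.norm(query_desc[:, None, :] - train_desc[None, :, :], axis=2)
    # Per query descriptor: distance to the best (m) and second-best (n) match.
    nearest_two = np.sort(dists, axis=1)[:, :2]
    good = nearest_two[:, 0] < ratio * nearest_two[:, 1]
    return int(np.count_nonzero(good))


def detect_objects(query_descs, train_desc, ratio=0.7, min_match_count=4):
    """Score every query image against one train image.

    query_descs maps a query name to its descriptor array. Returns the
    good-match count per query and the sorted names whose count reaches
    min_match_count.
    """
    counts = {name: ratio_test_count(desc, train_desc, ratio)
              for name, desc in query_descs.items()}
    found = sorted(name for name, count in counts.items() if count >= min_match_count)
    return counts, found
```

In the script above, the dictionary could be filled by calling `sift.detectAndCompute` on each query image (e.g. `{'query1': des_a, 'query2': des_b, 'query3': des_c}`, hypothetical names) with `des2` as `train_desc`. The brute-force distance matrix grows with n_query × n_train × 128, so for large descriptor sets the FLANN matcher from the original code is the better fit; the per-query counts can then feed the same homography check.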

- I can't get good results with my current approach.

- I'm comparing only 1 query image. Is it possible to compare all 3 at once and get their results?

- If I reduce `MIN_MATCH_COUNT` to 2 (in my examples I get 2 good matches with query image 2) and try to compute the homography, I get the following error:

```
119.74974060058594 127.00901641845702
135.53598022460938 168.57069396972656
[0, 0]
OpenCV Error: Assertion failed (scn + 1 == m.cols) in perspectiveTransform, file /tmp/opencv-20170825-90583-1pdhamg/opencv-3.3.0/modules/core/src/matmul.cpp, line 2299
Traceback (most recent call last):
  File "flann-feature-matching.py", line 53, in <module>
    dst = cv2.perspectiveTransform(pts,M)
cv2.error: /tmp/opencv-20170825-90583-1pdhamg/opencv-3.3.0/modules/core/src/matmul.cpp:2299: error: (-215) scn + 1 == m.cols in function perspectiveTransform
```
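On the error itself: a homography is a 3×3 matrix defined only up to scale, so it has eight unknowns, and each point correspondence contributes two linear equations. Four non-degenerate correspondences are therefore the minimum, and `cv2.findHomography` likewise needs at least four point pairs. With only two good matches no valid 3×3 `M` can be estimated, which is consistent with the `[0, 0]` mask in the output and with `cv2.perspectiveTransform` failing its `scn + 1 == m.cols` check. A minimal sketch of that constraint using a plain direct linear transform (DLT) in NumPy, assuming exact correspondences (no RANSAC, no coordinate normalization); `estimate_homography_dlt` is a hypothetical helper, not part of OpenCV:

```python
import numpy as np


def estimate_homography_dlt(src_pts, dst_pts):
    """Estimate H with dst ~ H @ src using the basic DLT (no RANSAC, no normalization).

    Returns None when fewer than 4 correspondences are given: 8 unknowns
    (9 entries up to scale) need at least 8 equations, i.e. 4 point pairs.
    Accepts (N, 2) or OpenCV-style (N, 1, 2) arrays.
    """
    src = np.asarray(src_pts, dtype=np.float64).reshape(-1, 2)
    dst = np.asarray(dst_pts, dtype=np.float64).reshape(-1, 2)
    if len(src) < 4 or len(src) != len(dst):
        return None
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Two equations per pair from u = (h1 . p) / (h3 . p) and v = (h2 . p) / (h3 . p).
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    A = np.array(rows)
    # Least-squares null vector: right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    # Fix the free scale (and sign) so that H[2, 2] == 1; assumes H[2, 2] is not ~0.
    return H / H[2, 2]
```

Applied to the script above, this corresponds to keeping `MIN_MATCH_COUNT` at 4 or more and checking that `M` is a valid 3×3 matrix before calling `cv2.perspectiveTransform`. For real matches, `cv2.findHomography(..., cv2.RANSAC, 5.0)` remains the better choice because it rejects outlier correspondences.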

What am I doing wrong?