I have two cameras that I calibrated using OpenCV’s stereo calibration functions.

The calibration was done on 19 pairs of left and right camera images. The chessboard grid is 9×6, and I set the square size to 2.4 (in centimetres):

Calibration of left camera gave an error of 0.229

Calibration of right camera gave an error of 0.256

Stereo Calibration gave an error of 0.313

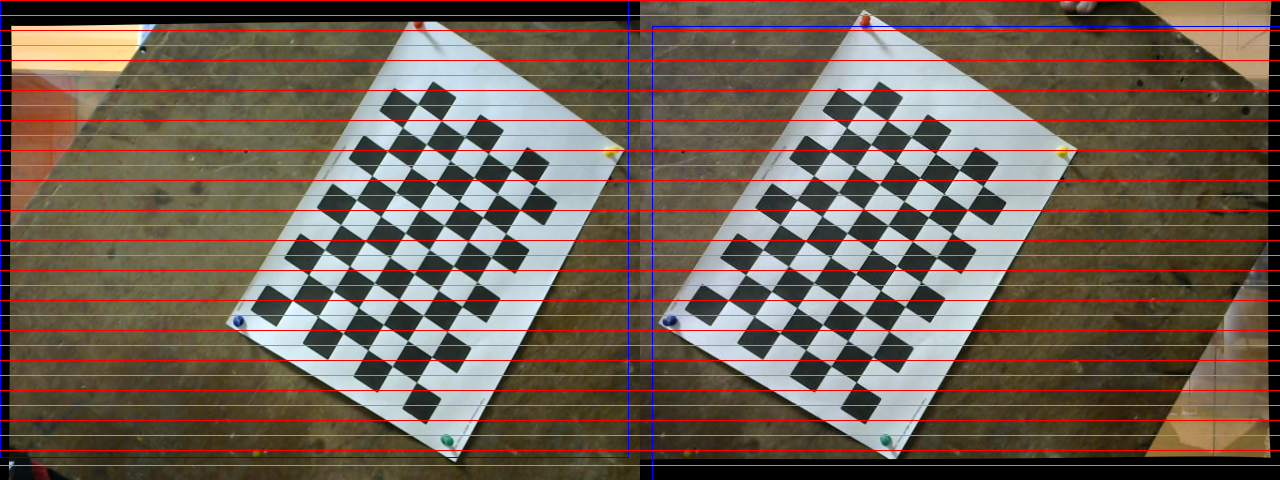

The rectified images look like this and seem to be correct.

I then took a few photos of myself standing at known distances to check whether it works properly. Here is one pair of images that I took about 600 cm away from the cameras:

The images on top row are original images and the images on the second row are rectified:

On running stereoSGBM I get the following result:

I calculated the distance of the white dot on the disparity image, and the result was 272. Since I calibrated the cameras with a chessboard square size of 2.4 cm, the result is in centimetres (i.e. 272 cm). This is a huge error (600 - 272 = 328 cm). Any help solving this issue would be appreciated. Here are the relevant parts of my code; if they don't provide enough information, leave a comment and I'll add more snippets.
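For reference, in a rectified pair depth and disparity are related by Z = f * B / d, where f is the focal length in pixels, B the baseline in calibration units and d the disparity in pixels. The sketch below shows what disparity a 600 cm versus a 272 cm reading would correspond to, and how much the depth moves per pixel of disparity error. The helper names and the values of `FOCAL_PX` and `BASELINE_CM` are hypothetical, chosen only for illustration; they are not taken from this calibration.

```python
def depth_from_disparity(focal_px, baseline_cm, disparity_px):
    """Depth in cm for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_cm / disparity_px


def disparity_from_depth(focal_px, baseline_cm, depth_cm):
    """Disparity in pixels expected for a point at the given depth."""
    return focal_px * baseline_cm / depth_cm


def depth_error_per_pixel(focal_px, baseline_cm, depth_cm):
    """First-order depth change caused by a 1 px disparity error: Z^2 / (f * B)."""
    return depth_cm ** 2 / (focal_px * baseline_cm)


# Hypothetical rig parameters, for illustration only.
FOCAL_PX = 800.0
BASELINE_CM = 12.0

d_at_600 = disparity_from_depth(FOCAL_PX, BASELINE_CM, 600.0)
d_at_272 = disparity_from_depth(FOCAL_PX, BASELINE_CM, 272.0)
err_at_600 = depth_error_per_pixel(FOCAL_PX, BASELINE_CM, 600.0)

print("disparity at 600 cm:", d_at_600)
print("disparity at 272 cm:", d_at_272)
print("depth error per pixel at 600 cm:", err_at_600)
```

With a short baseline, the disparity at 600 cm is small, so a mismatch of a few pixels already shifts the computed depth by a large amount. Plugging in the real focal length (from P1) and baseline would show whether 272 cm is within that range or points to a calibration problem.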

SINGLE CAMERA CALIBRATION CODE:

    import os
    import pickle

    import cv2
    import numpy as np

    def calibration(PATH_TO_FOLDER, PATTERN_SIZE, visualize, output_file):
        '''
        1. Detect chessboard corners.
        2. Camera calibration.
        Calibrated data stored at @output_file
        '''
        ### STEP 1 : DETECTING CHESSBOARD CORNERS
        x_pattern, y_pattern = PATTERN_SIZE
        criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)
        # Prepare object points, like (0,0,0), (1,0,0), (2,0,0), ..., (x_pattern-1, y_pattern-1, 0),
        # then scale them by the square size so the results are in real-world units
        objp = np.zeros((y_pattern*x_pattern, 3), np.float32)
        objp[:, :2] = np.mgrid[0:x_pattern, 0:y_pattern].T.reshape(-1, 2)
        objp *= SQUARE_SIZE_FOR_CALIBRATION
        # Arrays to store object points and image points from all the images.
        objpoints = []  # 3d points in real world space
        imgpoints = []  # 2d points in image plane
        for filename in os.listdir(PATH_TO_FOLDER):
            if filename[-3:] == IMAGE_FORMAT:
                img = cv2.imread(os.path.join(PATH_TO_FOLDER, filename))
                if img is not None:
                    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
                    # Find the chessboard corners
                    chessboardFlags = cv2.CALIB_CB_ADAPTIVE_THRESH | cv2.CALIB_CB_NORMALIZE_IMAGE | cv2.CALIB_CB_FILTER_QUADS
                    ret, corners = cv2.findChessboardCorners(gray, PATTERN_SIZE, chessboardFlags)
                    # If found, add object points, image points (after refining them)
                    if ret:
                        # Add the corresponding object coordinate system points
                        objpoints.append(objp)
                        # Refine image coordinates
                        corners2 = cv2.cornerSubPix(gray, corners, (11, 11), (-1, -1), criteria)
                        # Add corresponding image coordinates
                        imgpoints.append(corners2)
                        # Draw and display the corners
                        if visualize:
                            img = cv2.drawChessboardCorners(img, PATTERN_SIZE, corners2, ret)
                            cv2.imshow('img', img)
                            cv2.waitKey(500)
        cv2.destroyAllWindows()
        ### STEP 2 : CAMERA CALIBRATION
        # cameraMatrix and distCoeffs are both None here. The flag has to be passed
        # by keyword: as the fifth positional argument it is taken as distCoeffs.
        ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(objpoints, imgpoints, gray.shape[::-1], None, None, flags=cv2.CALIB_RATIONAL_MODEL)

STEREO CAMERA CALIBRATION CODE:

    def stereocalibrate(PATH_TO_LEFT_IMAGES, PATH_TO_RIGHT_IMAGES, NUM_IMAGES, PATTERN_SIZE, VISUALIZATION, RECALIBRATE_INDIVIDUAL_CAMS):
        '''
        1. Detecting chessboard corners.
        2. Stereo calibration of cameras. (Geometry between cams)
        3. Stereo rectification of cameras. (Row aligned images)
        '''
        if RECALIBRATE_INDIVIDUAL_CAMS:
            left_camera = calibration(PATH_TO_LEFT_IMAGES, PATTERN_SIZE, VISUALIZATION, open(LEFT_CAMERA_DATA_FILE, 'wb'))
            right_camera = calibration(PATH_TO_RIGHT_IMAGES, PATTERN_SIZE, VISUALIZATION, open(RIGHT_CAMERA_DATA_FILE, 'wb'))
        else:
            left_camera = pickle.load(open(LEFT_CAMERA_DATA_FILE, 'rb'))
            right_camera = pickle.load(open(RIGHT_CAMERA_DATA_FILE, 'rb'))
        x_pattern, y_pattern = PATTERN_SIZE
        CURRENT_IMAGE = 1
        objp = np.zeros((y_pattern*x_pattern, 3), np.float32)
        objp[:, :2] = np.mgrid[0:x_pattern, 0:y_pattern].T.reshape(-1, 2)
        objp *= SQUARE_SIZE_FOR_CALIBRATION
        # Arrays to store these data for each test image
        obj_points = []        # real world coordinates of each image
        img_left_points = []   # left image coordinates of corners of each image
        img_right_points = []  # right image coordinates of corners of each image
        image_size = None
        ### STEP 1: DETECTING CHESSBOARD CORNERS
        while CURRENT_IMAGE <= NUM_IMAGES:
            # Load images and store the colour and grayscale versions separately
            _left_img = cv2.imread(PATH_TO_LEFT_IMAGES + "left" + str(CURRENT_IMAGE) + "." + IMAGE_FORMAT)
            left_img = cv2.cvtColor(_left_img, cv2.COLOR_BGR2GRAY)
            _right_img = cv2.imread(PATH_TO_RIGHT_IMAGES + "right" + str(CURRENT_IMAGE) + "." + IMAGE_FORMAT)
            right_img = cv2.cvtColor(_right_img, cv2.COLOR_BGR2GRAY)
            # Set the image size (in case of different resolutions, use the smaller one)
            if image_size is None:
                if left_img.shape[::-1] < right_img.shape[::-1]:
                    image_size = left_img.shape[::-1]
                else:
                    image_size = right_img.shape[::-1]
            # Find corners
            chessboard_flag = cv2.CALIB_CB_ADAPTIVE_THRESH | cv2.CALIB_CB_NORMALIZE_IMAGE | cv2.CALIB_CB_FILTER_QUADS
            left_found, left_corners = cv2.findChessboardCorners(left_img, PATTERN_SIZE, chessboard_flag)
            right_found, right_corners = cv2.findChessboardCorners(right_img, PATTERN_SIZE, chessboard_flag)
            # If chessboard corners are detected in both images, add the real world coordinates
            # and the corresponding left and right coordinates to their respective arrays
            if left_found and right_found:
                # matched_imgs is assumed to be a list defined outside this snippet
                matched_imgs.append(CURRENT_IMAGE)
                obj_points.append(objp)
                # Further refine image coordinates
                criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)
                left_corners2 = cv2.cornerSubPix(left_img, left_corners, (11, 11), (-1, -1), criteria)
                img_left_points.append(left_corners2)
                right_corners2 = cv2.cornerSubPix(right_img, right_corners, (11, 11), (-1, -1), criteria)
                img_right_points.append(right_corners2)
                if VISUALIZATION:
                    left_img_check = cv2.drawChessboardCorners(_left_img, PATTERN_SIZE, left_corners2, left_found)
                    right_img_check = cv2.drawChessboardCorners(_right_img, PATTERN_SIZE, right_corners2, right_found)
                    cv2.imshow("left", left_img_check)
                    cv2.imshow("right", right_img_check)
                    cv2.waitKey(500)
            CURRENT_IMAGE = CURRENT_IMAGE + 1
        cv2.destroyAllWindows()
        ### STEP 2 : STEREO-CALIBRATION
        # Individually calibrated camera parameters.
        _cameraMatrix1 = left_camera["matrix"]
        _cameraMatrix2 = right_camera["matrix"]
        _distCoeffs1 = left_camera["distCoeffs"]
        _distCoeffs2 = right_camera["distCoeffs"]
        stereocalib_criteria = (cv2.TERM_CRITERIA_MAX_ITER + cv2.TERM_CRITERIA_EPS, 100, 1e-5)
        # Fix the above calibrated data, don't change them, only optimize R, T, E, F
        stereocalib_flags = cv2.CALIB_FIX_INTRINSIC
        retval, cameraMatrix1, distCoeffs1, cameraMatrix2, distCoeffs2, R, T, E, F = cv2.stereoCalibrate(
            objectPoints=obj_points, imagePoints1=img_left_points, imagePoints2=img_right_points,
            imageSize=image_size, cameraMatrix1=_cameraMatrix1, distCoeffs1=_distCoeffs1,
            cameraMatrix2=_cameraMatrix2, distCoeffs2=_distCoeffs2,
            criteria=stereocalib_criteria, flags=stereocalib_flags)
        print("Stereo Error: ", retval)
        ### STEP 3: STEREO-RECTIFY
        # flags, alpha and newImageSize are passed by keyword: in the Python binding the
        # positional slots after T are the output arrays R1, R2, P1, P2, Q.
        R1, R2, P1, P2, Q, roi1, roi2 = cv2.stereoRectify(
            _cameraMatrix1, _distCoeffs1, _cameraMatrix2, _distCoeffs2, image_size, R, T,
            flags=cv2.CALIB_ZERO_DISPARITY, alpha=0, newImageSize=image_size)
        # Create undistortion maps: they map a pixel in the distorted image to a pixel in the
        # undistorted image. One map for the x coordinate and another for the y coordinate.
        left_map1, left_map2 = cv2.initUndistortRectifyMap(cameraMatrix1, distCoeffs1, R1, P1, image_size, cv2.CV_32FC1)
        right_map1, right_map2 = cv2.initUndistortRectifyMap(cameraMatrix2, distCoeffs2, R2, P2, image_size, cv2.CV_32FC1)
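One quick sanity check on the stereo calibration output is to compare the baseline implied by T with the distance between the two lenses measured by hand, since T comes out in the same units as the object points (centimetres here). The following is a minimal sketch of that check; `check_baseline`, its tolerance and the sample vector are hypothetical and not part of the code above.

```python
import numpy as np


def check_baseline(T, measured_cm, tolerance=0.1):
    """Return (baseline, ok): the length of T and whether it lies within
    the given relative tolerance of the hand-measured baseline."""
    T = np.asarray(T, dtype=np.float64).reshape(3)
    baseline = float(np.linalg.norm(T))
    relative_error = abs(baseline - measured_cm) / measured_cm
    return baseline, relative_error <= tolerance


# Hypothetical translation vector, shaped (3, 1) like the T returned by
# cv2.stereoCalibrate; the numbers are made up for illustration.
T_example = np.array([[-12.1], [0.2], [0.3]])
baseline, ok = check_baseline(T_example, measured_cm=12.0)
print("baseline from T:", baseline, "consistent with measurement:", ok)
```

If the baseline from T is far from the measured one, every depth computed from Q will be scaled by the same factor, which is worth ruling out before tuning the matcher.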

RECTIFICATION, DISPARITY GENERATION AND DEPTH CALCULATION:

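    left_img = cv2.imread(LEFT_CAMERA_IMAGES + "left1.png", cv2.IMREAD_UNCHANGED)
    right_img = cv2.imread(RIGHT_CAMERA_IMAGES + "right1.png", cv2.IMREAD_UNCHANGED)
    # Rectify both images with the maps from initUndistortRectifyMap
    left_img_remap = cv2.remap(left_img, left_map1, left_map2, cv2.INTER_LINEAR)
    right_img_remap = cv2.remap(right_img, right_map1, right_map2, cv2.INTER_LINEAR)
    # stereo is the StereoSGBM matcher (its creation is not shown here);
    # compute() returns fixed-point disparities scaled by 16
    disp = stereo.compute(left_img_remap, right_img_remap).astype(np.float32)/16.0
    # Reproject to 3D; (x, y) is the pixel of the white dot
    points = cv2.reprojectImageTo3D(disp, Q)
    XYZ = points[y][x]
    depth = XYZ[2]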
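To cross-check the value read from `points`, a single pixel can be pushed through Q by hand: [X, Y, Z, W] = Q · [x, y, d, 1], followed by division by W. The sketch below does this with NumPy only. The `reproject_pixel` helper and the Q matrix are hypothetical: Q is built from made-up values of f, cx, cy and Tx in the layout that `cv2.stereoRectify` produces with `CALIB_ZERO_DISPARITY`, and non-positive disparities are treated as invalid on the assumption that the matcher's minimum disparity is 0.

```python
import numpy as np


def reproject_pixel(Q, x, y, disparity):
    """Reproject one pixel with disparity d to 3D using Q.
    Returns None for non-positive (invalid) disparities."""
    if disparity <= 0:
        return None
    X, Y, Z, W = Q @ np.array([x, y, disparity, 1.0])
    return np.array([X, Y, Z]) / W


# Hypothetical rectified-camera parameters, for illustration only.
f, cx, cy = 800.0, 320.0, 240.0
Tx = -12.0  # cm; negative when the left camera is the reference

Q_example = np.array([
    [1.0, 0.0, 0.0, -cx],
    [0.0, 1.0, 0.0, -cy],
    [0.0, 0.0, 0.0, f],
    [0.0, 0.0, -1.0 / Tx, 0.0],
])

point = reproject_pixel(Q_example, 400, 240, 16.0)
print("X, Y, Z in cm:", point)
```

Running the same helper with the real Q and the disparity at the white dot should reproduce `XYZ`. If it does, the 272 cm reading comes from the disparity value or the calibration, not from the reprojection step.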