Hello

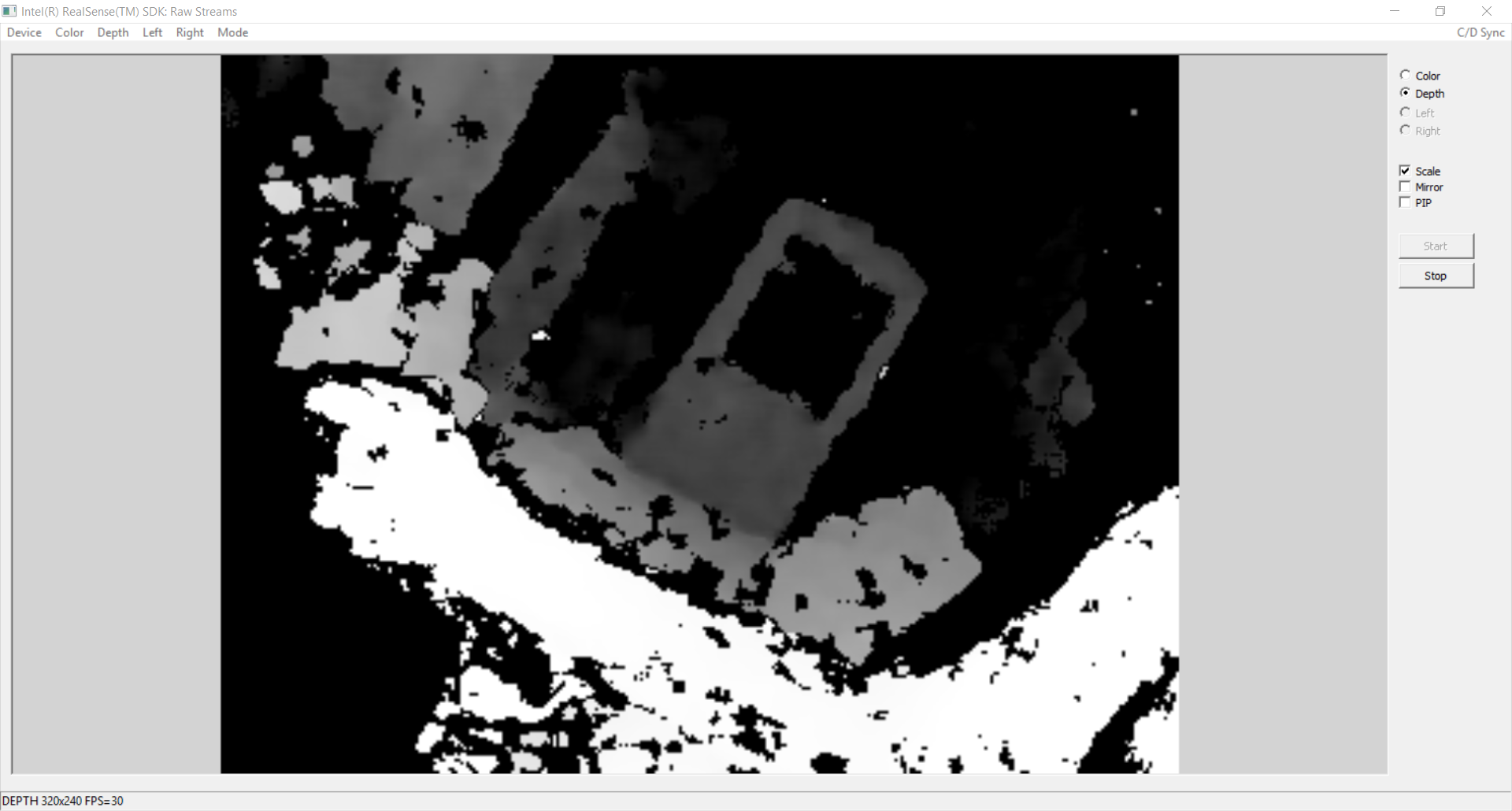

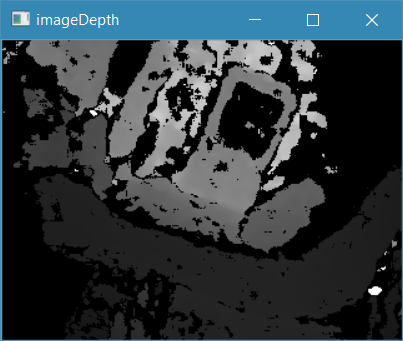

I am working with the RealSense R200 camera using Visual Studio(c++) and opencv. I need to use OpenCV to do image processing so I converted the PXCImage * into cv::Mat. But, as you can see, the two images are different.

My main problem is each pixel in my image when object closer camera it show black but in demo it show white.

Does anybody can help me?

Demo in realsense:

Result of my code use opencv:

void main() {

Mat imageColor;

Mat imageDepth;

// Create a PXCSenseManager instance

PXCSenseManager *sm = PXCSenseManager::CreateInstance();

// Select the color stream

sm->EnableStream(PXCCapture::STREAM_TYPE_COLOR, 640, 480, 30);

sm->EnableStream(PXCCapture::STREAM_TYPE_DEPTH, 320, 240, 30);

// Initialize and Stream Samples

sm->Init();

for (;;) {

// This function blocks until a color sample is ready

if (sm->AcquireFrame(true)<PXC_STATUS_NO_ERROR) break;

// retrieve the sample

PXCCapture::Sample *sample = sm->QuerySample();

if (sample->color != NULL) {

ConvertPXCImageToOpenCVMat(sample->color, &imageColor);

namedWindow("imageColor", CV_WINDOW_AUTOSIZE);

imshow("imageColor", imageColor);

}

if (sample->depth != NULL) {

ConvertPXCImageToOpenCVMat(sample->depth, &imageDepth);

imageDepth.convertTo(imageDepth, CV_8U, 255.0 / 4096.0);

namedWindow("imageDepth", CV_WINDOW_AUTOSIZE);

imshow("imageDepth", imageDepth);

}

if (waitKey(1) >= 0) break;

sm->ReleaseFrame();

}

// Close down

sm->Release();

}

//ConvertPXCImageToOpenCVMat From https://stackoverflow.com/questions/37266390/how-to-convert-realsense-rgb-frame-to-cvmat-in-ubuntu-or-other-linux-env

void ConvertPXCImageToOpenCVMat(PXCImage *inImg, Mat *outImg) {

int cvDataType;

int cvDataWidth;

PXCImage::ImageData data;

inImg->AcquireAccess(PXCImage::ACCESS_READ, &data);

PXCImage::ImageInfo imgInfo = inImg->QueryInfo();

switch (data.format) {

/* STREAM_TYPE_COLOR */

case PXCImage::PIXEL_FORMAT_YUY2: /* YUY2 image */

case PXCImage::PIXEL_FORMAT_NV12: /* NV12 image */

throw(0); // Not implemented

case PXCImage::PIXEL_FORMAT_RGB32: /* BGRA layout on a little-endian machine */

cvDataType = CV_8UC4;

cvDataWidth = 4;

break;

case PXCImage::PIXEL_FORMAT_RGB24: /* BGR layout on a little-endian machine */

cvDataType = CV_8UC3;

cvDataWidth = 3;

break;

case PXCImage::PIXEL_FORMAT_Y8: /* 8-Bit Gray Image, or IR 8-bit */

cvDataType = CV_8U;

cvDataWidth = 1;

break;

/* STREAM_TYPE_DEPTH */

case PXCImage::PIXEL_FORMAT_DEPTH: /* 16-bit unsigned integer with precision mm. */

case PXCImage::PIXEL_FORMAT_DEPTH_RAW: /* 16-bit unsigned integer with device specific precision (call device->QueryDepthUnit()) */

cvDataType = CV_16U;//cv16u

cvDataWidth = 2;

break;

case PXCImage::PIXEL_FORMAT_DEPTH_F32: /* 32-bit float-point with precision mm. */

cvDataType = CV_32F;

cvDataWidth = 4;

break;

/* STREAM_TYPE_IR */

case PXCImage::PIXEL_FORMAT_Y16: /* 16-Bit Gray Image */

cvDataType = CV_16U;

cvDataWidth = 2;

break;

case PXCImage::PIXEL_FORMAT_Y8_IR_RELATIVE: /* Relative IR Image */

cvDataType = CV_8U;

cvDataWidth = 1;

break;

}

// suppose that no other planes

if (data.planes[1] != NULL) throw(0); // not implemented

// suppose that no sub pixel padding needed

if (data.pitches[0] % cvDataWidth != 0) throw(0); // not implemented

outImg->create(imgInfo.height, data.pitches[0] / cvDataWidth, cvDataType);

memcpy(outImg->data, data.planes[0], imgInfo.height*imgInfo.width*cvDataWidth * sizeof(pxcBYTE));

inImg->ReleaseAccess(&data);

}