Hi all,

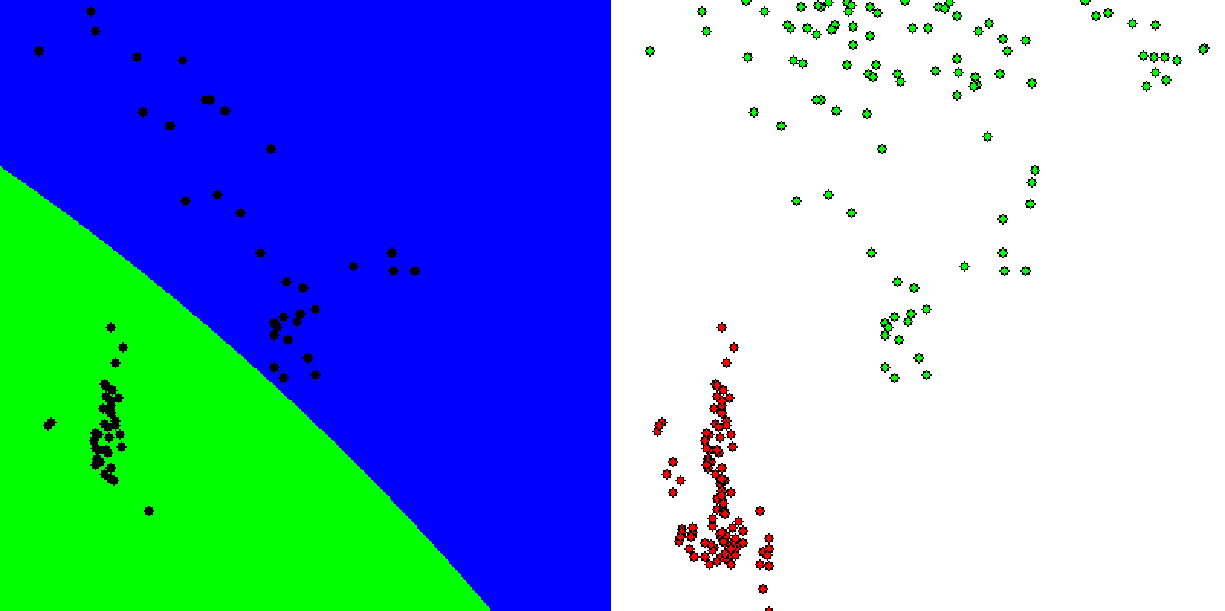

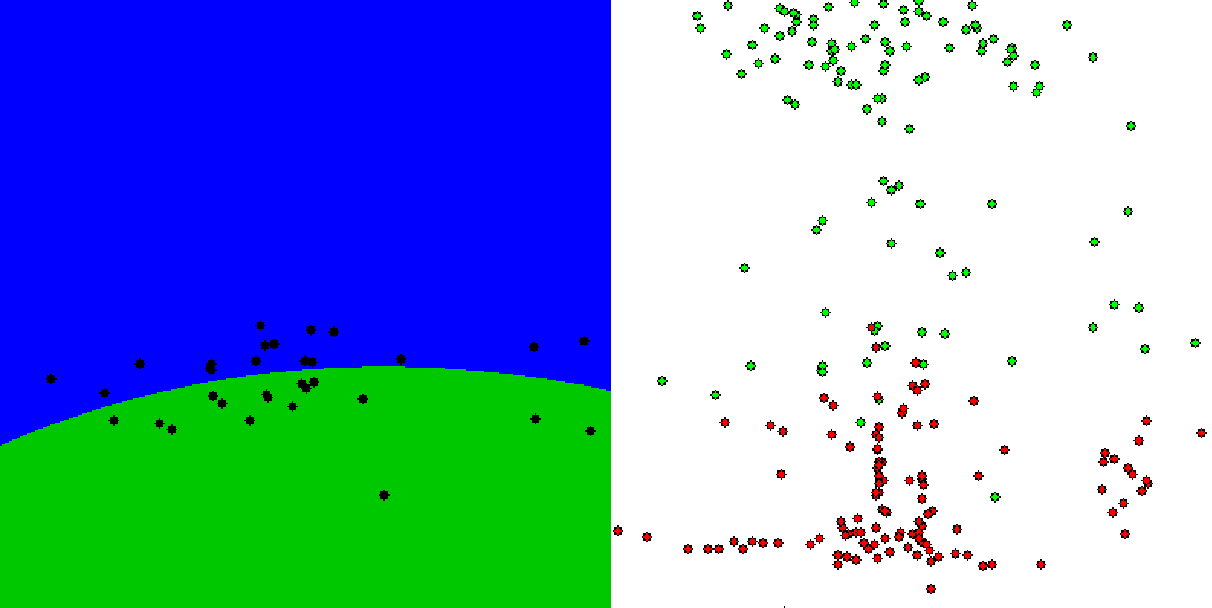

I am using the CUDA-based SURF implementation to extract keypoints in real time. The properties of the keypoints (the response value and some specific entries of the descriptor vector) are then used as features for SVM classification. The CPU version of OpenCV's SURF implementation works as expected. However, neither GPU variant (CUDA or OpenCL) does, because the entries of the descriptor vectors differ from those of the CPU version. As a consequence, my SVM yields poor classification results, as the figures show:

The first 2 figures show the training results (decision boundary/support vectors and training samples of both classes) for the CPU version and the last 2 figures show the results of the OpenCL version, respectively (... for the same input, of course).

For example, the first 10 entries of the [descriptors](http://docs.opencv.org/modules/nonfree/doc/feature_detection.html#surf-operator) (using the CPU version) are as follows:

[ -0.0076033915, -3.6063837e-005, 0.0099780094, 6.7446483e-005, -0.037933301, -0.0013044993, 0.069664747, 0.0016015058, 0.0415693, -0.00026058216, ... ],

whereas the ocl:: version yields (for the same input):

[ 0.011228492, 0.00025490398, 0.011255275, 0.00027764714, -0.058959745, -0.0031194154, 0.061687313, 0.0032532166, 0.0554257, 0.0012606722, ... ].

The CUDA variant (gpu::) returns:

[ 3.9510203e-005, 0.0077131023, 0.00025647611, 0.007940894, 0.0021626796, 0.056031514, 0.0069103027, 0.056422122, -0.00029563709, 0.049984295, ... ].

However, the detector responses are identical in all 3 versions.
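To put a number on how far the three outputs are apart, the ten entries quoted above can be compared directly. The following is only a minimal sketch in plain C++ (no OpenCV needed): the names `kCpu`, `kOcl`, `kCuda`, `maxAbsDiff`, `l2Distance` and `reportDescriptorDifferences` are made up for illustration, and the values are just the first 10 entries listed above, not the full descriptors.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdio>
#include <vector>

// First 10 descriptor entries as quoted in this post (hypothetical variable names).
static const std::vector<float> kCpu = {
    -0.0076033915f, -3.6063837e-05f, 0.0099780094f, 6.7446483e-05f, -0.037933301f,
    -0.0013044993f, 0.069664747f, 0.0016015058f, 0.0415693f, -0.00026058216f};
static const std::vector<float> kOcl = {
    0.011228492f, 0.00025490398f, 0.011255275f, 0.00027764714f, -0.058959745f,
    -0.0031194154f, 0.061687313f, 0.0032532166f, 0.0554257f, 0.0012606722f};
static const std::vector<float> kCuda = {
    3.9510203e-05f, 0.0077131023f, 0.00025647611f, 0.007940894f, 0.0021626796f,
    0.056031514f, 0.0069103027f, 0.056422122f, -0.00029563709f, 0.049984295f};

// Largest absolute element-wise difference over the common length of a and b.
static float maxAbsDiff(const std::vector<float>& a, const std::vector<float>& b)
{
    float worst = 0.0f;
    const std::size_t n = std::min(a.size(), b.size());
    for (std::size_t i = 0; i < n; ++i)
        worst = std::max(worst, std::fabs(a[i] - b[i]));
    return worst;
}

// Euclidean (L2) distance over the common length of a and b.
static float l2Distance(const std::vector<float>& a, const std::vector<float>& b)
{
    double sum = 0.0;
    const std::size_t n = std::min(a.size(), b.size());
    for (std::size_t i = 0; i < n; ++i) {
        const double d = static_cast<double>(a[i]) - static_cast<double>(b[i]);
        sum += d * d;
    }
    return static_cast<float>(std::sqrt(sum));
}

// Prints both metrics for CPU vs. OpenCL and CPU vs. CUDA.
static void reportDescriptorDifferences()
{
    std::printf("CPU vs ocl:  max|diff| = %g, L2 = %g\n",
                maxAbsDiff(kCpu, kOcl), l2Distance(kCpu, kOcl));
    std::printf("CPU vs gpu:  max|diff| = %g, L2 = %g\n",
                maxAbsDiff(kCpu, kCuda), l2Distance(kCpu, kCuda));
}
```

Calling `reportDescriptorDifferences()` from `main` prints both metrics for each pair. The same two helpers could be applied to the full descriptor rows once they are copied out of the respective `Mat` objects.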

BTW: The ctor of SURF_GPU expects the parameter _keypointsRatio, as stated in the documentation. What is this parameter for? (Maybe it's related to my issue?)

Another OpenCL-related problem is also described here. In short: all apps (incl. the official samples) that use ocl:: API calls crash with a segfault when they terminate. Unfortunately, the debugger doesn't provide any further hints, as it just takes me to the disassembly (without any high-level code):

Unhandled exception at 0x00000000 in oclocv.exe: 0xC0000005: Access violation at 0x0000000000000000.

Looks like someone dereferences a null pointer?! Note that the error does not occur when I only call ocl::getDevice() (or make no ocl:: calls at all). Also, is this a known issue, given that the OCL module is rather "experimental"?

Any help or hints are highly appreciated! Thanks in advance! :-)

Setup:

- GTX 560 Ti, GF114 (AFAIK, still Fermi arch.), Driver v320.49 (latest)

- Win7-64 Prof. and CUDA SDK 5.0, OpenCV 2.4.5

PS: If you need more information, just ask! ;-)