In this post, @Tetragramm clarified the documentation of the generalized Hough transform in OpenCV a bit.

As I still cannot find any tutorial or further information about this implementation, I hoped someone could help me get the following crashing code to work. Update: @berak found a sample of this function, and I was able to fix the code so that it no longer crashes. (Thank you very much!)

The remaining question is why the key instance is not found properly. I assume it is related to the threshold that has to be set for Canny.

The documentation for setCannyHighThresh only says: "Canny low/high threshold." I can't find templ.png from the given example, and I would assume that both input images are filtered with the same Canny filter, aren't they?

Update: I found both images used in the sample, pic1.png and templ.png, in this folder.

My problem is that even with the given example settings I get wrong results: I detect 4 wrong objects, shown in the output image below. Can someone try the code from the sample with these pictures as a confirmation?


The key images are taken from this tutorial.

Thank you for your help!

```cpp
#include <iostream>
#include <vector>
#include <opencv2/core.hpp>
#include <opencv2/core/utility.hpp> // TickMeter
#include <opencv2/imgcodecs.hpp>    // imread
#include <opencv2/imgproc.hpp>      // GeneralizedHoughGuil, cvtColor, line
#include <opencv2/highgui.hpp>      // imshow, waitKey

using namespace cv;
using namespace std;

int main()
{
    Mat queryImage,       // image where the template key is located
        bigTemplateImage, // uncropped template
        templateImage;    // cropped template

    queryImage = imread("b.bmp", IMREAD_GRAYSCALE);
    bigTemplateImage = imread("template_original.jpg", IMREAD_GRAYSCALE);
    if (queryImage.empty() || bigTemplateImage.empty())
    {
        cerr << "Could not load the input images" << endl;
        return 1;
    }

    // Crop template (the ROI must lie inside bigTemplateImage)
    Rect myROI(197, 36, 80, 165);
    templateImage = bigTemplateImage(myROI);

    Ptr<GeneralizedHoughGuil> ghB = createGeneralizedHoughGuil();
    ghB->setTemplate(templateImage);

    vector<Vec4f> position; // each detection: (x, y, scale, angle)

    TickMeter tm;
    tm.start();
    ghB->detect(queryImage, position);
    tm.stop();

    cout << "Found : " << position.size() << " objects" << endl;
    cout << "Detection time : " << tm.getTimeMilli() << " ms" << endl;

    Mat out;
    cvtColor(queryImage, out, COLOR_GRAY2BGR);

    for (size_t i = 0; i < position.size(); ++i)
    {
        Point2f pos(position[i][0], position[i][1]);
        float scale = position[i][2];
        float angle = position[i][3];

        // The box is the *template* size times the detected scale.
        // Using queryImage.cols/rows here draws boxes as large as the whole image.
        RotatedRect rect;
        rect.center = pos;
        rect.size = Size2f(templateImage.cols * scale, templateImage.rows * scale);
        rect.angle = angle;

        Point2f pts[4];
        rect.points(pts);

        // Draw the four edges of the rotated box
        for (int k = 0; k < 4; ++k)
            line(out, pts[k], pts[(k + 1) % 4], Scalar(0, 0, 255), 3);
    }

    imshow("out", out);
    waitKey();

    return 0;
}
```

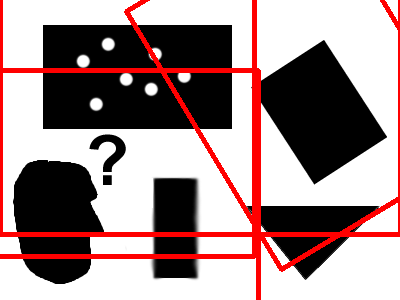

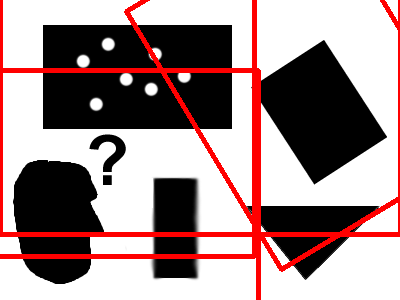

b.bmp (query image) and template_original.jpg (uncropped template)

Result of the sample code:

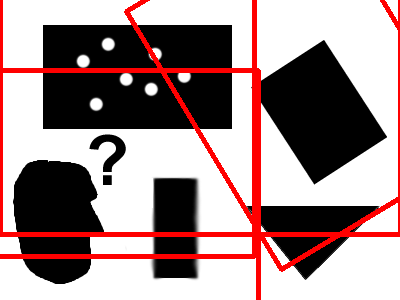
