Hi,

I am using LatentSVM in opencv 2.4 on models from opencv_extra as suggested here.

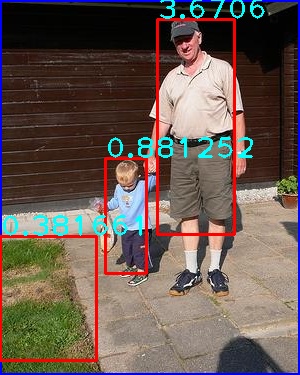

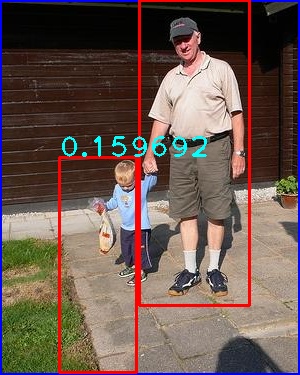

This is a voc sample Test image,

I noticed different scores and bounding boxes each 1-pixel shift, what are the causes of this variation although the object is fully present in these cases? Here is the code:

#include <opencv2/contrib/contrib.hpp>

#include <opencv2/core/core.hpp>

#include <opencv2/core/core_c.h>

#include <opencv2/core/mat.hpp>

#include <opencv2/core/operations.hpp>

#include <opencv2/core/types_c.h>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/imgproc/imgproc_c.h>

#include <opencv2/imgproc/types_c.h>

#include <opencv2/objdetect/objdetect.hpp>

#include <opencv2/video/background_segm.hpp>

#include <iostream>

#include <vector>

#include <sstream>

using namespace std;

using namespace cv;

vector<pair<Rect, float> > detectWithLSVM(Mat img, LatentSvmDetector& detector, float overlapThreshold,

float confThresh) {

vector<pair<Rect, float> > res;

vector<LatentSvmDetector::ObjectDetection> detections;

detector.detect(img, detections, overlapThreshold);

for (int i = 0; i < detections.size(); i++) {

if (detections[i].score < confThresh)

continue;

Rect r = detections[i].rect;

res.push_back(make_pair(r, detections[i].score));

}

return res;

}

int main(int argc, char **argv) {

LatentSvmDetector detector(vector<String>(1, "person.xml"));

Mat image = imread("09.jpg",1);

if (image.empty()) {

cout << "Frame is empty! .. Quit" << endl;

return 0;

}

int width = 300;

string sstr;

for (int i = 0; i < image.cols - width; i++) {

Mat imaged = image.clone();

Mat roi;

Rect rectInput;

rectInput = Rect(i, 0, width, image.rows);

cout << rectInput << endl;

roi = image(rectInput).clone();

vector<pair<Rect, float> > r = detectWithLSVM(roi, detector, 0.1, 0.0);

rectangle(imaged, rectInput, Scalar(255, 0, 0), 2);

for (int ridx = 0; ridx < r.size(); ridx++) {

r[ridx].first.x += rectInput.x;

r[ridx].first.y += rectInput.y;

rectangle(imaged, r[ridx].first, Scalar(0, 0, 255), 2);

stringstream ss;

ss<<r[ridx].second;

ss >> sstr;

putText(imaged, sstr, Point(r[ridx].first.x, r[ridx].first.y), 1, 2, Scalar(255, 255, 0), 2);

imshow("LSVM",imaged);

waitKey(1);

}

}

return 0;

}

- I want to ask about why different translation/roi image size produces different scores and predictions? And are there any way to guarantee what kind of displacements are best to achieve good detection (e.g the object is in the middle or in the boarder of the image, etc)

- Are there any down resolution factors other than HOG blocks calculation? like stride in convolution and score calculation step, is it dense overlapped with 1 pixel stride?

- For speeding up the detection, I changed LAMBDA to 5 instead of 10. Other than misdetection from different pyramid scale size, are there any miscalculation or dependent parameters in LSVM code to be ware of? or is it safe to change LAMBDA only limiting pyramid scale size?