Hello,

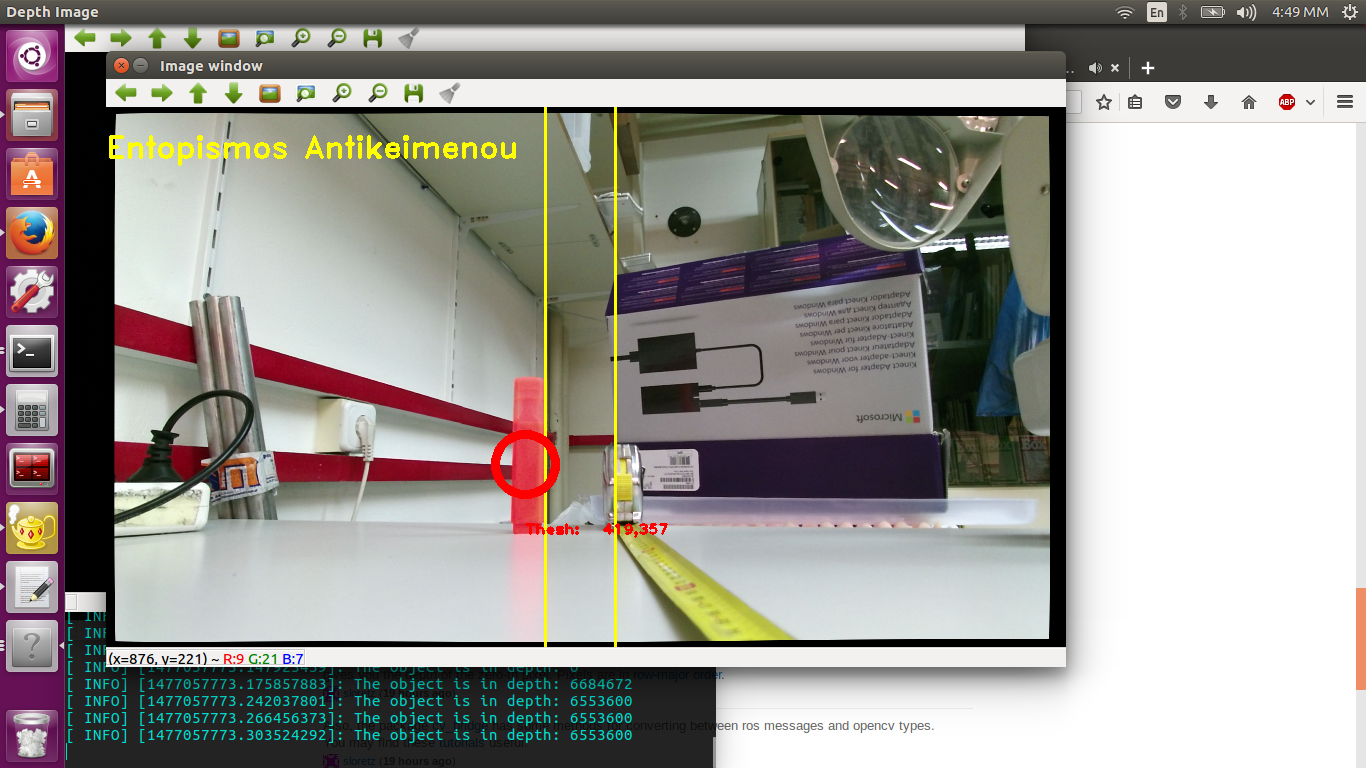

I am working with ROS Kinetic and I am using a Kinect v2. I am generally new to depth sensors and as a undergraduate student I don't have someone to ask these kind of questions so please bare with me. I have managed to get both color and depth image's data and detect an object from its color. From this detection, I find its position in the 2d image (let's say (x,y)) and I am trying with these points to go to the depth image and detect its depth. I am using mainly qhd resolutions.

To be more specific:

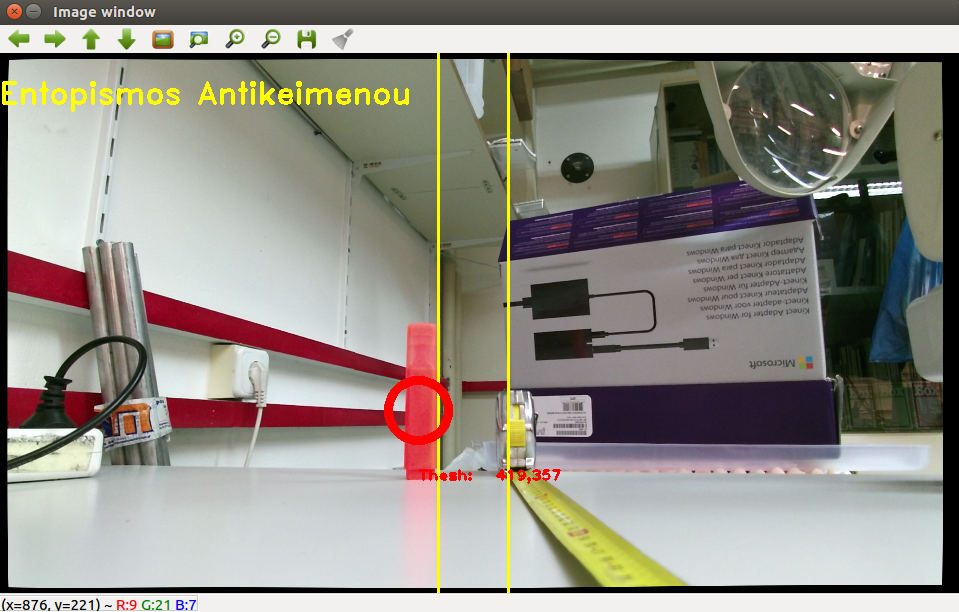

Here is the color detection in the rgb image:

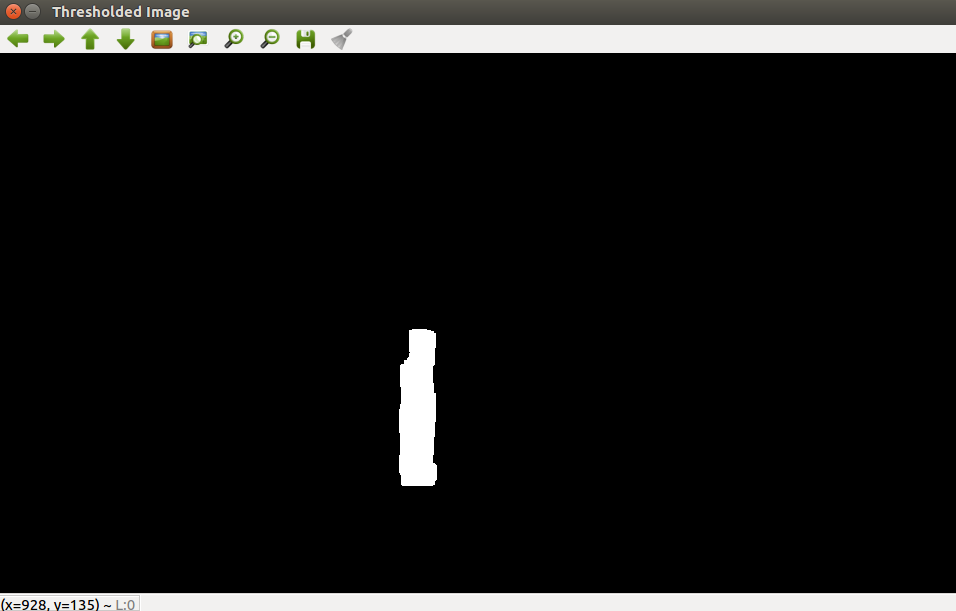

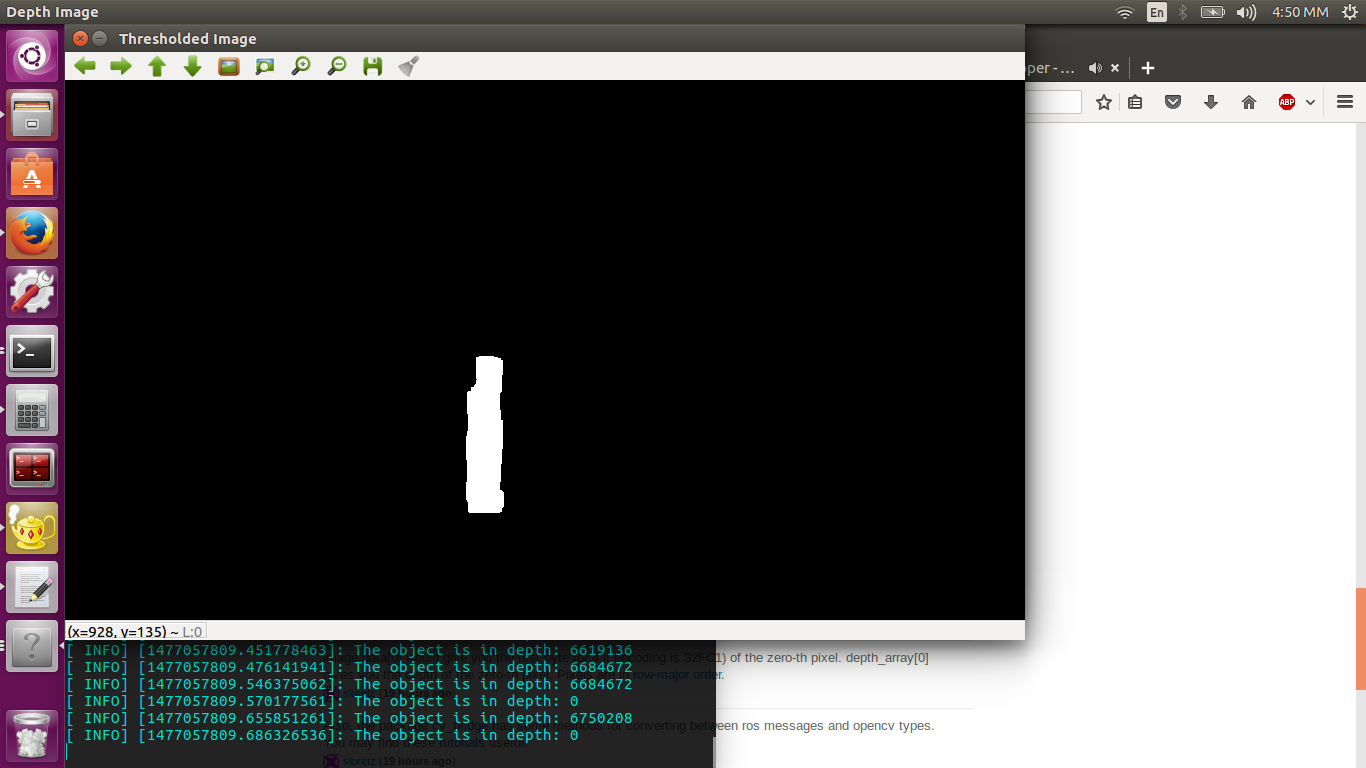

Here is the thresholded image:

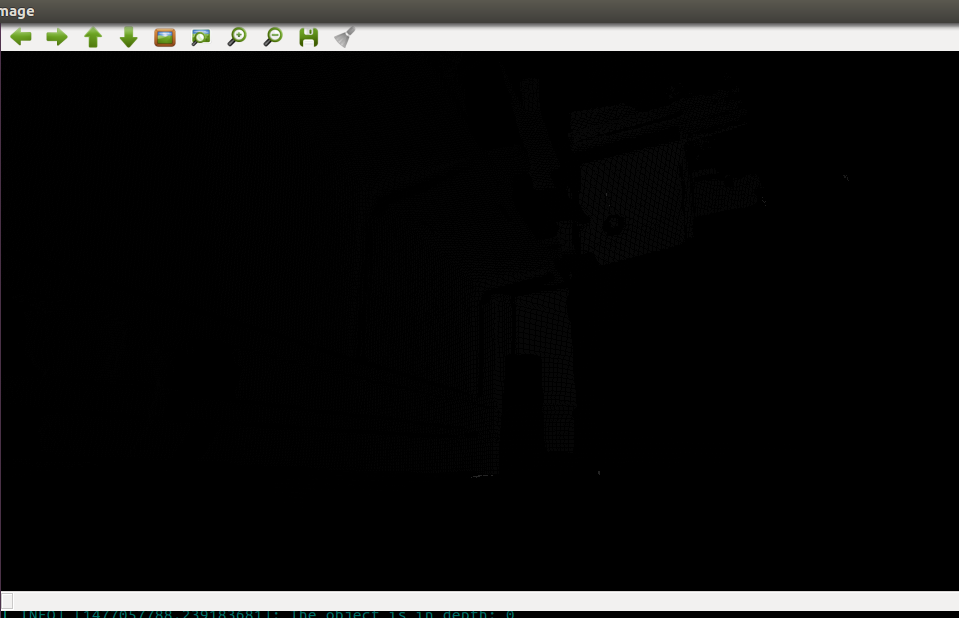

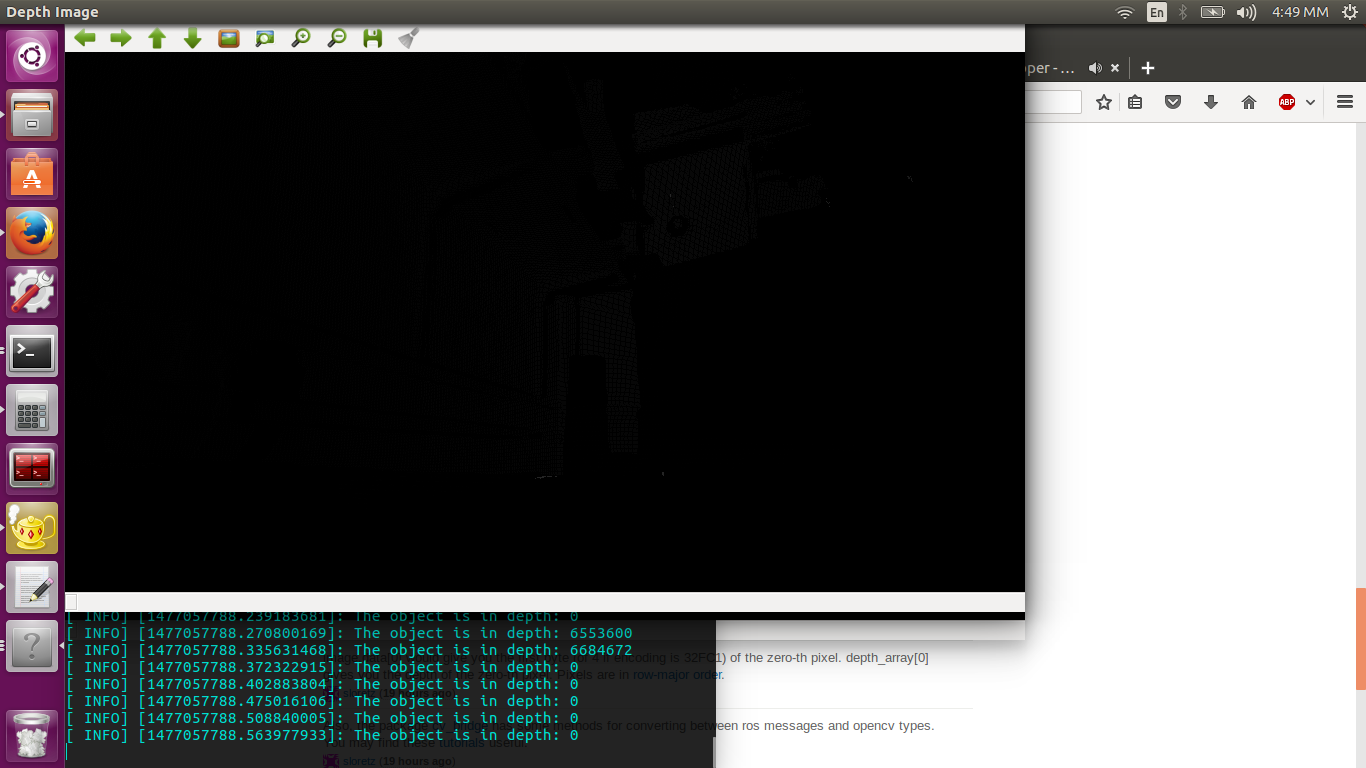

and here is the depth image I get:

I have measured the distance from my object at 48cm but what I get from the depth image is 0 distance. The depth image is mostly black, but you can see some points that indicate some kind of depth.

A snapshot of my code for the kinect's depth callback function is presented below:

void kinectdepthCallback(const sensor_msgs::ImageConstPtr& msg2){

cv_bridge::CvImagePtr cv_ptr2;

try{

cv_ptr2 = cv_bridge::toCvCopy(msg2, sensor_msgs::image_encodings::TYPE_16UC1);

}

catch (cv_bridge::Exception& e){

ROS_ERROR("Could not convert from '%s' to '16UC1'.", e.what());

return;

}

cv::imshow("Depth Image",cv_ptr2->image);

cv::waitKey(20);

ROS_INFO("The object is in depth: %d",cv_ptr2->image.at<int>(Point(x,y)));

}

My main question is what is the most probable cause of getting zeros instead of non zero depth data even if I have put my object at a distance long enough for the sensor to detect (48-49 cm)?

I would also like to ask how can I convert the raw data to meters?

Is this malfunction caused maybe from bad calibration of the sensor ?

I would greatly appreciate your help, especially from someone who has worked with depth sensors and ROS.

Thank you for your answers and for your time in advance,

Chris