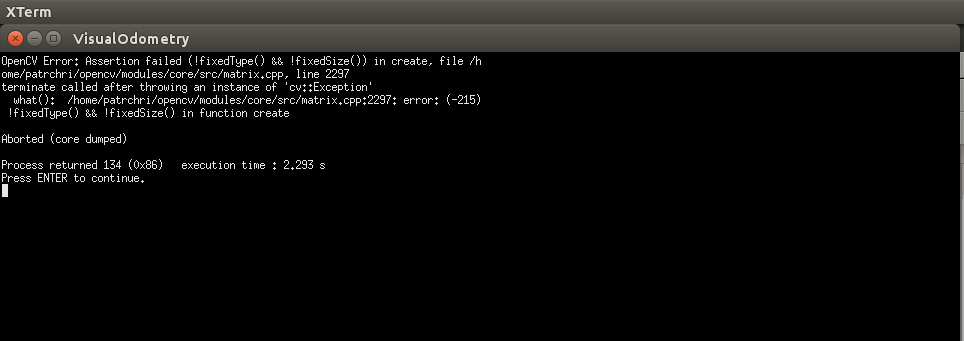

I am using findEssentialMat() function so I can later recover the relative pose (translation and rotation) between two following frames of a webcam with recoverPose(). However, I get an asserion failed error:

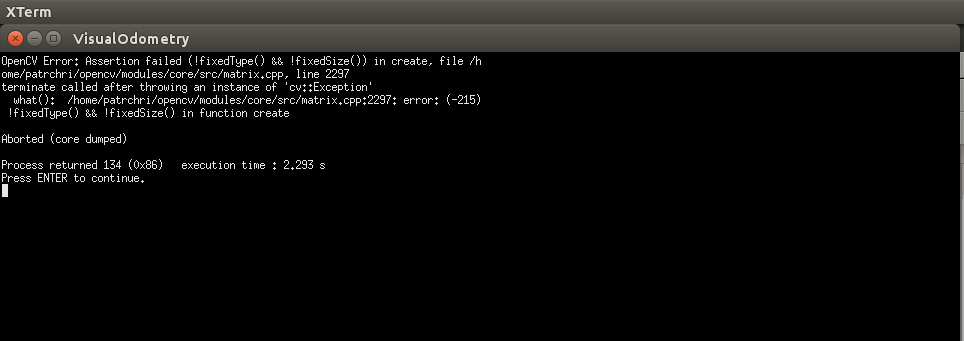

OpenCV Error: Assertion failed(!fixedType() && !fixedSize()) in create, file /home/patrchri/opencv/modules/core/src/matrix.cpp, line 2297

To be more specific, I have written the following in a while loop which reads the following frames :

vector<KeyPoint> kpointsframe1;

vector<KeyPoint> kpointsframe2;

vector<Point2f> pointsframe1;

vector<Point2f> pointsframe2;

vector<Point2f> velocityvect;

vector<uchar> status;

vector<float> err;

Size winSize = Size(21,21);

TermCriteria termcrit = TermCriteria(TermCriteria::COUNT+TermCriteria::EPS,30,0.01);

Mat Essential,R,T;

capture.read(frame1);

capture.read(frame2);

undistort(frame1,frame1undist,CameraMatrix,distCoeffs);

undistort(frame2,frame2undist,CameraMatrix,distCoeffs);

cvtColor(frame1undist,frame1gray,CV_BGR2GRAY);

cvtColor(frame2undist,frame2gray,CV_BGR2GRAY);

//goodFeaturesToTrack(frame1gray,corners,500,qualityLevel,minDistance,Mat(),blockSize,useHarrisDetector);

FAST(frame1gray,kpointsframe1,THRESHOLD_DIFFERENCE,false,FastFeatureDetector::TYPE_9_16);

for(int i=0;i<kpointsframe1.size();i++) pointsframe1.push_back(kpointsframe1.at(i).pt);

calcOpticalFlowPyrLK(frame1gray,frame2gray,pointsframe1,pointsframe2,status,err,winSize,3,termcrit,0,0.001);

int ind = 0;

for( int i=0; i<status.size(); i++){

Point2f pt = pointsframe2.at(i- ind);

if ((status.at(i) == 0)||(pt.x<0)||(pt.y<0)){

if((pt.x<0)||(pt.y<0)){

status.at(i) = 0;

}

pointsframe1.erase(pointsframe1.begin()+(i-ind));

pointsframe2.erase(pointsframe2.begin()+(i-ind));

ind++;

}

}

Point2d pp(320,240);

double focal = 9.5327626068874099e+02;

Essential = findEssentialMat(pointsframe1,pointsframe2,CameraMatrix,RANSAC,0.999,1,Mat());

recoverPose(Essential,pointsframe1,pointsframe2,CameraMatrix,R,T,Mat());

I have declared the CameraMatrix outside this while loop.

The issue arises from the findEssentialMat() but I am confused on what is wrong with it.

I searched for similar assertion failures but I couldn't find something that it would be helpful. Surely the error comes from the sizes of the Mats used.

What could be wrong with it?

Thank you for your answers and for your time in advance,

Chris

1st EDIT:

The CameraMatrix has been declared as follows after the callibration of the webcam:

double data[3][3];

data[0][0] = 9.5327626068874099e+02;

data[0][1] = 0.0;

data[0][2] = 320.;

data[1][0] = 0.0;

data[1][1] = 9.5327626068874099e+02;

data[1][2] = 240.0;

data[2][0] = 0.0;

data[2][1] = 0.0;

data[2][2] = 1.0;

double avg_reprojection_error = 3.5640854681839190e-01;

Mat CameraMatrix(3,3,CV_64F,&data);

2nd EDIT:

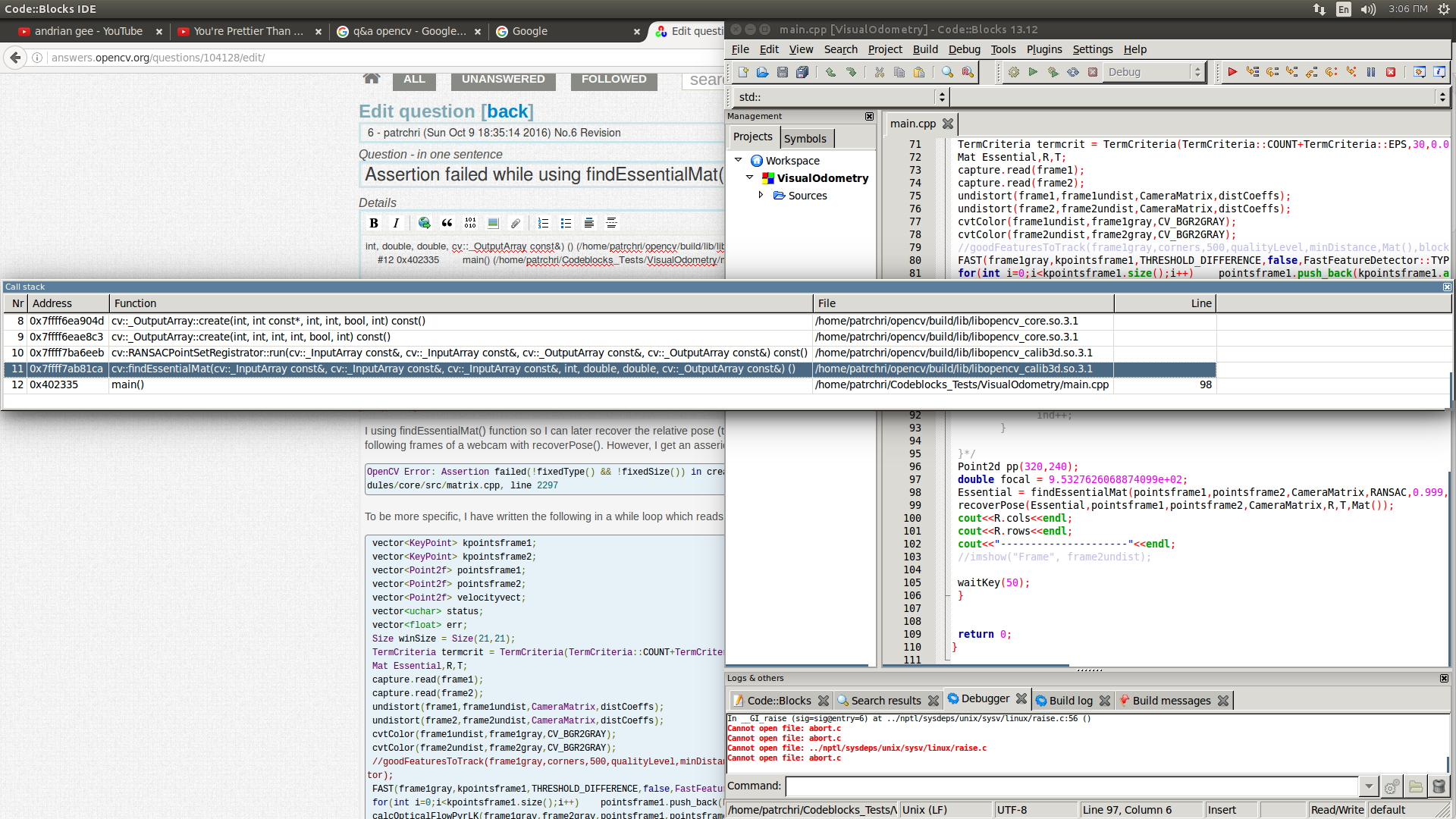

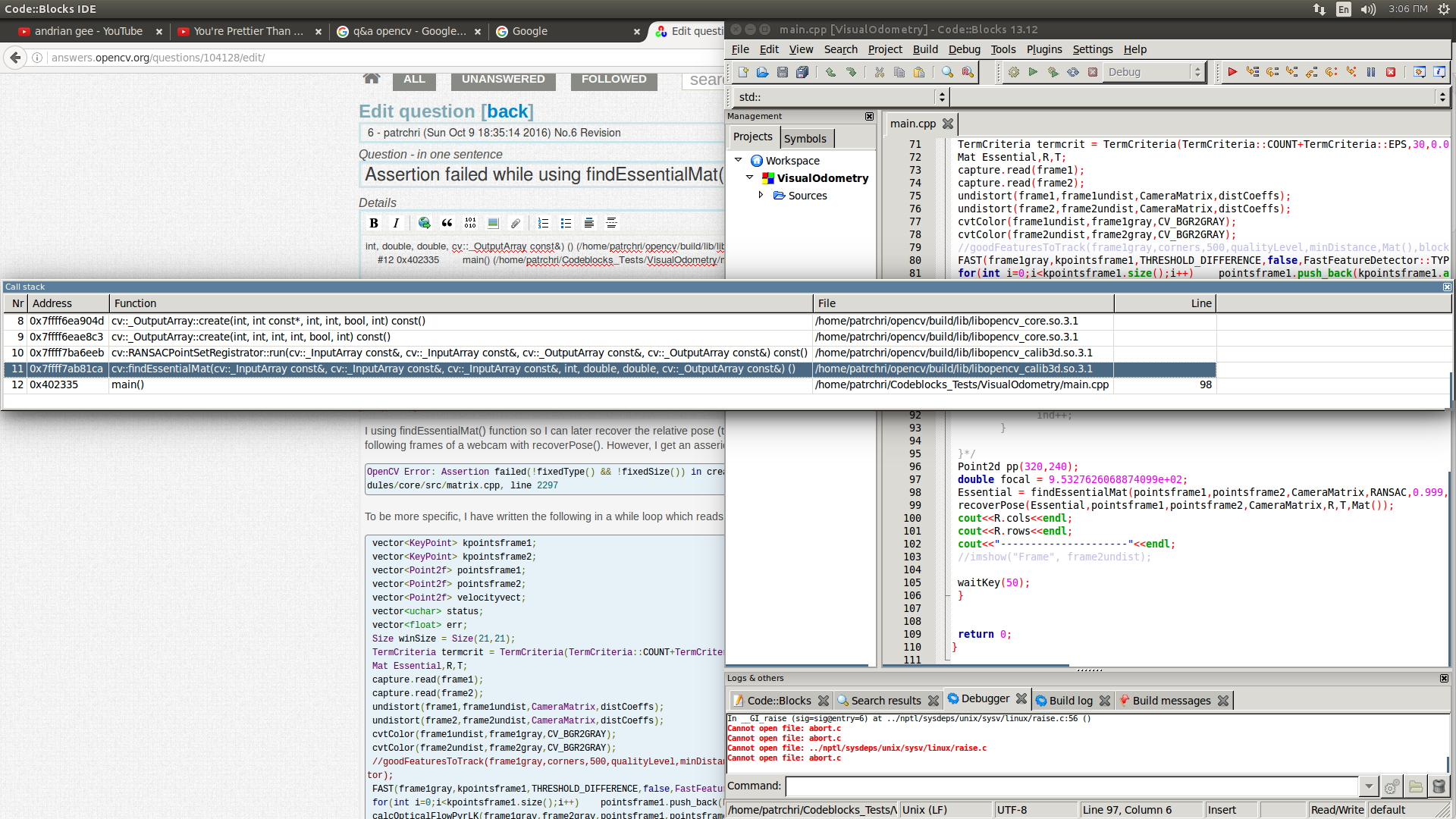

The full printscreen of the error is the following:

If I follow the trace I see that it comes from:

void _OutputArray::create(int d, const int* sizes, int mtype, int i,

bool allowTransposed, int fixedDepthMask) const

{

int k = kind();

mtype = CV_MAT_TYPE(mtype);

if( k == MAT )

{

CV_Assert( i < 0 );

Mat& m = *(Mat*)obj;

if( allowTransposed )

{

if( !m.isContinuous() )

{

--->CV_Assert(!fixedType() && !fixedSize());<---- (That is line 2297)

m.release();

}

if( d == 2 && m.dims == 2 && m.data &&

m.type() == mtype && m.rows == sizes[1] && m.cols == sizes[0] )

return;

}

if(fixedType())

{

if(CV_MAT_CN(mtype) == m.channels() && ((1 << CV_MAT_TYPE(flags)) & fixedDepthMask) != 0 )

mtype = m.type();

else

CV_Assert(CV_MAT_TYPE(mtype) == m.type());

}

3rd EDIT:

// Input should be a vector of n 2D points or a Nx2 matrix

cv::Mat cv::findEssentialMat( InputArray _points1, InputArray _points2, InputArray _cameraMatrix,

int method, double prob, double threshold, OutputArray _mask)

{

CV_INSTRUMENT_REGION()

Mat points1, points2, cameraMatrix;

_points1.getMat().convertTo(points1, CV_64F);

_points2.getMat().convertTo(points2, CV_64F);

_cameraMatrix.getMat().convertTo(cameraMatrix, CV_64F);

int npoints = points1.checkVector(2);

--> CV_Assert( npoints >= 0 && points2.checkVector(2) == npoints &&

points1.type() == points2.type());

--> CV_Assert(cameraMatrix.rows == 3 && cameraMatrix.cols == 3 && cameraMatrix.channels() == 1);

if (points1.channels() > 1)

{

points1 = points1.reshape(1, npoints);

points2 = points2.reshape(1, npoints);

}

double fx = cameraMatrix.at<double>(0,0);

double fy = cameraMatrix.at<double>(1,1);

double cx = cameraMatrix.at<double>(0,2);

double cy = cameraMatrix.at<double>(1,2);

points1.col(0) = (points1.col(0) - cx) / fx;

points2.col(0) = (points2.col(0) - cx) / fx;

points1.col(1) = (points1.col(1) - cy) / fy;

points2.col(1) = (points2.col(1) - cy) / fy;

// Reshape data to fit opencv ransac function

points1 = points1.reshape(2, npoints);

points2 = points2.reshape(2, npoints);

threshold /= (fx+fy)/2;

Mat E;

if( method == RANSAC )

createRANSACPointSetRegistrator(makePtr<EMEstimatorCallback>(), 5, threshold, prob)->run(points1, points2, E, _mask);

else

createLMeDSPointSetRegistrator(makePtr<EMEstimatorCallback>(), 5, prob)->run(points1, points2, E, _mask);

return E;

}

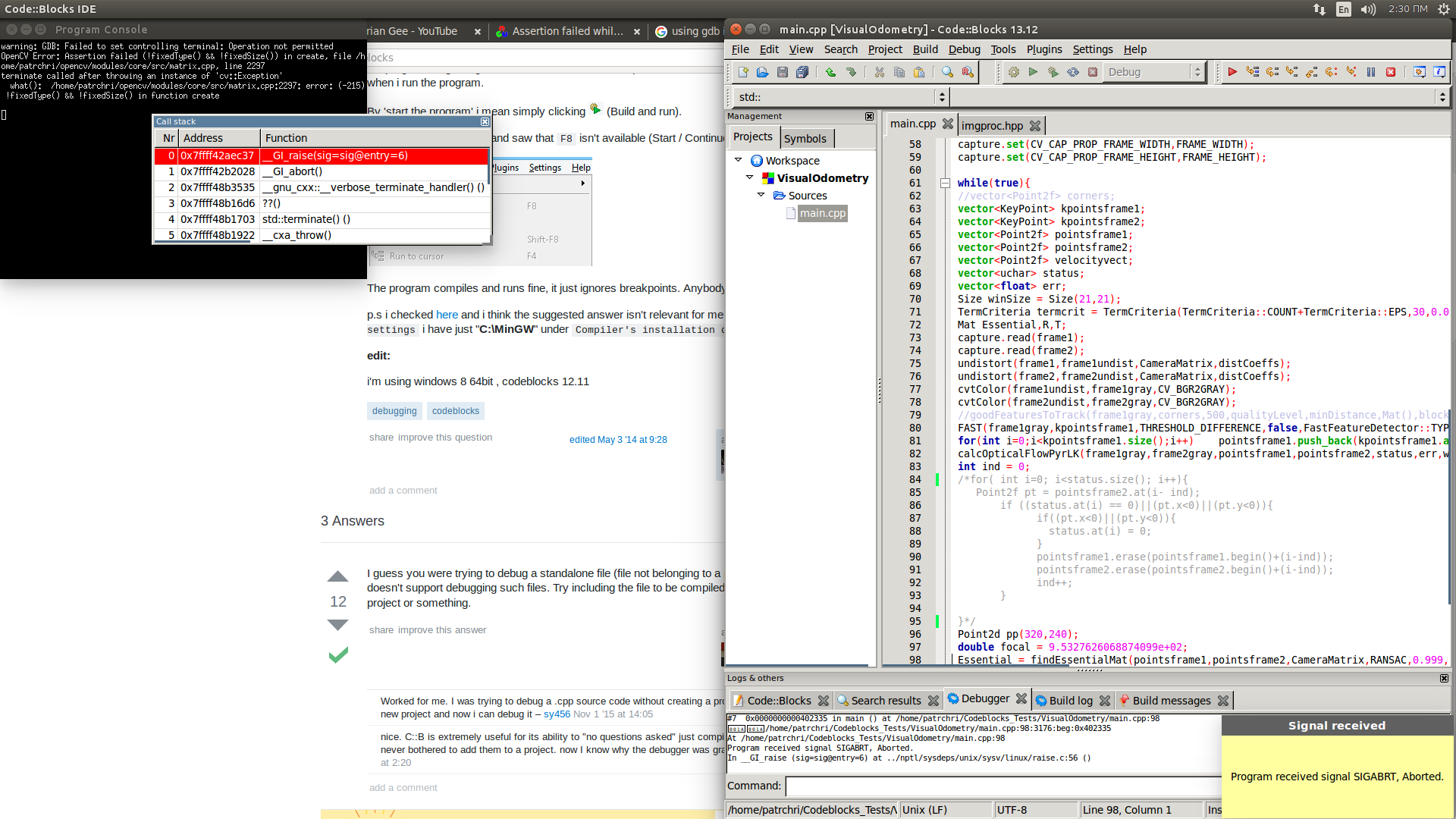

4th EDIT:

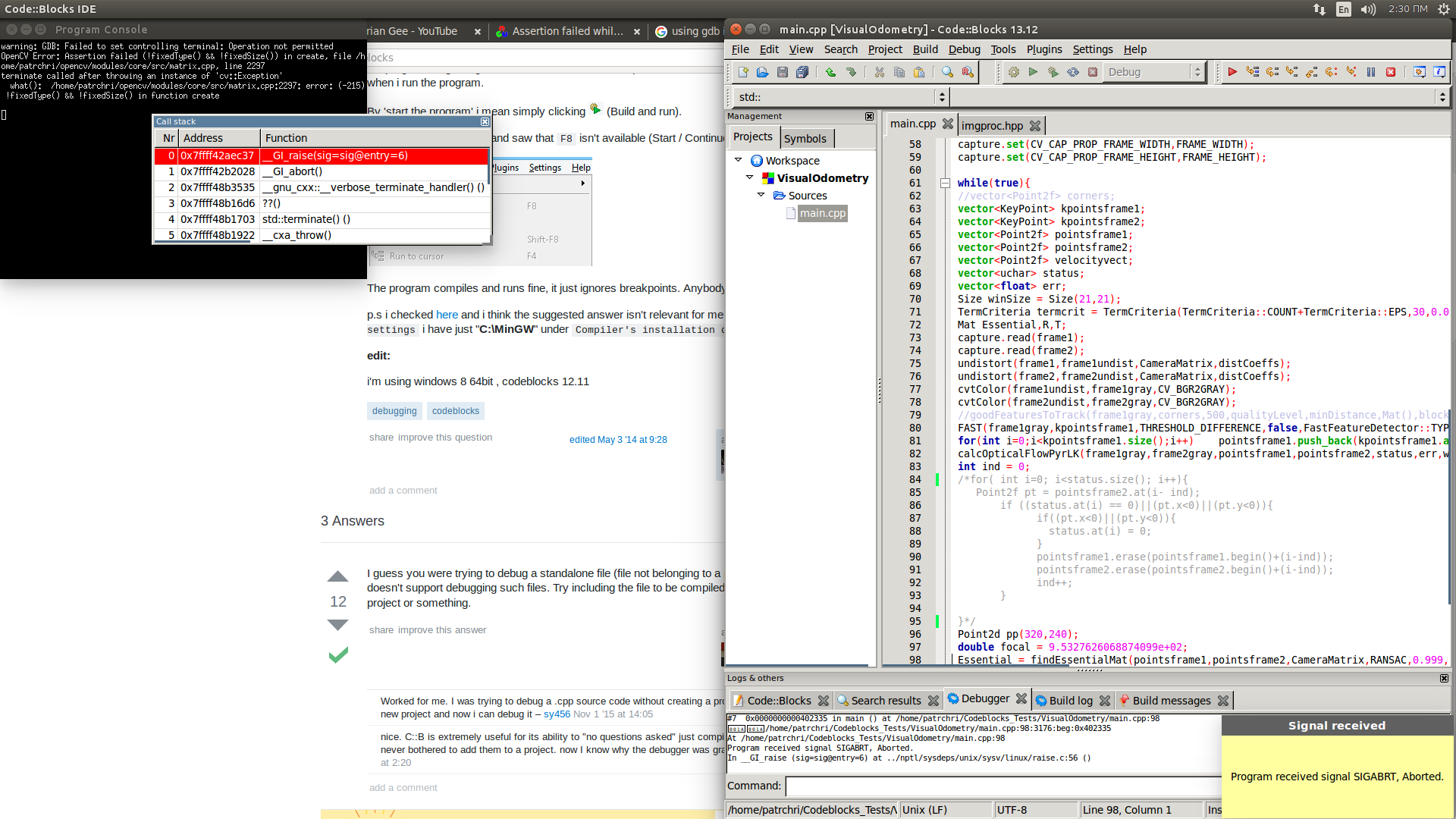

I am not an expert in debugging but I used the default debugger of codeblocks and I got the following results at the line of the calling of the findEssentialMat() function:

#0 0x7ffff42aec37 __GI_raise(sig=sig@entry=6) (../nptl/sysdeps/unix/sysv/linux/raise.c:56)

#1 0x7ffff42b2028 __GI_abort() (abort.c:89)

#2 0x7ffff48b3535 __gnu_cxx::__verbose_terminate_handler() () (/usr/lib/x86_64-linux-gnu/libstdc++.so.6:??)

#3 0x7ffff48b16d6 ??() (/usr/lib/x86_64-linux-gnu/libstdc++.so.6:??)

#4 0x7ffff48b1703 std::terminate() () (/usr/lib/x86_64-linux-gnu/libstdc++.so.6:??)

#5 0x7ffff48b1922 __cxa_throw() (/usr/lib/x86_64-linux-gnu/libstdc++.so.6:??)

#6 0x7ffff6f79560 cv::error(cv::Exception const&) () (/home/patrchri/opencv/build/lib/libopencv_core.so.3.1:??)

#7 0x7ffff6f796e0 cv::error(int, cv::String const&, char const*, char const*, int) () (/home/patrchri/opencv/build/lib/libopencv_core.so.3.1:??)

#8 0x7ffff6ea904d cv::_OutputArray::create(int, int const*, int, int, bool, int) const() (/home/patrchri/opencv/build/lib/libopencv_core.so.3.1:??)

#9 0x7ffff6eae8c3 cv::_OutputArray::create(int, int, int, int, bool, int) const() (/home/patrchri/opencv/build/lib/libopencv_core.so.3.1:??)

#10 0x7ffff7ba6eeb cv::RANSACPointSetRegistrator::run(cv::_InputArray const&, cv::_InputArray const&, cv::_OutputArray const&, cv::_OutputArray const&) const() (/home/patrchri/opencv/build/lib/libopencv_calib3d.so.3.1:??)

#11 0x7ffff7ab81ca cv::findEssentialMat(cv::_InputArray const&, cv::_InputArray const&, cv::_InputArray const&, int, double, double, cv::_OutputArray const&) () (/home/patrchri/opencv/build/lib/libopencv_calib3d.so.3.1:??)

#12 0x402335 main() (/home/patrchri/Codeblocks_Tests/VisualOdometry/main.cpp:98)

I still cannot understand why I get this error.

5th EDIT:

Printscreen of the call stack window with the lines: