Goal: I want to calibrate and rectify my Zed camera. The input video feed leads to some complications. However, this method produces fewer headaches than buying a new computer with an NVIDIA GPU just for the sake of using the proprietary software (which may or may not allow for the end goal). For now, all I want to do is calibrate my camera so I can start estimating the sizes and distances of (first known, then unknown) objects.

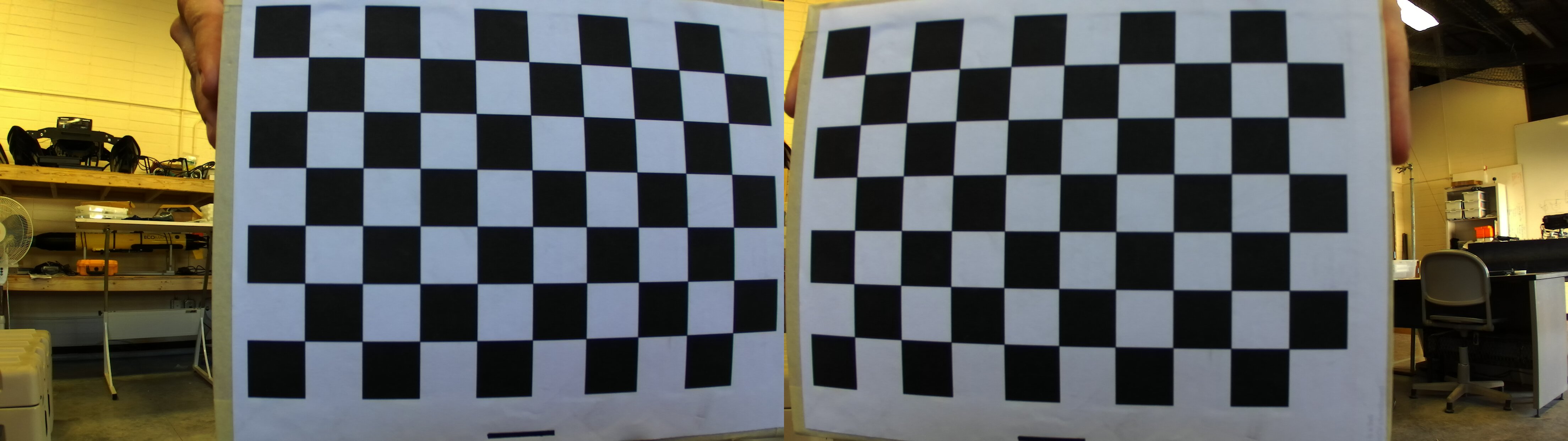

For reference, this is what the Zed camera's video looks like:

FIG. 1 - Raw image from Zed Camera.

I figured out a quick-and-dirty method of splitting the video feed. Generally:

    cap = cv2.VideoCapture(1) # video sourced from Zed camera

    while(True):
        # Capture frame-by-frame
        ret, frame = cap.read()

        # partition video
        vidL = frame[0:1080, 0:1280] # left camera
        vidR = frame[0:1080, 1281:2560] # right camera

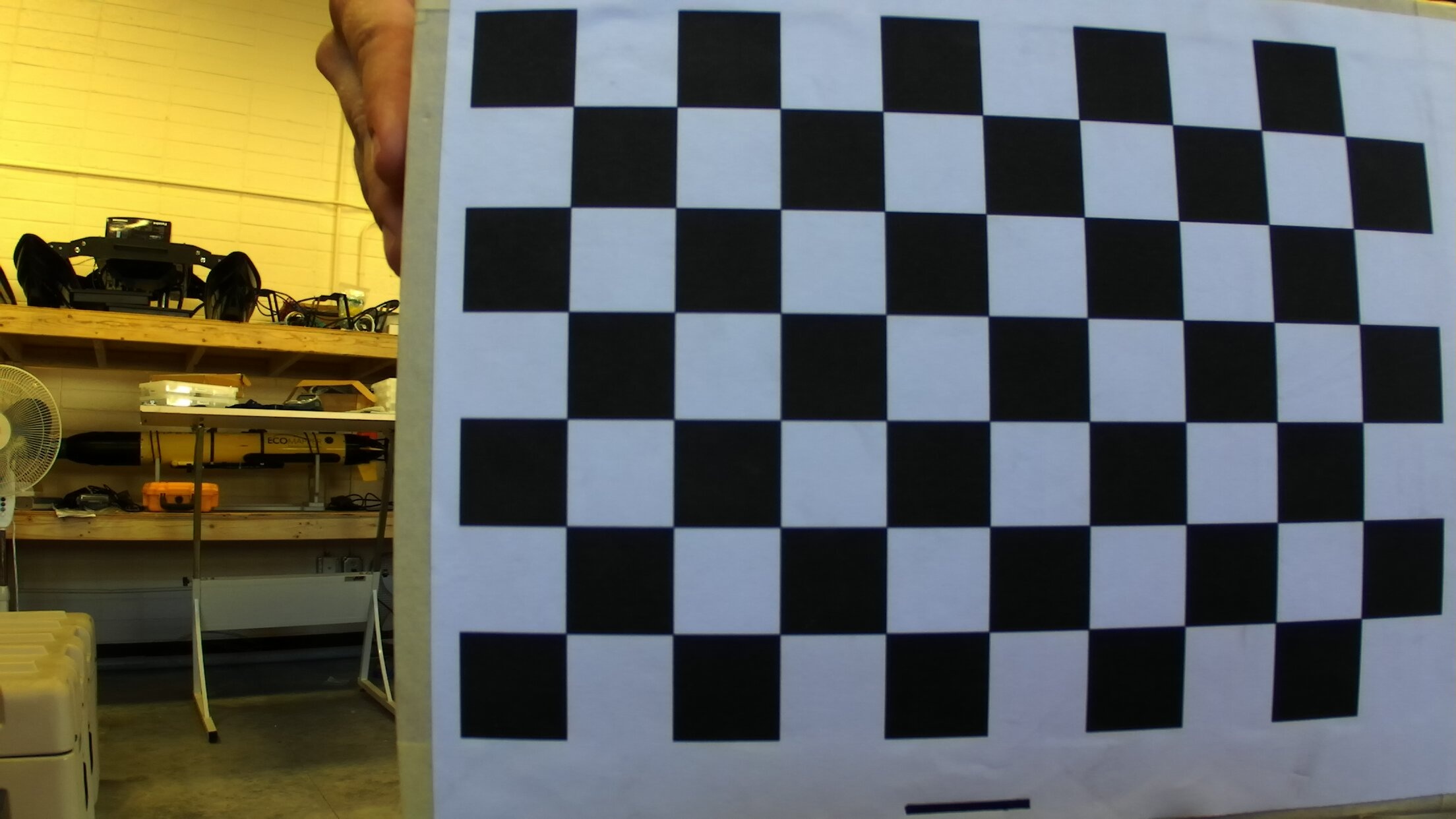

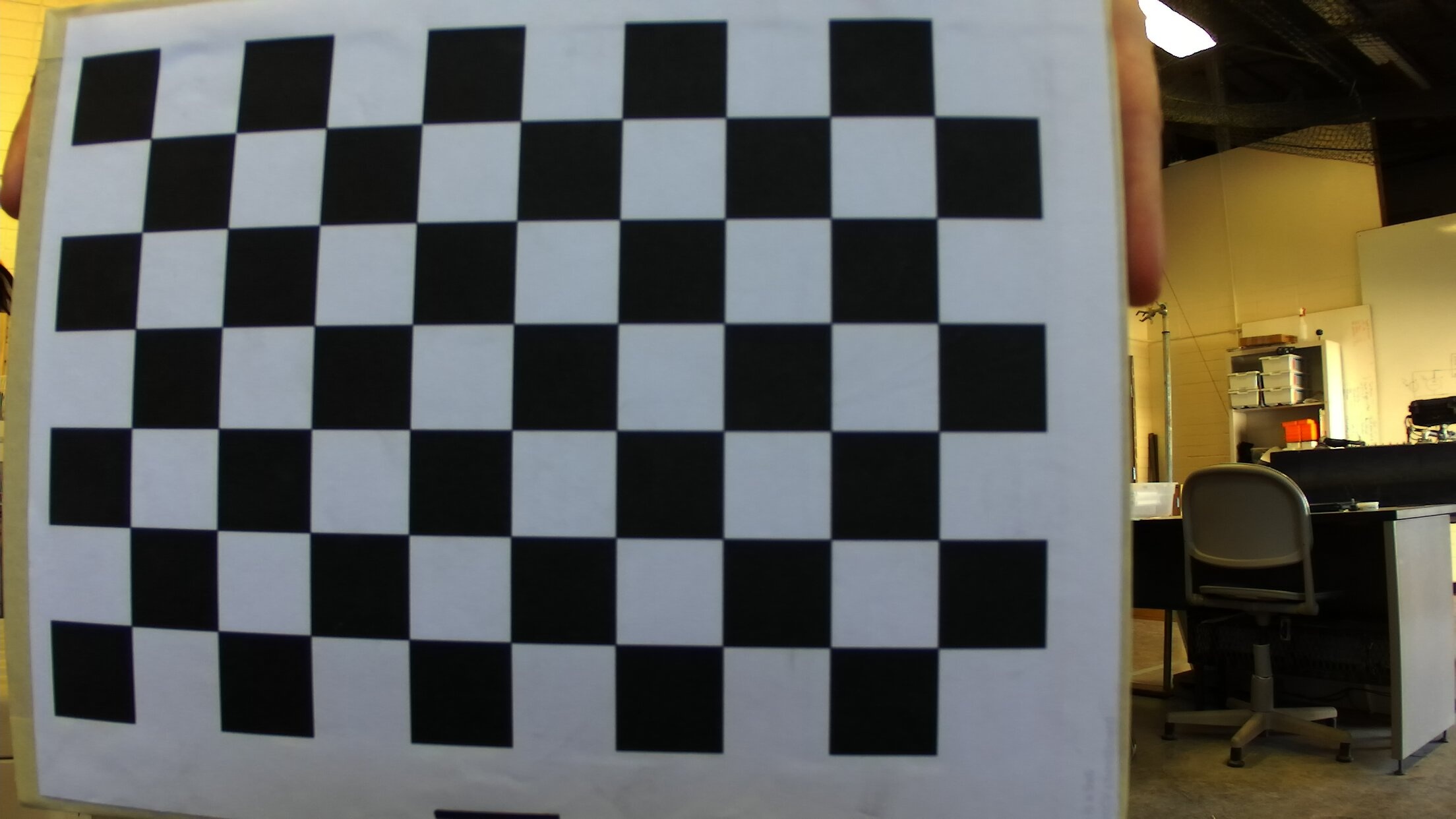

The resulting images look like this:

FIG 2. - Left image from Zed camera.

FIG 3. - Right image from Zed Camera.
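The split above can also be derived from the frame's own width rather than hard-coded pixel values. The following is a minimal sketch, assuming the camera delivers both views side by side in one frame of even width; `split_side_by_side` is a hypothetical helper name, and the synthetic frame stands in for a real capture. Note that NumPy slices are half-open, so a right half that starts at column 1281 would skip column 1280.

```python
import numpy as np

def split_side_by_side(frame):
    """Split a side-by-side stereo frame into (left, right) halves."""
    height, width = frame.shape[:2]
    half = width // 2
    left = frame[:, :half]            # columns 0 .. half-1
    right = frame[:, half:2 * half]   # columns half .. 2*half-1
    return left, right

# Synthetic stand-in for a captured frame (assumed 2560x720, 3 channels).
frame = np.zeros((720, 2560, 3), dtype=np.uint8)
frame[:, 1280:] = 255  # paint the right half white so the split is visible

left, right = split_side_by_side(frame)
print(left.shape, right.shape)
```

Both halves come out with the same shape, which matters later because calibration and remapping expect a consistent image size per camera.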

My code is adapted from this source. It runs a calibration and rectification of a camera with a live video feed. I tested it before I made any changes and it worked, albeit with some odd results (it only partially rectified a section of the image).

    #!/usr/bin/python
    import cv, cv2, time, sys
    import numpy as np

    #n_boards=0 #no of boards
    #board_w=int(sys.argv[1]) # number of horizontal corners
    #board_h=int(sys.argv[2]) # number of vertical corners
    #n_boards=int(sys.argv[3])
    #board_n=board_w*board_h # no of total corners
    #board_sz=(board_w,board_h) #size of board

    n_boards=0 #no of boards
    board_w=(9) # number of horizontal corners
    board_h=(6) # number of vertical corners
    n_boards=1
    board_n=board_w*board_h # no of total corners
    board_sz=(board_w,board_h) #size of board

    # creation of memory storages
    # Left
    image_pointsL=cv.CreateMat(n_boards*board_n,2,cv.CV_32FC1)
    object_pointsL=cv.CreateMat(n_boards*board_n,3,cv.CV_32FC1)
    point_countsL=cv.CreateMat(n_boards,1,cv.CV_32SC1)
    intrinsic_matrixL=cv.CreateMat(3,3,cv.CV_32FC1)
    distortion_coefficient_L=cv.CreateMat(5,1,cv.CV_32FC1)
    # Right
    image_pointsR=cv.CreateMat(n_boards*board_n,2,cv.CV_32FC1)
    object_pointsR=cv.CreateMat(n_boards*board_n,3,cv.CV_32FC1)
    point_countsR=cv.CreateMat(n_boards,1,cv.CV_32SC1)
    intrinsic_matrixR=cv.CreateMat(3,3,cv.CV_32FC1)
    distortion_coefficient_R=cv.CreateMat(5,1,cv.CV_32FC1)

    # capture frames of specified properties and modification of matrix values
    i=0
    y=0
    z=0 # to print number of frames
    successes=0

    # Capture video from camera
    capture = cv2.VideoCapture(1) # 1 references Zed camera, change as necessary

    # partition video
    while(successes<n_boards):
        found=0
        ret, frame = capture.read()
        capL = frame[0:1080, 0:1280] # left camera
        capR = frame[0:1080, 1281:2560] # right camera
        imageL = cv2.cv.iplimage(capL)
        imageR = cv2.cv.iplimage(capR)

        # Left
        gray_imageL=cv.CreateImage(cv.GetSize(imageL),8,1)
        cv.CvtColor(imageL,gray_imageL,cv.CV_BGR2GRAY)
        # Right
        gray_imageR=cv.CreateImage(cv.GetSize(imageR),8,1)
        cv.CvtColor(imageR,gray_imageR,cv.CV_BGR2GRAY)

        # Left
        (foundL,cornersL)=cv.FindChessboardCorners(gray_imageL,board_sz,cv.CV_CALIB_CB_ADAPTIVE_THRESH| cv.CV_CALIB_CB_FILTER_QUADS)
        cornersL=cv.FindCornerSubPix(gray_imageL,cornersL,(11,11),(-1,-1),(cv.CV_TERMCRIT_EPS+cv.CV_TERMCRIT_ITER,30,0.1))
        # Right
        (foundR,cornersR)=cv.FindChessboardCorners(gray_imageR,board_sz,cv.CV_CALIB_CB_ADAPTIVE_THRESH| cv.CV_CALIB_CB_FILTER_QUADS)
        cornersR=cv.FindCornerSubPix(gray_imageR,cornersR,(11,11),(-1,-1),(cv.CV_TERMCRIT_EPS+cv.CV_TERMCRIT_ITER,30,0.1))

        # if got a good image,draw chess board
        # Left
        if foundL==1:
            print "Left: found frame number {0}".format(y+1)
            cv.DrawChessboardCorners(imageL,board_sz,cornersL,1)
            corner_countL=len(cornersL)
            y=y+1
        # Right
        if foundR==1:
            print "Right: found frame number {0}".format(z+1)
            cv.DrawChessboardCorners(imageR,board_sz,cornersR,1)
            corner_countR=len(cornersR)
            z=z+1

        # if got a good image, add to matrix
        if len(cornersL)==board_n:
            step=successes*board_n
            k=step
            for j in range(board_n):
                cv.Set2D(image_pointsL,k,0,corners[j][0])
                cv.Set2D(image_pointsL,k,1,corners[j][1])
                cv.Set2D(object_pointsL,k,0,float(j)/float(board_w))
                cv.Set2D(object_pointsL,k,1,float(j)%float(board_w))
                cv.Set2D(object_pointsL,k,2,0.0)
                k=k+1
            cv.Set2D(point_countsL,successes,0,board_n)
        if len(cornersR)==board_n:
            step=successes*board_n
            g=step
            for j in range(board_n):
                cv.Set2D(image_pointsR,g,0,corners[j][0])
                cv.Set2D(image_pointsR,g,1,corners[j][1])
                cv.Set2D(object_pointsR,g,0,float(j)/float(board_w))
                cv.Set2D(object_pointsR,g,1,float(j)%float(board_w))
                cv.Set2D(object_pointsR,g,2,0.0)
                g=g+1
            cv.Set2D(point_counts,successes,0,board_n)
            successes=successes+1
            time.sleep(2)
            print "-------------------------------------------------"
            print "\n"

        cv.ShowImage("Test Frame Left",imageL)
        cv.ShowImage("Test Frame Right",imageR)
        cv.WaitKey(33)

    print "checking is fine ,all matrices are created"
    cv.DestroyWindow("Test Frame Left")
    cv.DestroyWindow("Test Frame Right")

    # now assigning new matrices according to view_count
    # Left
    object_points2_L=cv.CreateMat(successes*board_n,3,cv.CV_32FC1)
    image_points2_L=cv.CreateMat(successes*board_n,2,cv.CV_32FC1)
    point_counts2_L=cv.CreateMat(successes,1,cv.CV_32SC1)
    # Right
    object_points2_R=cv.CreateMat(successes*board_n,3,cv.CV_32FC1)
    image_points2_R=cv.CreateMat(successes*board_n,2,cv.CV_32FC1)
    point_counts2_R=cv.CreateMat(successes,1,cv.CV_32SC1)

    #transfer points to matrices
    # Left
    for i in range(successes*board_n):
        cv.Set2D(image_points2_L,i,0,cv.Get2D(image_pointsL,i,0))
        cv.Set2D(image_points2_L,i,1,cv.Get2D(image_pointsL,i,1))
        cv.Set2D(object_points2_L,i,0,cv.Get2D(object_pointsL,i,0))
        cv.Set2D(object_points2_L,i,1,cv.Get2D(object_pointsL,i,1))
        cv.Set2D(object_points2_L,i,2,cv.Get2D(object_pointsL,i,2))
    # Right
    for i in range(successes*board_n):
        cv.Set2D(image_points2_R,i,0,cv.Get2D(image_pointsR,i,0))
        cv.Set2D(image_points2_R,i,1,cv.Get2D(image_pointsR,i,1))
        cv.Set2D(object_points2_R,i,0,cv.Get2D(object_pointsR,i,0))
        cv.Set2D(object_points2_R,i,1,cv.Get2D(object_pointsR,i,1))
        cv.Set2D(object_points2_R,i,2,cv.Get2D(object_pointsR,i,2))

    # Left
    for i in range(successes):
        cv.Set2D(point_counts2_L,i,0,cv.Get2D(point_countsL,i,0))
    # Right
    for i in range(successes):
        cv.Set2D(point_counts2_R,i,0,cv.Get2D(point_countsR,i,0))

    # Left
    cv.Set2D(intrinsic_matrixL,0,0,1.0)
    cv.Set2D(intrinsic_matrixL,1,1,1.0)
    # Right
    cv.Set2D(intrinsic_matrixR,0,0,1.0)
    cv.Set2D(intrinsic_matrixR,1,1,1.0)

    # Left
    rcv_L = cv.CreateMat(n_boards, 3, cv.CV_64FC1)
    tcv_L = cv.CreateMat(n_boards, 3, cv.CV_64FC1)
    # Right
    rcv_R = cv.CreateMat(n_boards, 3, cv.CV_64FC1)
    tcv_R = cv.CreateMat(n_boards, 3, cv.CV_64FC1)

    print "checking camera calibration............."

    # camera calibration
    # Left
    cv.CalibrateCamera2(object_points2_L,image_points2_L,point_counts2_L,cv.GetSize(imageL),intrinsic_matrixL,distortion_coefficient_L,rcv_L,tcv_L,0)
    # Right
    cv.CalibrateCamera2(object_points2_R,image_points2_R,point_counts2_R,cv.GetSize(imageR),intrinsic_matrixR,distortion_coefficient_R,rcv_R,tcv_R,0)

    print " checking camera calibration.........................OK "

    # storing results in xml files
    cv.Save("Intrinsics_LEFT.xml",intrinsic_matrixL)
    cv.Save("Distortion_LEFT.xml",distortion_coefficient_L)
    cv.Save("Intrinsics_RIGHT.xml",intrinsic_matrixR)
    cv.Save("Distortion_RIGHT.xml",distortion_coefficient_R)

    # Loading from xml files
    intrinsic = cv.Load("Intrinsics_LEFT.xml")
    distortion = cv.Load("Distortion_LEFT.xml")
    intrinsic = cv.Load("Intrinsics_RIGHT.xml")
    distortion = cv.Load("Distortion_RIGHT.xml")

    print " loaded all distortion parameters"

    mapx = cv.CreateImage( cv.GetSize(image), cv.IPL_DEPTH_32F, 1 );
    mapy = cv.CreateImage( cv.GetSize(image), cv.IPL_DEPTH_32F, 1 );
    cv.InitUndistortMap(intrinsic,distortion,mapx,mapy)
    cv.NamedWindow( "Undistort" )

    print "all mapping completed"
    print "Now relax for some time"
    time.sleep(8)
    print "now get ready, camera is switching on"

    while(1):
        # Left
        imageL=cv.QueryFrame(captureL)
        s = cv.CloneImage(imageL);
        cv.ShowImage( "Left Camera Calibration", imageL)
        cv.Remap( s, image, mapx, mapy )
        cv.ShowImage("Undistorted Left", imageL)

        # Right
        imageR=cv.QueryFrame(captureR)
        t = cv.CloneImage(imageR);
        cv.ShowImage( "Right Camera Calibration", imageR)
        cv.Remap( t, imageR, mapx, mapy )
        cv.ShowImage("Undistorted Right", imageR)

        c = cv.WaitKey(33)
        if(c == 1048688): # enter 'p' key to pause for some time
            cv.WaitKey(2000)
        elif c==1048603: # enter esc key to exit
            break

    print "everything is fine"
    cv2.destroyAllWindows()
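As an aside on the object-point bookkeeping in the script: `float(j)/float(board_w)` produces fractional values (for example, `j=1` gives roughly 0.11), where an integer row index was presumably intended. A minimal NumPy sketch of the usual chessboard grid, assuming corners are ordered row by row and the board lies on the Z=0 plane, might look like this. `chessboard_object_points` and `square_size` are hypothetical names introduced for illustration.

```python
import numpy as np

def chessboard_object_points(board_w, board_h, square_size=1.0):
    """Return a (board_w*board_h, 3) float32 array of corner positions on Z=0."""
    objp = np.zeros((board_w * board_h, 3), np.float32)
    # Corner j sits at column j % board_w and row j // board_w.
    cols, rows = np.meshgrid(np.arange(board_w), np.arange(board_h))
    objp[:, 0] = cols.ravel() * square_size  # x: position along a row
    objp[:, 1] = rows.ravel() * square_size  # y: which row
    return objp

objp = chessboard_object_points(9, 6)
print(objp.shape)
print(objp[10])
```

Whether the column or the row index goes into the first coordinate is a convention; what matters is that it matches the order in which the detector returns the corners and that the same grid is used for both cameras.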

Currently it's returning the error:

    user@users_computer:~/Documents/Python_docs$ python cam_calibrate_stereo.py
    Segmentation fault (core dumped)

General research tells me that "segmentation fault (core dumped)" is an error often associated with infinite loops. Is there an obvious infinite loop that I'm not noticing? The original author wrote the script using 'cv.arg' commands, so I've begun changing them to 'cv2.arg' commands only as far as was necessary (the last change was at line 48/49, "imageL = cv2.cv.iplimage(capL)"). To resolve this error, do I need to completely rewrite the script with the updated OpenCV ('cv2.arg') commands?

As an aside, I already have all the intrinsics and extrinsics for each camera (found using a MATLAB applet). Should I scrap this script, define the necessary variables and apply the calibration that way?

Thanks in advance for the help!