Getting better background subtraction

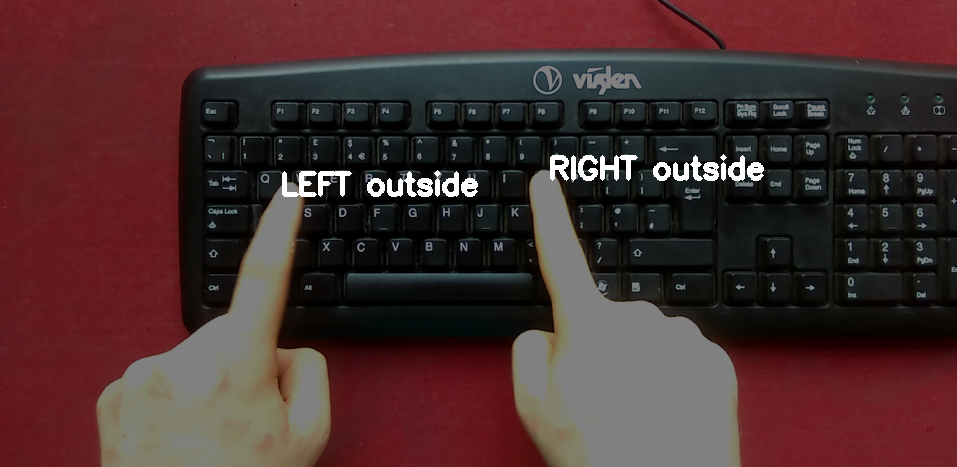

I am trying to generate a mask of hands typing at a keyboard by analysing every frame of an incoming video. Here is an example of the setup (with some debug info on top):

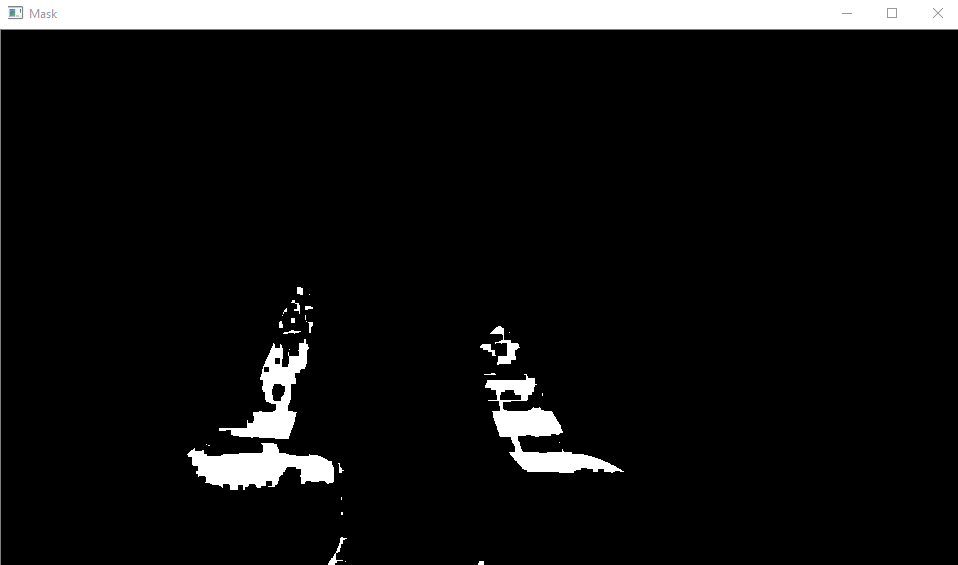

The mask I currently get looks like this, after one round of erosion to suppress some difference noise. (Note that the two images are not the same frame.)

My subtraction technique is very simple. I simply have an image of the keyboard with no hands in front of it, use it as the background template, and find the difference with every new frame.

As you can see, it is not great, and I would love any advice on getting this to be a near-perfect mask of the entire hand. I have tried many different techniques, such as:

- Switching colour space to HSV (works better than BGR)

- Blurring the images to be subtracted

- Using the MOG2 background subtractor

- MorphologyEx to dilate, open, and close the mask

And they all had their own problems.

Blurring caused "noise" to clump together, producing bigger masses of difference. (Yes, I did blur both input images.)

MOG2 was really good except for one problem which I could not figure out: I would like to tell the built-in background subtractor never to update its background model. However it chooses to subtract the background and generate a mask, the result was very impressive when I turned the learning rate down. So if anyone knows how I can tell MOG2 "use this image as the background model", that would be helpful.

MorphologyEx had a nasty habit of greatly enhancing noise no matter what I did.

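Here is a small snippet of how I am calculating the difference:

    // Per-channel thresholds applied to the HSV difference image.
    // sat_thresh is 0, so the saturation test always passes.
    float hue_thresh = 20.0f, sat_thresh = 0.f, val_thresh = 20.f;

    for (int j = 0; j < diffImage.rows; ++j)
    {
        for (int i = 0; i < diffImage.cols; ++i)
        {
            const cv::Vec3b pix = diffImage.at<cv::Vec3b>(j, i);

            // Mark the pixel as foreground only if every channel differs enough.
            if (hue_thresh <= pix[0] && sat_thresh <= pix[1] && val_thresh <= pix[2])
            {
                foregroundMask.at<unsigned char>(j, i) = 255;
            }
        }
    }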
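One detail the thresholding above may not account for is that hue is circular. In OpenCV's 8-bit HSV, hue runs from 0 to 179, so hues of 2 and 178 are only 4 apart even though a plain absolute difference gives 176. Assuming `diffImage` was produced by something like `cv::absdiff` on two HSV images (the post does not say), the sketch below shows the same three-threshold test with a wraparound-aware hue distance. It is written in plain C++ without OpenCV so it stands alone; `Hsv`, `hueDistance`, and `hsvDifferenceMask` are hypothetical names.

```cpp
#include <algorithm>
#include <array>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// One HSV pixel in OpenCV's 8-bit layout: H in [0, 179], S and V in [0, 255].
using Hsv = std::array<std::uint8_t, 3>;

// Shortest distance between two hues on the 180-step hue circle.
inline int hueDistance(int a, int b) {
    const int d = std::abs(a - b);
    return std::min(d, 180 - d);
}

// Returns 255 where the frame differs from the background by at least the
// given thresholds in every channel (same AND logic as the original loop),
// and 0 elsewhere. Both inputs must have the same number of pixels.
std::vector<std::uint8_t> hsvDifferenceMask(const std::vector<Hsv>& background,
                                            const std::vector<Hsv>& frame,
                                            int hueThresh, int satThresh,
                                            int valThresh) {
    std::vector<std::uint8_t> mask(frame.size(), 0);
    for (std::size_t i = 0; i < frame.size(); ++i) {
        const bool hueDiffers = hueDistance(background[i][0], frame[i][0]) >= hueThresh;
        const bool satDiffers = std::abs(background[i][1] - frame[i][1]) >= satThresh;
        const bool valDiffers = std::abs(background[i][2] - frame[i][2]) >= valThresh;
        if (hueDiffers && satDiffers && valDiffers) {
            mask[i] = 255;
        }
    }
    return mask;
}
```

Calling `hsvDifferenceMask(bg, frame, 20, 0, 20)` would mirror the thresholds used in the post.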

I understand it is not elegant.

I am hoping someone can suggest an approach that near-perfectly subtracts the hands from the background. It's greatly frustrating because, to me as a human being, the hands are white and the keyboard and surface are dark colours, yet the differences in pixel values are so small. I suppose this is the true problem with computer vision.

Some suggestions