Trying to verify Kinect XYZ accuracy with chessboard localization

Hi

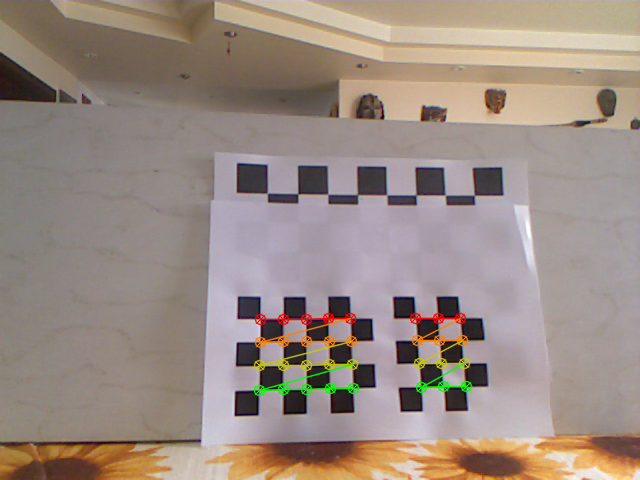

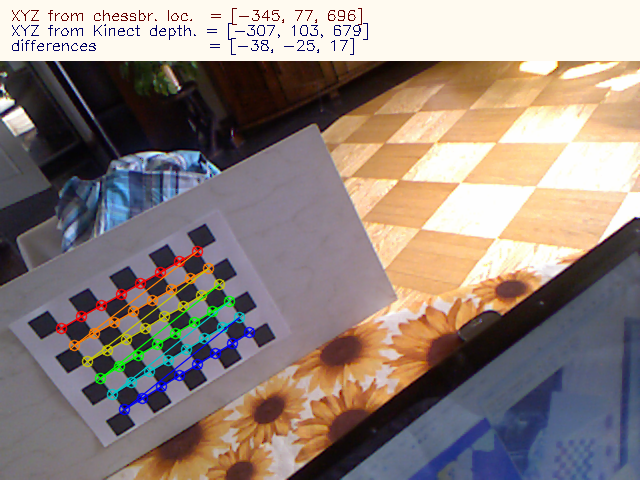

I am trying to verify the accuracy of Kinect depth estimation. I am using OpenNI to capture Kinect data. Both images (RGB and depth) are pixel aligned. To get the true XYZ coordinates of a pixel, I use a chessboard and localize it in the standard way. The RGB camera was calibrated beforehand. I believe that after calibration I get quite precise chessboard localization results: I printed 2 small chessboards on a sheet of paper, localized them and calculated the distance between their origins. The errors were less than 1 mm. Here is the image:

These two chessboards are less than 140 mm apart (as measured with a ruler), and the Euclidean distance between their detected origins in 3D space comes out as 139.5 ± 0.5 mm. That seems absolutely correct.
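A minimal sketch of that distance check, assuming each board's pose is available as a translation vector in millimetres in the camera frame (for example the `tvec` returned by OpenCV's `solvePnP`). The function name and the sample vectors are made up for illustration and are not measurements from this setup:

```python
import math

def origin_distance(tvec_a, tvec_b):
    """Euclidean distance between two board origins given in the same camera frame."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(tvec_a, tvec_b)))

# Illustrative values only (mm), chosen so the result is easy to verify by hand.
board_a = (0.0, 0.0, 1000.0)
board_b = (30.0, 40.0, 1000.0)
print(origin_distance(board_a, board_b))  # 50.0
```

Because both origins are expressed in the same camera frame, no rotation is needed; only the translation vectors matter.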

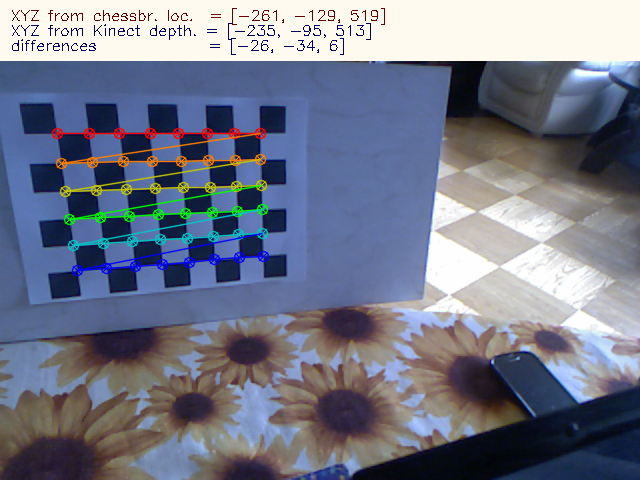

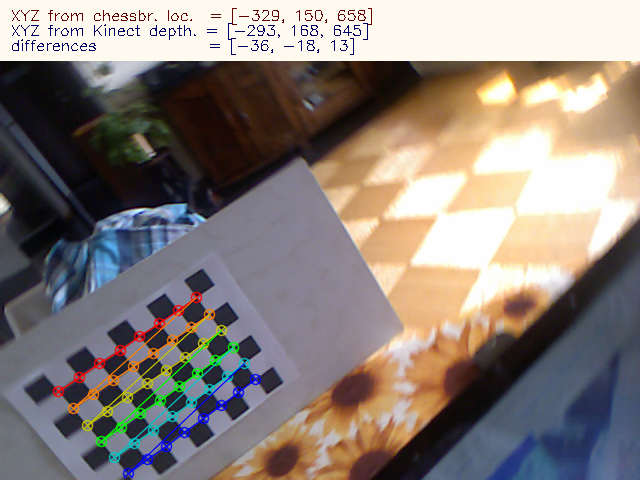

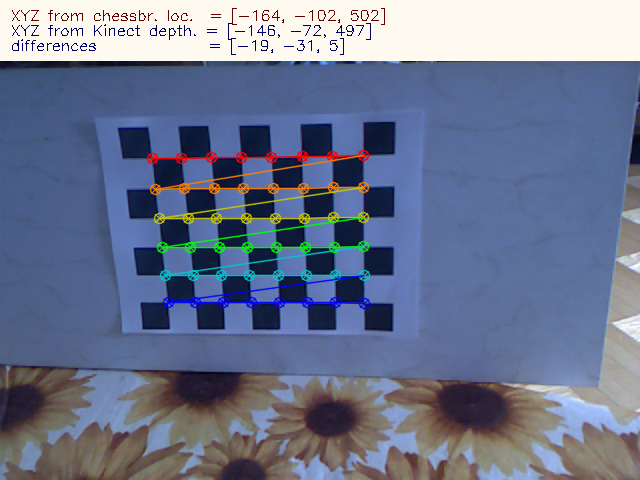

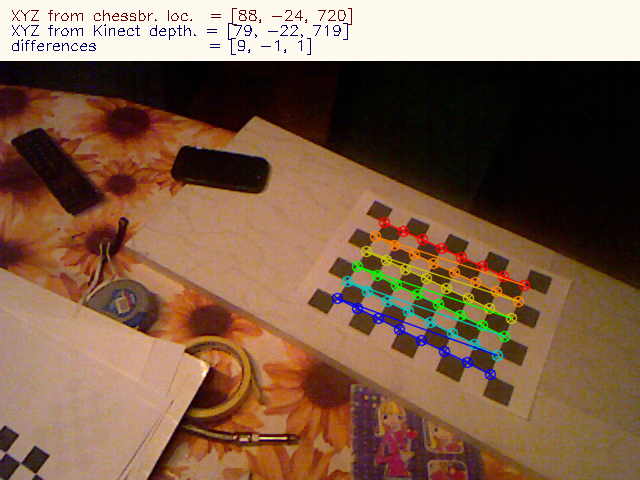

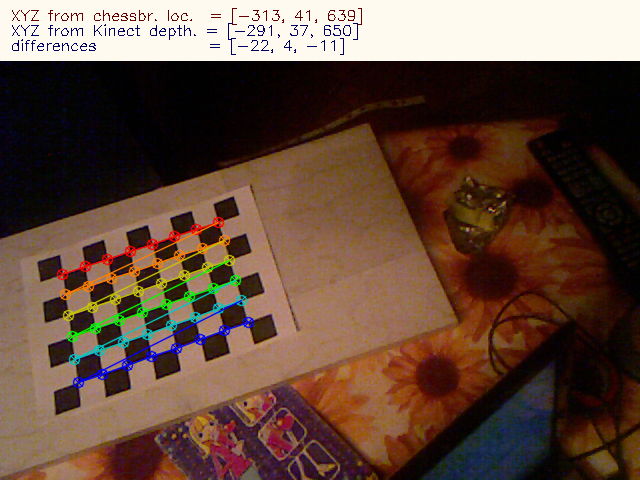

Now, since the RGB and depth images are aligned and I know the pixel coordinates of my chessboard origin, I can get the depth or the full XYZ coordinates of this point. The results are, unfortunately, slightly inconsistent:

From chessboard localization I get similar but clearly different results:

(I inverted the depth-based Y coordinate for consistency with the chessboard measurement.) As you can see, the results are slightly off, but the differences are not constant.
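For reference, the depth-based XYZ computation can be sketched with a plain pinhole model. This is only a sketch under assumptions: the depth value is taken to be the Z distance along the optical axis in millimetres, the default intrinsics are the focal length (525) and principal point (319.5, 239.5) mentioned in the EDIT, and the function name and `flip_y` parameter are hypothetical:

```python
def depth_pixel_to_xyz(u, v, depth_mm, fx=525.0, fy=525.0,
                       cx=319.5, cy=239.5, flip_y=True):
    """Back-project pixel (u, v) with depth Z (mm) to camera-frame XYZ (mm)."""
    x = (u - cx) * depth_mm / fx
    y = (v - cy) * depth_mm / fy
    if flip_y:
        # Image rows grow downwards; flip so Y matches the chessboard convention.
        y = -y
    return (x, y, depth_mm)

# A pixel 105 px right of and 105 px above the principal point, at 1 m depth.
print(depth_pixel_to_xyz(424.5, 134.5, 1000.0))  # (200.0, 200.0, 1000.0)
```

Any error in the assumed focal length or principal point scales with depth and with distance from the image centre, so it would show up as a non-constant offset.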

What am I doing wrong? I understand that since the images are pixel aligned, I do not need to transform between the coordinate frames of the RGB and IR cameras, as this is effectively done by the Kinect. Or is it possible that this transformation is good enough for pixel alignment but still lacks some precision for XYZ calculation?

If I could grab the IR image instead of the RGB, that problem would not exist as I would get the depth map and the chessboard with the same camera, but with OpenNI I don't think I can do it...or am I wrong?

Your comments will be appreciated.

EDIT:

Since both images are aligned, they must be rectified, thus undistorted. Right? The RGB image does look as if it was undistorted - and this is just as it comes from the sensor - I am not doing anything to it. Looking at the images, I would say they are even slightly over-undistorted. So, further undistorting will only worsen the measurements. Having realized that, I set all the distortion coefficients to zero and the principal point to 320x240 (perhaps it should be 319.5x239.5, but 0.5 pixel makes no real difference). The focal length is the only thing I kept from my calibration (525). Now, I reran my program and noticed that the differences I reported in the images dropped significantly! They were up to 5 mm in the center of the image and grew to about 10 mm as I moved towards the edges, and not around ±20 mm very close to the edges.
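A quick back-of-the-envelope check of the 0.5 pixel remark, using the pinhole relation (lateral shift = pixel offset × depth / focal length). The 1000 mm depth is an assumed working distance, not a value from the measurements, and the function name is hypothetical:

```python
def lateral_shift_mm(pixel_offset, depth_mm, focal_px=525.0):
    """Lateral XYZ error (mm) caused by a principal-point offset given in pixels."""
    return pixel_offset * depth_mm / focal_px

# 0.5 px offset at an assumed depth of 1 m, with the calibrated focal length of 525.
print(round(lateral_shift_mm(0.5, 1000.0), 2))  # 0.95
```

So half a pixel corresponds to roughly 1 mm per metre of depth, which is small next to differences of 5 to 10 mm but not strictly zero.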

My guess is that OpenNI (1.0, which I am using, I think I should move to 2.0, but this is due to compatibility issues with some other software I use ...

You can get all three streams (color, IR and depth) at the same time from Kinect devices using OpenNI.

This is the short code; be sure to check the result of each operation.

And concerning your question: you need to do the RGB-D registration to get pixel-aligned images. Check that it's correct by overlaying the color and depth images. The contours should match.

Based on my experience, the estimated point coordinates are quite accurate.

Thanks. To be exact, for capturing I use:

I think this approach does not allow for IR image capture. I will have a look at your suggestion.

The RGB image and the depth map are definitely aligned - I checked that.

Yep, I think you should use OpenNI directly for better control. It's worth the few extra lines of code.